Wenlong Cheng

MFAGAN: A Compression Framework for Memory-Efficient On-Device Super-Resolution GAN

Jul 27, 2021

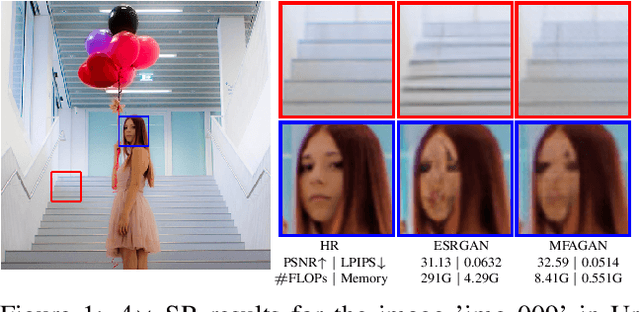

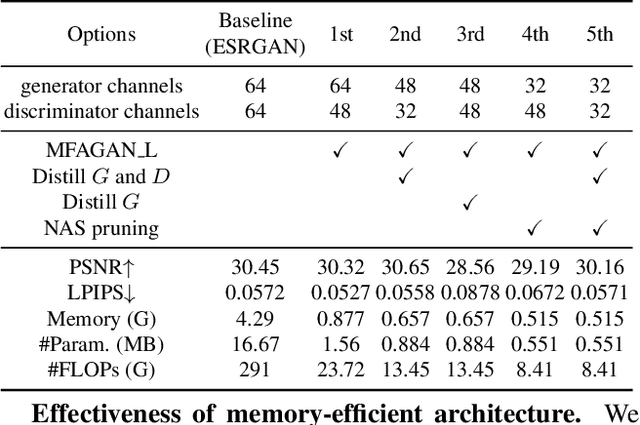

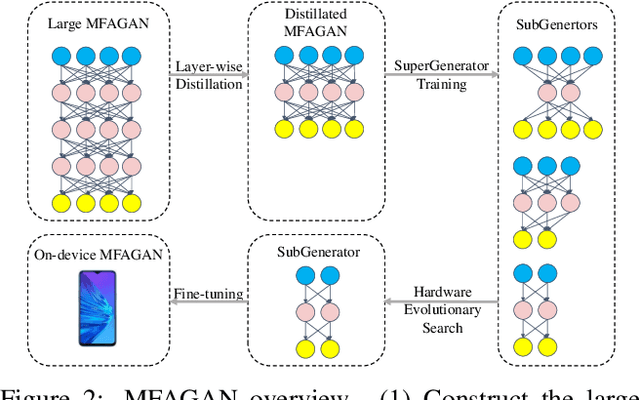

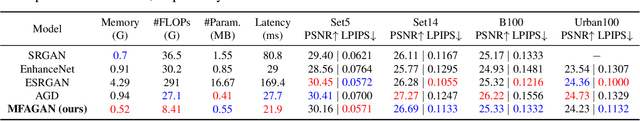

Abstract: Generative adversarial networks (GANs) have driven remarkable advances in single-image super-resolution (SR) by recovering photo-realistic images. However, the high memory consumption of GAN-based SR models (usually the generators) degrades performance and increases energy consumption, hindering their deployment on resource-constrained mobile devices. In this paper, we propose a novel compression framework, **M**ulti-scale **F**eature **A**ggregation Net based **GAN** (MFAGAN), for reducing the memory access cost of the generator. First, to overcome the memory explosion of dense connections, we utilize a memory-efficient multi-scale feature aggregation net as the generator. Second, for faster and more stable training, our method introduces the PatchGAN discriminator. Third, to balance the student discriminator and the compressed generator, we distill both the generator and the discriminator. Finally, we perform a hardware-aware neural architecture search (NAS) to find a specialized SubGenerator for the target mobile phone. Benefiting from these improvements, the proposed MFAGAN achieves up to 8.3× memory saving and 42.9× computation reduction compared with ESRGAN, with only minor visual quality degradation. Empirical studies also show a latency of about 70 milliseconds on the Qualcomm Snapdragon 865 chipset.
