Wen Gong

360-GS: Layout-guided Panoramic Gaussian Splatting For Indoor Roaming

Feb 01, 2024

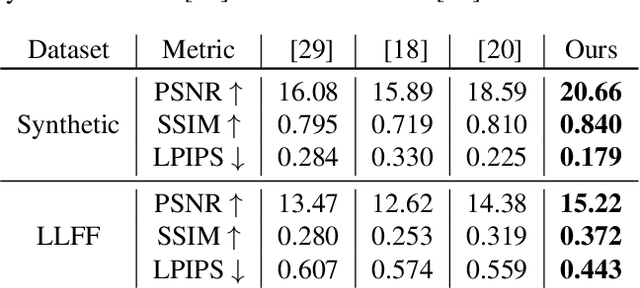

Abstract: 3D Gaussian Splatting (3D-GS) has recently attracted great attention with real-time and photo-realistic renderings. This technique typically takes perspective images as input and optimizes a set of 3D elliptical Gaussians by splatting them onto the image planes, resulting in 2D Gaussians. However, applying 3D-GS to panoramic inputs presents challenges in effectively modeling the projection onto the spherical surface of $360^{\circ}$ images using 2D Gaussians. In practical applications, input panoramas are often sparse, leading to unreliable initialization of 3D Gaussians and subsequent degradation of 3D-GS quality. In addition, due to the under-constrained geometry of texture-less planes (e.g., walls and floors), 3D-GS struggles to model these flat regions with elliptical Gaussians, resulting in significant floaters in novel views. To address these issues, we propose 360-GS, a novel $360^{\circ}$ Gaussian splatting for a limited set of panoramic inputs. Instead of splatting 3D Gaussians directly onto the spherical surface, 360-GS projects them onto the tangent plane of the unit sphere and then maps them to the spherical projections. This adaptation enables the representation of the projection using Gaussians. We guide the optimization of 360-GS by exploiting layout priors within panoramas, which are simple to obtain and contain strong structural information about the indoor scene. Our experimental results demonstrate that 360-GS allows panoramic rendering and outperforms state-of-the-art methods with fewer artifacts in novel view synthesis, thus providing immersive roaming in indoor scenarios.

Self-NeRF: A Self-Training Pipeline for Few-Shot Neural Radiance Fields

Mar 10, 2023

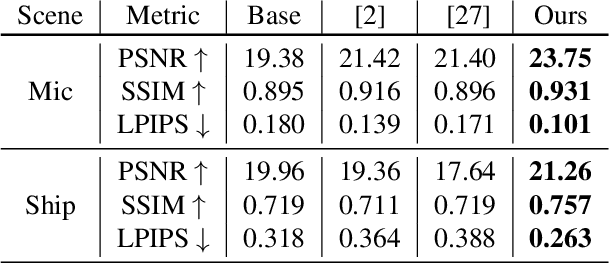

Abstract: Recently, Neural Radiance Fields (NeRF) have emerged as a potent method for synthesizing novel views from a dense set of images. Despite its impressive performance, NeRF requires numerous calibrated views, and its accuracy diminishes significantly in a few-shot setting. To address this challenge, we propose Self-NeRF, a self-evolved NeRF that iteratively refines the radiance fields from very few input views, without incorporating additional priors. Specifically, we train our model under the supervision of reference and unseen views simultaneously in an iterative procedure. In each iteration, we label unseen views with the predicted colors or warped pixels generated by the model from the preceding iteration. However, these expanded pseudo-views suffer from imprecise colors and warping artifacts, which degrade the performance of NeRF. To alleviate this issue, we construct an uncertainty-aware NeRF with specialized embeddings. Techniques such as cone entropy regularization are further used to leverage the pseudo-views in the most efficient manner. Through experiments under various settings, we verify that Self-NeRF is robust to input with uncertainty and surpasses existing methods when trained on limited training data.
