Weiguo Sheng

A Comprehensive Survey of 3D Dense Captioning: Localizing and Describing Objects in 3D Scenes

Mar 12, 2024

Abstract: Three-dimensional (3D) dense captioning is an emerging vision-language bridging task that aims to generate multiple detailed and accurate descriptions for 3D scenes. It presents significant potential and challenges due to its closer representation of the real world compared to 2D visual captioning, as well as complexities in data collection and processing of 3D point cloud sources. Despite the popularity and success of existing methods, there is a lack of comprehensive surveys summarizing the advancements in this field, which hinders its progress. In this paper, we provide a comprehensive review of 3D dense captioning, covering task definition, architecture classification, dataset analysis, evaluation metrics, and an in-depth discussion of prospects. Based on a synthesis of previous literature, we refine a standard pipeline that serves as a common paradigm for existing methods. We also introduce a clear taxonomy of existing models, summarize the technologies involved in different modules, and conduct a detailed experimental analysis. Instead of introducing the methods in chronological order, we categorize them into different classes to facilitate exploration and analysis of the differences and connections among existing techniques. We also provide a reading guideline to help readers with different backgrounds and purposes read efficiently. Furthermore, we propose a series of promising future directions for 3D dense captioning by identifying challenges and aligning them with the development of related tasks, offering valuable insights and inspiring future research in this field. Our aim is to provide a comprehensive understanding of 3D dense captioning, foster further investigations, and contribute to the development of novel applications in multimedia and related domains.

Feature Interaction based Neural Network for Click-Through Rate Prediction

Jun 07, 2020

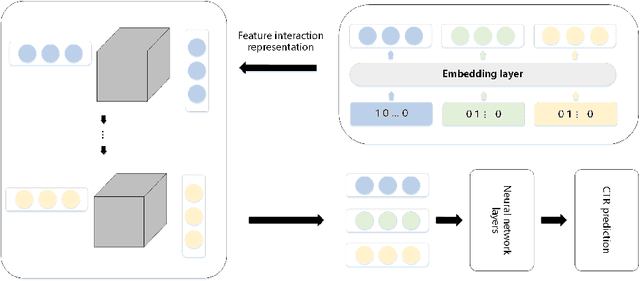

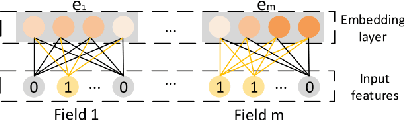

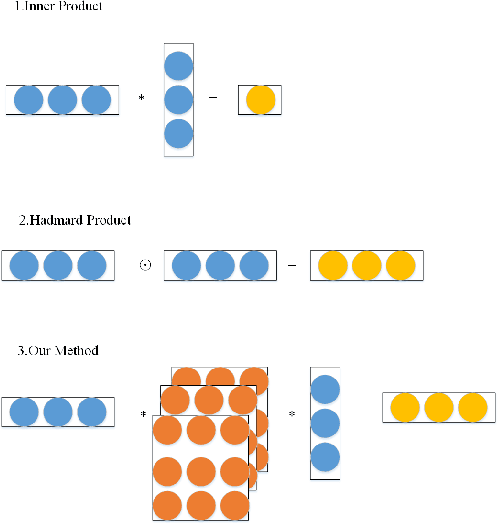

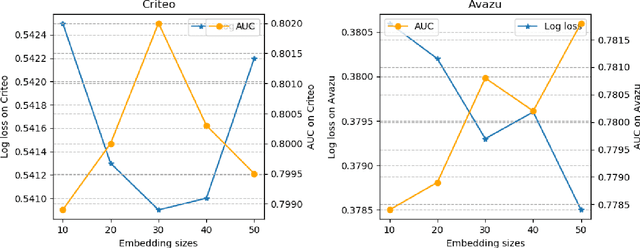

Abstract: Click-Through Rate (CTR) prediction is one of the most important and challenging tasks in computational advertising and recommendation systems. To build a machine learning system with these data, it is important to properly model the interactions among features. However, many current works calculate feature interactions in a simple way, such as with an inner product or an element-wise product. This paper aims to fully utilize the information between features and improve the performance of deep neural networks in the CTR prediction task. We propose a Feature Interaction based Neural Network (FINN), which models feature interactions via a 3-dimensional relation tensor. FINN provides representations of the feature interactions at the bottom layer and uses the non-linearity of the neural network to model higher-order feature interactions. We evaluate our models on CTR prediction tasks against classical baselines and show that our deep FINN model outperforms other state-of-the-art deep models such as PNN and DeepFM. The evaluation results demonstrate that feature interactions contain significant information for better CTR prediction. They also indicate that our models can effectively learn the feature interactions and achieve better performance on real-world datasets.
