Wei-Fan Hu

A discontinuity-capturing neural network with categorical embedding and its application to anisotropic elliptic interface problems

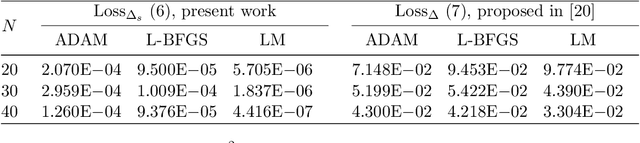

Mar 19, 2025

Abstract: In this paper, we propose a discontinuity-capturing shallow neural network with categorical embedding to represent piecewise smooth functions. The network comprises three hidden layers: a discontinuity-capturing layer, a categorical embedding layer, and a fully-connected layer. Under such a design, we show that a piecewise smooth function, even with a large number of pieces, can be approximated by a single neural network with high prediction accuracy. We then leverage the proposed network model to solve anisotropic elliptic interface problems. The network is trained by minimizing the mean squared error loss of the system. Our results show that, despite its simple and shallow structure, the proposed neural network model exhibits comparable efficiency and accuracy to traditional grid-based numerical methods.
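The abstract does not spell out how the categorical embedding layer is wired, so the following is only a minimal sketch of the general idea, under assumptions: a 1D domain split at hypothetical `breakpoints`, an integer piece label looked up in a learnable table `embedding`, and the looked-up vector concatenated with `x` before a single fully-connected `tanh` layer. All names and shapes here are illustrative, not the paper's.

```python
import math

def piece_index(x, breakpoints):
    """Categorical label: index of the piece containing x (hypothetical 1D setup)."""
    return sum(1 for bp in breakpoints if x >= bp)

def forward(x, breakpoints, embedding, W, b, c):
    """Evaluate a small network whose input is x plus the embedding of its piece label.

    embedding: one vector per piece (assumed learnable in a real model)
    W, b, c:   hidden weights, hidden biases, output weights
    """
    k = piece_index(x, breakpoints)
    features = [x] + list(embedding[k])          # concatenate coordinate and embedding
    hidden = [
        math.tanh(sum(w * f for w, f in zip(row, features)) + bi)
        for row, bi in zip(W, b)
    ]
    return sum(ci * hi for ci, hi in zip(c, hidden))

# Four pieces on [0, 1); each piece gets its own (here hand-picked) embedding value.
breakpoints = [0.25, 0.5, 0.75]
embedding = [[0.0], [1.0], [2.0], [3.0]]
W, b, c = [[0.0, 1.0]], [0.0], [1.0]             # the output depends only on the embedding
value = forward(0.6, breakpoints, embedding, W, b, c)
```

Because the piece label enters through a lookup table rather than through one extra network per piece, adding more pieces only adds rows to the table, which is one plausible reading of why a single network can cover many pieces.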

A cusp-capturing PINN for elliptic interface problems

Oct 16, 2022

Abstract: In this paper, we propose a cusp-capturing physics-informed neural network (PINN) to solve variable-coefficient elliptic interface problems whose solution is continuous but has discontinuous first derivatives on the interface. To find such a solution using neural network representation, we introduce a cusp-enforced level set function as an additional feature input to the network to retain the inherent solution properties, capturing the solution cusps (where the derivatives are discontinuous) sharply. In addition, the proposed neural network has the advantage of being mesh-free, so it can easily handle problems in irregular domains. We train the network using the physics-informed framework in which the loss function comprises the residual of the differential equation together with certain interface and boundary conditions. We conduct a series of numerical experiments to demonstrate the effectiveness of the cusp-capturing technique and the accuracy of the present network model. Numerical results show that, even with a one-hidden-layer (shallow) network with a moderate number of neurons ($40$ to $60$) and sufficient training data points, the present network model can achieve high prediction accuracy (relative $L^2$ errors on the order of $10^{-5}$ to $10^{-6}$), which outperforms several existing neural network models and traditional grid-based methods in the literature.
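To illustrate how an extra feature input can produce a continuous function with a derivative jump, here is a minimal 1D sketch. It assumes the cusp-enforced feature is the absolute value of a level set function, $|\phi(x)|$, which is one natural choice but is not stated in the abstract; the level set `phi`, the network sizes, and the hand-picked weights are all hypothetical.

```python
import math

def phi(x):
    """Hypothetical 1D level set: the interface sits at x = 0."""
    return x

def cusp_network(x, W, b, c):
    """One-hidden-layer network with inputs (x, |phi(x)|).

    |phi| is continuous but has a kink on the interface, so the output
    stays continuous while its derivative can jump there.
    """
    features = (x, abs(phi(x)))
    return sum(
        cj * math.tanh(wj[0] * features[0] + wj[1] * features[1] + bj)
        for wj, bj, cj in zip(W, b, c)
    )

def one_sided_slopes(f, x0, h=1e-6):
    """Left and right finite-difference slopes of f at x0."""
    left = (f(x0) - f(x0 - h)) / h
    right = (f(x0 + h) - f(x0)) / h
    return left, right

# Hand-picked weights: u(x) = tanh(x + |x|), i.e. 0 for x < 0 and tanh(2x) for x > 0.
W, b, c = [(1.0, 1.0)], [0.0], [1.0]
u = lambda x: cusp_network(x, W, b, c)
left_slope, right_slope = one_sided_slopes(u, 0.0)
```

With these weights the left slope at the interface is 0 and the right slope is close to 2, while `u` itself is continuous at `x = 0`.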

A hybrid neural-network and finite-difference method for solving Poisson equation with jump discontinuities on interfaces

Oct 11, 2022

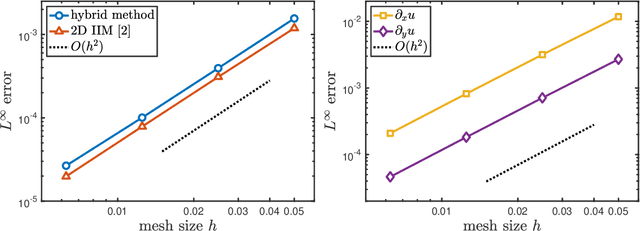

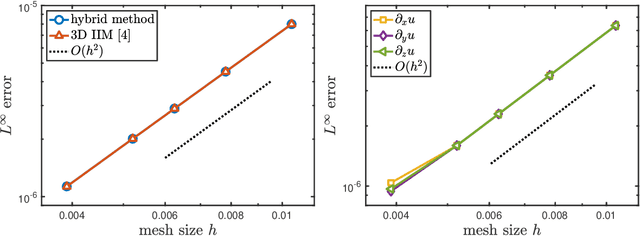

Abstract: In this work, a new hybrid neural-network and finite-difference method is developed for solving the Poisson equation in a regular domain with jump discontinuities on an embedded irregular interface. Since the solution has low regularity across the interface, when applying finite difference discretization to this problem, an additional treatment accounting for the jump discontinuities must be employed at grid points near the interface. Here, we aim to alleviate this extra effort to ease the implementation. The key idea is to decompose the solution into two parts: singular (non-smooth) and regular (smooth) parts. The neural network learning machinery incorporating given jump conditions finds the singular solution, while the standard finite difference method is used to obtain the regular solution with associated boundary conditions. Regardless of the interface geometry, these two tasks only require a supervised learning task of function approximation and a fast direct solver of the Poisson equation, making the hybrid method easy to implement and efficient. The two- and three-dimensional numerical results show that the present hybrid method preserves second-order accuracy for the solution and its derivatives, and it is comparable with the traditional immersed interface method in the literature.
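The singular/regular splitting can be sketched on a 1D toy problem. Everything below is an assumption made for illustration: the model problem is $u'' = 0$ on $(0,1)$ with $u(0)=u(1)=0$ and prescribed jumps $[u]=\alpha$, $[u']=\beta$ at $x=a$; the singular part is written in closed form instead of being learned by a network; and the regular part is obtained with a plain tridiagonal solve standing in for a fast Poisson solver.

```python
def singular_part(x, a, alpha, beta):
    """Closed-form stand-in for the learned singular part.

    It is nonzero only to the left of the interface and carries both jumps:
    [v] = alpha and [v'] = beta at x = a (jump = right value minus left value).
    """
    return -(alpha + beta * (x - a)) if x < a else 0.0

def solve_regular_part(n, left_bc, right_bc, rhs):
    """Standard second-order finite differences for w'' = rhs (Thomas algorithm)."""
    h = 1.0 / (n + 1)
    d = [r * h * h for r in rhs]
    d[0] -= left_bc
    d[-1] -= right_bc
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = 1.0 / -2.0, d[0] / -2.0
    for i in range(1, n):
        m = -2.0 - cp[i - 1]
        cp[i] = 1.0 / m
        dp[i] = (d[i] - dp[i - 1]) / m
    w = [0.0] * n
    w[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        w[i] = dp[i] - cp[i] * w[i + 1]
    return w

def hybrid_solve(n, a, alpha, beta):
    """u = w + v: the smooth w needs no special stencil near the interface."""
    h = 1.0 / (n + 1)
    xs = [(i + 1) * h for i in range(n)]
    left_bc = 0.0 - singular_part(0.0, a, alpha, beta)   # w = u - v on the boundary
    right_bc = 0.0 - singular_part(1.0, a, alpha, beta)
    w = solve_regular_part(n, left_bc, right_bc, [0.0] * n)
    u = [wi + singular_part(x, a, alpha, beta) for wi, x in zip(w, xs)]
    return xs, u

xs, u = hybrid_solve(n=9, a=0.55, alpha=1.0, beta=2.0)
```

The interface at `a = 0.55` falls between grid points, yet the finite-difference step never needs to know where it is; only the singular part does.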

A shallow physics-informed neural network for solving partial differential equations on surfaces

Mar 03, 2022

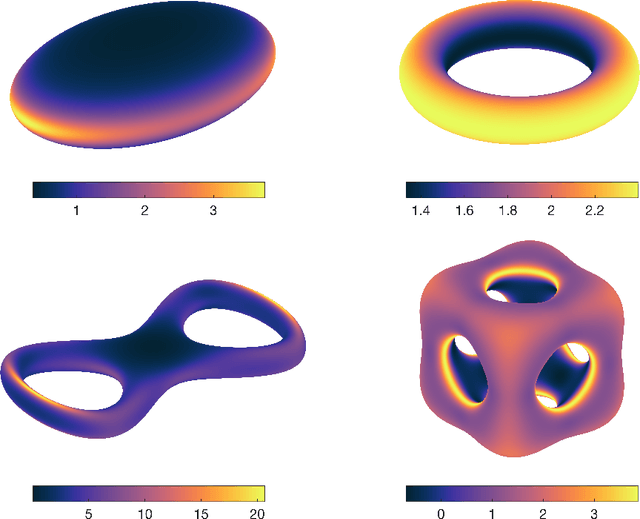

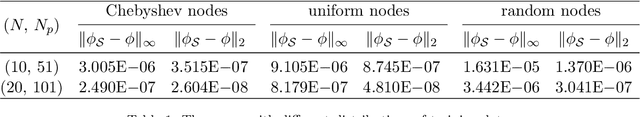

Abstract: In this paper, we introduce a mesh-free physics-informed neural network for solving partial differential equations on surfaces. Based on the idea of embedding techniques, we write the underlying surface differential equations using conventional Cartesian differential operators. With the aid of a level set function, the surface geometrical quantities, such as the normal and mean curvature of the surface, can be computed directly and used in our surface differential expressions. So instead of imposing the normal extension constraints used in the literature, we take the whole Cartesian differential expressions into account in our loss function. Meanwhile, we adopt a completely shallow (one hidden layer) network so the present model is easy to implement and train. We perform a series of numerical experiments on both stationary and time-dependent partial differential equations on complicated surface geometries. The results show that, with just a few hundred trainable parameters, our network model is able to achieve high predictive accuracy.
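As a small illustration of reading geometry off a level set function, the sketch below computes the unit normal $\mathbf{n} = \nabla\phi / |\nabla\phi|$ and the divergence $\nabla\cdot\mathbf{n}$ by central differences. The sphere level set and the helper names are assumptions chosen for the example; note also that $\nabla\cdot\mathbf{n}$ is the sum of the principal curvatures, and some texts define the mean curvature as half of that value.

```python
import math

def sphere_level_set(x):
    """Hypothetical level set whose zero contour is the unit sphere."""
    return x[0] ** 2 + x[1] ** 2 + x[2] ** 2 - 1.0

def gradient(f, x, h=1e-5):
    """Central-difference gradient of a scalar function f at point x."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2.0 * h))
    return g

def unit_normal(phi, x):
    """n = grad(phi) / |grad(phi)|."""
    g = gradient(phi, x)
    norm = math.sqrt(sum(v * v for v in g))
    return [v / norm for v in g]

def divergence_of_normal(phi, x, h=1e-4):
    """div(n), the sum of principal curvatures, by central differences."""
    total = 0.0
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        total += (unit_normal(phi, xp)[i] - unit_normal(phi, xm)[i]) / (2.0 * h)
    return total

point = [1.0, 0.0, 0.0]                       # a point on the unit sphere
normal = unit_normal(sphere_level_set, point)
curvature_sum = divergence_of_normal(sphere_level_set, point)
```

On the unit sphere the normal at `(1, 0, 0)` points along the first axis and the divergence is approximately 2, matching the analytic value $2/R$ with $R = 1$. In a network-based solver these derivatives would more likely come from automatic differentiation than from finite differences.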

A Shallow Ritz Method for elliptic problems with Singular Sources

Jul 26, 2021

Abstract: In this paper, a shallow Ritz-type neural network for solving elliptic problems with delta function singular sources on an interface is developed. There are three novel features in the present work; namely, (i) the delta function singularity is naturally removed, (ii) a level set function is introduced as a feature input, (iii) it is completely shallow, consisting of only one hidden layer. We first introduce the energy functional of the problem and then transform the contribution of singular sources to a regular surface integral along the interface. In such a way the delta function singularity can be naturally removed without the introduction of a discrete delta function that is commonly used in traditional regularization methods such as the well-known immersed boundary method. The original problem is then reformulated as a minimization problem. We propose a shallow Ritz-type neural network with one hidden layer to approximate the global minimizer of the energy functional. As a result, the network is trained by minimizing the loss function that is a discrete version of the energy. In addition, we include the level set function of the interface as a feature input and find that it significantly improves the training efficiency and accuracy. We perform a series of numerical tests to demonstrate the accuracy of the present network as well as its capability for problems in irregular domains and in higher dimensions.
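How a delta source turns into a regular interface term in the energy can be seen on a 1D toy analogue, which is an assumption made here for illustration and not the paper's test problem: for $-u'' = c\,\delta(x-a)$ on $(0,1)$ with $u(0)=u(1)=0$, the energy is $E[u] = \int_0^1 \tfrac12 (u')^2\,dx - c\,u(a)$. In 1D the interface is a single point, so the "surface integral" reduces to a point evaluation and no discrete delta function is needed.

```python
import math

def discrete_energy(u, n, a, c):
    """Discrete version of E[u] = int 0.5*u'^2 dx - c*u(a) on n uniform cells."""
    h = 1.0 / n
    gradient_term = 0.0
    for i in range(n):
        du = (u((i + 1) * h) - u(i * h)) / h     # cell-wise slope
        gradient_term += 0.5 * du * du * h
    return gradient_term - c * u(a)              # singular source as a point term

def exact_solution(x, a, c):
    """Piecewise-linear minimizer (the Green's function scaled by c)."""
    return c * x * (1.0 - a) if x <= a else c * a * (1.0 - x)

a, c, n = 0.5, 1.0, 100
u_exact = lambda x: exact_solution(x, a, c)
u_perturbed = lambda x: u_exact(x) + 0.1 * math.sin(math.pi * x)

energy_exact = discrete_energy(u_exact, n, a, c)
energy_perturbed = discrete_energy(u_perturbed, n, a, c)
```

The exact solution attains the energy $-\tfrac12 c^2 a(1-a) = -0.125$, and any admissible perturbation raises it. A Ritz-type network would use such a discrete energy as its loss, with `u` replaced by the network output.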

A Discontinuity Capturing Shallow Neural Network for Elliptic Interface Problems

Jun 10, 2021

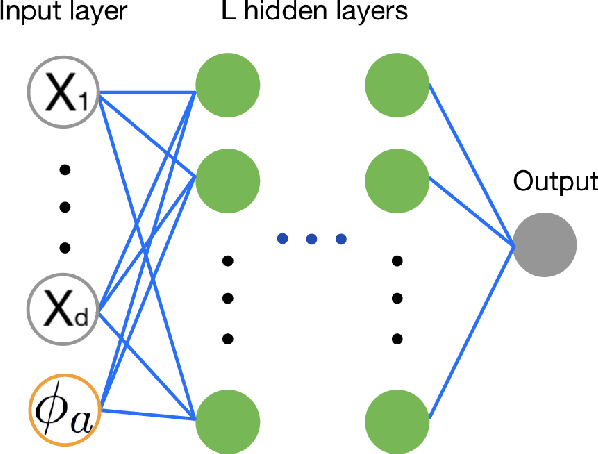

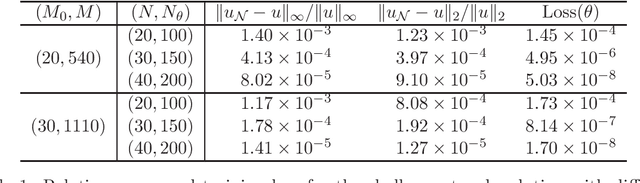

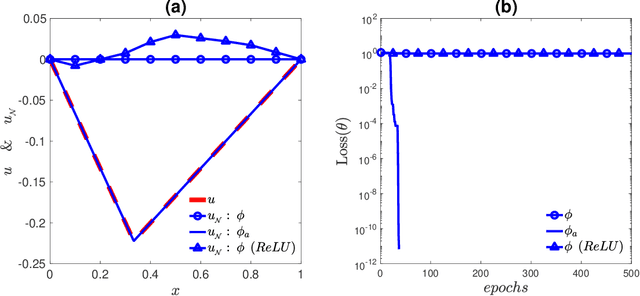

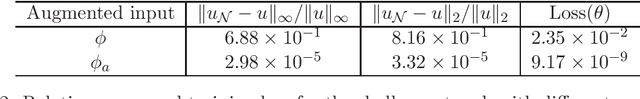

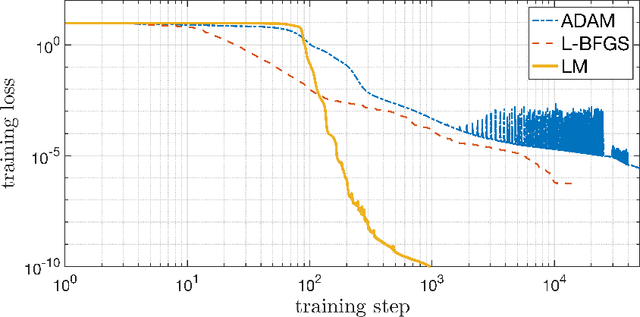

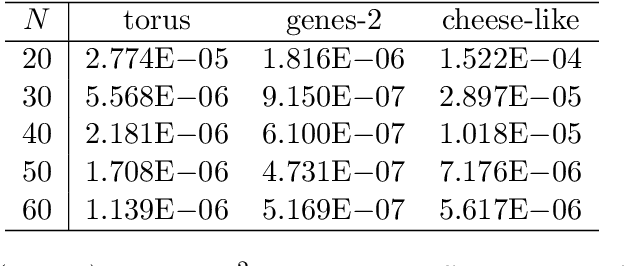

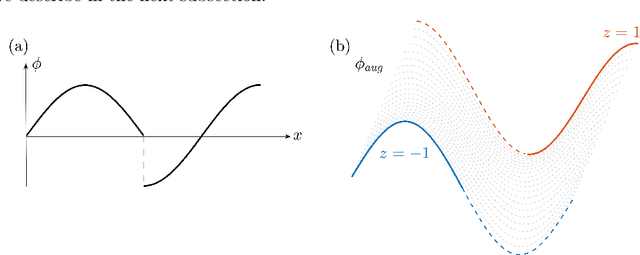

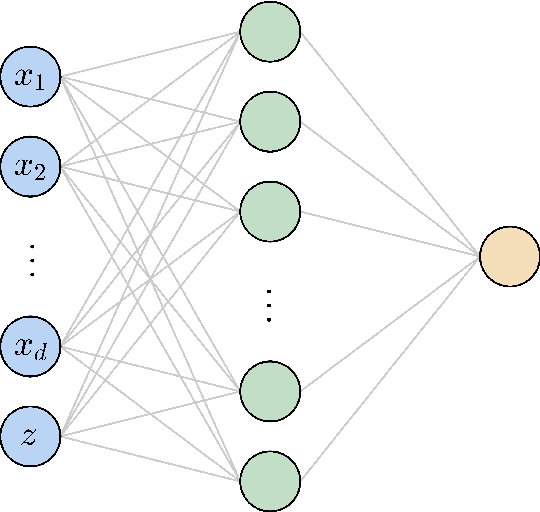

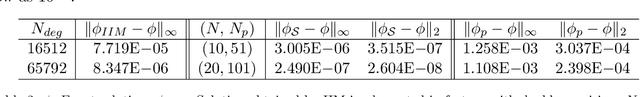

Abstract: In this paper, a new Discontinuity Capturing Shallow Neural Network (DCSNN) for approximating $d$-dimensional piecewise continuous functions and for solving elliptic interface problems is developed. There are three novel features in the present network; namely, (i) jump discontinuity is captured sharply, (ii) it is completely shallow, consisting of only one hidden layer, (iii) it is completely mesh-free for solving partial differential equations (PDEs). We first continuously extend the $d$-dimensional piecewise continuous function in $(d+1)$-dimensional space by augmenting one coordinate variable to label the pieces of the discontinuous function, and then construct a shallow neural network to express this new augmented function. Since only one hidden layer is employed, the number of training parameters (weights and biases) scales linearly with the dimension and the neurons used in the hidden layer. For solving elliptic interface equations, the network is trained by minimizing the mean squared error loss that consists of the residual of the governing equation, boundary condition, and the interface jump conditions. We perform a series of numerical tests to compare the accuracy and efficiency of the present network. Our DCSNN model is comparably efficient because only a moderate number of parameters need to be trained (a few hundred parameters are used throughout all numerical examples here), and the results show better accuracy (with fewer parameters) than other methods using piecewise deep neural networks in the literature. We also compare with the results obtained by the traditional grid-based immersed interface method (IIM), which is designed particularly for elliptic interface problems. Again, the present results show better accuracy than the ones obtained by IIM. We conclude by solving a six-dimensional problem to show the capability of the present network for high-dimensional applications.
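The augmentation idea can be sketched as follows. This is a minimal illustration under assumptions, not the authors' implementation: the label values $\pm 1$, the circular interface `level_set`, the `tanh` activation, and the absence of an output bias are all choices made for the example.

```python
import math

def level_set(x):
    """Hypothetical interface: a circle of radius 0.5 in 2D."""
    return x[0] ** 2 + x[1] ** 2 - 0.25

def piece_label(x):
    """Augmented coordinate z: -1 inside the interface, +1 outside (assumed values)."""
    return -1.0 if level_set(x) < 0.0 else 1.0

def dcsnn(x, W, b, c):
    """One hidden layer acting on the (d+1)-dimensional input (x, z).

    The network is smooth in (x, z); the jump in x-space comes only from
    z switching value across the interface.
    """
    xz = list(x) + [piece_label(x)]
    return sum(
        cj * math.tanh(sum(w * v for w, v in zip(wj, xz)) + bj)
        for wj, bj, cj in zip(W, b, c)
    )

def count_parameters(d, n_neurons):
    """Hidden weights N*(d+1) + hidden biases N + output weights N."""
    return n_neurons * (d + 1) + n_neurons + n_neurons

# Hand-picked weights so that the output is simply tanh(z).
W, b, c = [[0.0, 0.0, 1.0]], [0.0], [1.0]
inside = dcsnn((0.1, 0.0), W, b, c)
outside = dcsnn((0.9, 0.0), W, b, c)
```

Under this layout the parameter count is $N(d+3)$, linear in both the dimension $d$ and the number of neurons $N$; for example, `count_parameters(6, 20)` gives 180, consistent with the "few hundred parameters" scale mentioned in the abstract.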
