Wei-Chuen Yau

Integrated Neural Network and Machine Vision Approach For Leather Defect Classification

May 28, 2019

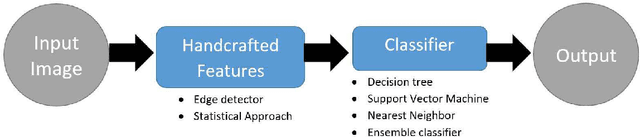

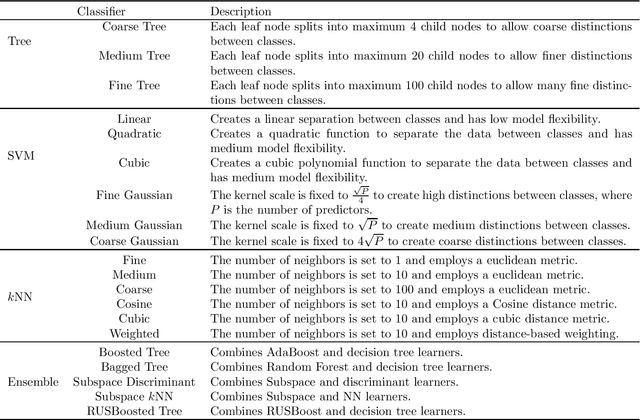

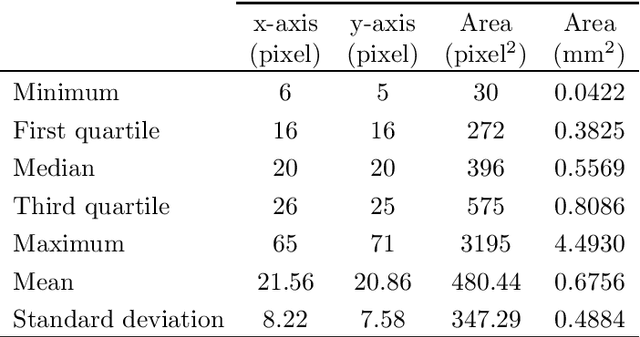

Abstract: Leather is a natural, durable, flexible, soft, supple and pliable material with a smooth texture. It is commonly used as a raw material to manufacture luxury consumer goods for high-end customers. To ensure good quality control of leather products, one critical process is visual inspection to spot random defects on the leather surface, which is usually conducted by experienced experts. This paper presents an automatic mechanism for leather defect classification. In particular, we focus on detecting tick-bite defects on a specific type of calf leather. Both handcrafted feature extractors (i.e., edge detectors and a statistical approach) and data-driven methods (i.e., an artificial neural network) are used to represent the leather patches. Then, multiple classifiers (i.e., decision trees, Support Vector Machines, nearest neighbour and ensemble classifiers) are exploited to determine whether the test sample patches contain defective segments. Using the proposed method, we achieve a classification accuracy of 84% on a sample of approximately 2500 leather patches of size 400 × 400.
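The two-stage idea in this abstract (represent each patch with handcrafted features, then classify it) can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the function names, the three features (mean, variance, mean gradient magnitude), the synthetic 8 × 8 patches, and the 1-nearest-neighbour rule are hypothetical stand-ins, not the paper's actual feature extractors or classifiers.

```python
import math

def patch_features(patch):
    """Return (mean, variance, mean edge strength) for a 2-D grayscale patch."""
    rows, cols = len(patch), len(patch[0])
    pixels = [v for row in patch for v in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((v - mean) ** 2 for v in pixels) / len(pixels)
    # Crude edge detector (assumption): gradient magnitude from forward differences.
    total = 0.0
    for r in range(rows - 1):
        for c in range(cols - 1):
            gx = patch[r][c + 1] - patch[r][c]
            gy = patch[r + 1][c] - patch[r][c]
            total += math.hypot(gx, gy)
    edge = total / ((rows - 1) * (cols - 1))
    return (mean, variance, edge)

def nearest_neighbour(train, query):
    """train is a list of (feature_vector, label); return the closest label."""
    return min(train, key=lambda item: math.dist(item[0], query))[1]

def make_patch(size, spot=None):
    """Synthetic patch: uniform grey, with an optional dark 2x2 spot at (row, col)."""
    patch = [[200.0] * size for _ in range(size)]
    if spot is not None:
        r0, c0 = spot
        for r in range(r0, r0 + 2):
            for c in range(c0, c0 + 2):
                patch[r][c] = 50.0
    return patch

# Toy training set: one clean patch and one patch with a simulated defect.
train = [
    (patch_features(make_patch(8)), "clean"),
    (patch_features(make_patch(8, spot=(2, 2))), "defective"),
]
prediction = nearest_neighbour(train, patch_features(make_patch(8, spot=(4, 3))))
```

In this toy setup the features do not depend on where the spot sits inside the patch, so a defect at a new position still maps close to the defective training example.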

OFF-ApexNet on Micro-expression Recognition System

May 10, 2018

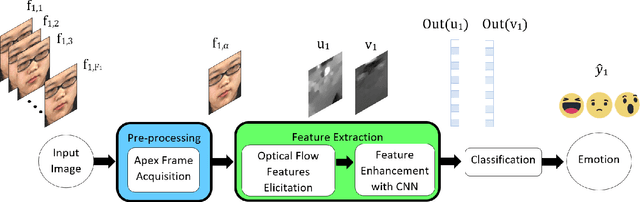

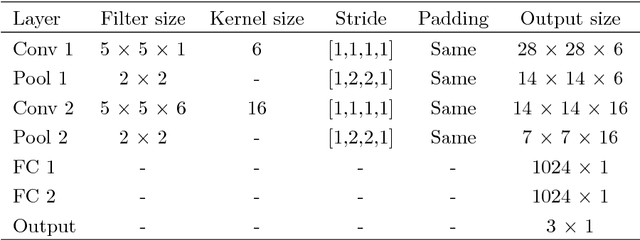

Abstract: When a person attempts to conceal an emotion, the genuine emotion manifests as a micro-expression. Exploration of automatic facial micro-expression recognition systems is relatively new in the computer vision domain. This is due to the difficulty of implementing optimal feature extraction methods that cope with the subtlety and brief motion characteristics of the expression. Most existing approaches extract the subtle facial movements using hand-crafted features. In this paper, we address the micro-expression recognition task with a convolutional neural network (CNN) architecture, which integrates the features extracted from each video. A new feature descriptor, Optical Flow Features from Apex frame Network (OFF-ApexNet), is introduced. This feature descriptor combines the optical flow guided context with the CNN. Firstly, we obtain the location of the apex frame from each video sequence, as it portrays the highest intensity of facial motion among all frames. Then, the optical flow information is obtained from the apex frame and a reference frame (i.e., the onset frame). Finally, the optical flow features are fed into a pre-designed CNN model for further feature enhancement as well as to carry out the expression classification. To evaluate the effectiveness of OFF-ApexNet, comprehensive evaluations are conducted on three public spontaneous micro-expression datasets (i.e., SMIC, CASME II and SAMM). The promising recognition result suggests that the proposed method can optimally describe the significant micro-expression details. In particular, we report that, in a multi-database setting with a leave-one-subject-out cross-validation experimental protocol, the recognition performance reaches 74.60% recognition accuracy and an F-measure of 71.04%. We also note that this is the first work that performs cross-dataset validation on three databases in this domain.
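The leave-one-subject-out protocol mentioned in this abstract holds out all clips from one subject per fold and trains on the rest. A minimal sketch of the split logic follows; the function name and the subject labels are hypothetical, and the paper's own data handling may differ.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for subject in sorted(set(subject_ids)):
        # All clips from the held-out subject form the test set.
        test_idx = [i for i, s in enumerate(subject_ids) if s == subject]
        # Clips from every other subject form the training set.
        train_idx = [i for i, s in enumerate(subject_ids) if s != subject]
        yield subject, train_idx, test_idx

# Hypothetical subject label for each video clip in a pooled dataset.
subjects = ["s01", "s01", "s02", "s03", "s03", "s03"]
folds = list(leave_one_subject_out(subjects))
```

Because no subject appears in both the training and test indices of a fold, the reported accuracy reflects performance on people the model has not seen.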
