Vyoma Raman

Bias, Consistency, and Partisanship in U.S. Asylum Cases: A Machine Learning Analysis of Extraneous Factors in Immigration Court Decisions

May 25, 2023

Abstract: In this study, we introduce a novel two-pronged scoring system to measure individual and systemic bias in immigration courts under the U.S. Executive Office for Immigration Review (EOIR). We analyze nearly 6 million immigration court proceedings and 228 case features to build on prior research showing that U.S. asylum decisions vary dramatically based on factors that are extraneous to the merits of a case. We close a critical gap in the literature of variability metrics that can span space and time. Using predictive modeling, we explain 58.54% of the total decision variability using two metrics: partisanship and inter-judge cohort consistency. Thus, whether the EOIR grants asylum to an applicant depends mostly on the combined effects of the political climate and the individual variability of the presiding judge, not the individual merits of the case. Using time series analysis, we also demonstrate that partisanship increased in the early 1990s but plateaued following the turn of the century. These conclusions are striking to the extent that they diverge from the U.S. immigration system's commitments to independence and due process. Our contributions expose systemic inequities in the U.S. asylum decision-making process, and we recommend improved and standardized variability metrics to better diagnose and monitor these issues.

Centering the Margins: Outlier-Based Identification of Harmed Populations in Toxicity Detection

May 24, 2023

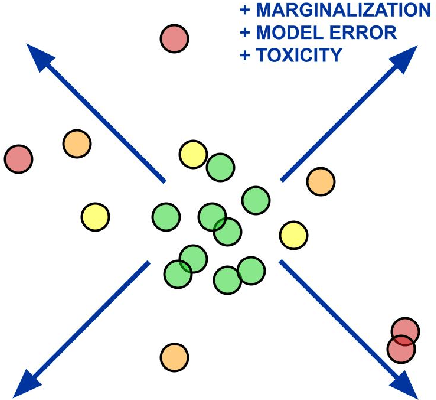

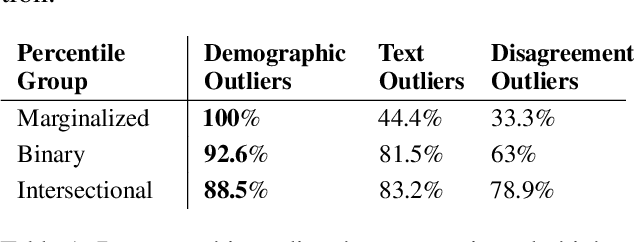

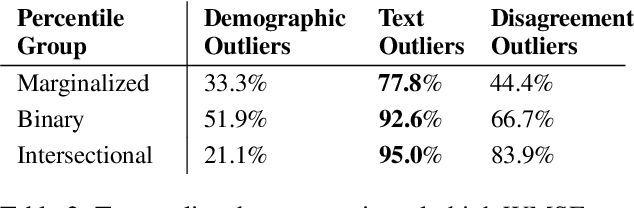

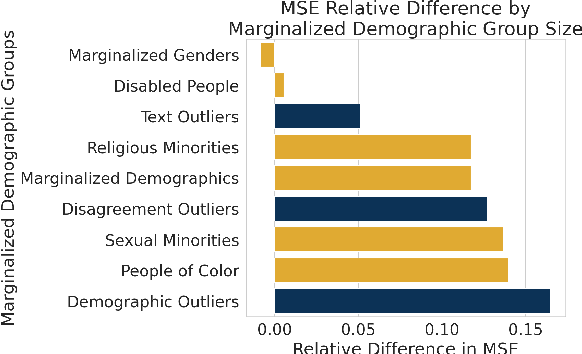

Abstract: A standard method for measuring the impacts of AI on marginalized communities is to determine performance discrepancies between specified demographic groups. These approaches aim to address harms toward vulnerable groups, but they obscure harm patterns faced by intersectional subgroups or shared across demographic groups. We instead operationalize "the margins" as data points that are statistical outliers due to having demographic attributes distant from the "norm" and measure harms toward these outliers. We propose a Group-Based Performance Disparity Index (GPDI) that measures the extent to which a subdivision of a dataset into subgroups identifies those facing increased harms. We apply our approach to detecting disparities in toxicity detection and find that text targeting outliers is 28% to 86% more toxic for all types of toxicity examined. We also discover that model performance is consistently worse for demographic outliers, with disparities in error between outliers and non-outliers ranging from 28% to 71% across toxicity types. Our outlier-based analysis has comparable or higher GPDI than traditional subgroup-based analyses, suggesting that outlier analysis enhances identification of subgroups facing greater harms. Finally, we find that minoritized racial and religious groups are most associated with outliers, which suggests that outlier analysis is particularly beneficial for identifying harms against those groups.
