Vinicius Ferraris

Coupled dictionary learning for unsupervised change detection between multi-sensor remote sensing images

Jul 21, 2018

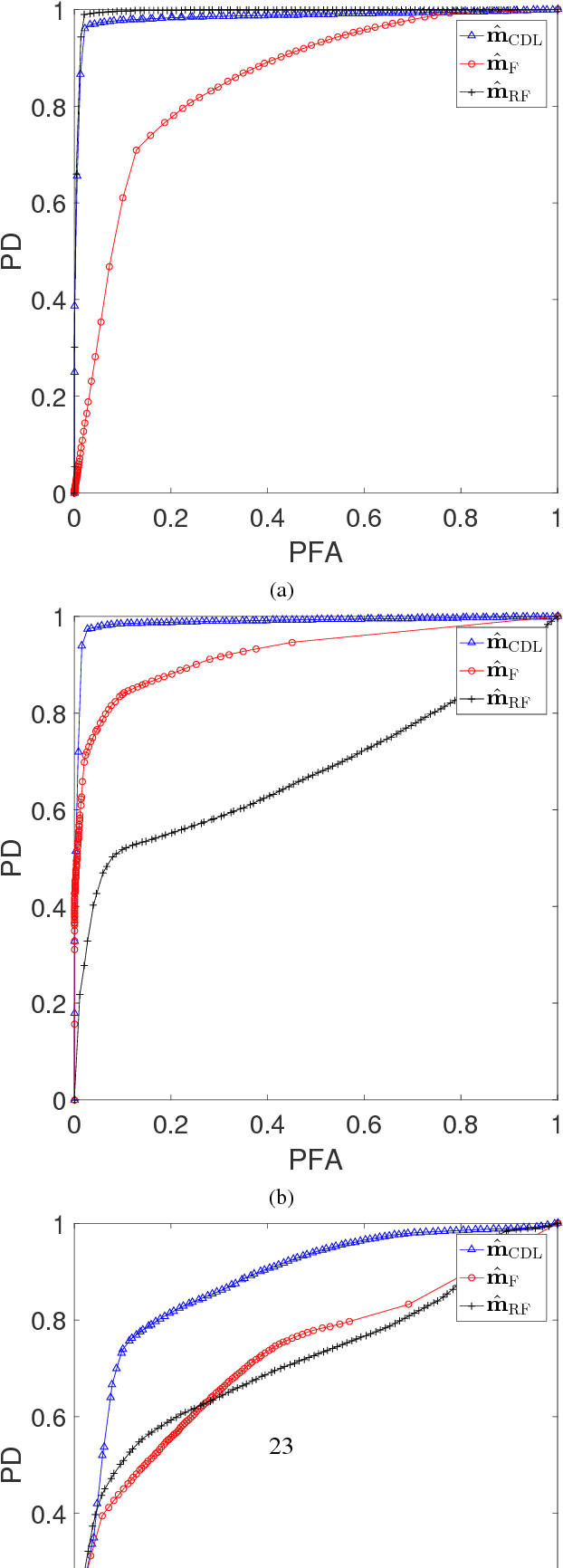

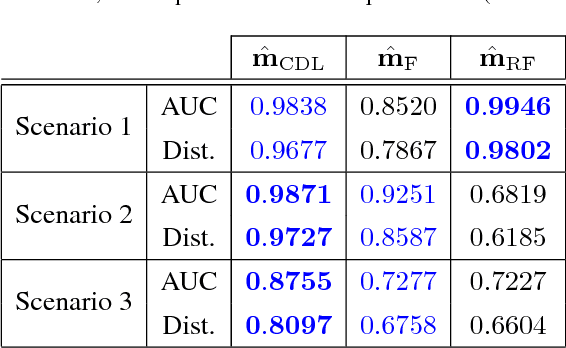

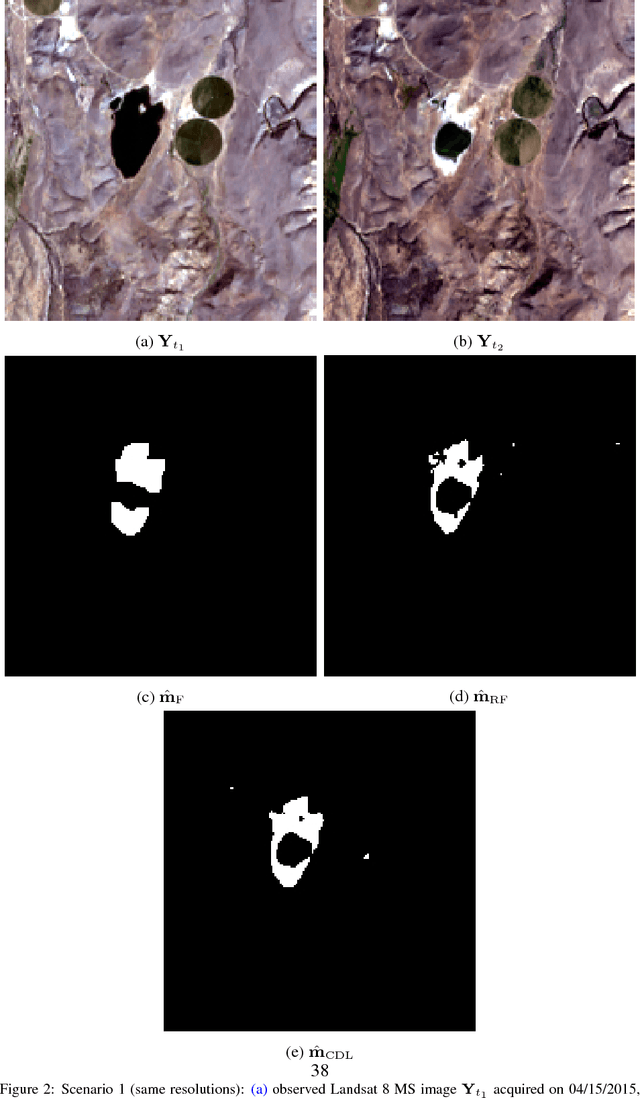

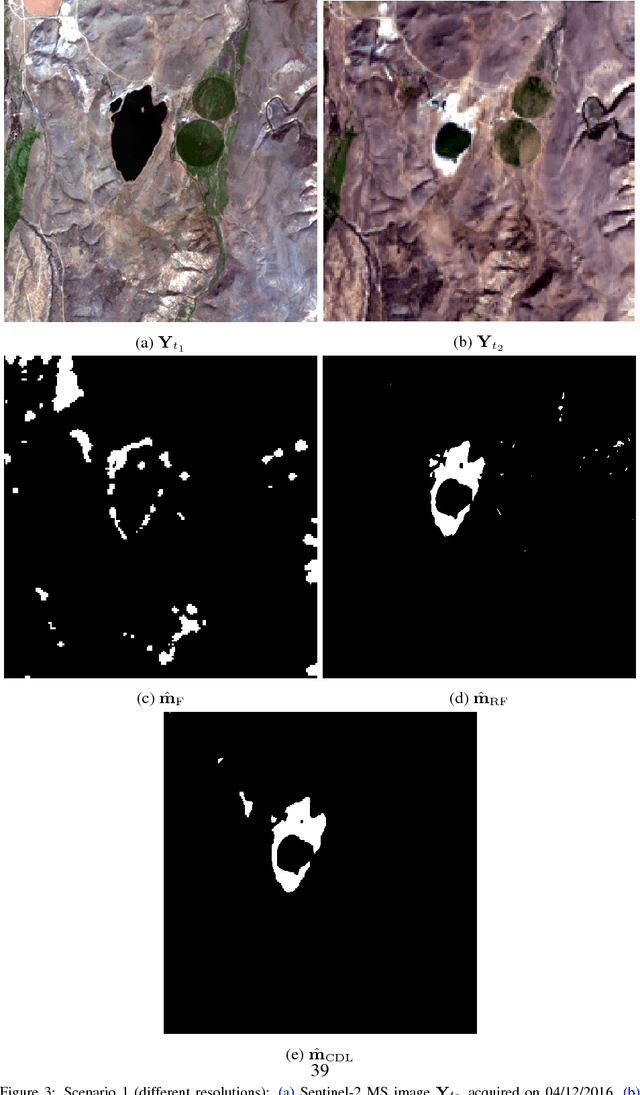

Abstract: Archetypal scenarios for change detection generally consider two images acquired through sensors of the same modality. However, in some specific cases such as emergency situations, the only images available may be those acquired through sensors with different characteristics. This paper addresses the problem of detecting changes, in an unsupervised manner, between two observed images acquired by different sensors. These sensor dissimilarities introduce additional issues in the context of operational change detection that most classical methods do not address. This paper introduces a novel framework to effectively exploit the available information by modeling the two observed images as sparse linear combinations of atoms belonging to an overcomplete pair of coupled dictionaries learnt from each observed image. As the two images cover the same geographical location, their codes are expected to be globally similar except for possible changes in sparse spatial locations. Thus, the change detection task is envisioned through a dual code estimation which enforces spatial sparsity in the difference between the estimated codes associated with each image. This problem is formulated as an inverse problem which is iteratively solved using an efficient proximal alternating minimization algorithm accounting for nonsmooth and nonconvex functions. The proposed method is applied to real multisensor images with both simulated yet realistic changes and real changes. A comparison with state-of-the-art change detection methods demonstrates the accuracy of the proposed strategy.
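A minimal sketch of the dual code estimation idea, under simplifying assumptions: the coupled dictionaries `D1` and `D2` are taken as already learned (random here), each column of `Y1`/`Y2` is a vectorized patch, and the code of image 2 is written as `A + dX`. The dictionary updates and nonconvex terms handled in the paper are omitted; this convex l1 + l2,1 variant, solved by alternating proximal gradient steps, and all function names are hypothetical illustrations rather than the authors' algorithm.

```python
import numpy as np


def soft_threshold(x, t):
    """Entry-wise prox of t * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def group_soft_threshold(x, t):
    """Column-wise prox of t * sum_j ||x[:, j]||_2 (group lasso)."""
    norms = np.linalg.norm(x, axis=0, keepdims=True)
    return x * np.maximum(1.0 - t / np.maximum(norms, 1e-12), 0.0)


def coupled_code_change_map(Y1, Y2, D1, D2, lam=0.01, gamma=0.1, n_iter=2000):
    """Hypothetical sketch: with fixed dictionaries, minimize
        0.5||Y1 - D1 A||^2 + 0.5||Y2 - D2 (A + dX)||^2
        + lam ||A||_1 + gamma * sum_j ||dX[:, j]||_2
    by alternating proximal gradient steps on A and dX.
    Returns the per-patch change map ||dX[:, j]||_2."""
    A = np.zeros((D1.shape[1], Y1.shape[1]))
    dX = np.zeros_like(A)
    # Step sizes from (upper bounds on) the Lipschitz constants of each block
    L_A = np.linalg.norm(D1, 2) ** 2 + np.linalg.norm(D2, 2) ** 2
    L_dX = np.linalg.norm(D2, 2) ** 2
    for _ in range(n_iter):
        R2 = Y2 - D2 @ (A + dX)
        grad_A = -D1.T @ (Y1 - D1 @ A) - D2.T @ R2
        A = soft_threshold(A - grad_A / L_A, lam / L_A)
        R2 = Y2 - D2 @ (A + dX)
        dX = group_soft_threshold(dX + D2.T @ R2 / L_dX, gamma / L_dX)
    return np.linalg.norm(dX, axis=0)


# Toy data: overcomplete random dictionaries with unit-norm atoms
rng = np.random.default_rng(0)
D1 = rng.standard_normal((16, 20))
D1 /= np.linalg.norm(D1, axis=0)
D2 = rng.standard_normal((18, 20))
D2 /= np.linalg.norm(D2, axis=0)
A_true = np.zeros((20, 50))
for j in range(50):
    A_true[rng.choice(20, 3, replace=False), j] = rng.standard_normal(3)
dX_true = np.zeros_like(A_true)
dX_true[:5, [5, 17]] = 2.0  # patches 5 and 17 are "changed"
Y1 = D1 @ A_true
Y2 = D2 @ (A_true + dX_true)
change_map = coupled_code_change_map(Y1, Y2, D1, D2)
```

Patches whose code-difference column stays nonzero are flagged as changed; the column-wise l2 penalty is what drives most columns of `dX` exactly to zero, i.e., makes the changes spatially sparse.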

Robust fusion algorithms for unsupervised change detection between multi-band optical images - A comprehensive case study

Apr 09, 2018

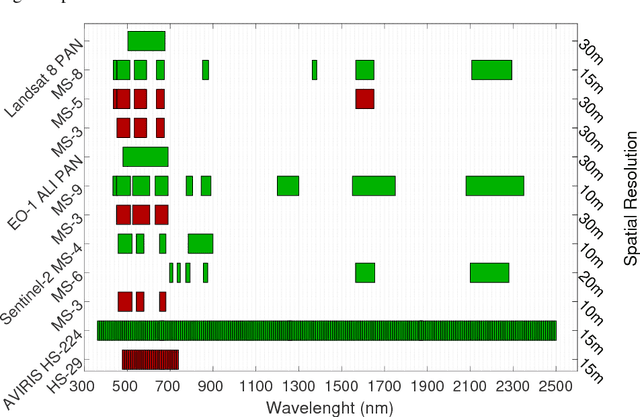

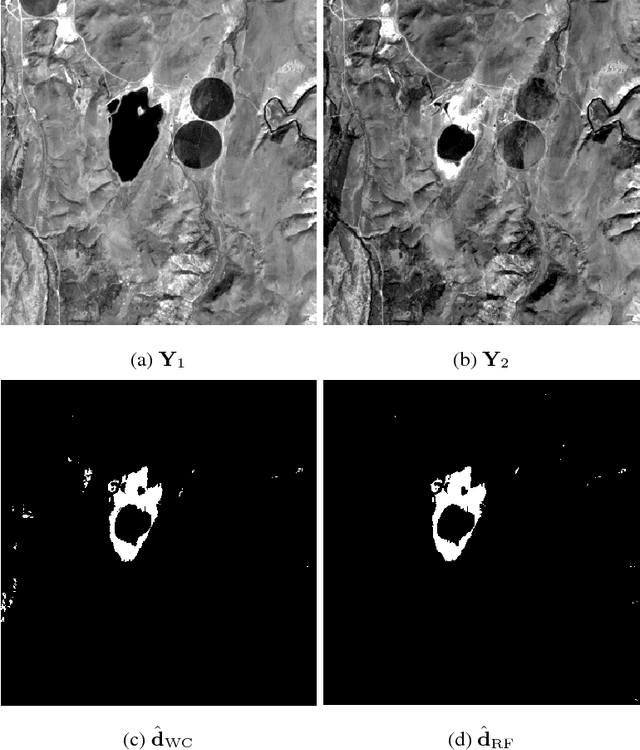

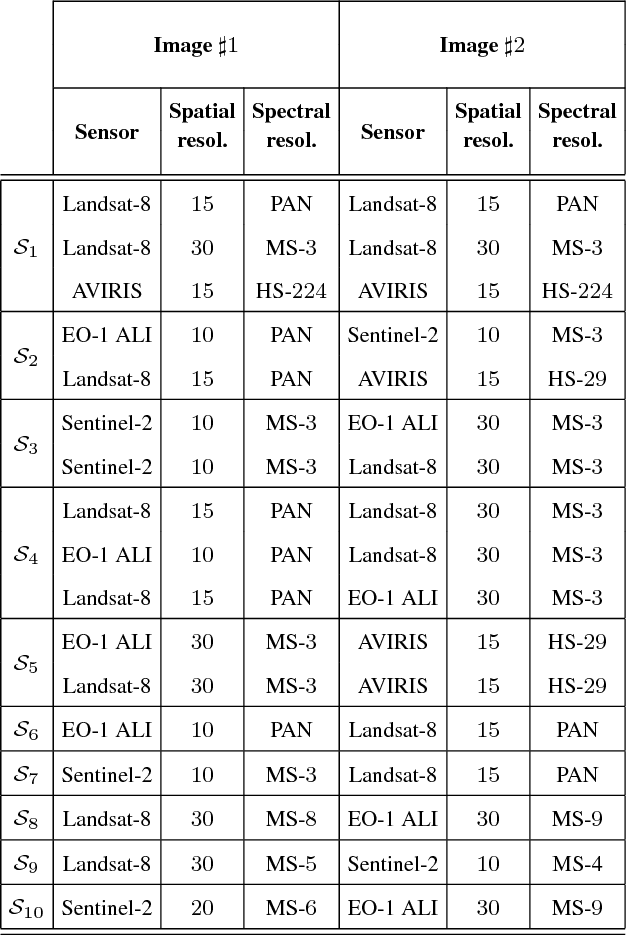

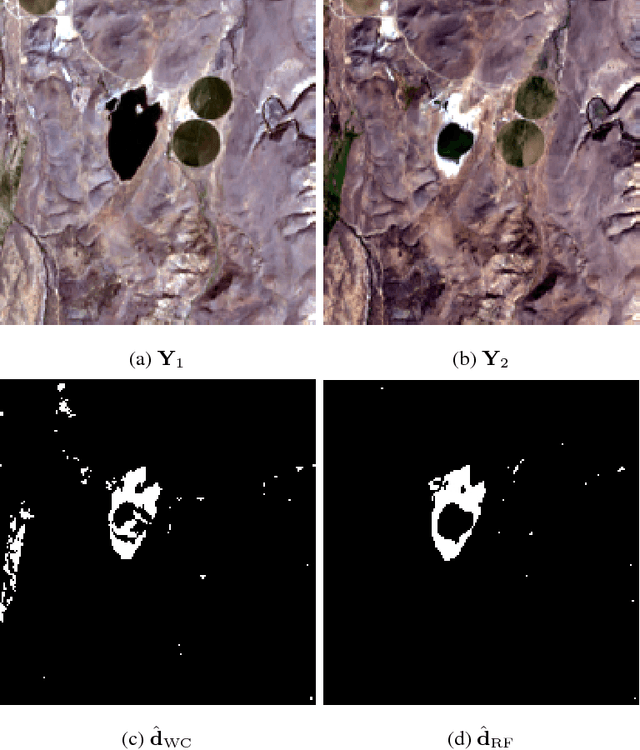

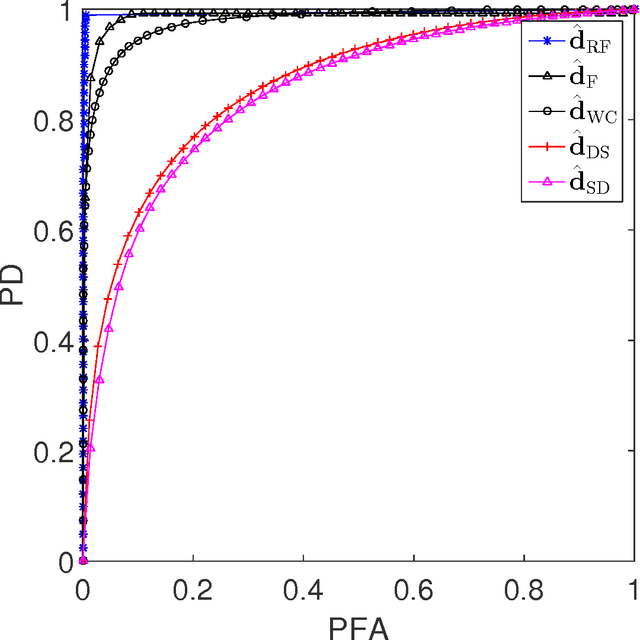

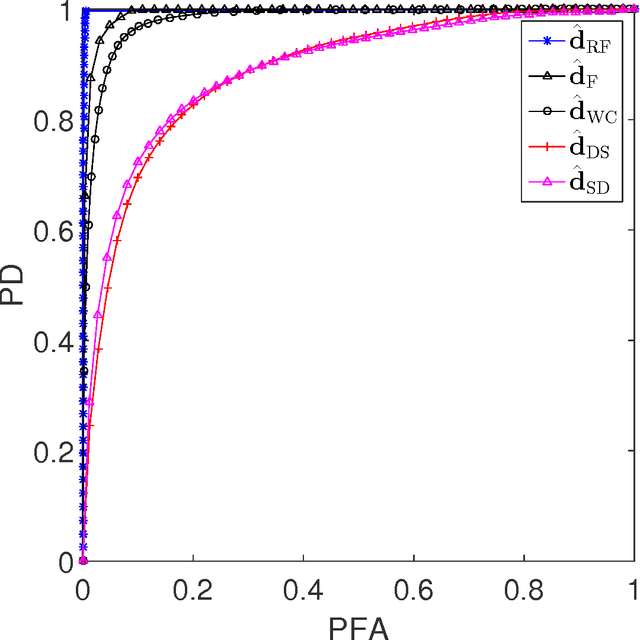

Abstract: Unsupervised change detection techniques are generally constrained to two multi-band optical images acquired at different times through sensors sharing the same spatial and spectral resolution. This scenario allows a direct comparison of homologous pixels, for instance by pixel-wise differencing. However, in some specific cases such as emergency situations, the only available images may be those acquired through different kinds of sensors with different resolutions. Recently, some change detection techniques dealing with images of different spatial and spectral resolutions have been proposed. Nevertheless, they focus on a specific scenario where one image has a high spatial and low spectral resolution while the other has a low spatial and high spectral resolution. This paper addresses the problem of detecting changes between any two multi-band optical images regardless of their spatial and spectral resolution disparities. We propose a method that effectively uses the available information by modeling the two observed images as spatially and spectrally degraded versions of two (unobserved) latent images characterized by the same high spatial and high spectral resolutions. Covering the same scene, the latent images are expected to be globally similar except for possible changes in spatially sparse locations. Thus, the change detection task is envisioned through a robust fusion task which enforces the differences between the estimated latent images to be spatially sparse. We show that this robust fusion can be formulated as an inverse problem which is iteratively solved using an alternating minimization strategy. The proposed framework is implemented for an exhaustive set of application scenarios and applied to real multi-band optical images. A comparison with state-of-the-art change detection methods demonstrates the accuracy of the proposed robust fusion-based strategy.
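One way to write this robust fusion down, assuming linear degradations and additive noise (the symbols are illustrative and not necessarily the paper's notation): each observed image Y_i (bands × pixels) is a spectrally degraded (L_i) and spatially degraded (R_i, e.g., blurring followed by downsampling) version of a latent image X_i. Writing X_2 = X_1 + ΔX, the change image ΔX is penalized with a column-wise l2,1 norm so that its nonzero columns (changed pixels) are sparse, while φ is a generic, hypothetical regularizer on the latent image:

```latex
\begin{aligned}
\mathbf{Y}_1 &= \mathbf{L}_1 \mathbf{X}_1 \mathbf{R}_1 + \mathbf{N}_1, \qquad
\mathbf{Y}_2 = \mathbf{L}_2 \mathbf{X}_2 \mathbf{R}_2 + \mathbf{N}_2, \qquad
\mathbf{X}_2 = \mathbf{X}_1 + \Delta\mathbf{X}, \\
(\hat{\mathbf{X}}_1, \widehat{\Delta\mathbf{X}}) &\in \arg\min_{\mathbf{X}_1,\, \Delta\mathbf{X}}
\tfrac12 \bigl\| \mathbf{Y}_1 - \mathbf{L}_1 \mathbf{X}_1 \mathbf{R}_1 \bigr\|_F^2
+ \tfrac12 \bigl\| \mathbf{Y}_2 - \mathbf{L}_2 (\mathbf{X}_1 + \Delta\mathbf{X}) \mathbf{R}_2 \bigr\|_F^2 \\
&\qquad + \lambda\, \phi(\mathbf{X}_1) + \gamma \sum_{p=1}^{n} \bigl\| \Delta\mathbf{x}_p \bigr\|_2 .
\end{aligned}
```

An alternating scheme then updates X_1 with ΔX fixed (a fusion step) and ΔX with X_1 fixed (a group-sparse correction step); the per-pixel norm ||Δx_p||_2 can serve as a change map.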

Robust Fusion of Multi-Band Images with Different Spatial and Spectral Resolutions for Change Detection

Sep 20, 2016

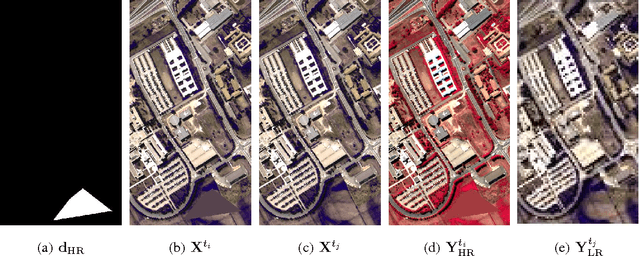

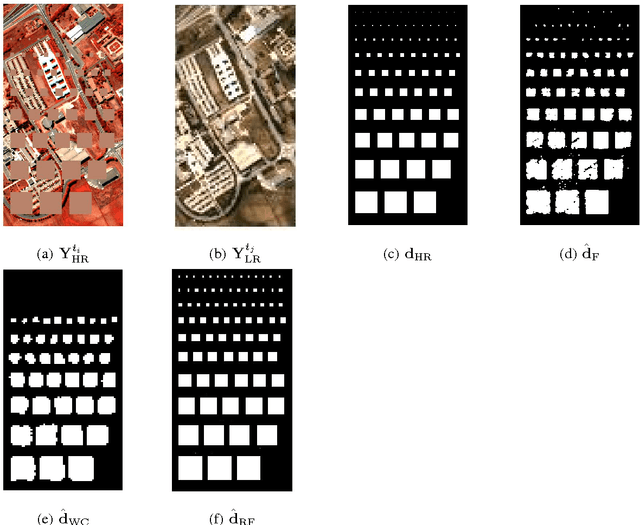

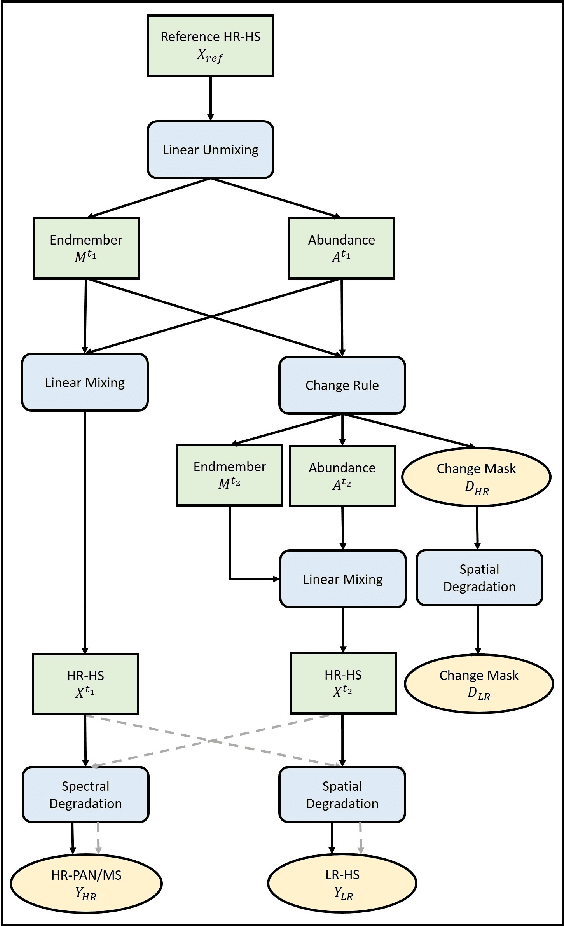

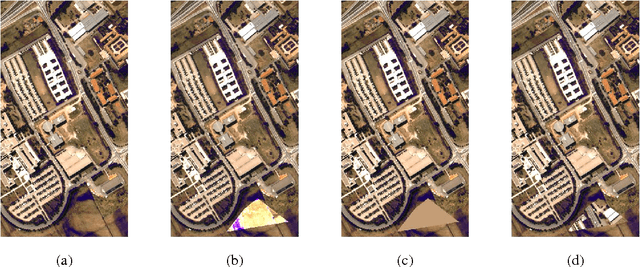

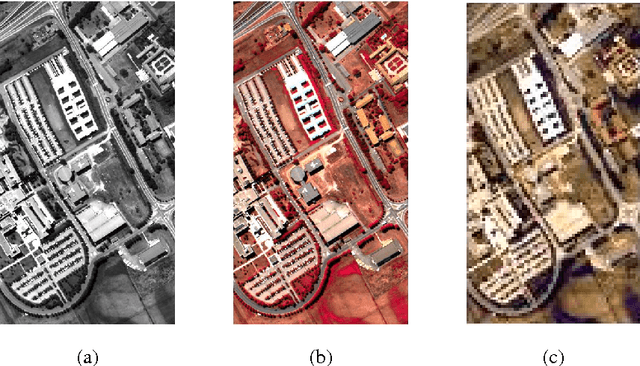

Abstract: Archetypal scenarios for change detection generally consider two images acquired through sensors of the same modality. However, in some specific cases such as emergency situations, the only images available may be those acquired through different kinds of sensors. More precisely, this paper addresses the problem of detecting changes between two multi-band optical images characterized by different spatial and spectral resolutions. This sensor dissimilarity introduces additional issues in the context of operational change detection. To alleviate these issues, classical change detection methods are applied after independent preprocessing steps (e.g., resampling) used to obtain the same spatial and spectral resolutions for the pair of observed images. Nevertheless, these preprocessing steps tend to discard relevant information. Conversely, in this paper, we propose a method that more effectively uses the available information by modeling the two observed images as spatially and spectrally degraded versions of two (unobserved) latent images characterized by the same high spatial and high spectral resolutions. As they cover the same scene, these latent images are expected to be globally similar except for possible changes in sparse spatial locations. Thus, the change detection task is envisioned through a robust multi-band image fusion method which enforces the differences between the estimated latent images to be spatially sparse. This robust fusion problem is formulated as an inverse problem which is iteratively solved using an efficient block-coordinate descent algorithm. The proposed method is applied to real panchromatic/multispectral and hyperspectral images with simulated realistic changes. A comparison with state-of-the-art change detection methods demonstrates the accuracy of the proposed strategy.
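A toy sketch of block-coordinate descent for robust fusion, under stated assumptions: the high-spatial-resolution image is a band-averaged view `L @ X1` of the "before" latent image, the high-spectral-resolution image is a 2×2 block-averaged view `(X1 + dX) @ S` of the "after" one, a small Tikhonov term `mu` stands in for the latent-image regularizer, and the data are noise-free. The X1 block is minimized exactly through a Sylvester equation and the dX block by proximal gradient steps; every name here is hypothetical, not the authors' code.

```python
import numpy as np


def downsampling_matrix(h, w, d):
    """S (h*w x (h//d)*(w//d)): averages each d x d block of a row-major image."""
    S = np.zeros((h * w, (h // d) * (w // d)))
    for r in range(h):
        for c in range(w):
            S[r * w + c, (r // d) * (w // d) + c // d] = 1.0 / d**2
    return S


def solve_sylvester_sym(A, B, C):
    """Solve A X + X B = C for symmetric positive definite A and symmetric PSD B."""
    a, U = np.linalg.eigh(A)
    b, V = np.linalg.eigh(B)
    return U @ ((U.T @ C @ V) / (a[:, None] + b[None, :])) @ V.T


def group_soft_threshold(x, t):
    """Column-wise prox of t * sum_p ||x[:, p]||_2."""
    norms = np.linalg.norm(x, axis=0, keepdims=True)
    return x * np.maximum(1.0 - t / np.maximum(norms, 1e-12), 0.0)


def robust_fusion_bcd(Y1, Y2, L, S, mu=1e-3, gamma=0.05, n_outer=100, n_inner=20):
    """Block-coordinate descent on
        0.5||Y1 - L X1||^2 + 0.5||Y2 - (X1 + dX) S||^2
        + 0.5 mu ||X1||^2 + gamma * sum_p ||dX[:, p]||_2."""
    n_bands, n_pix = L.shape[1], S.shape[0]
    dX = np.zeros((n_bands, n_pix))
    A = L.T @ L + mu * np.eye(n_bands)
    B = S @ S.T
    step = 1.0 / np.linalg.norm(S, 2) ** 2
    for _ in range(n_outer):
        # Fusion step: exact minimization in X1 (a Sylvester equation)
        X1 = solve_sylvester_sym(A, B, L.T @ Y1 + (Y2 - dX @ S) @ S.T)
        # Correction step: proximal gradient on dX with group sparsity
        R = Y2 - X1 @ S
        for _ in range(n_inner):
            grad = -(R - dX @ S) @ S.T
            dX = group_soft_threshold(dX - step * grad, step * gamma)
    return X1, np.linalg.norm(dX, axis=0)


rng = np.random.default_rng(1)
h = w = 8
L = np.kron(np.eye(2), np.full((1, 3), 1.0 / 3))  # 2 bands, each averaging 3 of 6
S = downsampling_matrix(h, w, 2)
X1_true = rng.uniform(0.0, 1.0, (6, h * w))
X2_true = X1_true.copy()
X2_true[:, [18, 19, 26, 27]] += 1.0               # change one 2x2 block
Y1 = L @ X1_true   # high spatial, low spectral resolution (before)
Y2 = X2_true @ S   # low spatial, high spectral resolution (after)
_, change_map = robust_fusion_bcd(Y1, Y2, L, S)
```

Because `Y2` only sees 2×2 block averages, this toy changes a whole block; a change confined to a single high-resolution pixel could not be localized inside its block from `Y2` alone without stronger priors on the latent images.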

Detecting Changes Between Optical Images of Different Spatial and Spectral Resolutions: a Fusion-Based Approach

Sep 20, 2016

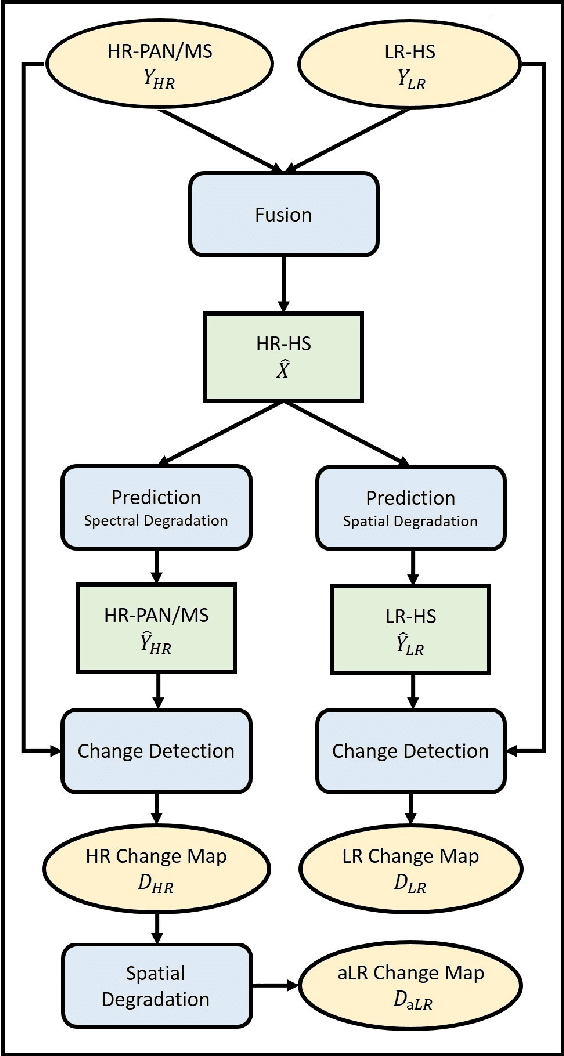

Abstract: Change detection is one of the most challenging issues when analyzing remotely sensed images. Comparing several multi-date images acquired through the same kind of sensor is the most common scenario. Conversely, designing robust, flexible and scalable algorithms for change detection becomes even more challenging when the images have been acquired by two different kinds of sensors. This situation arises in emergency scenarios under critical constraints. This paper presents, to the best of the authors' knowledge, the first strategy to deal with optical images characterized by dissimilar spatial and spectral resolutions. Typical scenarios considered include change detection between panchromatic or multispectral and hyperspectral images. The proposed strategy consists of a 3-step procedure: i) inferring a high spatial and spectral resolution image by fusion of the two observed images, one characterized by a low spatial resolution and the other by a low spectral resolution, ii) predicting two images with respectively the same spatial and spectral resolutions as the observed images by degradation of the fused image and iii) applying a decision rule to each pair of observed and predicted images characterized by the same spatial and spectral resolutions to identify changes. The performance of the proposed framework is evaluated on real images with simulated realistic changes.
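The 3-step procedure can be sketched as follows, assuming linear degradations (`L` spectral, `S` spatial) and using a regularized least-squares fusion and a thresholded per-pixel spectral distance as stand-ins; the paper's actual fusion method and decision rule may differ, and every name and parameter value here is hypothetical.

```python
import numpy as np


def block_average_matrix(h, w, d):
    """Hypothetical spatial degradation S: averages each d x d block of a
    row-major h x w image (columns of X are pixels)."""
    S = np.zeros((h * w, (h // d) * (w // d)))
    for r in range(h):
        for c in range(w):
            S[r * w + c, (r // d) * (w // d) + c // d] = 1.0 / d**2
    return S


def fuse_least_squares(Y_ms, Y_hs, L, S, mu=1e-3):
    """Step i) stand-in fusion: argmin_X ||Y_ms - L X||^2 + ||Y_hs - X S||^2 + mu ||X||^2,
    i.e. the Sylvester equation (L^T L + mu I) X + X S S^T = L^T Y_ms + Y_hs S^T."""
    a, U = np.linalg.eigh(L.T @ L + mu * np.eye(L.shape[1]))
    b, V = np.linalg.eigh(S @ S.T)
    C = U.T @ (L.T @ Y_ms + Y_hs @ S.T) @ V
    return U @ (C / (a[:, None] + b[None, :])) @ V.T


def detect_changes(Y_ms, Y_hs, L, S, threshold=0.1):
    X_hat = fuse_least_squares(Y_ms, Y_hs, L, S)
    # Step ii) predict images with the observed resolutions by degrading X_hat
    Y_ms_pred = L @ X_hat
    Y_hs_pred = X_hat @ S
    # Step iii) decision rule on each (observed, predicted) pair
    ms_map = np.linalg.norm(Y_ms - Y_ms_pred, axis=0) > threshold
    hs_map = np.linalg.norm(Y_hs - Y_hs_pred, axis=0) > threshold
    return ms_map, hs_map


# Toy example: 6-band latent images on an 8 x 8 grid
rng = np.random.default_rng(1)
h = w = 8
L = np.kron(np.eye(2), np.full((1, 3), 1.0 / 3))  # MS: 2 bands, each averaging 3 HS bands
S = block_average_matrix(h, w, 2)                 # HS: 2x2 block averaging
X_before = rng.uniform(0.0, 1.0, (6, h * w))
X_after = X_before.copy()
X_after[:, [18, 19, 26, 27]] += 1.0               # change one 2x2 block
Y_ms = L @ X_before   # high spatial, low spectral resolution (before)
Y_hs = X_after @ S    # low spatial, high spectral resolution (after)
ms_map, hs_map = detect_changes(Y_ms, Y_hs, L, S)
```

Each change map lives at the resolution of its observed image: the multispectral-domain map is at full spatial resolution, while the hyperspectral-domain map is at the coarse resolution.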
