Vincent Wade

Who Is Lagging Behind: Profiling Student Behaviors with Graph-Level Encoding in Curriculum-Based Online Learning Systems

Aug 26, 2025

Abstract: The surge in the adoption of Intelligent Tutoring Systems (ITSs) in education, while being integral to curriculum-based learning, can inadvertently exacerbate performance gaps. To address this problem, student profiling becomes crucial for tracking progress, identifying struggling students, and alleviating disparities among students. Such profiling requires measuring student behaviors and performance across different aspects, such as content coverage, learning intensity, and proficiency in different concepts within a learning topic. In this study, we introduce CTGraph, a graph-level representation learning approach to profile learner behaviors and performance in a self-supervised manner. Our experiments demonstrate that CTGraph can provide a holistic view of student learning journeys, accounting for different aspects of student behaviors and performance, as well as variations in their learning paths as aligned to the curriculum structure. We also show that our approach can identify struggling students and provide comparative analysis of diverse groups to pinpoint when and where students are struggling. As such, our approach opens more opportunities to empower educators with rich insights into student learning journeys and paves the way for more targeted interventions.

Comparing Abstractive Summaries Generated by ChatGPT to Real Summaries Through Blinded Reviewers and Text Classification Algorithms

Mar 30, 2023

Abstract: Large Language Models (LLMs) have gathered significant attention due to their impressive performance on a variety of tasks. ChatGPT, developed by OpenAI, is a recent addition to the family of language models and is being called a disruptive technology by a few, owing to its human-like text-generation capabilities. Although many anecdotal examples across the internet have evaluated ChatGPT's strengths and weaknesses, only a few systematic research studies exist. To contribute to the body of systematic research on ChatGPT, we evaluate the performance of ChatGPT on abstractive summarization by means of automated metrics and blinded human reviewers. We also build automatic text classifiers to detect ChatGPT-generated summaries. We found that while text classification algorithms can distinguish between real and generated summaries, humans are unable to distinguish between real summaries and those produced by ChatGPT.

An Empirical Study of Topic Transition in Dialogue

Nov 30, 2021

Abstract: Transitioning between topics is a natural component of human-human dialog. Although topic transition has been studied in dialogue for decades, only a handful of corpus-based studies have investigated the subtleties of topic transitions. Thus, this study annotates 215 conversations from the Switchboard corpus and investigates how variables such as conversation length, number of topic transitions, the share of topic transitions by participant, and turns per topic are related. This work presents an empirical study of topic transition in the Switchboard corpus, followed by modelling topic transition with a precision of 83% on the in-domain (id) test set and 82% on 10 out-of-domain (ood) test set. It is envisioned that this work will help in emulating human-human like topic transition in open-domain dialog systems.

Enhancing Self-Disclosure In Neural Dialog Models By Candidate Re-ranking

Sep 10, 2021

Abstract: Neural language modelling has advanced the state of the art in different downstream Natural Language Processing (NLP) tasks. One such area is open-domain dialog modelling, where neural dialog models based on GPT-2, such as DialoGPT, have shown promising performance in single-turn conversation. However, such neural dialog models have been criticized for generating responses which, although they may be relevant to the previous human response, tend to quickly dissipate human interest and descend into trivial conversation. One reason for such performance is the lack of an explicit conversation strategy in human-machine conversation. Humans employ a range of conversation strategies while engaging in a conversation; one such key social strategy is self-disclosure (SD), the phenomenon of revealing information about oneself to others. Social penetration theory (SPT) proposes that communication between two people moves from shallow to deeper levels as the relationship progresses, primarily through self-disclosure. Disclosure helps in creating rapport among the participants engaged in a conversation. In this paper, a Self-disclosure enhancement architecture (SDEA) is introduced, utilizing a Self-disclosure Topic Model (SDTM) during the inference stage of a neural dialog model to re-rank response candidates and thereby enhance self-disclosure in single-turn responses from the model.

Topic Detection from Conversational Dialogue Corpus with Parallel Dirichlet Allocation Model and Elbow Method

Jun 05, 2020

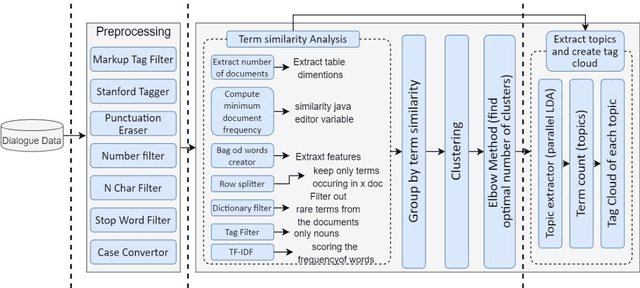

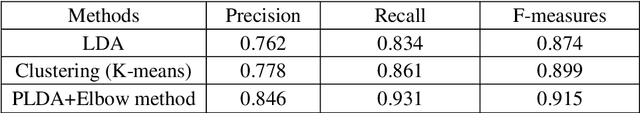

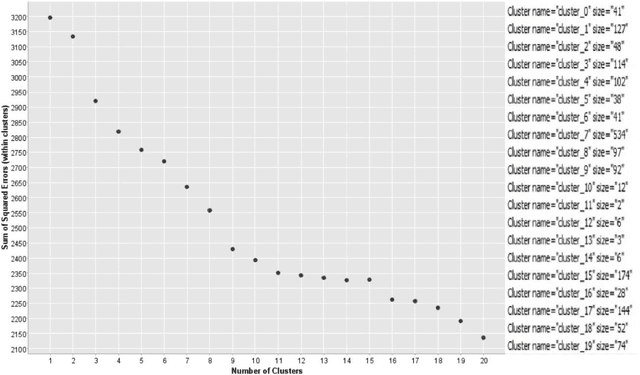

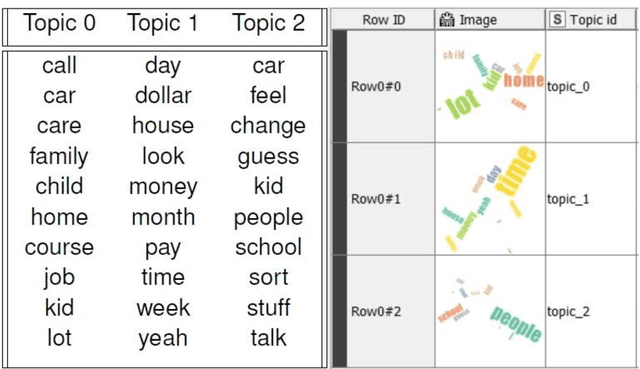

Abstract: A conversational system needs to know how to switch between topics to continue the conversation for a more extended period. For this reason, topic detection from dialogue corpora has become an important task, and accurate prediction of conversation topics is important for creating coherent and engaging dialogue systems. In this paper, we propose a topic detection approach with the Parallel Latent Dirichlet Allocation (PLDA) model by clustering a vocabulary of known similar words based on TF-IDF scores and the Bag of Words (BOW) technique. In the experiment, we use K-means clustering with the Elbow Method for interpretation and validation of within-cluster consistency to select the optimal number of clusters. We evaluate our approach by comparing it with traditional LDA and clustering techniques. The experimental results show that combining PLDA with the Elbow Method selects the optimal number of clusters and refines the topics for the conversation.
