Vilde B. Gjærum

Real-Time Counterfactual Explanations For Robotic Systems With Multiple Continuous Outputs

Dec 08, 2022

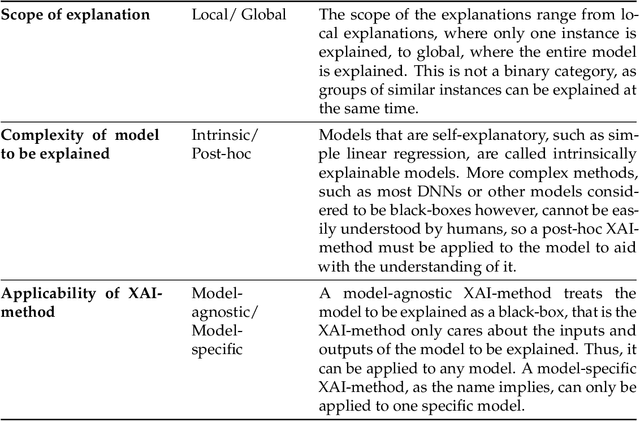

Abstract: Although many machine learning methods, especially from the field of deep learning, have been instrumental in addressing challenges within robotic applications, we cannot take full advantage of such methods until they can provide performance and safety guarantees. The lack of trust that impedes the use of these methods mainly stems from a lack of human understanding of what exactly machine learning models have learned, and how robust their behaviour is. This is the problem the field of explainable artificial intelligence aims to solve. Based on insights from the social sciences, we know that humans prefer contrastive explanations, i.e., explanations answering the hypothetical question "what if?". In this paper, we show that linear model trees are capable of producing answers to such questions, so-called counterfactual explanations, for robotic systems, including in the case of multiple, continuous inputs and outputs. We demonstrate the use of this method to produce counterfactual explanations for two robotic applications. Additionally, we explore the issue of infeasibility, which is of particular interest in systems governed by the laws of physics.
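To make the idea of a counterfactual explanation concrete, the following is a minimal sketch and not the paper's algorithm. It assumes a single output and a single leaf of a linear model tree, where the output is `bias + weights · x`, and it assumes the leaf's region can be described by simple per-feature bounds. The function name `counterfactual_in_leaf` and the parameters `lower` and `upper` are hypothetical. The sketch finds the smallest (Euclidean) change to the input that reaches a desired output, then flags whether that input is still inside the bounds, which is one simple way to think about infeasibility.

```python
from typing import List, Optional, Tuple


def counterfactual_in_leaf(
    weights: List[float],
    bias: float,
    x: List[float],
    target: float,
    lower: List[float],
    upper: List[float],
) -> Optional[Tuple[List[float], bool]]:
    """Smallest L2 change to x so that bias + weights . x equals target.

    Returns (x_cf, feasible), where feasible says whether x_cf stays
    inside the assumed box [lower, upper]. Returns None if the leaf's
    output does not depend on any feature.
    """
    y = bias + sum(w * v for w, v in zip(weights, x))
    norm_sq = sum(w * w for w in weights)
    if norm_sq == 0.0:
        return None  # constant leaf: no input change can move the output

    # Move along the weight vector, the direction of steepest change.
    scale = (target - y) / norm_sq
    x_cf = [v + scale * w for v, w in zip(x, weights)]

    feasible = all(lo <= v <= hi for v, lo, hi in zip(x_cf, lower, upper))
    return x_cf, feasible


# Hypothetical leaf: y = 2*x0 + 1*x1, current output 3.0, desired output 8.0.
x_cf, feasible = counterfactual_in_leaf(
    weights=[2.0, 1.0], bias=0.0, x=[1.0, 1.0], target=8.0,
    lower=[0.0, 0.0], upper=[5.0, 5.0],
)
print(x_cf, feasible)  # [3.0, 2.0] True
```

Tightening the bound on the first feature (for example `upper=[2.5, 5.0]`) makes the same counterfactual infeasible, which illustrates why physical limits matter for this kind of explanation.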

Approximating a deep reinforcement learning docking agent using linear model trees

Mar 01, 2022

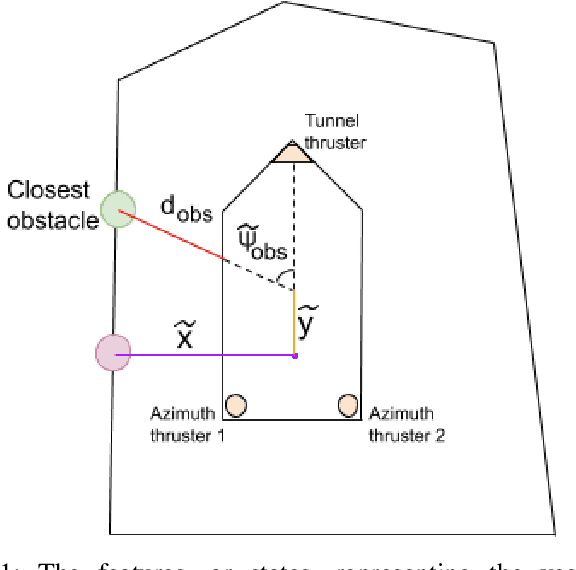

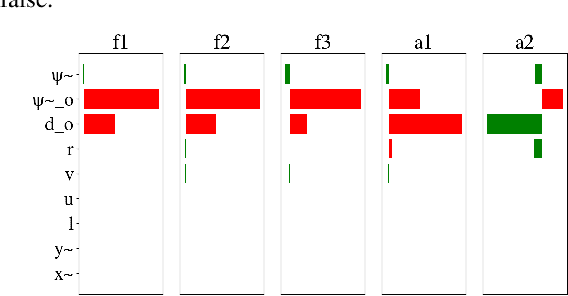

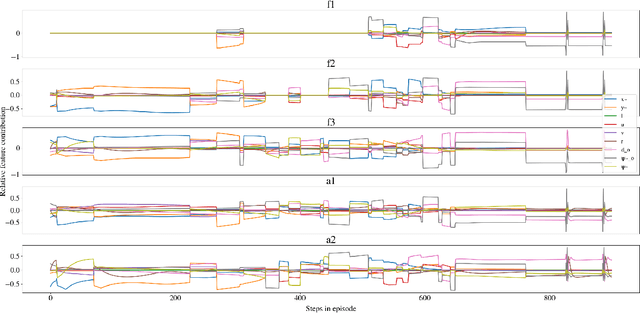

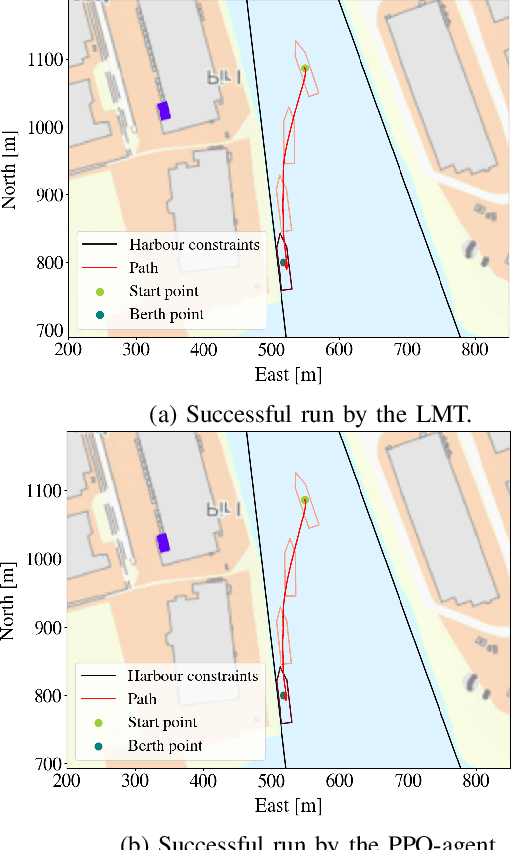

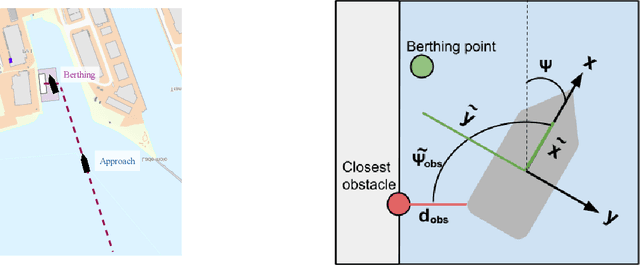

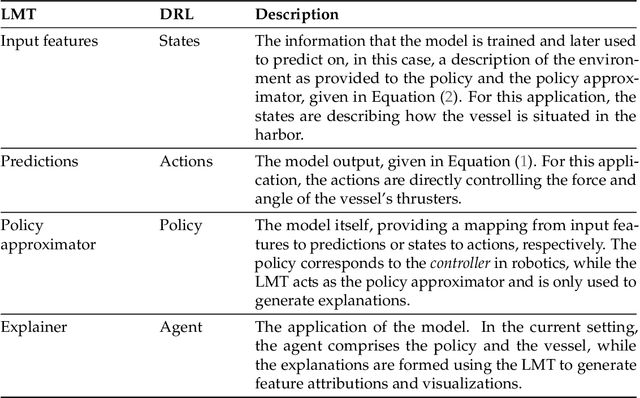

Abstract: Deep reinforcement learning has led to numerous notable results in robotics. However, deep neural networks (DNNs) are unintuitive, which makes it difficult to understand their predictions and strongly limits their potential for real-world applications due to economic, safety, and assurance reasons. To remedy this problem, a number of explainable AI methods have been presented, such as SHAP and LIME, but these can either be too costly to be used in real-time robotic applications or provide only local explanations. In this paper, the main contribution is the use of a linear model tree (LMT) to approximate a DNN policy, originally trained via proximal policy optimization (PPO), for an autonomous surface vehicle with five control inputs performing a docking operation. The two main benefits of the proposed approach are: a) LMTs are transparent, which makes it possible to directly associate the outputs (control actions, in our case) with specific values of the input features, and b) LMTs are computationally efficient and can provide information in real time. In our simulations, the opaque DNN policy controls the vehicle and the LMT runs in parallel to provide explanations in the form of feature attributions. Our results indicate that LMTs can be a useful component within digital assurance frameworks for autonomous ships.
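As an illustration of why a linear model tree is transparent, here is a minimal sketch under stated assumptions; it is not the authors' implementation. It assumes a tree whose internal nodes test one feature against a threshold and whose leaves hold a linear model, and it takes the attribution of each feature to be its leaf coefficient times its value. The class and function names (`Leaf`, `Split`, `find_leaf`, `predict`, `attributions`) and the example numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Leaf:
    weights: List[float]  # one coefficient per input feature
    bias: float


@dataclass
class Split:
    feature: int          # index of the feature tested at this node
    threshold: float
    left: "Node"          # taken when x[feature] <= threshold
    right: "Node"


Node = Union[Leaf, Split]


def find_leaf(node: Node, x: List[float]) -> Leaf:
    """Follow the threshold tests until a leaf is reached."""
    while isinstance(node, Split):
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node


def predict(tree: Node, x: List[float]) -> float:
    leaf = find_leaf(tree, x)
    return leaf.bias + sum(w * v for w, v in zip(leaf.weights, x))


def attributions(tree: Node, x: List[float]) -> List[float]:
    """Per-feature contribution to the output within the active leaf."""
    leaf = find_leaf(tree, x)
    return [w * v for w, v in zip(leaf.weights, x)]


# Hypothetical two-leaf tree over two features.
tree = Split(
    feature=0, threshold=1.0,
    left=Leaf(weights=[2.0, 0.0], bias=0.5),
    right=Leaf(weights=[0.5, -1.0], bias=0.0),
)
x = [2.0, 3.0]
print(predict(tree, x))       # -2.0
print(attributions(tree, x))  # [1.0, -3.0]
```

Both lookups cost one tree traversal and one dot product, which is consistent with the abstract's point that such explanations can be produced in real time. In this sketch the attributions plus the leaf bias sum to the prediction.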

Explaining a Deep Reinforcement Learning Docking Agent Using Linear Model Trees with User Adapted Visualization

Mar 01, 2022

Abstract: Deep neural networks (DNNs) can be useful within the marine robotics field, but their usefulness is limited by their black-box nature. Explainable artificial intelligence methods attempt to understand how such black boxes make their decisions. In this work, linear model trees (LMTs) are used to approximate the DNN controlling an autonomous surface vessel (ASV) in a simulated environment and then run in parallel with the DNN to give explanations in the form of feature attributions in real time. How well a model can be understood depends not only on the explanation itself, but also on how well it is presented and adapted to the receiver of said explanation. Different end-users may need different types of explanations, as well as different representations of them. The main contributions of this work are (1) significantly improving both the accuracy and the build time of a greedy approach for building LMTs by introducing ordering of features in the splitting of the tree, (2) giving an overview of the characteristics of the seafarer/operator and the developer as two different end-users of the agent and receivers of the explanations, and (3) suggesting one visualization of the docking agent, the environment, and the feature attributions given by the LMT for when the developer is the end-user of the system, and another for when the seafarer or operator is the end-user, based on their different characteristics.
