Varun Rao

LLMs Meet Finance: Fine-Tuning Foundation Models for the Open FinLLM Leaderboard

Apr 17, 2025

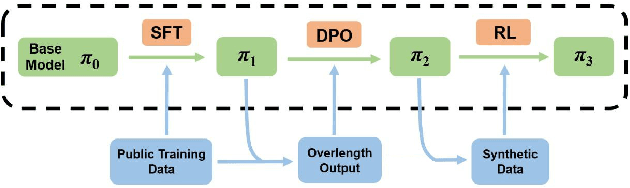

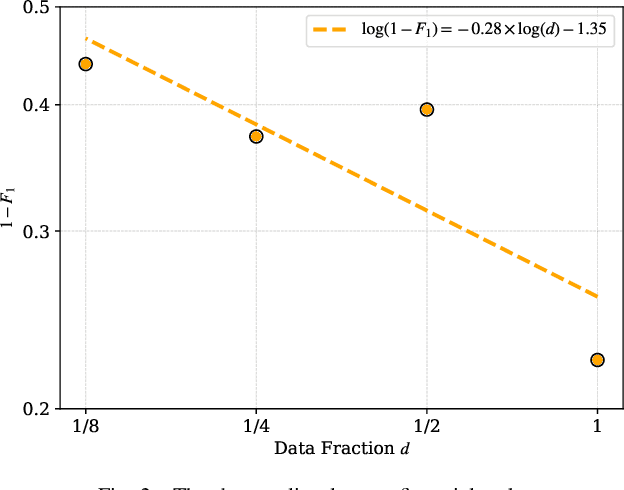

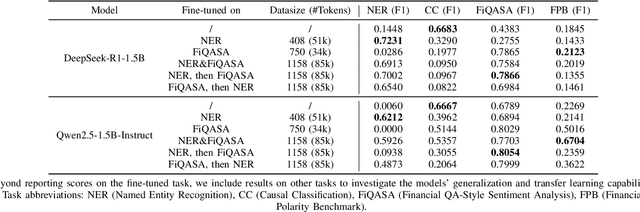

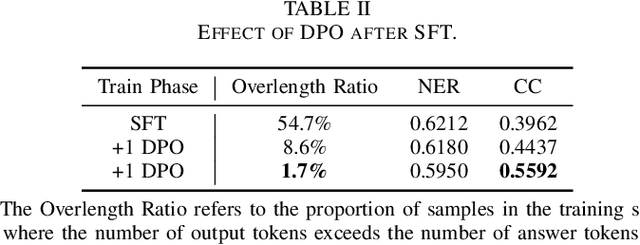

Abstract: This paper investigates the application of large language models (LLMs) to financial tasks. We fine-tuned foundation models using the Open FinLLM Leaderboard as a benchmark. Building on Qwen2.5 and DeepSeek-R1, we employed techniques including supervised fine-tuning (SFT), direct preference optimization (DPO), and reinforcement learning (RL) to enhance their financial capabilities. The fine-tuned models demonstrated substantial performance gains across a wide range of financial tasks. Moreover, we measured the data scaling law in the financial domain. Our work demonstrates the potential of LLMs in financial applications.

An Effective Pixel-Wise Approach for Skin Colour Segmentation Using Pixel Neighbourhood Technique

Aug 24, 2021

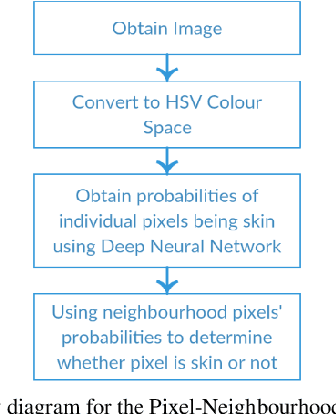

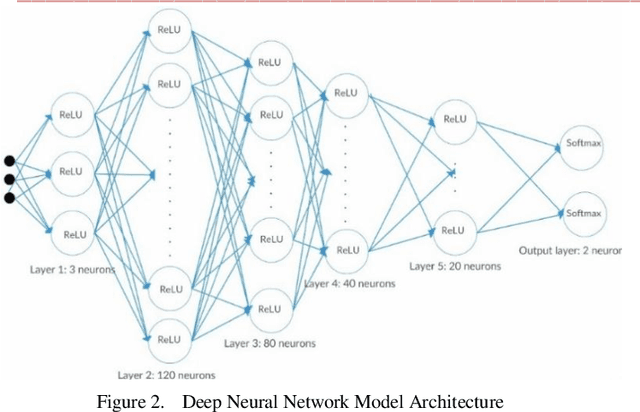

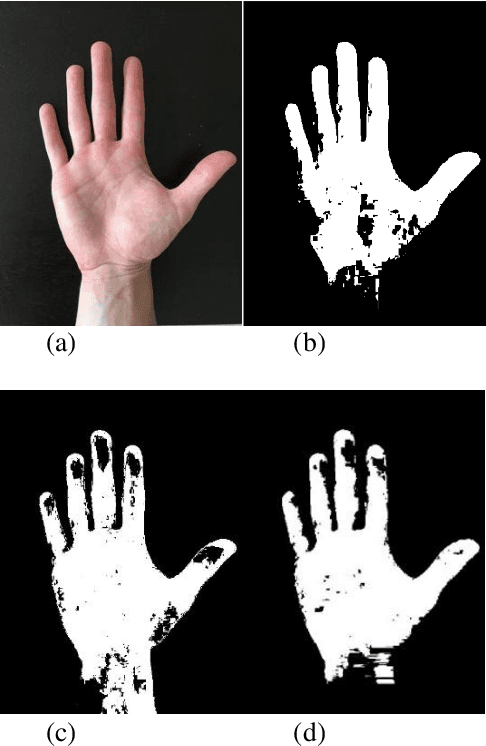

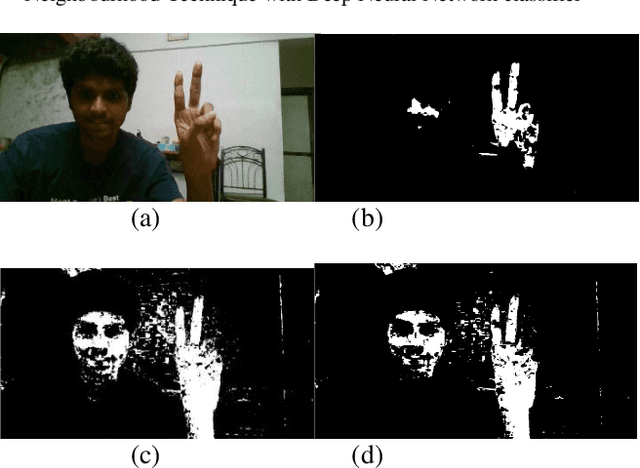

Abstract: This paper presents a novel technique for skin colour segmentation that overcomes the limitations of existing techniques such as Colour Range Thresholding. Skin colour segmentation is affected by variation in skin colour and in the surrounding lighting conditions, which leads to poor skin segmentation with many techniques. We propose a new two-stage Pixel Neighbourhood technique that classifies each pixel as skin or non-skin based on its neighbouring pixels. The first stage calculates the probability of each pixel being skin by passing the HSV values of the pixel to a Deep Neural Network model. The second stage calculates the likelihood of a pixel being skin from the probabilities of its neighbouring pixels. This technique performs skin colour segmentation better than the existing techniques.

* 5 pages
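
The second stage described in the abstract above can be illustrated with a minimal sketch. It assumes the per-pixel skin probabilities from the first stage are already available as a 2-D array; the Deep Neural Network itself is not reproduced here. The function name `neighbourhood_skin_mask`, the square window, the plain averaging rule, and the `radius` and `threshold` parameters are all illustrative assumptions, not the authors' published method.

```python
import numpy as np

def neighbourhood_skin_mask(prob, radius=1, threshold=0.5):
    """Label a pixel as skin when the mean stage-1 probability over its
    (2*radius+1) x (2*radius+1) neighbourhood reaches `threshold`.

    `prob` is a 2-D array of per-pixel skin probabilities (assumed to come
    from the stage-1 classifier). Averaging is an assumed combination rule.
    """
    prob = np.asarray(prob, dtype=float)
    k = 2 * radius + 1
    h, w = prob.shape
    # Replicate edge values so border pixels also have a full window.
    padded = np.pad(prob, radius, mode="edge")
    acc = np.zeros_like(prob)
    # Sum the k*k shifted copies of the map, i.e. a box filter.
    for dy in range(k):
        for dx in range(k):
            acc += padded[dy:dy + h, dx:dx + w]
    return (acc / (k * k)) >= threshold
```

With this rule, an isolated high-probability pixel surrounded by low-probability pixels is rejected, while a single low-probability pixel inside a skin region is filled in. This is the kind of noise that a purely per-pixel threshold cannot correct.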

Real-time Indian Sign Language (ISL) Recognition

Aug 24, 2021

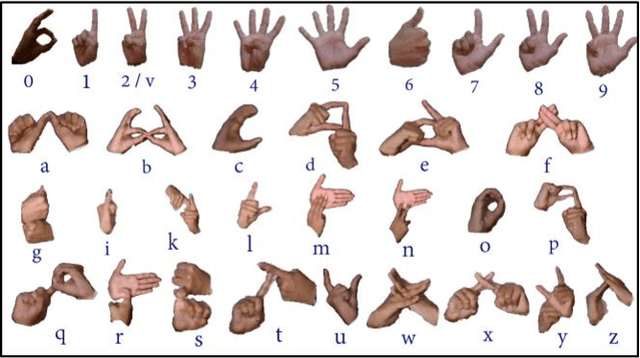

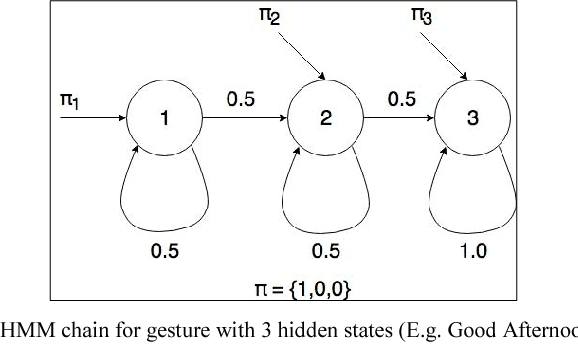

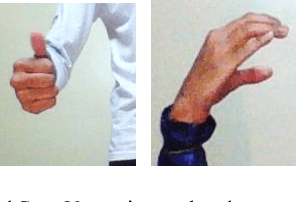

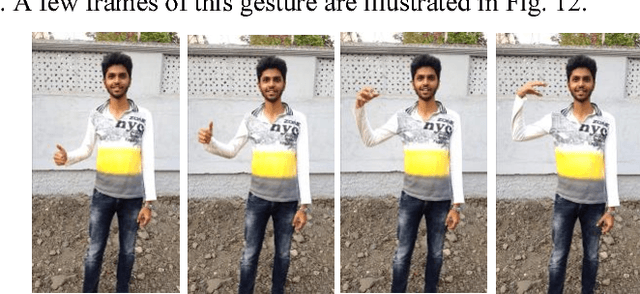

Abstract: This paper presents a system which can recognise hand poses and gestures from the Indian Sign Language (ISL) in real-time using grid-based features. This system attempts to bridge the communication gap between the hearing and speech impaired and the rest of society. The existing solutions either provide relatively low accuracy or do not work in real-time. This system provides good results on both parameters. It can identify 33 hand poses and some gestures from the ISL. Sign Language is captured from a smartphone camera and its frames are transmitted to a remote server for processing. The use of any external hardware (such as gloves or the Microsoft Kinect sensor) is avoided, making it user-friendly. Techniques such as Face detection, Object stabilisation and Skin Colour Segmentation are used for hand detection and tracking. The image is further subjected to a Grid-based Feature Extraction technique which represents the hand's pose in the form of a Feature Vector. Hand poses are then classified using the k-Nearest Neighbours algorithm. For gesture classification, on the other hand, the observation sequences of motion and intermediate hand poses are fed to Hidden Markov Model chains corresponding to the 12 pre-selected gestures defined in ISL. Using this methodology, the system is able to achieve an accuracy of 99.7% for static hand poses, and an accuracy of 97.23% for gesture recognition.

* 9 pages
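
The hand-pose pipeline in the abstract above (a grid-based feature vector followed by k-Nearest Neighbours) might look roughly like the sketch below. The abstract does not define the grid feature, so this sketch assumes each feature is the fraction of hand pixels in one cell of a binary hand mask. The names `grid_features` and `knn_predict`, the grid size, and the Euclidean distance are likewise illustrative assumptions.

```python
import numpy as np
from collections import Counter

def grid_features(mask, grid=4):
    """Split a binary hand mask into grid x grid cells and return the
    fraction of hand pixels per cell as a flat feature vector.

    Assumes the mask is at least `grid` pixels in each dimension.
    The per-cell fraction is an assumed feature definition.
    """
    mask = np.asarray(mask, dtype=float)
    rows = np.array_split(np.arange(mask.shape[0]), grid)
    cols = np.array_split(np.arange(mask.shape[1]), grid)
    return np.array([mask[np.ix_(r, c)].mean() for r in rows for c in cols])

def knn_predict(train_x, train_y, query, k=3):
    """Return the majority label among the k training vectors closest
    to `query` by Euclidean distance."""
    dists = np.linalg.norm(np.asarray(train_x, dtype=float) - query, axis=1)
    nearest = np.argsort(dists)[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]
```

A mask would be converted with `grid_features` and then passed as `query` to `knn_predict`, together with feature vectors and labels from previously labelled poses. The Hidden Markov Model stage used for gestures is not covered by this sketch.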

A Comprehensive Survey of Machine Learning Based Localization with Wireless Signals

Dec 21, 2020

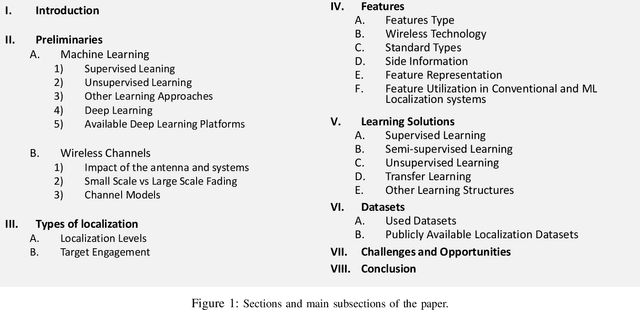

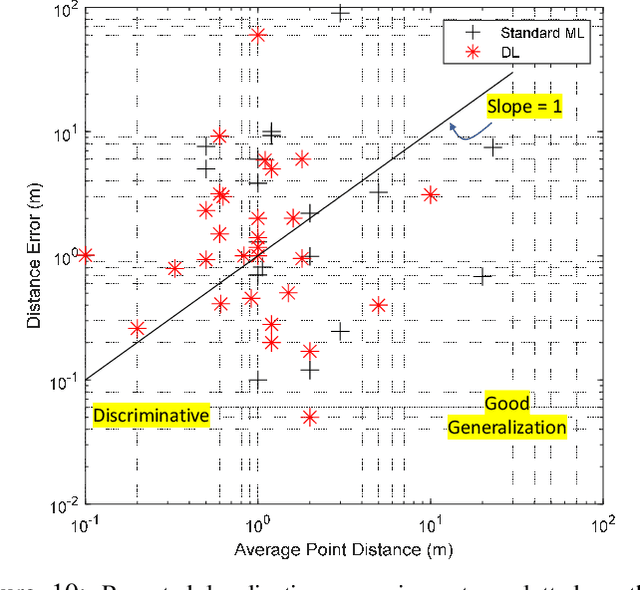

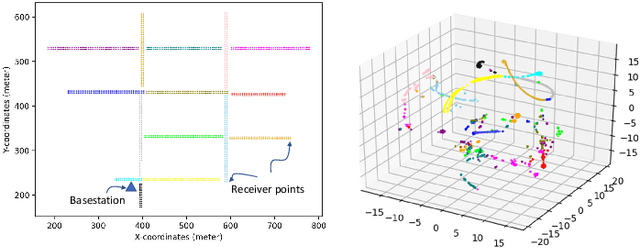

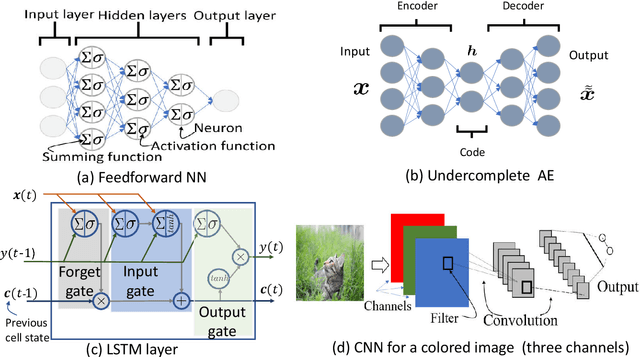

Abstract: The last few decades have witnessed a growing interest in location-based services. Localization systems based on Radio Frequency (RF) signals have proven effective for both indoor and outdoor applications. However, challenges remain with respect to both the complexity and the accuracy of such systems. Machine Learning (ML) is one of the most promising methods for mitigating these problems, as ML (especially deep learning) offers powerful practical data-driven tools that can be integrated into localization systems. In this paper, we provide a comprehensive survey of ML-based localization solutions that use RF signals. The survey spans different aspects, ranging from the system architectures, to the input features, the ML methods, and the datasets. A main point of the paper is the interaction between the domain knowledge arising from the physics of localization systems, and the various ML approaches. Besides the ML methods, the utilized input features play a major role in shaping the localization solution; we present a detailed discussion of the different features and what could influence them, be it the underlying wireless technology or standards or the preprocessing techniques. A detailed discussion is dedicated to the different ML methods that have been applied to localization problems, discussing the underlying problem and the solution structure. Furthermore, we summarize the different ways the datasets were acquired, and then list the publicly available ones. Overall, the survey categorizes and partly summarizes insights from almost 400 papers in this field. This survey is self-contained, as we provide a concise review of the main ML and wireless propagation concepts, which should help researchers in either field navigate the surveyed solutions and the suggested open problems.
