Vanessa Echeverria

From Complexity to Parsimony: Integrating Latent Class Analysis to Uncover Multimodal Learning Patterns in Collaborative Learning

Nov 23, 2024

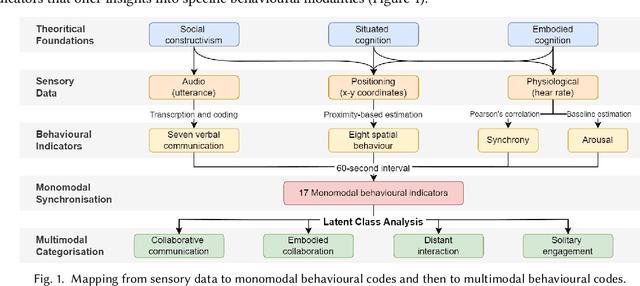

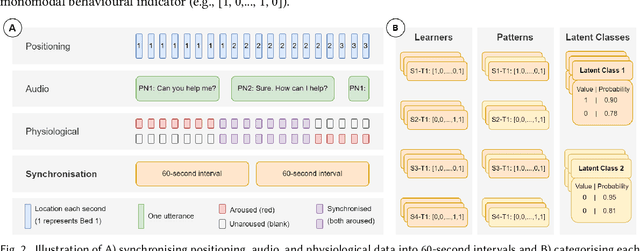

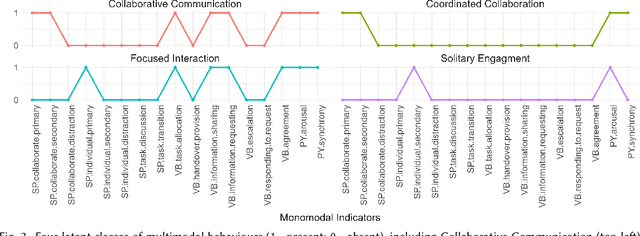

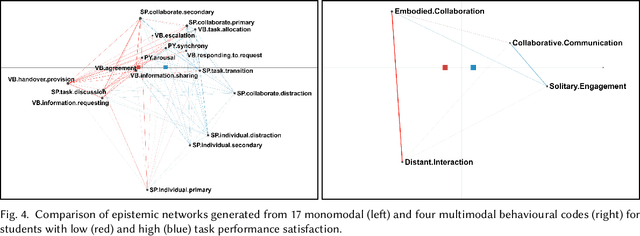

Abstract: Multimodal Learning Analytics (MMLA) leverages advanced sensing technologies and artificial intelligence to capture complex learning processes, but integrating diverse data sources into cohesive insights remains challenging. This study introduces a novel methodology that integrates latent class analysis (LCA) into MMLA to map monomodal behavioural indicators onto parsimonious multimodal ones. In a high-fidelity healthcare simulation context, we collected positional, audio, and physiological data, from which we derived 17 monomodal indicators. LCA identified four distinct latent classes (Collaborative Communication, Embodied Collaboration, Distant Interaction, and Solitary Engagement), each capturing a unique pattern of monomodal indicators. Epistemic network analysis comparing these multimodal indicators with the original monomodal indicators showed that the multimodal approach was more parsimonious while offering higher explanatory power regarding students' task and collaboration performance. The findings highlight the potential of LCA to simplify the analysis of complex multimodal data while capturing nuanced, cross-modal behaviours, offering actionable insights for educators and informing the design of collaborative learning interventions. This study proposes a pathway for advancing MMLA, making it more parsimonious and manageable and aligning it with the principles of learner-centred education.
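The abstract does not state how the latent class model was estimated. The sketch below is only an illustration of the general technique: a standard latent class model fitted by expectation-maximisation under local independence. It assumes binary indicators (for example, whether a student was speaking or co-located in a given time window), uses six synthetic indicators rather than the paper's 17, and selects the number of classes by BIC. The names `fit_lca`, `_e_step`, `_m_step` and `bic`, the synthetic data, and the BIC criterion are all hypothetical choices and should not be read as the authors' pipeline.

```python
import numpy as np


def _e_step(X, pi, theta):
    """Posterior class memberships and log-likelihood under local independence."""
    # log P(x_i, class k) = log pi_k + sum_j [x_ij log theta_kj + (1 - x_ij) log(1 - theta_kj)]
    log_joint = X @ np.log(theta).T + (1.0 - X) @ np.log(1.0 - theta).T + np.log(pi)
    # Normalise per observation in log space for numerical stability
    log_norm = np.logaddexp.reduce(log_joint, axis=1, keepdims=True)
    resp = np.exp(log_joint - log_norm)
    return resp, float(log_norm.sum())


def _m_step(X, resp, eps=1e-6):
    """Re-estimate class prevalences and item-response probabilities."""
    nk = resp.sum(axis=0)                      # expected class sizes
    pi = nk / X.shape[0]
    theta = (resp.T @ X) / nk[:, None]         # P(indicator j = 1 | class k)
    return pi, np.clip(theta, eps, 1.0 - eps)  # keep logs finite


def fit_lca(X, n_classes, n_iter=500, tol=1e-8, seed=0):
    """Fit a latent class model to a binary matrix X (observations x indicators)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    pi = np.full(n_classes, 1.0 / n_classes)
    theta = rng.uniform(0.25, 0.75, size=(n_classes, X.shape[1]))
    prev_ll = -np.inf
    for _ in range(n_iter):
        resp, ll = _e_step(X, pi, theta)
        if ll - prev_ll < tol:   # EM log-likelihood has stopped improving
            break
        prev_ll = ll
        pi, theta = _m_step(X, resp)
    else:
        # Iteration budget exhausted: refresh posteriors for the final parameters
        resp, ll = _e_step(X, pi, theta)
    return pi, theta, resp, ll


def bic(ll, n_obs, n_items, n_classes):
    """BIC with (K - 1) free prevalences plus K * J item probabilities."""
    n_params = (n_classes - 1) + n_classes * n_items
    return -2.0 * ll + n_params * np.log(n_obs)


if __name__ == "__main__":
    # Hypothetical synthetic data: two planted classes over six binary indicators
    rng = np.random.default_rng(42)
    truth = rng.integers(0, 2, size=400)
    X = (rng.uniform(size=(400, 6)) < np.where(truth[:, None] == 0, 0.9, 0.1)).astype(float)
    for k in (1, 2, 3):
        _, _, _, ll = fit_lca(X, k)
        print(k, round(bic(ll, X.shape[0], X.shape[1], k), 1))
```

In this sketch, the model with the lowest BIC would be retained, and each observation would be assigned to its most probable class with `resp.argmax(axis=1)`. That assignment is one way a set of monomodal indicators can collapse into a single categorical multimodal indicator.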

Generative AI in Higher Education: A Global Perspective of Institutional Adoption Policies and Guidelines

May 20, 2024

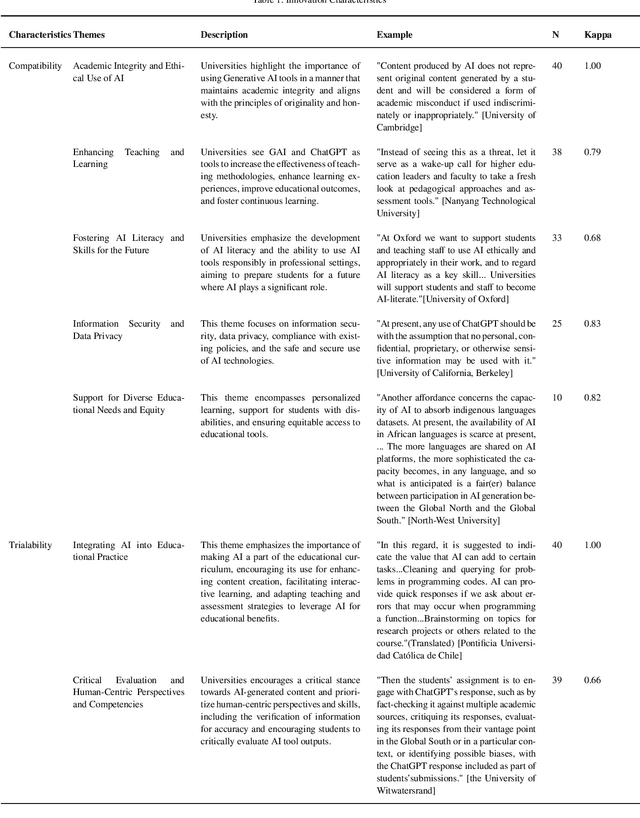

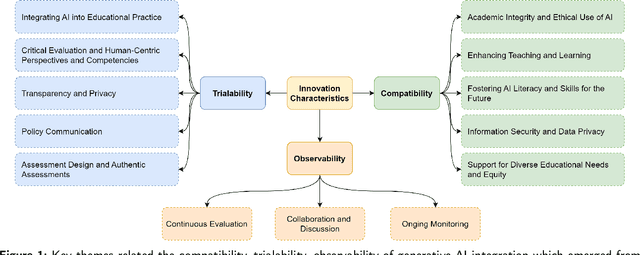

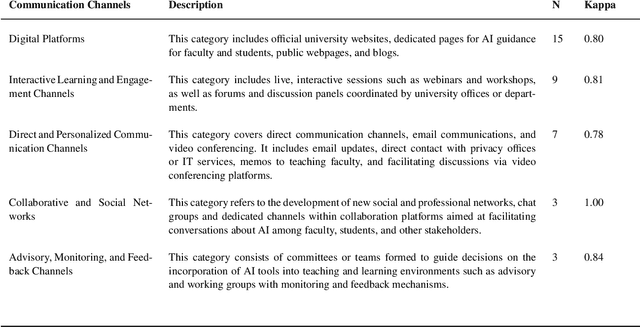

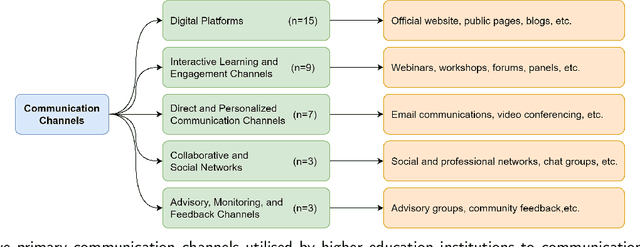

Abstract: Integrating generative AI (GAI) into higher education is crucial for preparing a future generation of GAI-literate students. Yet a thorough understanding of institutional adoption policies worldwide is still lacking: most prior studies have focused on the Global North and on the promises and challenges of GAI, without a theoretical lens. This study uses the Diffusion of Innovations Theory to examine GAI adoption strategies at 40 universities across six global regions. It explores the characteristics of GAI as an innovation, including compatibility, trialability, and observability, and analyses the communication channels and the roles and responsibilities outlined in university policies and guidelines. The findings reveal a proactive approach by universities towards GAI integration, emphasizing academic integrity, the enhancement of teaching and learning, and equity. Despite this cautious yet optimistic stance, a comprehensive policy framework is still needed to evaluate the impacts of GAI integration and to establish effective communication strategies that foster broader stakeholder engagement. The study highlights the importance of clearly defined roles and responsibilities among faculty, students, and administrators for successful GAI integration, supporting a collaborative model for navigating the complexities of GAI in education. It offers policymakers insights for crafting detailed strategies for GAI integration.

Human-Centred Learning Analytics and AI in Education: a Systematic Literature Review

Dec 20, 2023

Abstract: The rapid expansion of Learning Analytics (LA) and Artificial Intelligence in Education (AIED) offers new scalable, data-intensive systems but also raises concerns about data privacy and agency. Excluding stakeholders such as students and teachers from the design process may lead to mistrust and poorly aligned tools. Despite a shift towards human-centred design in recent LA and AIED research, gaps remain in our understanding of the importance of human control, safety, reliability, and trustworthiness in the design and implementation of these systems. We conducted a systematic literature review to explore these concerns and gaps. We analysed 108 papers to provide insights into i) the current state of human-centred LA/AIED research; ii) the extent to which educational stakeholders have contributed to the design process of human-centred LA/AIED systems; iii) the current balance between human control and computer automation in such systems; and iv) the extent to which safety, reliability, and trustworthiness have been considered in the literature. Results indicate some consideration of human control in LA/AIED system design but limited involvement of end users in the actual design. Based on these findings, we recommend: 1) carefully balancing stakeholders' involvement in designing and deploying LA/AIED systems across all design phases; 2) actively involving target end users, especially students, to delineate the balance between human control and automation; and 3) exploring safety, reliability, and trustworthiness as principles in future human-centred LA/AIED systems.

Human-AI Collaboration in Thematic Analysis using ChatGPT: A User Study and Design Recommendations

Nov 07, 2023

Abstract: Generative artificial intelligence (GenAI) holds promise for advancing human-AI collaboration in qualitative research. However, existing work has focused on conventional machine-learning and pattern-based AI systems, and little is known about how researchers interact with GenAI in qualitative research. This work examines researchers' perceptions of their collaboration with GenAI, specifically ChatGPT. Through a user study involving ten qualitative researchers, we found ChatGPT to be a valuable collaborator for thematic analysis: it enhanced coding efficiency, aided initial data exploration, offered granular quantitative insights, and assisted comprehension for non-native speakers and non-experts. Yet concerns persist about its trustworthiness and accuracy, reliability and consistency, limited contextual understanding, and broader acceptance within the research community. We contribute five actionable design recommendations to foster effective human-AI collaboration: incorporating transparent explanatory mechanisms, enhancing interface and integration capabilities, prioritising contextual understanding and customisation, embedding human-AI feedback loops and iterative functionality, and strengthening trust through validation mechanisms.
