Van-Thong Huynh

Lightweight Models for Emotional Analysis in Video

Mar 13, 2025

Abstract: In this study, we present an approach for efficient spatiotemporal feature extraction using MobileNetV4 and a multi-scale 3D MLP-Mixer-based temporal aggregation module. MobileNetV4, with its Universal Inverted Bottleneck (UIB) blocks, serves as the backbone for extracting hierarchical feature representations from input image sequences, ensuring both computational efficiency and rich semantic encoding. To capture temporal dependencies, we introduce a three-level MLP-Mixer module, which processes spatial features at multiple resolutions while maintaining structural integrity. Experimental results on the ABAW 8th competition demonstrate the effectiveness of our approach, showing promising performance in affective behavior analysis. By integrating an efficient vision backbone with a structured temporal modeling mechanism, the proposed framework achieves a balance between computational efficiency and predictive accuracy, making it well-suited for real-time applications in mobile and embedded computing environments.

Facial Expression Classification using Fusion of Deep Neural Network in Video for the 3rd ABAW3 Competition

Apr 08, 2022

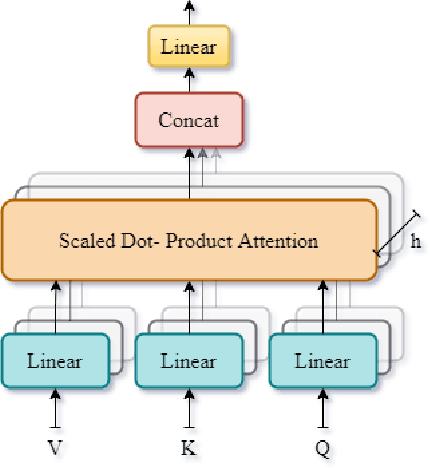

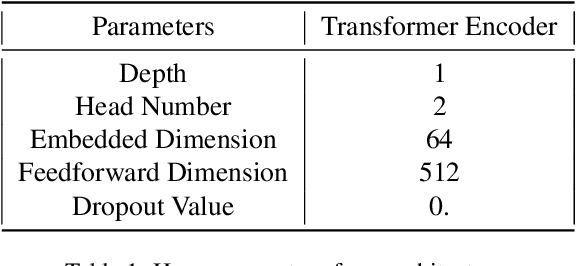

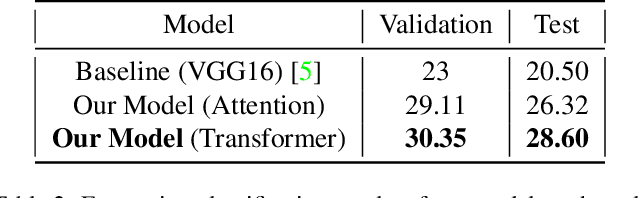

Abstract: For computers to recognize human emotions, expression classification is an important problem in human-computer interaction. In the 3rd Affective Behavior Analysis In-The-Wild competition, the expression classification task covers eight classes, including the six basic facial expressions, from videos. In this paper, we employ a transformer mechanism to encode robust representations from the backbone. Fusion of these robust representations plays an important role in the expression classification task. Our approach achieves $F_1$ scores of 30.35\% and 28.60\% on the validation set and the test set, respectively. This result shows the effectiveness of the proposed architecture on the Aff-Wild2 dataset.
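
For readers unfamiliar with the metric, the following is a minimal sketch of a class-averaged $F_1$ score for a multi-class task such as the eight-class expression problem. It assumes macro averaging (an unweighted mean of per-class $F_1$); the abstract does not state which averaging was used, and the function name `macro_f1` and the integer label encoding are illustrative choices, not the authors' code.

```python
def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of per-class F1 scores (macro averaging is an assumption).

    Labels are assumed to be integers in the range 0..num_classes-1.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    per_class = []
    for c in range(num_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); a class that never appears in either
        # the labels or the predictions is scored 0.0 in this sketch.
        per_class.append(2 * tp / denom if denom else 0.0)

    return sum(per_class) / num_classes
```

Under macro averaging, rare expression classes count as much as frequent ones, so a model that ignores minority classes is penalized even when its overall accuracy is high.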

An Ensemble Approach for Facial Expression Analysis in Video

Mar 24, 2022

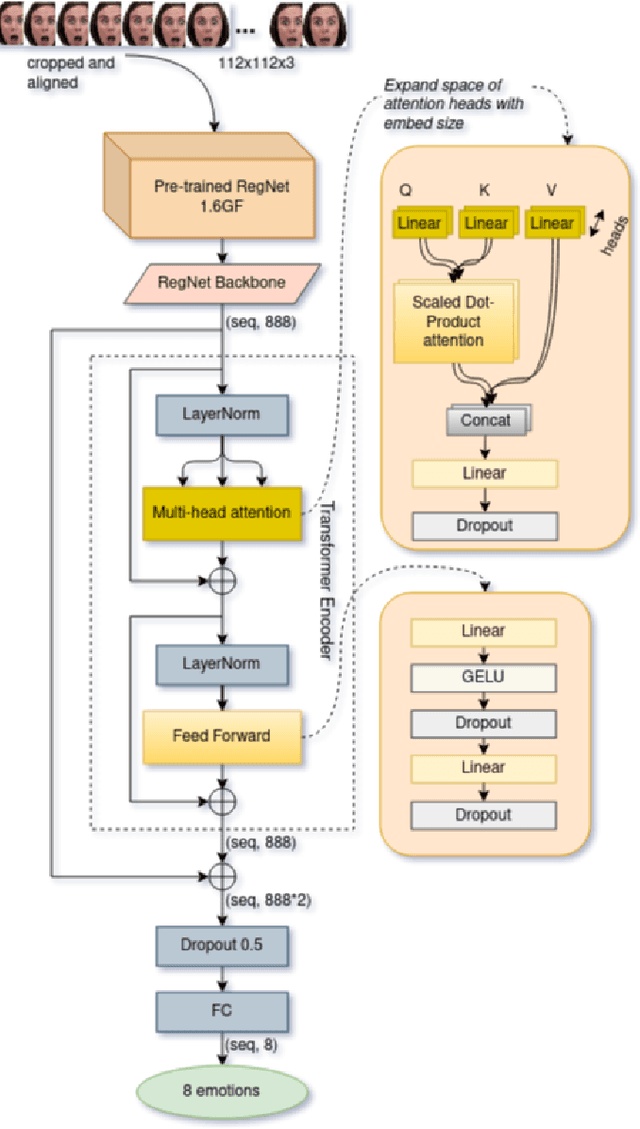

Abstract: Human emotion recognition contributes to the development of human-computer interaction. Machines that understand human emotions in the real world will significantly contribute to life in the future. This paper addresses the Affective Behavior Analysis in-the-wild (ABAW3) 2022 challenge, focusing on valence-arousal estimation and action unit detection. For valence-arousal estimation, we use two stages: multi-model feature creation, followed by temporal learning to predict valence-arousal. First, we create new features by combining a Gated Recurrent Unit (GRU) and a Transformer on Regular Networks (RegNet) features extracted from the image. Next, a GRU combined with Local Attention predicts valence-arousal. The Concordance Correlation Coefficient (CCC) was used to evaluate the model.
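
As a brief illustration of the evaluation metric named above, the sketch below computes the Concordance Correlation Coefficient using its standard definition, $2\,\mathrm{cov}(x, y) / (\sigma_x^2 + \sigma_y^2 + (\mu_x - \mu_y)^2)$, with population (divide-by-$n$) statistics. The function name `concordance_cc` is hypothetical, and this is not taken from the authors' implementation.

```python
from statistics import fmean


def concordance_cc(pred, target):
    """Concordance Correlation Coefficient between two equal-length sequences.

    Uses population (divide-by-n) variance and covariance.
    """
    if len(pred) != len(target) or len(pred) < 2:
        raise ValueError("inputs must have the same length (at least 2)")

    n = len(pred)
    mean_p, mean_t = fmean(pred), fmean(target)
    var_p = sum((p - mean_p) ** 2 for p in pred) / n
    var_t = sum((t - mean_t) ** 2 for t in target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target)) / n

    denom = var_p + var_t + (mean_p - mean_t) ** 2
    if denom == 0:
        # Both sequences are constant and identical; CCC is undefined here.
        raise ValueError("CCC is undefined for identical constant sequences")
    return 2 * cov / denom
```

Unlike the Pearson correlation, CCC penalizes systematic offsets: predictions `[2, 3, 4]` against targets `[1, 2, 3]` are perfectly correlated, yet the mean shift of one brings the CCC down to 4/7.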
