Valeria Efimova

Image Vectorization: a Review

Jun 10, 2023

Abstract: Many diffusion and autoregressive models now show impressive results for generating images from text and other input domains. However, these methods are not intended for ultra-high-resolution image synthesis. Vector graphics do not have this limitation, so generating images in this format looks very promising. Instead of generating vector images directly, one can first synthesize a raster image and then apply vectorization. Vectorization is the process of converting a raster image into a similar vector image using primitive shapes. Besides being similar, the generated vector image is also required to contain the minimum number of shapes for rendering. In this paper, we focus specifically on machine learning-compatible vectorization methods. We consider the Mang2Vec, Deep Vectorization of Technical Drawings, DiffVG, and LIVE models. We also provide a brief overview of existing online methods. We also recall other algorithmic methods, as well as the Im2Vec and ClipGEN models, but they do not participate in the comparison, since either there is no open implementation of these methods or their official implementations do not work correctly. Our research shows that, despite the ability to directly specify the number and type of shapes, existing machine learning methods take a very long time to run and do not accurately recreate the original image. We believe that there is no fast universal automatic approach and that human control is required for every method.

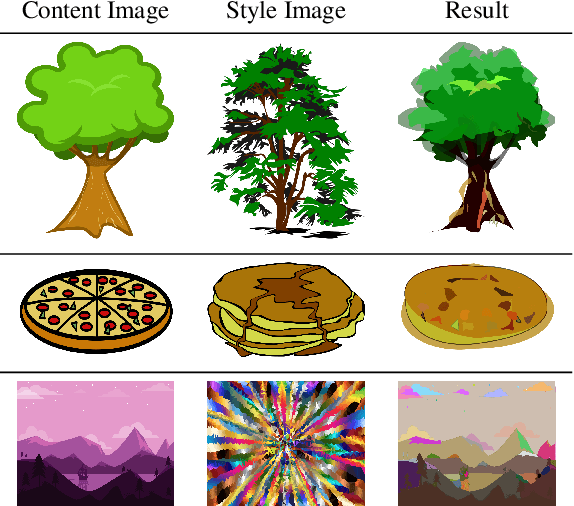

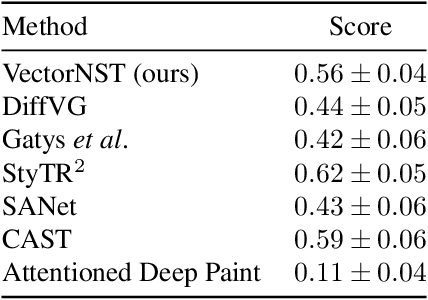

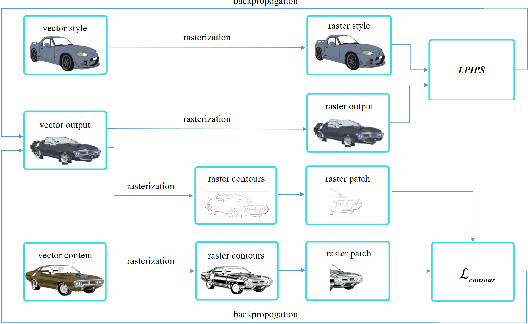

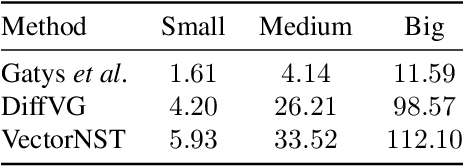

Neural Style Transfer for Vector Graphics

Mar 06, 2023

Abstract: Neural style transfer draws researchers' attention, but the interest focuses on bitmap images. Various models have been developed for bitmap image generation, both online and offline, with arbitrary and pre-trained styles. However, style transfer between vector images has barely been considered. Our research shows that applying standard content and style losses changes the drawing style of a vector image only insignificantly, because the structure of vector primitives differs a lot from that of pixels. To handle this problem, we introduce new loss functions. We also develop a new method based on differentiable rasterization that uses these loss functions and can change the color and shape parameters of the content image to match the drawing of the style image. Qualitative experiments demonstrate the effectiveness of the proposed VectorNST method compared with the state-of-the-art neural style transfer approaches for bitmap images and with the only existing approach for stylizing vector images, DiffVG. Although the proposed model does not achieve the quality and smoothness of style transfer between bitmap images, we consider our work an important early step in this area. VectorNST code and demo service are available at https://github.com/IzhanVarsky/VectorNST.

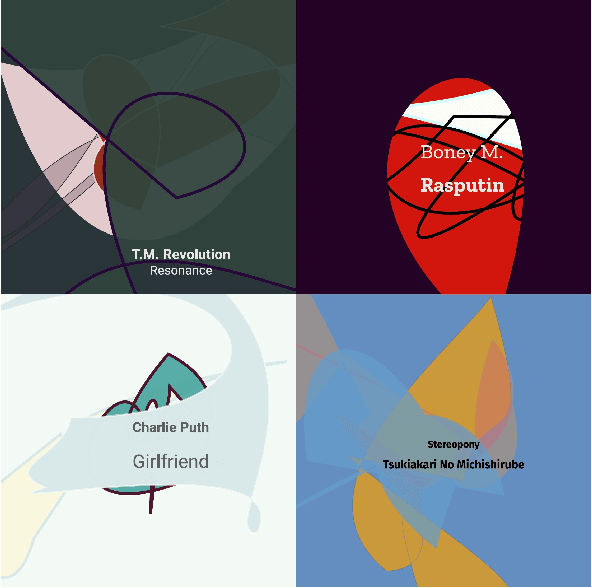

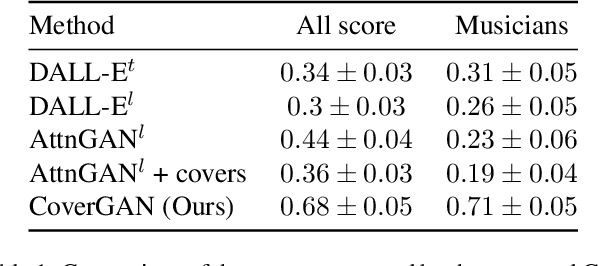

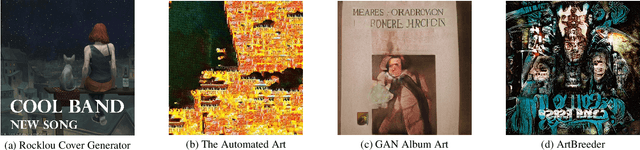

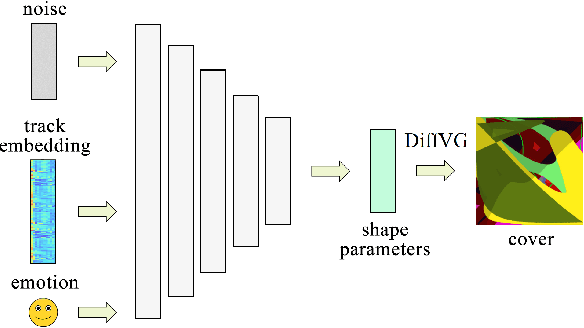

Conditional Vector Graphics Generation for Music Cover Images

May 15, 2022

Abstract: Generative Adversarial Networks (GANs) have motivated rapid growth in the domain of computer image synthesis. As almost all existing image synthesis algorithms treat an image as a pixel matrix, high-resolution image synthesis is complicated. Vector images can be a good alternative. However, they belong to a highly sophisticated parametric space, which restricts the use of GANs for synthesizing vector graphics. In this paper, we consider a specific application domain that softens this restriction dramatically, allowing the use of vector image synthesis. Music cover images should meet the requirements of Internet streaming services and printing standards, which imply high resolution of graphic materials without any additional requirements on the content of such images. Existing music cover image generation services do not analyze the tracks themselves; some consider only genre tags. To generate music covers as vector images that reflect the music and consist of simple geometric objects, we suggest a GAN-based algorithm called CoverGAN. The resulting images are assessed by their correspondence to the music, in comparison with AttnGAN and DALL-E text-to-image generation from the title or lyrics. Moreover, the significance of the patterns found by CoverGAN has been evaluated in terms of the correspondence of the generated cover images to the musical tracks. Listeners evaluate the music covers generated by the proposed algorithm as quite satisfactory and corresponding to the tracks. Music cover image generation code and demo are available at https://github.com/IzhanVarsky/CoverGAN.

Reinforcement-based Simultaneous Algorithm and its Hyperparameters Selection

Nov 07, 2016

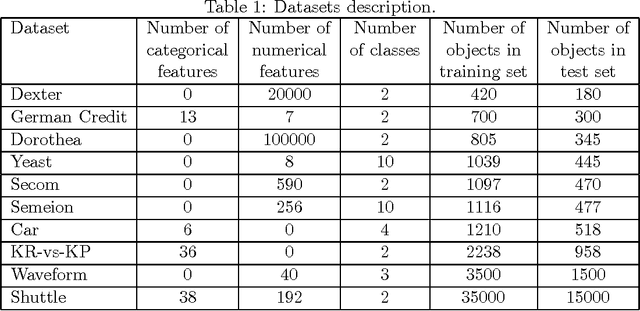

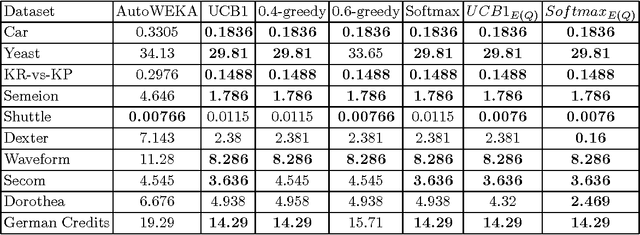

Abstract: Many algorithms for data analysis exist, especially for classification problems. To solve a data analysis problem, a proper algorithm should be chosen and its hyperparameters should be selected. In this paper, we present a new method for the simultaneous selection of an algorithm and its hyperparameters. To do so, we reduce this problem to the multi-armed bandit problem. We consider an algorithm as an arm, and a hyperparameter search for that algorithm during a fixed time as the corresponding arm play. We also suggest a problem-specific reward function. We performed experiments on 10 real datasets and compared the suggested method with the existing one implemented in Auto-WEKA. The results show that our method is significantly better in most cases and never worse than Auto-WEKA.
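The reduction described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the abstract names neither the bandit strategy nor the reward function, so the sketch assumes UCB1 for arm selection and uses the best score found during a play as the reward. The three "algorithms" (`svm`, `tree`, `knn`) and the helper names `make_arm`, `play_arm`, and `select_algorithm` are hypothetical, and each algorithm is simulated by a toy score curve over a single hyperparameter; a fixed number of random-search trials stands in for the fixed time budget.

```python
import math
import random


def make_arm(peak, best_x):
    """Hypothetical 'algorithm': a toy validation score over one hyperparameter x in [0, 1]."""
    def score(x):
        return peak - 0.5 * (x - best_x) ** 2
    return score


def play_arm(score_fn, rng, trials=5):
    """One arm play: a fixed-budget random search over the hyperparameter.

    Assumption: the reward is the best score found during this play.
    """
    return max(score_fn(rng.uniform(0.0, 1.0)) for _ in range(trials))


def select_algorithm(arms, rounds=60, seed=0):
    """Choose an algorithm with UCB1 (an assumed strategy, not taken from the abstract)."""
    rng = random.Random(seed)
    names = list(arms)
    plays = {name: 0 for name in names}
    total = {name: 0.0 for name in names}
    best = {name: float("-inf") for name in names}

    for t in range(1, rounds + 1):
        untried = [name for name in names if plays[name] == 0]
        if untried:
            # Play every arm once before trusting the confidence bounds.
            name = untried[0]
        else:
            # UCB1: mean reward plus an exploration bonus for rarely played arms.
            name = max(
                names,
                key=lambda n: total[n] / plays[n]
                + math.sqrt(2.0 * math.log(t) / plays[n]),
            )
        reward = play_arm(arms[name], rng)
        plays[name] += 1
        total[name] += reward
        best[name] = max(best[name], reward)

    # Report the algorithm whose hyperparameter search found the best score.
    winner = max(names, key=lambda n: best[n])
    return winner, best, plays


arms = {
    "svm": make_arm(peak=0.95, best_x=0.3),
    "tree": make_arm(peak=0.6, best_x=0.6),
    "knn": make_arm(peak=0.5, best_x=0.5),
}
winner, best, plays = select_algorithm(arms)
print(winner, plays)
```

In this toy setup the budget is spent mostly on the arm whose plays keep returning high rewards, which is the intended effect of treating algorithm selection and hyperparameter search as a single bandit problem.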
