Valentina Gregori

Visual Cues to Improve Myoelectric Control of Upper Limb Prostheses

Aug 29, 2017

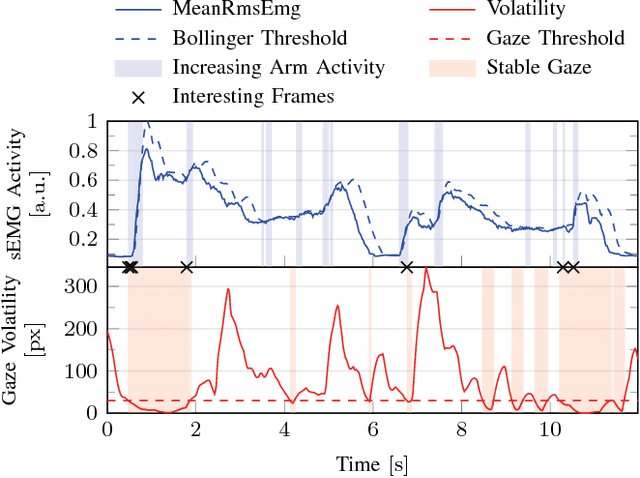

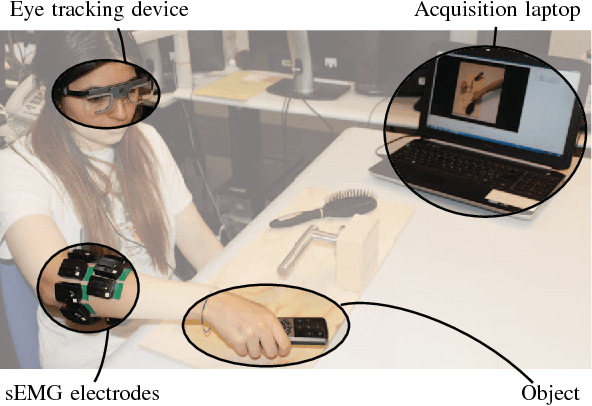

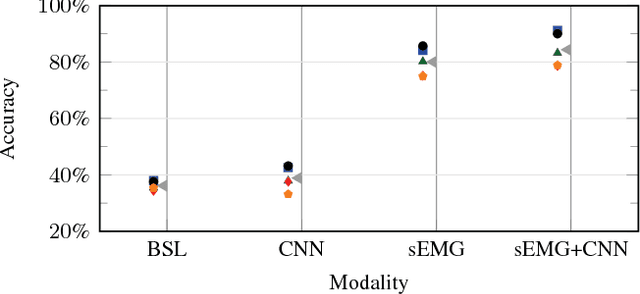

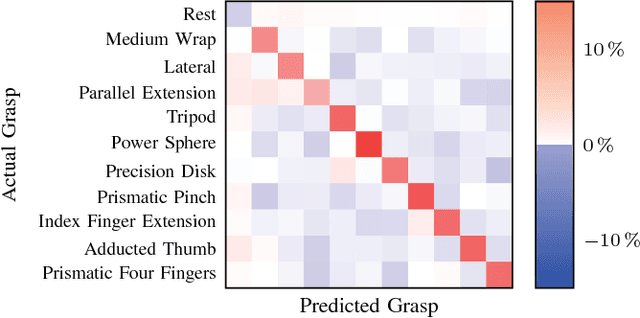

Abstract: The instability of myoelectric signals over time complicates their use to control highly articulated prostheses. To address this problem, studies have tried to combine surface electromyography with modalities that are less affected by the amputation and environment, such as accelerometry or gaze information. In the latter case, the hypothesis is that a subject looks at the object he or she intends to manipulate and that knowing this object's affordances allows the set of possible grasps to be constrained. In this paper, we develop an automated way to detect stable fixations and show that gaze information is indeed helpful in predicting hand movements. In our multimodal approach, we automatically detect stable gazes and segment an object of interest around the subject's fixation in the visual frame. The patch extracted around this object is subsequently fed through an off-the-shelf deep convolutional neural network to obtain a high-level feature representation, which is then combined with traditional surface electromyography in the classification stage. Tests have been performed on a dataset acquired from five intact subjects who performed ten types of grasps on various objects as well as in a functional setting. They show that the addition of gaze information increases the classification accuracy considerably. Further analysis demonstrates that this improvement is consistent for all grasps and concentrated during the movement onset and offset.
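The abstract does not say how stable fixations are detected, so the following is only an illustrative sketch of one common technique, dispersion-threshold identification (I-DT), and should not be read as the authors' method. The function names, the pixel threshold `max_dispersion`, and the minimum window length `min_samples` are all assumptions chosen for the example.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # gaze position in frame coordinates (assumed pixels)


def dispersion(window: List[Point]) -> float:
    """Spread of a gaze window: horizontal range plus vertical range."""
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(
    gaze: List[Point],
    max_dispersion: float = 25.0,  # hypothetical threshold, not from the paper
    min_samples: int = 5,          # hypothetical minimum fixation length
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, of stable fixations."""
    fixations = []
    start = 0
    n = len(gaze)
    while start + min_samples <= n:
        end = start + min_samples
        if dispersion(gaze[start:end]) <= max_dispersion:
            # Grow the window while the gaze stays within the threshold.
            while end < n and dispersion(gaze[start:end + 1]) <= max_dispersion:
                end += 1
            fixations.append((start, end))
            start = end
        else:
            # Window is too spread out: slide forward by one sample.
            start += 1
    return fixations


def fixation_center(gaze: List[Point], span: Tuple[int, int]) -> Point:
    """Mean gaze position of a fixation, e.g. as the center of an image patch."""
    window = gaze[span[0]:span[1]]
    return (
        sum(p[0] for p in window) / len(window),
        sum(p[1] for p in window) / len(window),
    )


if __name__ == "__main__":
    samples = [(100.0, 100.0)] * 6 + [(300.0, 300.0), (400.0, 50.0)] + [(200.0, 200.0)] * 5
    for span in detect_fixations(samples):
        print(span, fixation_center(samples, span))
```

In a pipeline like the one described, the center of each detected fixation could serve as the seed for segmenting the object of interest; the segmentation and feature extraction steps are outside the scope of this sketch.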

Adaptive Learning to Speed-Up Control of Prosthetic Hands: a Few Things Everybody Should Know

Feb 27, 2017

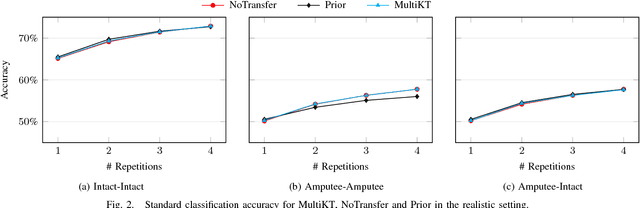

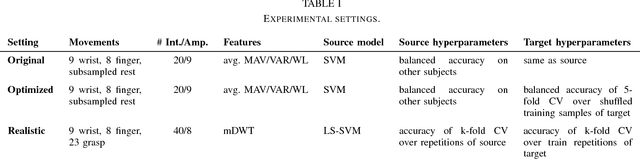

Abstract: A number of studies have proposed to use domain adaptation to reduce the training efforts needed to control an upper-limb prosthesis by exploiting pre-trained models from prior subjects. These studies generally reported impressive reductions in the required number of training samples to achieve a certain level of accuracy for intact subjects. We further investigate two popular methods in this field to verify whether this result equally applies to amputees. Our findings show instead that this improvement can largely be attributed to a suboptimal hyperparameter configuration. When hyperparameters are appropriately tuned, the standard approach that does not exploit prior information performs on par with the more complicated transfer learning algorithms. Additionally, earlier studies erroneously assumed that the number of training samples relates proportionally to the efforts required from the subject. However, a repetition of a movement is the atomic unit for subjects, and the total number of repetitions should therefore be used as a reliable measure of training efforts. Even after correcting for this mistake, we do not find any performance increase due to the use of prior models.
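The distinction between samples and repetitions can be made concrete with a small sketch. It assumes each training sample carries a movement label and a repetition identifier; the function names and the data layout are hypothetical and are not taken from the paper.

```python
from typing import Hashable, List, Sequence


def count_repetitions(labels: Sequence[Hashable], repetitions: Sequence[int]) -> int:
    """Training effort measured in distinct (movement, repetition) pairs."""
    return len(set(zip(labels, repetitions)))


def first_repetitions(
    labels: Sequence[Hashable], repetitions: Sequence[int], n_reps: int
) -> List[int]:
    """Indices of samples from the first n_reps repetitions of each movement."""
    seen = {}  # movement -> repetition ids in order of first appearance
    keep = []
    for i, (movement, rep) in enumerate(zip(labels, repetitions)):
        reps = seen.setdefault(movement, [])
        if rep not in reps:
            reps.append(rep)
        if reps.index(rep) < n_reps:
            keep.append(i)
    return keep


if __name__ == "__main__":
    labels = ["grasp", "grasp", "grasp", "grasp", "rest", "rest", "rest"]
    reps = [0, 0, 1, 1, 0, 1, 1]
    print(len(labels), "samples,", count_repetitions(labels, reps), "repetitions")
    print("samples kept with one repetition per movement:",
          first_repetitions(labels, reps, n_reps=1))
```

Subsampling a training set at the level of whole repetitions, rather than drawing individual samples at random, keeps the reported training budget aligned with what the subject actually had to perform.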

Leveraging over intact priors for boosting control and dexterity of prosthetic hands by amputees

Aug 26, 2016

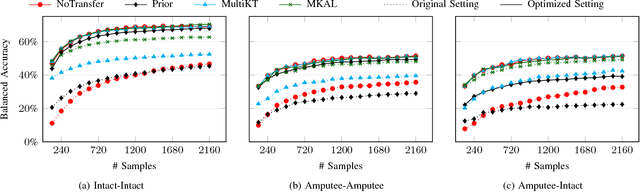

Abstract: Non-invasive myoelectric prostheses require a long training time to obtain satisfactory control dexterity. These training times could possibly be reduced by leveraging the training efforts of previous subjects. So-called domain adaptation algorithms formalize this strategy and have indeed been shown to significantly reduce the amount of training data required for myoelectric movement classification in intact subjects. It is not clear, however, whether these results also extend to amputees and, if so, whether prior information from amputees and intact subjects is equally useful. To address this question, we evaluated several domain adaptation algorithms on data coming from both amputees and intact subjects. Our findings indicate that: (1) the use of previous experience from other subjects allows us to reduce the training time by about an order of magnitude; (2) this improvement holds regardless of whether an amputee exploits previous information from other amputees or from intact subjects.

Leveraging Over Priors for Boosting Control of Prosthetic Hands

May 24, 2016

Abstract: The electromyography (EMG) signal is the electrical activity produced by skeletal muscle cells to generate movement. A non-invasive prosthetic hand works with several electrodes, placed on the stump of an amputee, that record this signal. To support control of the prosthesis, the EMG signal is analyzed with machine learning algorithms to decide which movement the subject intends to perform. To obtain meaningful control of the prosthesis and avoid mismatches between desired and performed movements, a long training period is needed when traditional machine learning algorithms (i.e., Support Vector Machines) are used. A current challenge in this field is reducing the time an amputee needs to learn how to use the prosthesis. Recently, several algorithms that exploit a form of prior knowledge have been proposed. In general, we refer to prior knowledge as past experience available in the form of models. In our case, an amputee who attempts to perform some movements with the prosthesis could use experience from different subjects who are already able to perform those movements. The aim of this work is to verify, with a computational investigation, whether this kind of previous experience helps an amputee reduce the training time and improve prosthetic control. Furthermore, we want to understand whether and how the final results change when the previous knowledge comes from intact or from amputated subjects. Our experiments indicate that: (1) the use of experience from other subjects already trained to perform a task allows us to reduce the training time by about an order of magnitude; (2) an amputee learning to use the prosthesis does not appear to reach different results depending on whether he or she exploits previous experience from amputees or from intact subjects.
