Valentin Delchevalerie

Towards Photonic Band Diagram Generation with Transformer-Latent Diffusion Models

Oct 02, 2025

Abstract: Photonic crystals enable fine control over light propagation at the nanoscale, and thus play a central role in the development of photonic and quantum technologies. Photonic band diagrams (BDs) are a key tool for investigating light propagation in such inhomogeneous structured materials. However, computing BDs requires solving Maxwell's equations across many configurations, which makes it numerically expensive, especially when embedded in optimization loops, for example in inverse design. To address this challenge, we introduce the first approach for BD generation based on diffusion models, with the capacity to later generalize and scale to arbitrary three-dimensional structures. Our method couples a transformer encoder, which extracts contextual embeddings from the input structure, with a latent diffusion model that generates the corresponding BD. In addition, we provide insights into why transformers and diffusion models are well suited to capture the complex interference and scattering phenomena inherent to photonics, paving the way for new surrogate modeling strategies in this domain.

On the effectiveness of Rotation-Equivariance in U-Net: A Benchmark for Image Segmentation

Dec 12, 2024

Abstract: Numerous studies have recently focused on incorporating different variations of equivariance in Convolutional Neural Networks (CNNs). In particular, rotation equivariance has gathered significant attention due to its relevance in many applications related to medical imaging, microscopic imaging, satellite imaging, industrial tasks, etc. While prior research has primarily focused on enhancing classification tasks with rotation-equivariant CNNs, their impact on more complex architectures, such as U-Net for image segmentation, remains scarcely explored. Indeed, previous works that integrate rotation equivariance into the U-Net architecture have focused on solving specific applications with a limited scope. In contrast, this paper aims to provide a more exhaustive evaluation of rotation-equivariant U-Nets for image segmentation across a broader range of tasks. We benchmark their effectiveness against standard U-Net architectures, assessing improvements in terms of performance and sustainability (i.e., computational cost). Our evaluation focuses on datasets in which the orientation of the objects of interest is arbitrary (e.g., Kvasir-SEG), but also on more standard segmentation datasets (such as COCO-Stuff), so as to explore the wider applicability of rotation equivariance beyond tasks that are obviously concerned by it. The main contribution of this work is to provide insights into the trade-offs and advantages of integrating rotation equivariance for segmentation tasks.

SO(2) and O(2) Equivariance in Image Recognition with Bessel-Convolutional Neural Networks

Apr 18, 2023

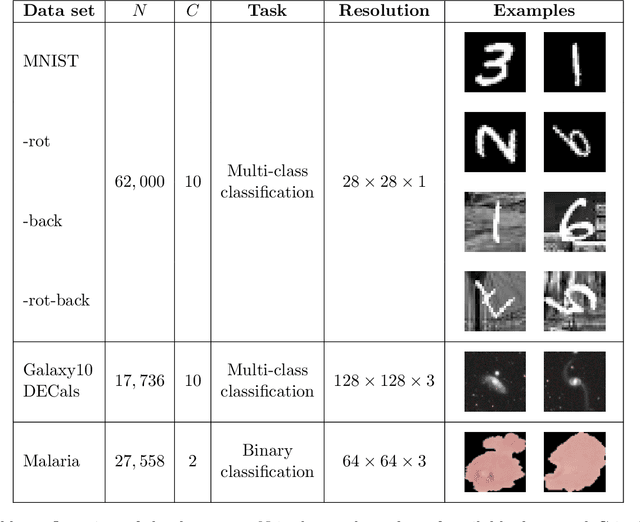

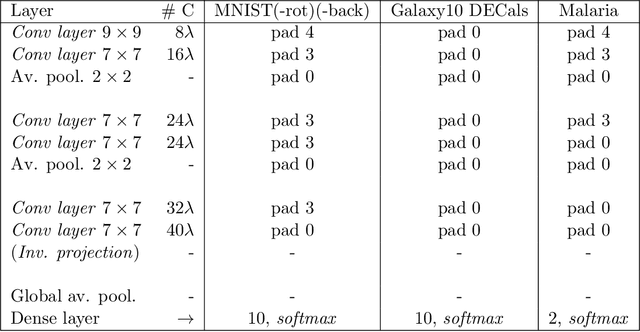

Abstract: For many years, it has been shown how beneficial exploiting equivariances can be when solving image analysis tasks. For example, the superiority of convolutional neural networks (CNNs) over dense networks mainly comes from an elegant exploitation of translation equivariance. Patterns can appear at arbitrary positions, and convolutions take this into account to achieve translation-invariant operations through weight sharing. Nevertheless, images often involve other symmetries that can also be exploited. This is the case for rotations and reflections, which have drawn particular attention and led to the development of multiple equivariant CNN architectures. Among all these methods, Bessel-convolutional neural networks (B-CNNs) exploit a particular decomposition based on Bessel functions to modify the key operation between images and filters and make it equivariant by design to the entire continuous set of planar rotations. In this work, the mathematical developments of B-CNNs are presented along with several improvements, including the incorporation of reflection and multi-scale equivariances. An extensive study is carried out to assess the performance of B-CNNs compared to other methods. Finally, we emphasize the theoretical advantages of B-CNNs by giving more insights and in-depth mathematical details.
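As a small illustration of the translation equivariance mentioned in the abstract above, the following sketch checks numerically that shifting an image and then filtering it gives the same result as filtering and then shifting. It is not the B-CNN construction: the helper `circular_correlate`, the periodic boundary conditions, and the random test data are assumptions chosen only to keep the check exact. The last lines also show that a generic filter does not commute with a 90-degree rotation, which is the gap that rotation-equivariant architectures aim to close.

```python
import numpy as np

def circular_correlate(image, kernel):
    """2D cross-correlation with periodic boundaries (hypothetical helper)."""
    out = np.zeros_like(image, dtype=float)
    kh, kw = kernel.shape
    for i in range(kh):
        for j in range(kw):
            # Rolling by (-i, -j) brings image[y + i, x + j] to position (y, x).
            out += kernel[i, j] * np.roll(image, shift=(-i, -j), axis=(0, 1))
    return out

rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8))
kernel = rng.normal(size=(3, 3))

# Translation: shift-then-filter equals filter-then-shift.
shift = (2, 3)
shifted_then_filtered = circular_correlate(np.roll(image, shift, axis=(0, 1)), kernel)
filtered_then_shifted = np.roll(circular_correlate(image, kernel), shift, axis=(0, 1))
translation_equivariant = bool(np.allclose(shifted_then_filtered, filtered_then_shifted))

# Rotation: with a generic (non-symmetric) kernel the two orders disagree.
rotated_then_filtered = circular_correlate(np.rot90(image), kernel)
filtered_then_rotated = np.rot90(circular_correlate(image, kernel))
rotation_equivariant = bool(np.allclose(rotated_then_filtered, filtered_then_rotated))

print("translation equivariant:", translation_equivariant)
print("rotation equivariant:", rotation_equivariant)
```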

Industrial and Medical Anomaly Detection Through Cycle-Consistent Adversarial Networks

Feb 10, 2023

Abstract: In this study, a new Anomaly Detection (AD) approach for real-world images is proposed. This method leverages the theoretical strengths of unsupervised learning and the data availability of both normal and abnormal classes. AD is often formulated as an unsupervised task, motivated by the frequently imbalanced nature of the datasets as well as the challenge of capturing the entirety of the abnormal class. Such methods rely only on normal images during training, which are meant to be reconstructed, for instance through an autoencoder architecture. However, the information contained in the abnormal data is also valuable for this reconstruction. Indeed, the model would be able to identify its weaknesses by better learning how to transform an abnormal (or normal) image into a normal (or abnormal) image. Each of these tasks could help the entire model to learn with higher precision than a single normal-to-normal reconstruction. To address this challenge, the proposed method utilizes Cycle-Generative Adversarial Networks (Cycle-GANs) for abnormal-to-normal translation. To the best of our knowledge, this is the first time that Cycle-GANs have been studied for this purpose. After an input image has been reconstructed by the normal generator, an anomaly score describes the differences between the input and reconstructed images. Based on a threshold set with a business quality constraint, the input image is then flagged as normal or not. The proposed method is evaluated on industrial and medical images, including cases with balanced datasets and others with as few as 30 abnormal images. The results demonstrate accurate performance and good generalization for all kinds of anomalies, specifically for texture-shaped images, where the method reaches an average accuracy of 97.2% (85.4% with an additional zero false negative constraint).
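The scoring and thresholding step described in the abstract above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the abstract does not specify the score, so the mean absolute pixel difference used here is an assumption, and the function names `anomaly_score`, `threshold_zero_false_negatives`, and `is_abnormal` are hypothetical. The zero false negative constraint is modeled by picking the largest threshold that still flags every known abnormal validation image.

```python
import numpy as np

def anomaly_score(image, reconstruction):
    """Mean absolute pixel difference (assumed score, not from the paper)."""
    diff = np.asarray(image, dtype=float) - np.asarray(reconstruction, dtype=float)
    return float(np.mean(np.abs(diff)))

def threshold_zero_false_negatives(abnormal_scores):
    """Largest threshold that still flags every known abnormal image."""
    return float(np.min(abnormal_scores))

def is_abnormal(score, threshold):
    """Flag an image as abnormal when its score reaches the threshold."""
    return score >= threshold

# Toy data: the "normal generator" output is stood in for by an all-zero image.
reconstruction = np.zeros((4, 4))
normal_image = np.zeros((4, 4))
abnormal_image = np.zeros((4, 4))
abnormal_image[1, 2] = 1.0  # a single defective pixel

normal_score = anomaly_score(normal_image, reconstruction)
abnormal_score = anomaly_score(abnormal_image, reconstruction)

# Threshold chosen from the scores of known abnormal validation images.
threshold = threshold_zero_false_negatives([abnormal_score, 0.5])

print(is_abnormal(normal_score, threshold), is_abnormal(abnormal_score, threshold))
```

A stricter constraint lowers the threshold, which trades more false positives for fewer missed anomalies. This is consistent with the lower accuracy the abstract reports under the zero false negative constraint.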
