Vadim Weinstein

A Mathematical Characterization of Minimally Sufficient Robot Brains

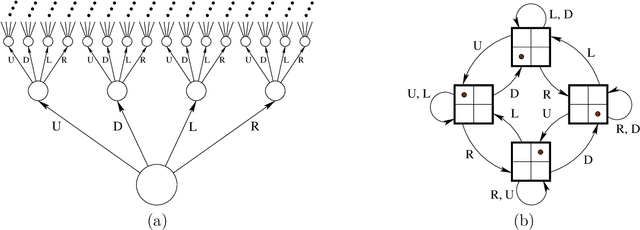

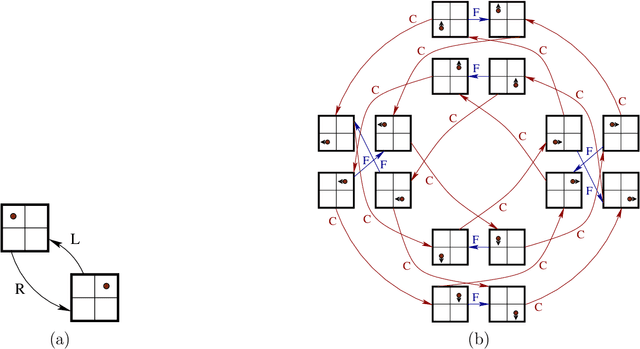

Aug 17, 2023

Abstract: This paper addresses the lower limits of encoding and processing the information acquired through interactions between an internal system (robot algorithms or software) and an external system (robot body and its environment) in terms of action and observation histories. Both are modeled as transition systems. We want to know the weakest internal system that is sufficient for achieving passive (filtering) and active (planning) tasks. We introduce the notion of an information transition system for the internal system which is a transition system over a space of information states that reflect a robot's or other observer's perspective based on limited sensing, memory, computation, and actuation. An information transition system is viewed as a filter and a policy or plan is viewed as a function that labels the states of this information transition system. Regardless of whether internal systems are obtained by learning algorithms, planning algorithms, or human insight, we want to know the limits of feasibility for given robot hardware and tasks. We establish, in a general setting, that minimal information transition systems exist up to reasonable equivalence assumptions, and are unique under some general conditions. We then apply the theory to generate new insights into several problems, including optimal sensor fusion/filtering, solving basic planning tasks, and finding minimal representations for modeling a system given input-output relations.

The Limits of Learning and Planning: Minimal Sufficient Information Transition Systems

Dec 01, 2022

Abstract: In this paper, we view a policy or plan as a transition system over a space of information states that reflect a robot's or other observer's perspective based on limited sensing, memory, computation, and actuation. Regardless of whether policies are obtained by learning algorithms, planning algorithms, or human insight, we want to know the limits of feasibility for given robot hardware and tasks. Toward the quest to find the best policies, we establish in a general setting that minimal information transition systems (ITSs) exist up to reasonable equivalence assumptions, and are unique under some general conditions. We then apply the theory to generate new insights into several problems, including optimal sensor fusion/filtering, solving basic planning tasks, and finding minimal representations for feasible policies.

An Enactivist-Inspired Mathematical Model of Cognition

Jun 10, 2022

Abstract: We formulate five basic tenets of enactivist cognitive science that we have carefully identified in the relevant literature as the main underlying principles of that philosophy. We then develop a mathematical framework to talk about cognitive systems (both artificial and natural) which complies with these enactivist tenets. In particular we pay attention that our mathematical modeling does not attribute contentful symbolic representations to the agents, and that the agent's brain, body and environment are modeled in a way that makes them an inseparable part of a greater totality. The purpose is to create a mathematical foundation for cognition which is in line with enactivism. We see two main benefits of doing so: (1) It enables enactivist ideas to be more accessible for computer scientists, AI researchers, roboticists, cognitive scientists, and psychologists, and (2) it gives the philosophers a mathematical tool which can be used to clarify their notions and help with their debates. Our main notion is that of a sensorimotor system which is a special case of a well-studied notion of a transition system. We also consider related notions such as labeled transition systems and deterministic automata. We analyze a notion called sufficiency and show that it is a very good candidate for a foundational notion in the "mathematics of cognition from an enactivist perspective". We demonstrate its importance by proving a uniqueness theorem about the minimal sufficient refinements (which correspond in some sense to an optimal attunement of an organism to its environment) and by showing that sufficiency corresponds to known notions such as sufficient history information spaces. We then develop other related notions such as degree of insufficiency, universal covers, hierarchies, strategic sufficiency. In the end, we tie it all back to the enactivist tenets.