Vadakkepat Prahlad

CAM/CAD Point Cloud Part Segmentation via Few-Shot Learning

Jul 16, 2022

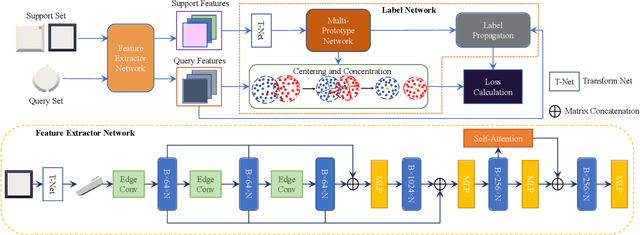

Abstract: 3D part segmentation is an essential step in advanced CAM/CAD workflows. Precise 3D segmentation lowers the defect rate of work-pieces produced by manufacturing equipment (such as computer-controlled CNC machines), thereby improving work efficiency and yielding the attendant economic benefits. Most existing work on 3D model segmentation is based on fully-supervised learning, which trains models on large annotated datasets. The disadvantage is that the resulting models rely heavily on the completeness of the available dataset, and they generalize relatively poorly to new, unknown segmentation types (i.e. additional novel classes). In this work, we propose and develop a few-shot learning-based approach for effective part segmentation in CAM/CAD. It is designed to significantly enhance generalization and to adapt flexibly to new segmentation tasks using only a few samples. As a result, it not only reduces the need for exhaustively complete supervision datasets, which are usually unattainable, but also improves flexibility in real-world applications. As a further improvement, we adopt a transform net and a center loss block in the network. These components improve the network's comprehension of the 3D features of the various possible instances of the whole work-piece and keep features of the same class closely distributed in feature space.
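The abstract does not give the form of the center loss block, so the following is only a minimal sketch of one common formulation: half the squared distance between each feature and the center of its class, averaged over the batch. The function names `class_centers` and `center_loss`, the use of per-class means as centers, and the batch averaging are all assumptions made for illustration, not the authors' implementation.

```python
from typing import Dict, List, Sequence


def class_centers(
    features: Sequence[Sequence[float]], labels: Sequence[int]
) -> Dict[int, List[float]]:
    """Compute the mean feature vector of each class (assumed center definition)."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def center_loss(
    features: Sequence[Sequence[float]],
    labels: Sequence[int],
    centers: Dict[int, List[float]],
) -> float:
    """Half the squared distance of each feature to its class center, batch-averaged."""
    total = 0.0
    for x, y in zip(features, labels):
        total += sum((v - c) ** 2 for v, c in zip(x, centers[y]))
    return 0.5 * total / len(features)


# Toy per-point features: two points of part class 0, one of part class 1.
feats = [[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]]
labs = [0, 0, 1]
centers = class_centers(feats, labs)
loss = center_loss(feats, labs, centers)
```

Minimizing a term of this kind pulls features of the same part class toward a shared center, which is the "close distribution of the same class in feature space" that the abstract describes.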

Masked Self-Supervision for Remaining Useful Lifetime Prediction in Machine Tools

Jul 04, 2022

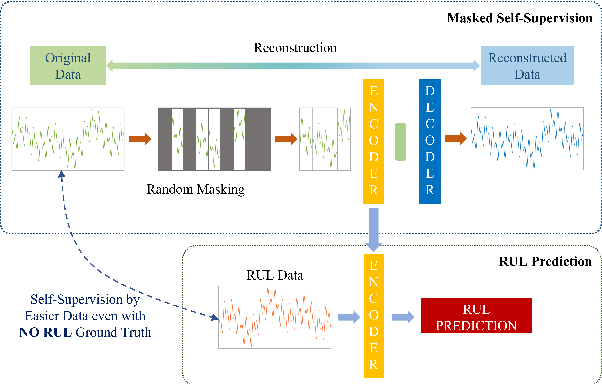

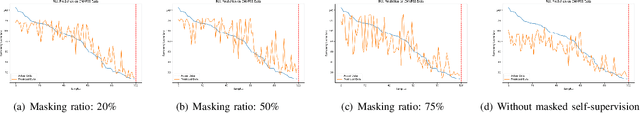

Abstract: Prediction of Remaining Useful Lifetime (RUL) for machines and tools in the modern manufacturing and automation workplace is essential in Industry 4.0. Continuous tool wear or, worse, sudden machine breakdown leads to manufacturing failures that cause economic loss. The potential of deep learning for RUL prediction has resulted in several models driven by the operation data of manufacturing machines. Current efforts based on fully-supervised models rely heavily on data labeled with RULs. However, the required data (i.e. annotated and labeled data from faulty and/or degraded machines) can only be obtained after a machine breakdown occurs. The scarcity of broken machines in real-world manufacturing and automation workplaces makes it difficult to obtain sufficient annotated and labeled data. In contrast, data from healthy machines is much easier to collect. Noting this challenge, we propose and develop a masked self-supervised learning approach, based on the idea of masked autoencoders, that uses unlabeled data to build a deep learning model for RUL prediction. Experiments to verify its effectiveness are carried out on the C-MAPSS datasets (collected from NASA turbofan engine data). The results show that our approach performs better, in both accuracy and effectiveness, for RUL prediction than approaches using a fully-supervised model.
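The abstract does not state the masking scheme, so the sketch below only illustrates the general masked-autoencoder idea on a window of unlabeled sensor readings: hide a random subset of time steps, then score a reconstruction only at the hidden positions. The names `mask_window` and `masked_mse`, the zero fill value, and the 50% mask ratio are hypothetical choices for illustration.

```python
import random
from typing import List, Sequence, Tuple


def mask_window(
    window: Sequence[Sequence[float]],
    mask_ratio: float,
    rng: random.Random,
    mask_value: float = 0.0,
) -> Tuple[List[List[float]], List[int]]:
    """Replace a random subset of time steps with mask_value; return the masked indices."""
    n = len(window)
    n_masked = int(round(n * mask_ratio))
    masked_idx = sorted(rng.sample(range(n), n_masked))
    masked_set = set(masked_idx)
    masked = [
        [mask_value] * len(row) if t in masked_set else list(row)
        for t, row in enumerate(window)
    ]
    return masked, masked_idx


def masked_mse(
    reconstruction: Sequence[Sequence[float]],
    target: Sequence[Sequence[float]],
    masked_idx: Sequence[int],
) -> float:
    """Mean squared error computed only over the masked time steps."""
    if not masked_idx:
        return 0.0
    total, count = 0.0, 0
    for t in masked_idx:
        for r, x in zip(reconstruction[t], target[t]):
            total += (r - x) ** 2
            count += 1
    return total / count


# Toy window: 10 time steps of 3 sensor channels, all readings equal to 1.0.
window = [[1.0, 1.0, 1.0] for _ in range(10)]
masked, idx = mask_window(window, mask_ratio=0.5, rng=random.Random(0))
# A real model would predict the hidden steps; here the masked input stands in
# for a reconstruction that recovered nothing.
loss = masked_mse(masked, window, idx)
```

Because the target is the original signal itself, this pretraining objective needs no RUL labels, which is why data from healthy machines can be used.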
