V. Abrol

Proceedings of the third "international Traveling Workshop on Interactions between Sparse models and Technology"

Sep 14, 2016

Abstract: The third edition of the "international - Traveling Workshop on Interactions between Sparse models and Technology" (iTWIST) took place in Aalborg, the fourth-largest city in Denmark, situated in the northern part of the country, from the 24th to the 26th of August 2016. The workshop venue was the Aalborg University campus. One implicit objective of this biennial workshop is to foster collaboration between international scientific teams by disseminating ideas through both specific oral/poster presentations and free discussions. For this third edition, iTWIST'16 gathered about 50 international participants and featured 8 invited talks, 12 oral presentations, and 12 posters on the following themes, all related to the theory, application and generalization of the "sparsity paradigm": Sparsity-driven data sensing and processing (e.g., optics, computer vision, genomics, biomedical, digital communication, channel estimation, astronomy); Application of sparse models in non-convex/non-linear inverse problems (e.g., phase retrieval, blind deconvolution, self-calibration); Approximate probabilistic inference for sparse problems; Sparse machine learning and inference; "Blind" inverse problems and dictionary learning; Optimization for sparse modelling; Information theory, geometry and randomness; Sparsity? What's next? (Discrete-valued signals; Union of low-dimensional spaces, Cosparsity, mixed/group norm, model-based, low-complexity models, ...); Matrix/manifold sensing/processing (graph, low-rank approximation, ...); Complexity/accuracy tradeoffs in numerical methods/optimization; Electronic/optical compressive sensors (hardware).

Making sense of randomness: an approach for fast recovery of compressively sensed signals

Jul 25, 2015

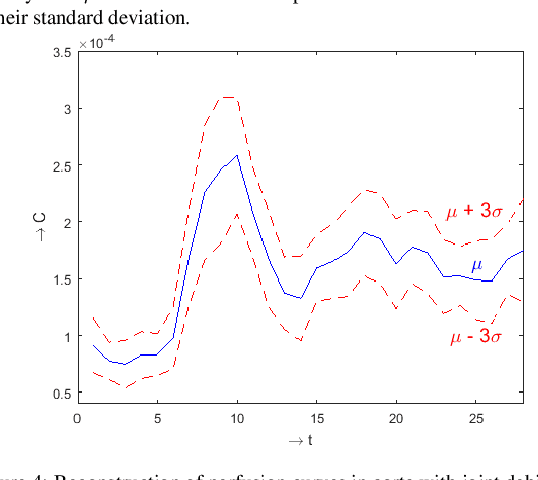

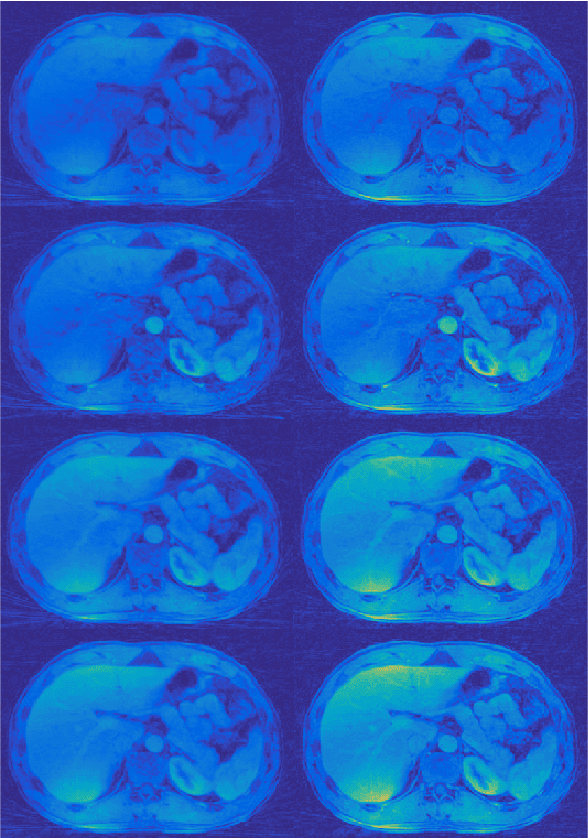

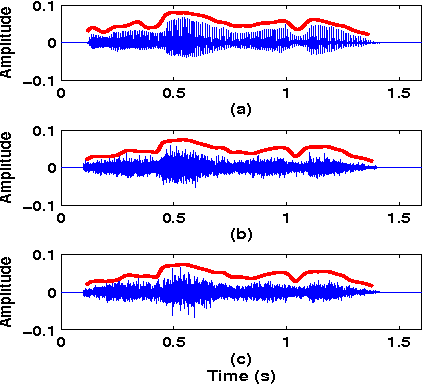

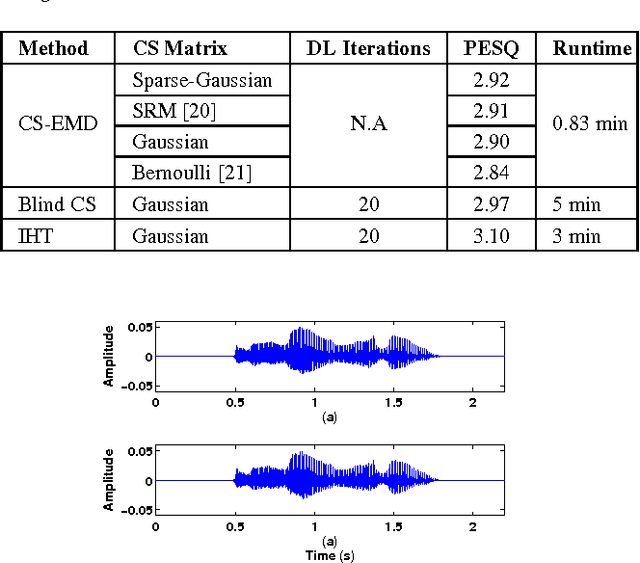

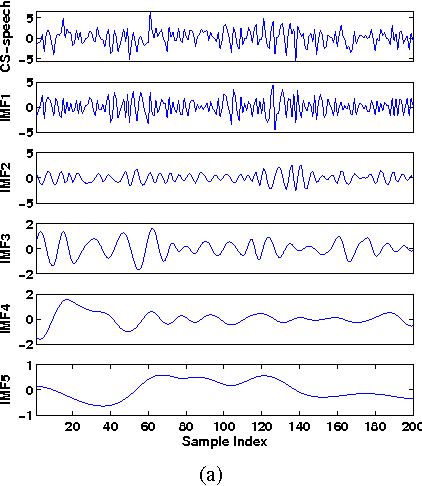

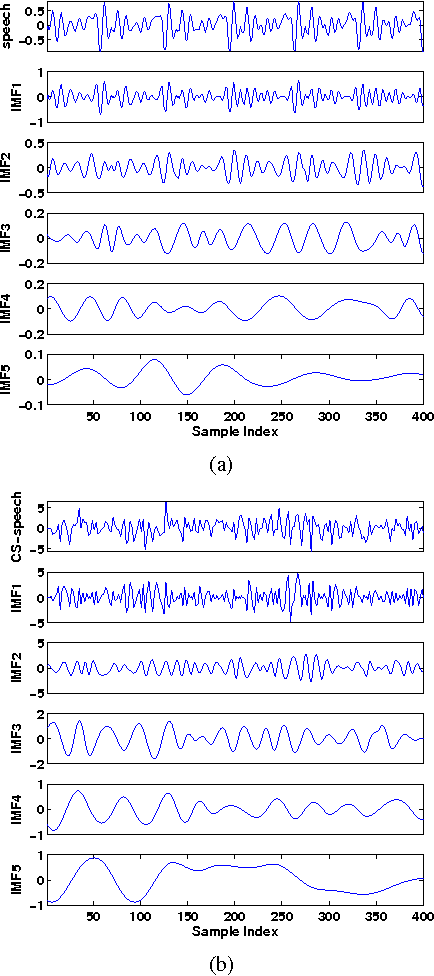

Abstract: In the compressed sensing (CS) framework, a signal is sampled below the Nyquist rate, and the acquired compressed samples are generally random in nature. However, for efficient estimation of the actual signal, the sensing matrix must preserve the relative distances among the acquired compressed samples. Provided this condition is fulfilled, we show that CS samples will preserve the envelope of the actual signal even at different compression ratios. Exploiting this envelope-preserving property of CS samples, we propose a new fast dictionary learning (DL) algorithm which is able to extract prototype signals from compressive samples for efficient sparse representation and recovery of signals. These prototype signals are orthogonal intrinsic mode functions (IMFs) extracted using empirical mode decomposition (EMD), which is one of the popular methods to capture the envelope of a signal. The extracted IMFs are used to build the dictionary without any knowledge of the original signal or the sensing matrix. Moreover, one can build the dictionary on-line as new CS samples become available. In particular, to recover the first $L$ signals ($\in\mathbb{R}^n$) at the decoder, one can build the dictionary in just $\mathcal{O}(nL\log n)$ operations, which is far fewer than existing approaches require. The efficiency of the proposed approach is demonstrated experimentally for the recovery of speech signals.
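The distance-preservation condition mentioned in the abstract can be illustrated numerically. The following is a minimal sketch, not the paper's method: it assumes an i.i.d. Gaussian sensing matrix scaled by $1/\sqrt{m}$ and random test signals, and the function names and the sizes `m`, `n`, `L` are illustrative choices not taken from the source. It compares pairwise distances before and after compression.

```python
import numpy as np

def gaussian_sensing_matrix(m, n, rng):
    # Assumed sensing matrix: i.i.d. Gaussian entries scaled so that
    # squared norms are preserved in expectation.
    return rng.standard_normal((m, n)) / np.sqrt(m)

def pairwise_distances(X):
    # X holds one signal per column; returns the matrix of Euclidean
    # distances between all pairs of columns.
    diffs = X[:, :, None] - X[:, None, :]
    return np.sqrt((diffs ** 2).sum(axis=0))

def distance_ratios(m=256, n=1024, L=20, seed=0):
    # Ratio of compressed-domain to signal-domain distance for every
    # distinct pair among L random signals in R^n (hypothetical sizes).
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, L))
    Phi = gaussian_sensing_matrix(m, n, rng)
    Y = Phi @ X  # compressed samples, one column per signal
    upper = np.triu_indices(L, k=1)
    return pairwise_distances(Y)[upper] / pairwise_distances(X)[upper]

ratios = distance_ratios()
print("min ratio:", ratios.min(), "max ratio:", ratios.max())
```

Ratios close to 1 indicate that the compressed samples retain the relative geometry of the original signals; the spread around 1 would be expected to widen as `m` shrinks relative to `n`.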
