Urs Hengartner

Unlocking The Potential of Adaptive Attacks on Diffusion-Based Purification

Nov 25, 2024

Abstract: Diffusion-based purification (DBP) is a defense against adversarial examples (AEs), amassing popularity for its ability to protect classifiers in an attack-oblivious manner and resistance to strong adversaries with access to the defense. Its robustness has been claimed to ensue from the reliance on diffusion models (DMs) that project the AEs onto the natural distribution. We revisit this claim, focusing on gradient-based strategies that back-propagate the loss gradients through the defense, commonly referred to as "adaptive attacks". Analytically, we show that such an optimization method invalidates DBP's core foundations, effectively targeting the DM rather than the classifier and restricting the purified outputs to a distribution over malicious samples instead. Thus, we reassess the reported empirical robustness, uncovering implementation flaws in the gradient back-propagation techniques used thus far for DBP. We fix these issues, providing the first reliable gradient library for DBP and demonstrating how adaptive attacks drastically degrade its robustness. We then study a less efficient yet stricter majority-vote setting where the classifier evaluates multiple purified copies of the input to make its decision. Here, DBP's stochasticity enables it to remain partially robust against traditional norm-bounded AEs. We propose a novel adaptation of a recent optimization method against deepfake watermarking that crafts systemic malicious perturbations while ensuring imperceptibility. When integrated with the adaptive attack, it completely defeats DBP, even in the majority-vote setup. Our findings prove that DBP, in its current state, is not a viable defense against AEs.

UnMarker: A Universal Attack on Defensive Watermarking

May 14, 2024

Abstract: Reports regarding the misuse of $\textit{Generative AI}$ ($\textit{GenAI}$) to create harmful deepfakes are emerging daily. Recently, defensive watermarking, which enables $\textit{GenAI}$ providers to hide fingerprints in their images to later use for deepfake detection, has been on the rise. Yet, its potential has not been fully explored. We present $\textit{UnMarker}$ -- the first practical $\textit{universal}$ attack on defensive watermarking. Unlike existing attacks, $\textit{UnMarker}$ requires no detector feedback, no unrealistic knowledge of the scheme or similar models, and no advanced denoising pipelines that may not be available. Instead, being the product of an in-depth analysis of the watermarking paradigm revealing that robust schemes must construct their watermarks in the spectral amplitudes, $\textit{UnMarker}$ employs two novel adversarial optimizations to disrupt the spectra of watermarked images, erasing the watermarks. Evaluations against the $\textit{SOTA}$ prove its effectiveness, not only defeating traditional schemes while retaining superior quality compared to existing attacks but also breaking $\textit{semantic}$ watermarks that alter the image's structure, reducing the best detection rate to $43\%$ and rendering them useless. To our knowledge, $\textit{UnMarker}$ is the first practical attack on $\textit{semantic}$ watermarks, which have been deemed the future of robust watermarking. $\textit{UnMarker}$ casts doubts on the very potential of this countermeasure and exposes its paradoxical nature, as designing schemes for robustness inevitably compromises other robustness aspects.

On the Feasibility of Fingerprinting Collaborative Robot Traffic

Dec 11, 2023

Abstract: This study examines privacy risks in collaborative robotics, focusing on the potential for traffic analysis in encrypted robot communications. While previous research has explored low-level command recovery, our work investigates high-level motion recovery from command message sequences. We evaluate the efficacy of traditional website fingerprinting techniques (k-FP, KNN, and CUMUL) and their limitations in accurately identifying robotic actions due to their inability to capture detailed temporal relationships. To address this, we introduce a traffic classification approach using signal processing techniques, demonstrating high accuracy in action identification and highlighting the vulnerability of encrypted communications to privacy breaches. Additionally, we explore defenses such as packet padding and timing manipulation, revealing the challenges in balancing traffic analysis resistance with network efficiency. Our findings emphasize the need for continued development of practical defenses in robotic privacy and security.

Practical Attacks on Voice Spoofing Countermeasures

Jul 30, 2021

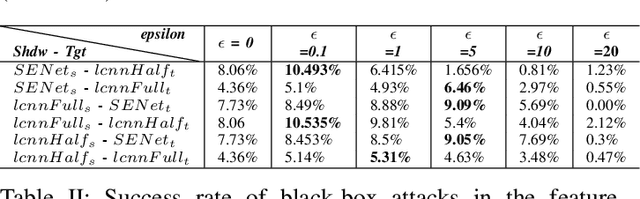

Abstract: Voice authentication has become an integral part of security-critical operations, such as bank transactions and call center conversations. The vulnerability of automatic speaker verification systems (ASVs) to spoofing attacks instigated the development of countermeasures (CMs), whose task is to tell apart bona fide and spoofed speech. Together, ASVs and CMs form today's voice authentication platforms, advertised as an impregnable access control mechanism. We develop the first practical attack on CMs, and show how a malicious actor may efficiently craft audio samples to bypass voice authentication in its strictest form. Previous works have primarily focused on non-proactive attacks or adversarial strategies against ASVs that do not produce speech in the victim's voice. The repercussions of our attacks are far more severe, as the samples we generate sound like the victim, eliminating any chance of plausible deniability. Moreover, the few existing adversarial attacks against CMs mistakenly optimize spoofed speech in the feature space and do not take into account the existence of ASVs, resulting in inferior synthetic audio that fails in realistic settings. We eliminate these obstacles through our key technical contribution: a novel joint loss function that enables mounting advanced adversarial attacks against combined ASV/CM deployments directly in the time domain. Our adversarial examples achieve concerning black-box success rates against state-of-the-art authentication platforms (up to 93.57%). Finally, we perform the first targeted, over-telephony-network attack on CMs, bypassing several challenges and enabling various potential threats, given the increased use of voice biometrics in call centers. Our results call into question the security of modern voice authentication systems in light of the real threat of attackers bypassing these measures to gain access to users' most valuable resources.
