Umut Demir

Scalable Planning and Learning Framework Development for Swarm-to-Swarm Engagement Problems

Dec 06, 2022

Abstract: The development of guidance, navigation, and control frameworks and algorithms for swarms has attracted significant attention in recent years. However, planning swarm allocations and trajectories for engaging enemy swarms remains a largely understudied problem. Although small-scale scenarios can be addressed with tools from differential game theory, existing approaches fail to scale to large multi-agent pursuit-evasion (PE) scenarios. In this work, we propose a reinforcement learning (RL) based framework that decomposes large-scale swarm engagement problems into a number of independent multi-agent pursuit-evasion games. We simulate a variety of multi-agent PE scenarios, where finite-time capture is guaranteed under certain conditions. The calculated PE statistics are provided as a reward signal to the high-level allocation layer, which uses an RL algorithm to allocate controlled swarm units to eliminate enemy swarm units with maximum efficiency. We verify our approach in large-scale swarm-to-swarm engagement simulations.
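The two-layer idea in this abstract (low-level PE statistics feeding a high-level allocation learner) can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the `capture_probability` formula is made up and stands in for statistics that the paper obtains from PE simulations, and a one-step epsilon-greedy bandit stands in for the paper's RL algorithm, which the abstract does not specify.

```python
import random


def capture_probability(pursuers: int, evaders: int) -> float:
    """Hypothetical stand-in for simulated PE statistics (not from the paper)."""
    if pursuers == 0 or evaders == 0:
        return 0.0
    return min(1.0, 0.5 * pursuers / evaders)


def allocation_reward(allocation, enemy_groups) -> float:
    """Expected number of enemy units eliminated by one allocation."""
    return sum(capture_probability(p, e) * e
               for p, e in zip(allocation, enemy_groups))


def enumerate_allocations(total: int, n_groups: int):
    """Yield every way to split `total` units across `n_groups` sub-games."""
    if n_groups == 1:
        yield (total,)
        return
    for first in range(total + 1):
        for rest in enumerate_allocations(total - first, n_groups - 1):
            yield (first,) + rest


def learn_allocation(total, enemy_groups, episodes=5000,
                     epsilon=0.1, lr=0.1, seed=0):
    """Epsilon-greedy bandit over allocations, rewarded by sampled PE outcomes."""
    rng = random.Random(seed)
    actions = list(enumerate_allocations(total, len(enemy_groups)))
    values = {a: 0.0 for a in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)          # explore
        else:
            action = max(actions, key=values.get)  # exploit current estimate
        # Each sub-game is sampled independently: the enemy group is
        # eliminated with the (assumed) capture probability.
        reward = sum(e for p, e in zip(action, enemy_groups)
                     if rng.random() < capture_probability(p, e))
        values[action] += lr * (reward - values[action])
    return max(actions, key=values.get), values


if __name__ == "__main__":
    best, _ = learn_allocation(total=6, enemy_groups=(2, 1))
    print("learned allocation:", best)
```

The point of the sketch is the separation of concerns: the allocation layer never models pursuit-evasion dynamics directly and only sees outcome statistics as a reward signal, which is what lets the sub-games be treated independently.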

A Scalable Reinforcement Learning Approach for Attack Allocation in Swarm to Swarm Engagement Problems

Oct 15, 2022

Abstract: In this work, we propose a reinforcement learning (RL) framework that controls the density of a large-scale swarm for engaging with adversarial swarm attacks. Although there is a significant amount of existing work on applying artificial intelligence methods to swarm control, the analysis of interactions between two adversarial swarms is a rather understudied area. Most of the existing work on this subject develops strategies by making hard assumptions regarding the strategy and dynamics of the adversarial swarm. Our main contribution is the formulation of the swarm-to-swarm engagement problem as a Markov Decision Process and the development of RL algorithms that can compute engagement strategies without knowledge of the strategy or dynamics of the adversarial swarm. Simulation results show that the developed framework can handle a wide array of large-scale engagement scenarios in an efficient manner.

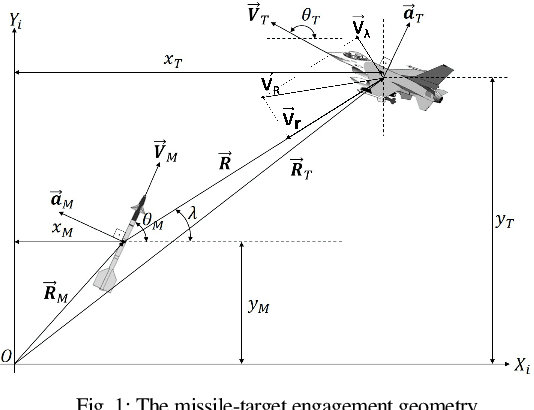

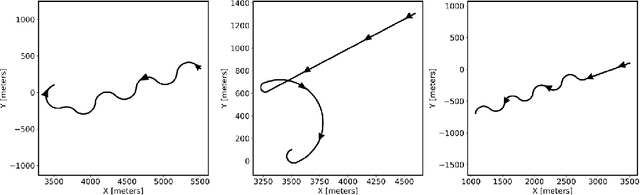

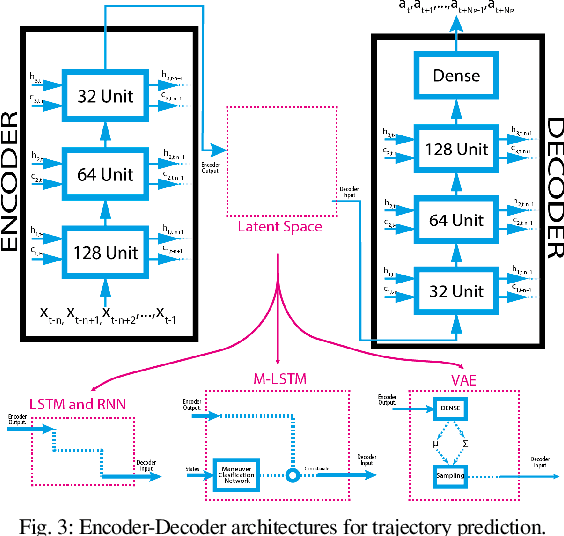

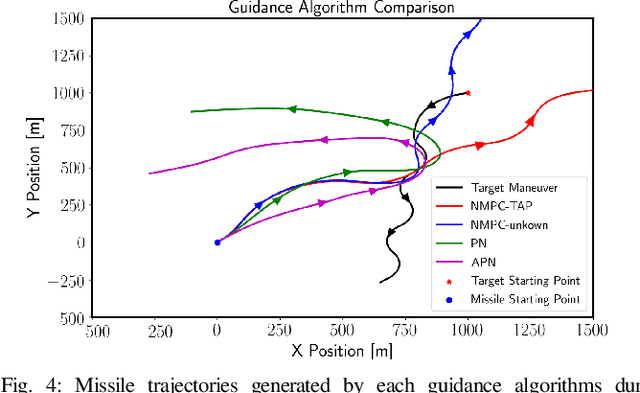

Nonlinear Model Based Guidance with Deep Learning Based Target Trajectory Prediction Against Aerial Agile Attack Patterns

Apr 06, 2021

Abstract: In this work, we propose a novel missile guidance algorithm that combines deep learning based trajectory prediction with nonlinear model predictive control. Although missile guidance and threat interception is a well-studied problem, the performance of existing algorithms degrades significantly when the target is pulling high-acceleration attack maneuvers while rapidly changing its direction. We argue that since most threats execute similar attack maneuvers, these nonlinear trajectory patterns can be processed with modern machine learning methods to build high-accuracy trajectory prediction algorithms. We train a long short-term memory (LSTM) network on a class of simulated structured agile attack patterns, then combine this predictor with quadratic programming based nonlinear model predictive control (NMPC). Our method, named nonlinear model based predictive control with target acceleration predictions (NMPC-TAP), significantly outperforms the compared approaches in terms of miss distance in scenarios where the target/threat is executing agile maneuvers.
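To make the role of target acceleration predictions concrete, the sketch below shows one way a sequence of predicted accelerations could be turned into a predicted target trajectory over a control horizon. It is an illustrative assumption, not the paper's implementation: the function name `rollout_target`, the 2D point-mass model, and the piecewise-constant acceleration assumption are all hypothetical, and the acceleration list simply stands in for the output of a trained LSTM predictor.

```python
def rollout_target(position, velocity, predicted_accels, dt):
    """Propagate a 2D point-mass target under predicted accelerations.

    Assumes each predicted acceleration is held constant over one step `dt`.
    Returns a list of ((px, py), (vx, vy)) states, one per prediction step.
    """
    px, py = position
    vx, vy = velocity
    states = []
    for ax, ay in predicted_accels:
        # Constant-acceleration kinematics over one time step.
        px += vx * dt + 0.5 * ax * dt * dt
        py += vy * dt + 0.5 * ay * dt * dt
        vx += ax * dt
        vy += ay * dt
        states.append(((px, py), (vx, vy)))
    return states


if __name__ == "__main__":
    # Hypothetical predictor output: the target pulls a steady lateral turn.
    horizon = rollout_target((0.0, 0.0), (1.0, 0.0),
                             [(0.0, 2.0), (0.0, 2.0)], dt=1.0)
    for pos, vel in horizon:
        print(pos, vel)
```

A predictive controller could then penalize the distance between the interceptor's planned states and these predicted target states at each step of the horizon, instead of assuming the target keeps its current velocity.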
