Umit Cali

BERT4MIMO: A Foundation Model using BERT Architecture for Massive MIMO Channel State Information Prediction

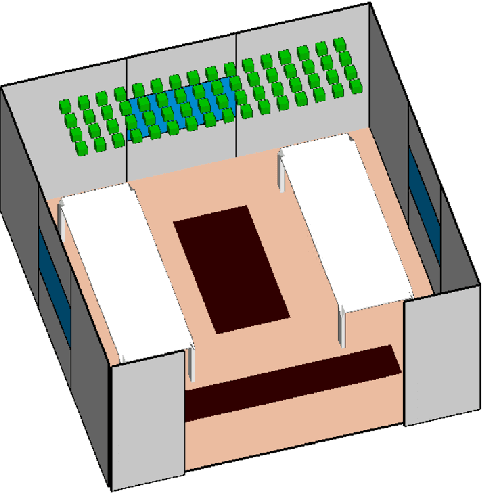

Jan 03, 2025

Abstract:Massive MIMO (Multiple-Input Multiple-Output) is an advanced wireless communication technology, using a large number of antennas to improve the overall performance of the communication system in terms of capacity, spectral, and energy efficiency. The performance of MIMO systems is highly dependent on the quality of channel state information (CSI). Predicting CSI is, therefore, essential for improving communication system performance, particularly in MIMO systems, since it represents key characteristics of a wireless channel, including propagation, fading, scattering, and path loss. This study proposes a foundation model inspired by BERT, called BERT4MIMO, which is specifically designed to process high-dimensional CSI data from massive MIMO systems. BERT4MIMO offers superior performance in reconstructing CSI under varying mobility scenarios and channel conditions through deep learning and attention mechanisms. The experimental results demonstrate the effectiveness of BERT4MIMO in a variety of wireless environments.

Neural Networks Meet Elliptic Curve Cryptography: A Novel Approach to Secure Communication

Jul 11, 2024

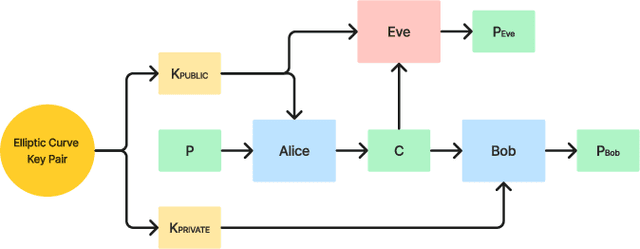

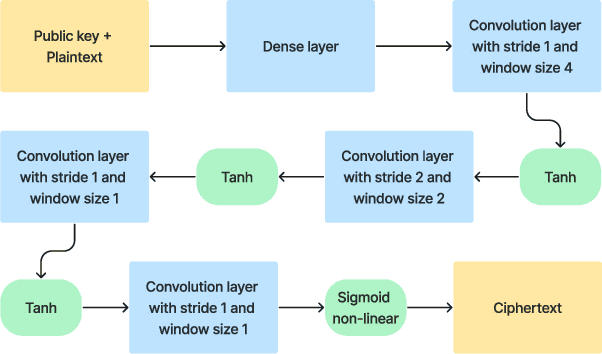

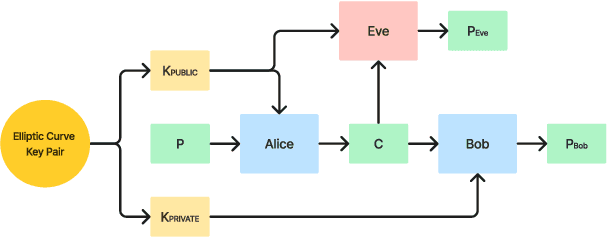

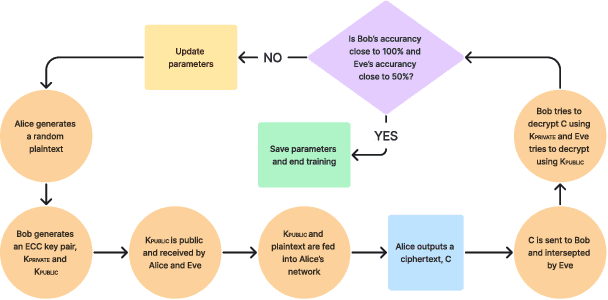

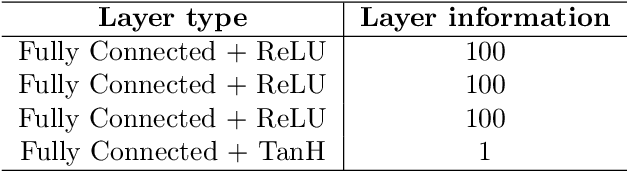

Abstract:In recent years, neural networks have been used to implement symmetric cryptographic functions for secure communications. Extending this domain, the proposed approach explores the application of asymmetric cryptography within a neural network framework to safeguard the exchange between two communicating entities, i.e., Alice and Bob, from an adversarial eavesdropper, i.e., Eve. It employs a set of five distinct cryptographic keys to examine the efficacy and robustness of communication security against eavesdropping attempts using the principles of elliptic curve cryptography. The experimental setup, which is also designed to evaluate cryptographic resilience, reveals that Alice and Bob achieve secure communication with negligible variation in security effectiveness across different curves. Specifically, the loss metrics for Bob oscillate between 0 and 1 during encryption-decryption processes, indicating successful message comprehension post-encryption by Alice. A potential vulnerability, with a decryption accuracy exceeding 60%, appears when Eve receives enhanced adversarial training, i.e., twice the training iterations per batch compared to Alice and Bob.

Anomaly Detection in Power Markets and Systems

Dec 05, 2022

Abstract:The widespread use of information and communication technology (ICT) over the last decades has been a primary catalyst behind the digitalization of power systems. Meanwhile, as the utilization rate of the Internet of Things (IoT) continues to rise along with recent advancements in ICT, the need for secure and computationally efficient monitoring of critical infrastructures, like the electrical grid and the agents that participate in it, is growing. A cyber-physical system, such as the electrical grid, may experience anomalies for a number of different reasons. These may include physical defects, mistakes in measurement and communication, cyberattacks, and other similar occurrences. The goal of this study is to highlight the most common incidents in power systems and to give an overview and classification of the most common ways to detect anomalies, starting at the consumer/prosumer end and working up to the primary power producers. In addition, this article discusses the methods and techniques, such as artificial intelligence (AI), that are used to identify anomalies in power systems and markets.

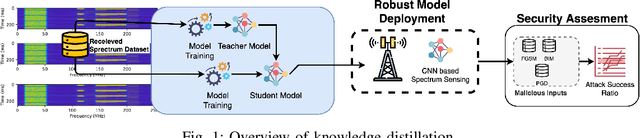

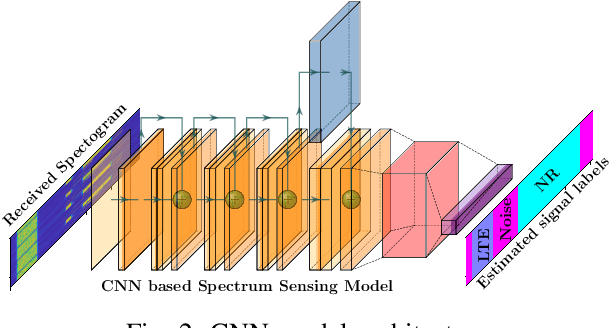

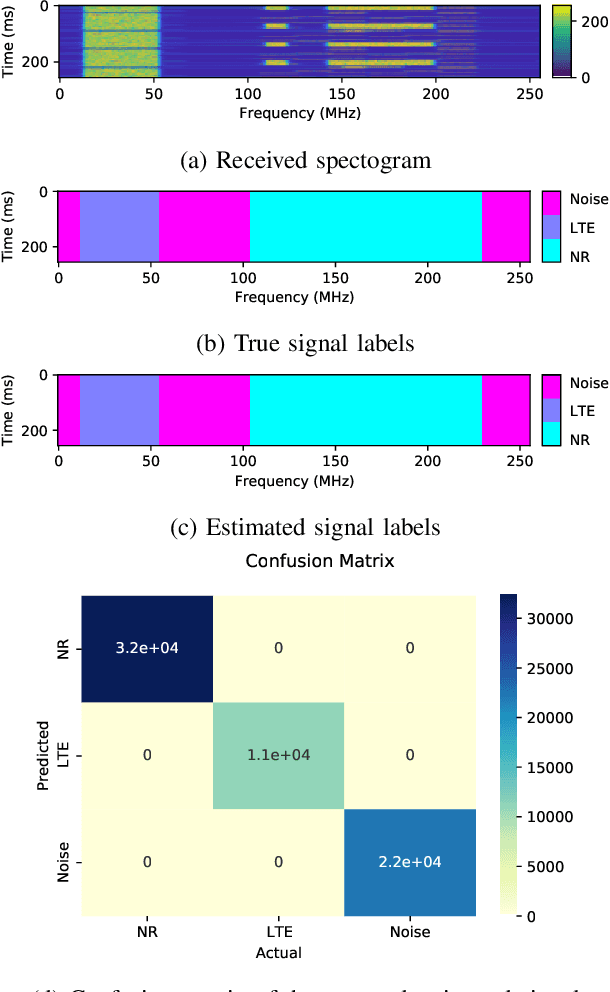

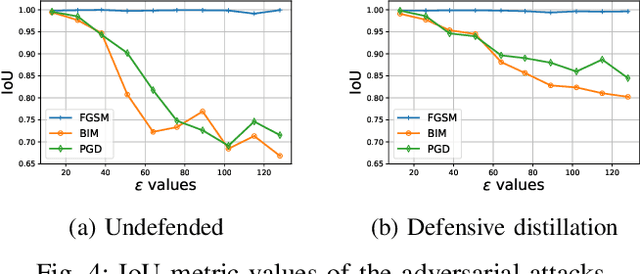

Mitigating Attacks on Artificial Intelligence-based Spectrum Sensing for Cellular Network Signals

Sep 27, 2022

Abstract:Cellular networks (LTE, 5G, and beyond) are growing dramatically, driven by high consumer demand, and are more promising than other wireless networks thanks to advanced telecommunication technologies. The main goal of these networks is to connect billions of devices, systems, and users with high-speed data transmission, high cell capacity, and low latency, as well as to support a wide range of new applications, such as virtual reality, metaverse, telehealth, online education, autonomous and flying vehicles, advanced manufacturing, and many more. To achieve these goals, spectrum sensing has received more attention, along with new approaches using artificial intelligence (AI) methods for spectrum management in cellular networks. This paper provides a vulnerability analysis of spectrum sensing approaches that use AI-based semantic segmentation models to identify cellular network signals under adversarial attacks, with and without defensive distillation methods. The results show that mitigation methods can significantly reduce the vulnerabilities of AI-based spectrum sensing models to adversarial attacks.
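As a rough illustration of the defensive distillation idea mentioned in this abstract, the sketch below shows only the temperature-scaled softmax that classification-style distillation commonly relies on: a teacher's logits are softened at a high temperature and the resulting soft labels are used to train a second model. The function name, the logits, and the temperature value are hypothetical and not taken from the paper.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a temperature (higher = softer output)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical teacher logits for one pixel over three signal classes.
teacher_logits = [4.0, 1.0, 0.5]

# Temperature 1 gives the usual, sharply peaked distribution.
hard_probs = softmax_with_temperature(teacher_logits, 1.0)

# A high temperature (assumed value) gives the soft labels a student would train on.
soft_probs = softmax_with_temperature(teacher_logits, 20.0)
```

The soft labels keep the same ranking of classes but are less peaked, which is the property distillation-based defenses typically exploit to smooth the model's sensitivity to small input changes.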

Hybrid AI-based Anomaly Detection Model using Phasor Measurement Unit Data

Sep 21, 2022

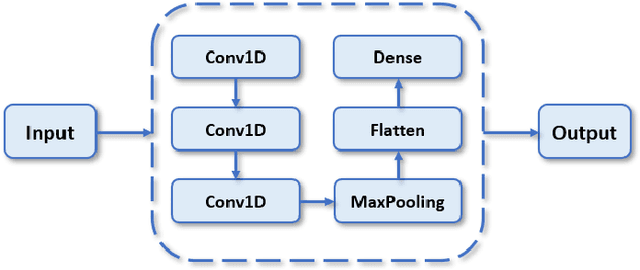

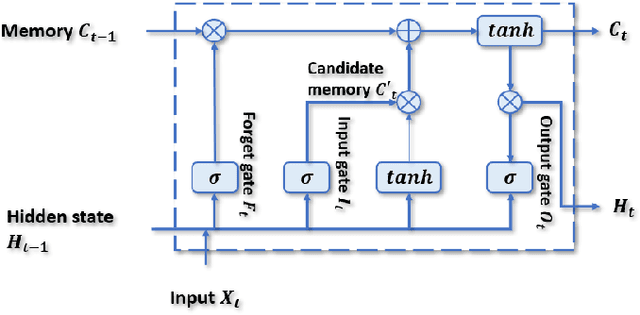

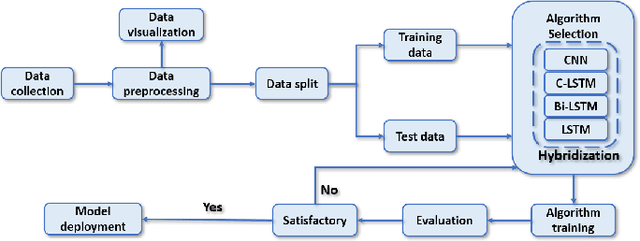

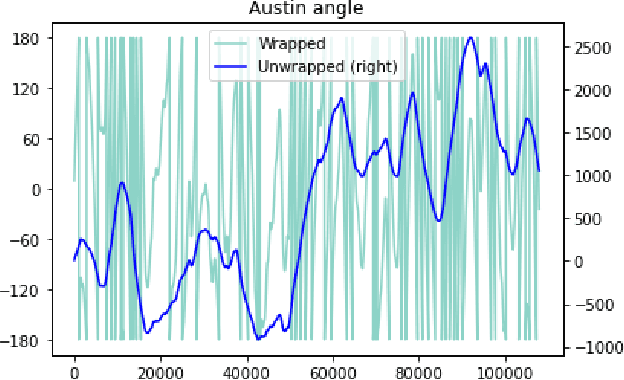

Abstract:Over the last few decades, extensive use of information and communication technologies has been the main driver of the digitalization of power systems. Proper and secure monitoring of the critical grid infrastructure has become an integral part of the modern power system. Using phasor measurement units (PMUs) to surveil the power system is one of the technologies that have a promising future. Increased frequency of measurements and smarter methods for data handling can improve the ability to reliably operate power grids. The increased cyber-physical interaction offers both benefits and drawbacks, where one of the drawbacks comes in the form of anomalies in the measurement data. The anomalies can be caused by physical faults on the power grid as well as by disturbances, errors, and cyber attacks in the cyber layer. This paper aims to develop a hybrid AI-based model that is based on various methods, such as Long Short-Term Memory (LSTM), Convolutional Neural Network (CNN), and other relevant hybrid algorithms, for anomaly detection in phasor measurement unit data. The dataset used in this research, acquired by the University of Texas, consists of real data from grid measurements. In addition to the real data, false data that has been injected to produce anomalies has been analyzed. The impacts of such anomalies and the methods to mitigate them are discussed.

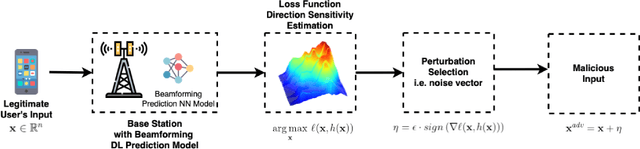

Defensive Distillation based Adversarial Attacks Mitigation Method for Channel Estimation using Deep Learning Models in Next-Generation Wireless Networks

Aug 12, 2022

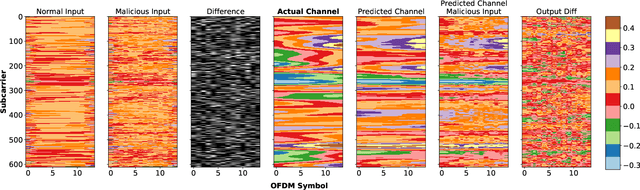

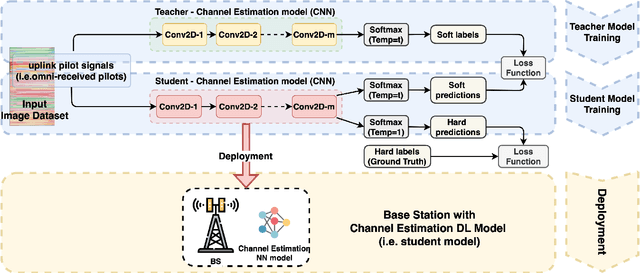

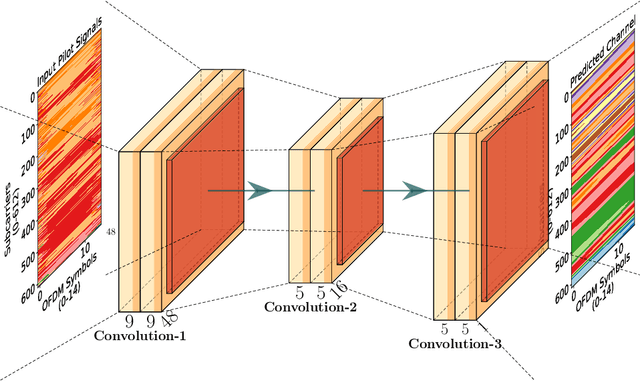

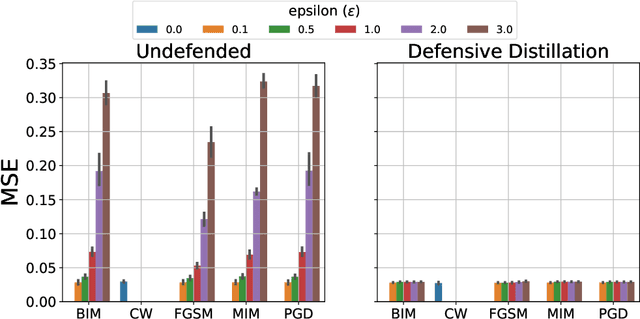

Abstract:Future wireless networks (5G and beyond) are the vision of forthcoming cellular systems, connecting billions of devices and people together. In the last decades, cellular networks have grown dramatically with advanced telecommunication technologies for high-speed data transmission, high cell capacity, and low latency. The main goal of those technologies is to support a wide range of new applications, such as virtual reality, metaverse, telehealth, online education, autonomous and flying vehicles, smart cities, smart grids, advanced manufacturing, and many more. The key motivation of NextG networks is to meet the high demand for those applications by improving and optimizing network functions. Artificial Intelligence (AI) has a high potential to achieve these requirements by being integrated in applications throughout all layers of the network. However, the security concerns on network functions of NextG using AI-based models, i.e., model poisoning, have not been investigated deeply. Therefore, efficient mitigation techniques and secure solutions need to be designed for NextG networks using AI-based methods. This paper presents a comprehensive vulnerability analysis of deep learning (DL)-based channel estimation models, trained with the dataset obtained from MATLAB's 5G toolbox, covering adversarial attacks and defensive distillation-based mitigation methods. Adversarial attacks produce faulty results by manipulating trained DL-based channel estimation models in NextG networks, whereas mitigation methods make the models more robust against such attacks. This paper also presents the performance of the proposed defensive distillation mitigation method for each adversarial attack against the channel estimation model. The results indicate that the proposed mitigation method can defend the DL-based channel estimation models against adversarial attacks in NextG networks.

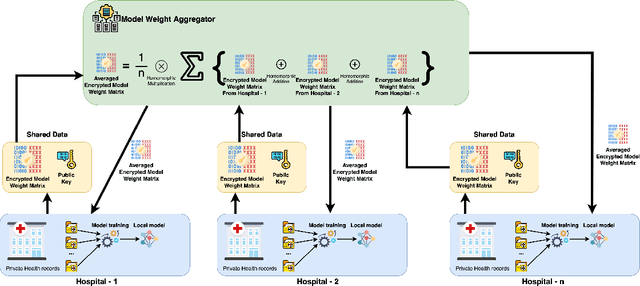

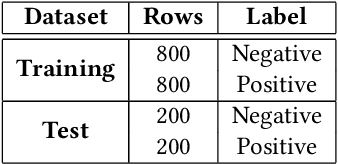

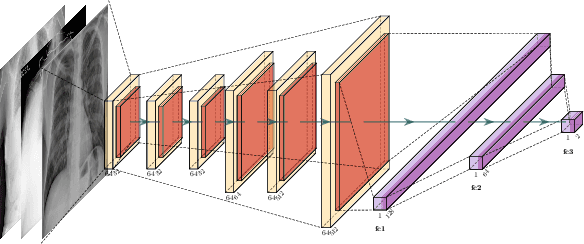

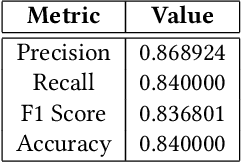

Homomorphic Encryption and Federated Learning based Privacy-Preserving CNN Training: COVID-19 Detection Use-Case

Apr 16, 2022

Abstract:Medical data is often highly sensitive in terms of data privacy and security concerns. Federated learning, a type of machine learning technique, has started to be used to improve the privacy and security of medical data. In federated learning, the training data is distributed across multiple machines, and the learning process is performed in a collaborative manner. Attackers can mount several privacy attacks on deep learning (DL) models to obtain sensitive information. Therefore, the DL model itself should be protected from adversarial attacks, especially for applications using medical data. One of the solutions to this problem is homomorphic encryption-based model protection against an adversarial collaborator. This paper proposes a privacy-preserving federated learning algorithm for medical data using homomorphic encryption. The proposed algorithm uses a secure multi-party computation protocol to protect the deep learning model from adversaries. In this study, the proposed algorithm is evaluated on a real-world medical dataset in terms of model performance.
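To make the collaborative step concrete, here is a minimal sketch of weighted federated averaging over plaintext parameters. It is not the paper's algorithm: the abstract describes aggregation protected by homomorphic encryption, whereas this example omits encryption entirely, and the function name, client weights, and sample counts are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client parameter vectors, weighted by local sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical parameter vectors from three clients (e.g. three hospitals).
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

# Hypothetical number of local training samples at each client.
sizes = [10, 30, 60]

# The server only ever sees model parameters, never the raw training data.
global_weights = federated_average(clients, sizes)
```

In an encrypted variant, the same weighted sum would be computed over ciphertexts, which is possible because additively homomorphic schemes support addition and scalar multiplication on encrypted values.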

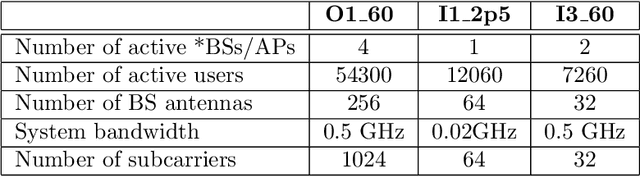

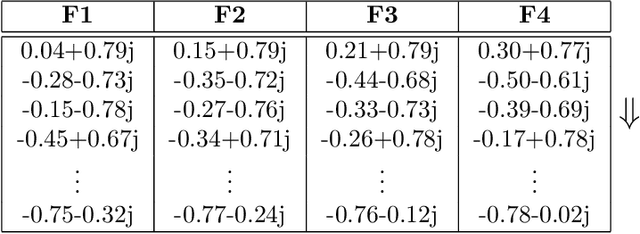

The Adversarial Security Mitigations of mmWave Beamforming Prediction Models using Defensive Distillation and Adversarial Retraining

Feb 16, 2022

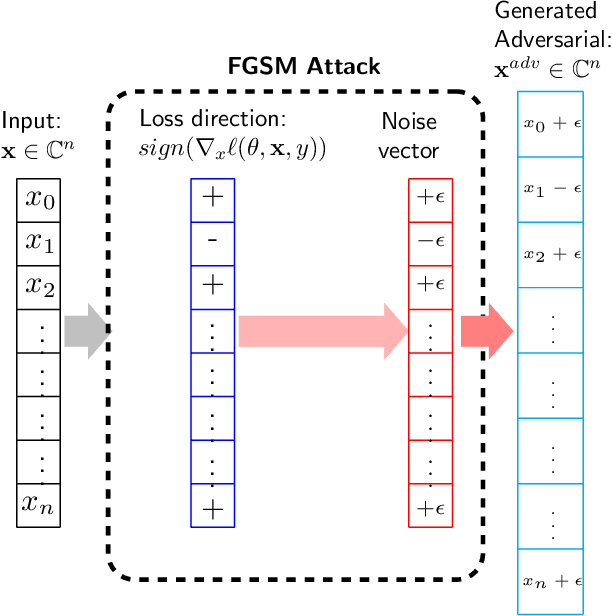

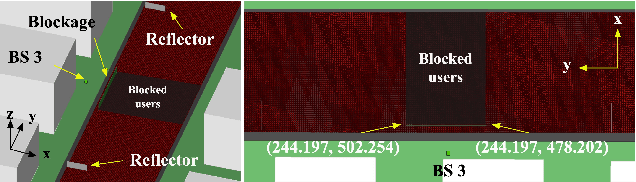

Abstract:The design of a security scheme for beamforming prediction is critical for next-generation wireless networks (5G, 6G, and beyond). However, there is no consensus about protecting the beamforming prediction using deep learning algorithms in these networks. This paper presents the security vulnerabilities in deep learning for beamforming prediction using deep neural networks (DNNs) in 6G wireless networks, treating the beamforming prediction as a multi-output regression problem. The results indicate that the initial DNN model is vulnerable to adversarial attacks, such as Fast Gradient Sign Method (FGSM), Basic Iterative Method (BIM), Projected Gradient Descent (PGD), and Momentum Iterative Method (MIM), because the initial DNN model is sensitive to the perturbations of the adversarial samples of the training data. This study also offers two mitigation methods, adversarial training and defensive distillation, for adversarial attacks against artificial intelligence (AI)-based models used in millimeter-wave (mmWave) beamforming prediction. Furthermore, the proposed scheme can be used in situations where the data are corrupted due to adversarial examples in the training data. Experimental results show that the proposed methods effectively defend the DNN models against adversarial attacks in next-generation wireless networks.
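The simplest of the attacks listed above, FGSM, can be sketched on a toy model. The example below assumes a single-output linear regressor with a squared-error loss, which is far simpler than the multi-output DNN in the paper; the weights, input, target, and perturbation budget `eps` are hypothetical values chosen for illustration.

```python
def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def predict(w, x):
    """Toy linear model: dot product of weights and input."""
    return sum(wi * xi for wi, xi in zip(w, x))


def loss_grad_wrt_input(w, x, y):
    """Gradient of the squared error (w.x - y)^2 with respect to the input x."""
    err = predict(w, x) - y
    return [2.0 * err * wi for wi in w]


def fgsm(w, x, y, eps):
    """One FGSM step: move each input feature by eps in the direction that increases the loss."""
    grad = loss_grad_wrt_input(w, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


w = [0.5, -1.0, 2.0]   # hypothetical trained weights
x = [1.0, 2.0, 3.0]    # hypothetical clean input
y = 4.0                # hypothetical target
x_adv = fgsm(w, x, y, eps=0.1)

clean_loss = (predict(w, x) - y) ** 2
adv_loss = (predict(w, x_adv) - y) ** 2
```

Each feature moves by at most `eps`, yet the loss on `x_adv` is larger than on `x`. BIM, PGD, and MIM can be read as iterated variants of this same signed-gradient step.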

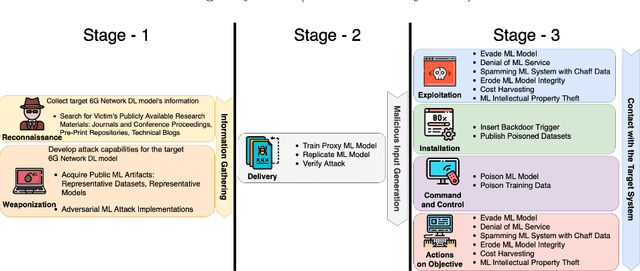

Security Concerns on Machine Learning Solutions for 6G Networks in mmWave Beam Prediction

May 09, 2021

Abstract:6G (sixth generation) is the latest cellular technology currently under development for wireless communication systems. In recent years, machine learning algorithms have been applied widely in various fields, such as healthcare, transportation, energy, autonomous cars, and many more. Those algorithms have also been used in communication technologies to improve system performance in terms of frequency spectrum usage, latency, and security. With the rapid development of machine learning techniques, especially deep learning, it is critical to take security concerns into account when applying the algorithms. While machine learning algorithms offer significant advantages for 6G networks, security concerns about Artificial Intelligence (AI) models have typically been ignored by the scientific community so far. However, security is also a vital part of AI algorithms, because the AI model itself can be poisoned by attackers. This paper proposes a mitigation method, based on adversarial learning, for adversarial attacks against proposed 6G machine learning models for millimeter-wave (mmWave) beam prediction. The main idea behind adversarial attacks against machine learning models is to produce faulty results by manipulating trained deep learning models for 6G mmWave beam prediction applications. We also present the performance of the adversarial learning mitigation method for 6G security in the mmWave beam prediction application under a fast gradient sign method attack. The mean square errors (MSE) of the defended model under attack are very close to those of the undefended model without attack.

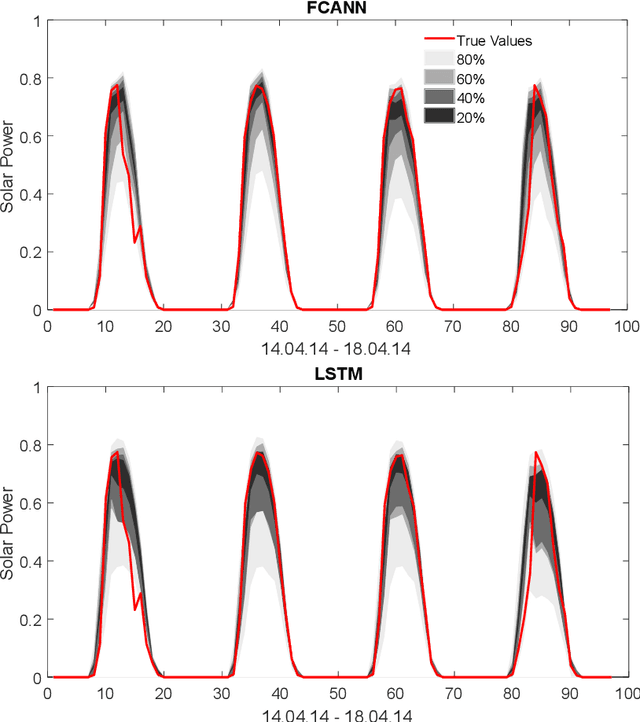

Probabilistic Solar Power Forecasting: Long Short-Term Memory Network vs Simpler Approaches

Jan 20, 2021

Abstract:The high penetration of volatile renewable energy sources such as solar makes methods for coping with the uncertainty associated with them of paramount importance. Probabilistic forecasts are an example of these methods, as they assist energy planners in their decision-making process by providing them with information about the uncertainty of future power generation. Currently, there is a trend towards the use of deep learning probabilistic forecasting methods. However, the point at which the more complex deep learning methods should be preferred over simpler approaches is not yet clear. Therefore, the current article presents a simple comparison between a long short-term memory neural network and other simpler approaches. The comparison consists of training and comparing models able to provide one-day-ahead probabilistic forecasts for a solar power system. Moreover, the current paper makes use of an open-source dataset provided during the Global Energy Forecasting Competition of 2014 (GEFCom14).
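Probabilistic forecasts of this kind are often expressed as quantiles and scored with the pinball (quantile) loss. The abstract does not state which metric the paper uses, so the sketch below is only an assumed illustration of how a single quantile forecast could be scored; the function name and numbers are hypothetical.

```python
def pinball_loss(y_true, y_pred, q):
    """Pinball loss of a forecast y_pred for quantile level q (0 < q < 1)."""
    diff = y_true - y_pred
    # Under-forecasts are penalized by q, over-forecasts by (1 - q).
    return q * diff if diff >= 0 else (q - 1.0) * diff


# Hypothetical observed solar output and two candidate 90% quantile forecasts.
observed = 10.0
loss_under = pinball_loss(observed, 8.0, 0.9)   # forecast below the observation
loss_over = pinball_loss(observed, 12.0, 0.9)   # forecast above the observation
```

For a high quantile such as 0.9, the forecast that falls below the observation is penalized more heavily than the one that overshoots by the same amount, which is what pushes the forecast toward the upper tail of the distribution.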
