Udit Bhatia

Spatially Regularized Graph Attention Autoencoder Framework for Detecting Rainfall Extremes

Nov 12, 2024

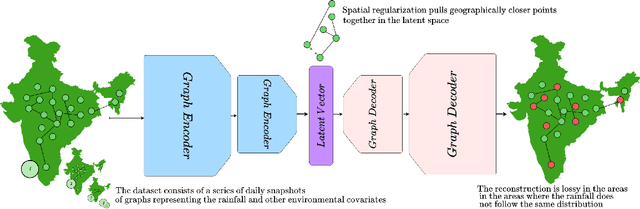

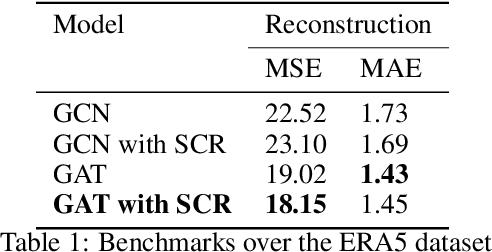

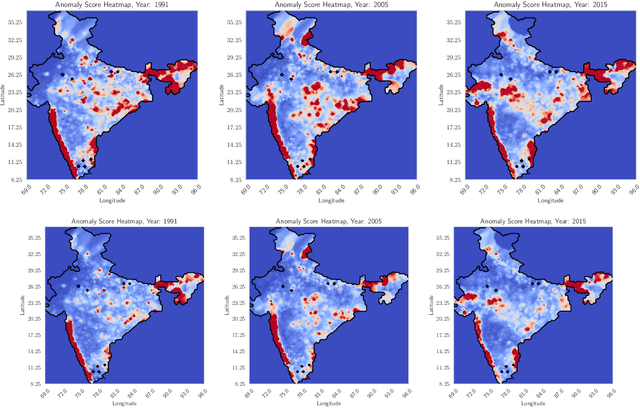

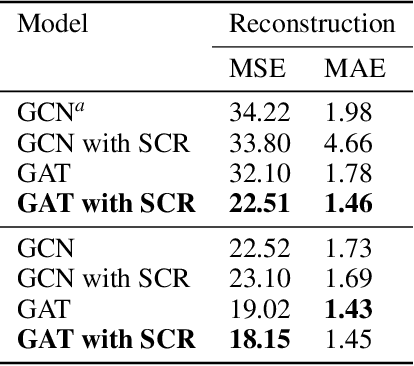

Abstract: We introduce a novel Graph Attention Autoencoder (GAE) with spatial regularization to address the challenge of scalable anomaly detection in spatiotemporal rainfall data across India from 1990 to 2015. Our model leverages a Graph Attention Network (GAT) to capture spatial dependencies and temporal dynamics in the data, further enhanced by a spatial regularization term that enforces geographic coherence. We construct two graph datasets employing rainfall, pressure, and temperature attributes from the India Meteorological Department and ERA5 Reanalysis on Single Levels, respectively. Our network operates on graph representations of the data, where nodes represent geographic locations and edges, inferred through event synchronization, denote significant co-occurrences of rainfall events. Through extensive experiments, we demonstrate that our GAE effectively identifies anomalous rainfall patterns across the Indian landscape. Our work paves the way for sophisticated spatiotemporal anomaly detection methodologies in climate science, contributing to better climate change preparedness and response strategies.
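The abstract does not give the form of the spatial regularization term. A minimal sketch, assuming one common choice (a graph-smoothness penalty that pulls the embeddings of connected nodes together), is shown here in NumPy. The function names, the weight `lam`, and the per-node scoring rule are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def spatially_regularized_loss(x, x_hat, z, edges, weights, lam=0.1):
    """Reconstruction error plus a graph-smoothness penalty (assumed form).

    x, x_hat : (n_nodes, n_features) inputs and autoencoder reconstructions
    z        : (n_nodes, n_latent) node embeddings
    edges    : (n_edges, 2) integer node index pairs
    weights  : (n_edges,) non-negative edge weights
    lam      : hypothetical trade-off weight for the penalty
    """
    recon = np.mean((x - x_hat) ** 2)
    i, j = edges[:, 0], edges[:, 1]
    # Weighted squared distance between embeddings of connected nodes.
    sq_dist = np.sum((z[i] - z[j]) ** 2, axis=1)
    smooth = np.sum(weights * sq_dist) / len(edges)
    return recon + lam * smooth

def anomaly_scores(x, x_hat):
    """Per-node reconstruction error; larger values flag candidate anomalies."""
    return np.mean((x - x_hat) ** 2, axis=1)
```

With this form, two locations linked by an edge are penalized for having very different embeddings, which is one way to encode geographic coherence.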

Bayesian Deep Learning Hyperparameter Search for Robust Function Mapping to Polynomials with Noise

Jun 23, 2021

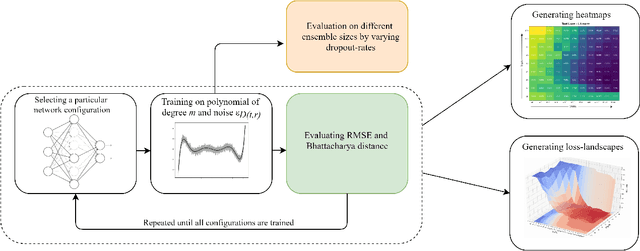

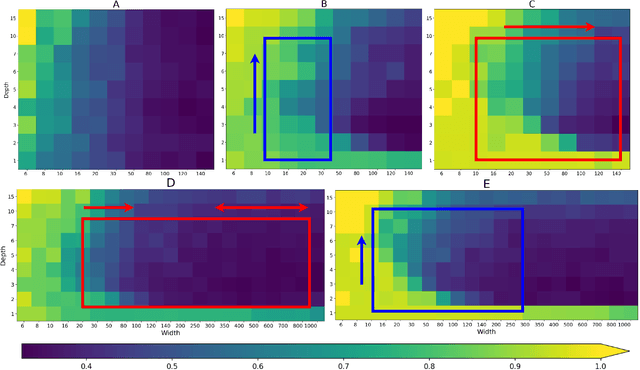

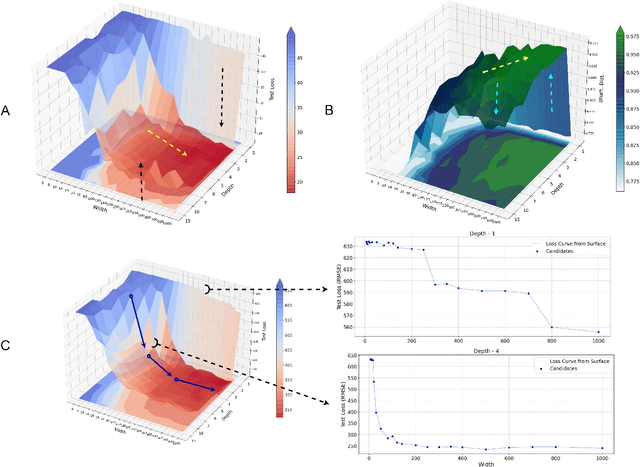

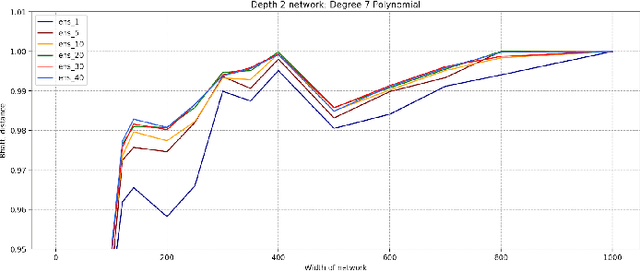

Abstract: Advances in neural architecture search, as well as explainability and interpretability of connectionist architectures, have been reported in the recent literature. However, our understanding of how to design Bayesian Deep Learning (BDL) hyperparameters, specifically the depth, width, and ensemble size, for robust function mapping with uncertainty quantification is still emerging. This paper attempts to further our understanding by mapping Bayesian connectionist representations to polynomials of different orders with varying noise types and ratios. We examine the noise-contaminated polynomials to search for the combination of hyperparameters that can extract the underlying polynomial signals while quantifying uncertainties based on the noise attributes. Specifically, we study whether an appropriate neural architecture and ensemble configuration can be found to detect a signal of any n-th order polynomial contaminated with noise of different distributions, signal-to-noise ratios (SNR), and other varying attributes. Our results suggest the possible existence of an optimal network depth for prediction skill and an optimal number of ensembles for uncertainty quantification. However, optimality is not discernible for width, even though the performance gain diminishes as width increases at high values of width. Our experiments and insights can provide direction for understanding the theoretical properties of BDL representations and for designing practical solutions.
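As an illustration of the experimental setup described above, the following sketch generates a polynomial signal contaminated with noise at a chosen SNR. It assumes SNR is the linear ratio of signal variance to noise variance; the abstract does not state the exact definition, and `noisy_polynomial` and its parameters are hypothetical names.

```python
import numpy as np

def noisy_polynomial(coeffs, x, snr, rng, noise="gaussian"):
    """Return (signal, noisy_signal) for a polynomial at a target SNR.

    coeffs : polynomial coefficients, highest degree first (np.polyval order)
    snr    : assumed definition var(signal) / var(noise), as a linear ratio
    """
    signal = np.polyval(coeffs, x)
    noise_std = np.sqrt(np.var(signal) / snr)
    if noise == "gaussian":
        eps = rng.normal(0.0, noise_std, size=x.shape)
    elif noise == "uniform":
        # A uniform variable on [-a, a] has standard deviation a / sqrt(3).
        half_width = noise_std * np.sqrt(3.0)
        eps = rng.uniform(-half_width, half_width, size=x.shape)
    else:
        raise ValueError(f"unsupported noise type: {noise}")
    return signal, signal + eps

def ensemble_summary(member_predictions):
    """Mean and spread across ensemble members (axis 0 indexes members)."""
    preds = np.asarray(member_predictions)
    return preds.mean(axis=0), preds.std(axis=0)
```

A study of depth, width, and ensemble size could sweep those hyperparameters over datasets produced this way and compare the ensemble mean against `signal` and the ensemble spread against the injected noise level.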

Enhancing predictive skills in physically-consistent way: Physics Informed Machine Learning for Hydrological Processes

Apr 22, 2021

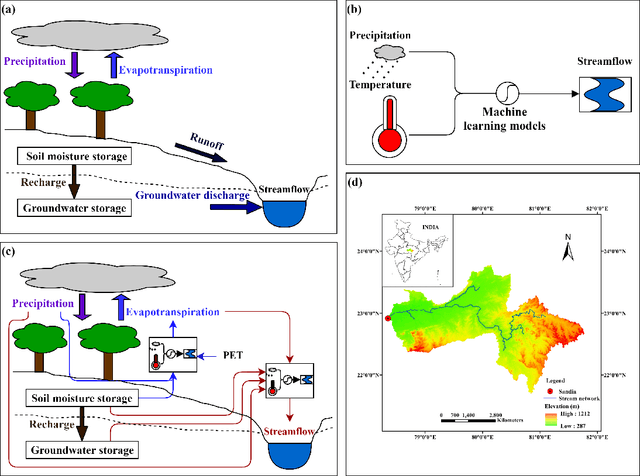

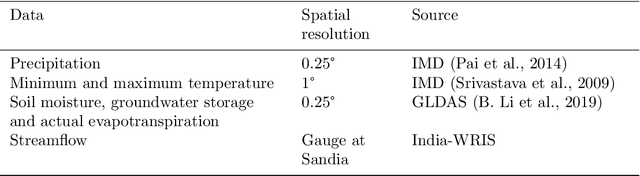

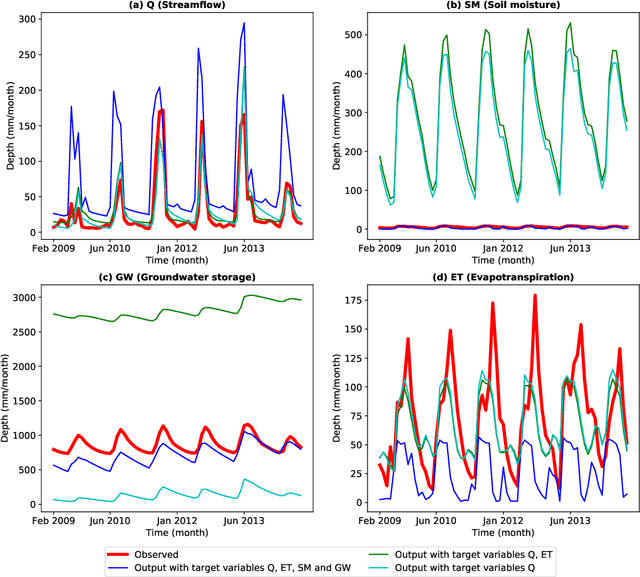

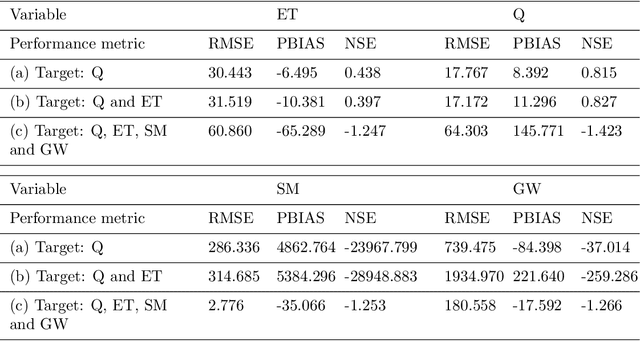

Abstract: Current approaches to hydrological modeling often rely on either physics-based or data-science methods, including Machine Learning (ML) algorithms. While physics-based models tend to have a rigid structure, resulting in unrealistic parameter values in certain instances, ML algorithms establish the input-output relationship while ignoring the constraints imposed by well-known physical processes. While there is a notion that physics-based models enable better process understanding and ML algorithms exhibit better predictive skills, scientific knowledge that does not add to predictive ability may be deceptive. Hence, there is a need for a hybrid modeling approach that couples ML algorithms and physics-based models in a synergistic manner. Here we develop a Physics Informed Machine Learning (PIML) model that combines the process understanding of a conceptual hydrological model with the predictive abilities of state-of-the-art ML models. We apply the proposed model to predict the monthly time series of the target variable (streamflow) and an intermediate variable (actual evapotranspiration) in the Narmada river basin in India. Our results show that the PIML model outperforms both a purely conceptual model ($abcd$ model) and ML algorithms while ensuring physical consistency in the outputs, as validated through water balance analysis. The systematic approach for combining conceptual model structure with ML algorithms could be used to improve the predictive accuracy of crucial hydrological processes important for flood risk assessment.
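For readers unfamiliar with the $abcd$ model, the sketch below shows one monthly step of its commonly cited formulation (Thomas, 1981) together with a water balance residual of the kind used to check physical consistency. The abstract does not say which variant or units the authors use, so the equations, the function names, and the mm/month convention are assumptions for illustration only.

```python
import math

def abcd_step(P, PET, S_prev, G_prev, a, b, c, d):
    """One monthly step of the abcd water balance model (assumed standard form).

    P, PET         : precipitation and potential evapotranspiration (e.g. mm/month)
    S_prev, G_prev : soil moisture and groundwater storage at the previous step
    a, b, c, d     : model parameters, with 0 < a <= 1, b > 0, 0 <= c <= 1, d >= 0
    """
    W = P + S_prev                                  # available water
    half = (W + b) / (2.0 * a)
    Y = half - math.sqrt(half * half - W * b / a)   # evapotranspiration opportunity
    S = Y * math.exp(-PET / b)                      # updated soil moisture storage
    E = Y - S                                       # actual evapotranspiration
    surplus = W - Y                                 # water leaving the soil store
    G = (G_prev + c * surplus) / (1.0 + d)          # updated groundwater storage
    Q = (1.0 - c) * surplus + d * G                 # direct runoff plus baseflow
    return {"E": E, "Q": Q, "S": S, "G": G}

def water_balance_residual(P, out, S_prev, G_prev):
    """P - E - Q minus the change in total storage; zero if mass is conserved."""
    dS = out["S"] - S_prev
    dG = out["G"] - G_prev
    return P - out["E"] - out["Q"] - dS - dG
```

A hybrid model that replaces or corrects any of these fluxes with ML predictions can apply the same residual to its outputs: a residual far from zero signals a physically inconsistent prediction.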

Augmented Convolutional LSTMs for Generation of High-Resolution Climate Change Projections

Sep 23, 2020

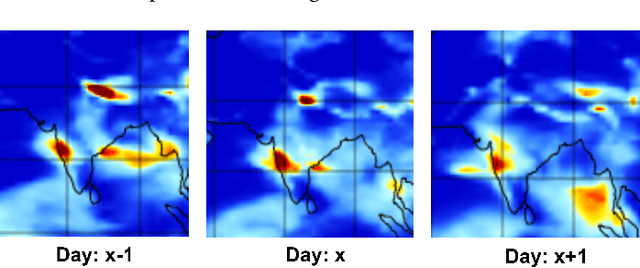

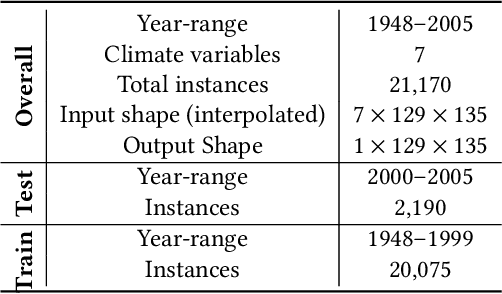

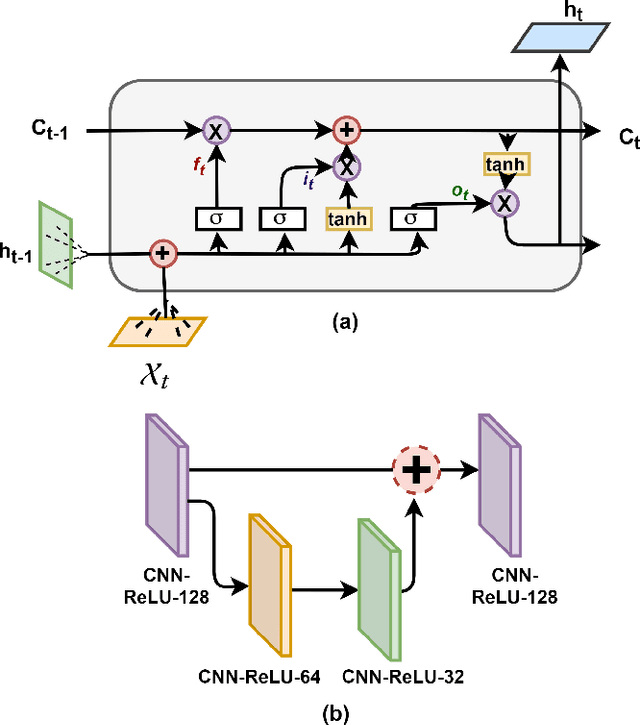

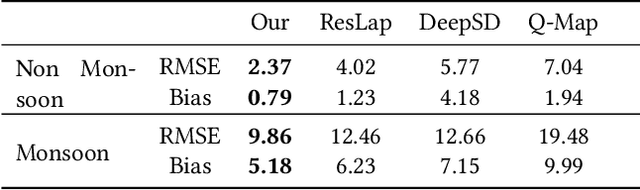

Abstract: Projections of changes in extreme indices of climate variables such as temperature and precipitation are critical for assessing the potential impacts of climate change on human-made and natural systems, including critical infrastructures and ecosystems. While impact assessment and adaptation planning rely on high-resolution projections (typically on the order of a few kilometers), state-of-the-art Earth System Models (ESMs) are available at spatial resolutions of a few hundred kilometers. Current solutions for obtaining high-resolution projections from ESMs include downscaling approaches that use coarse-scale information to make predictions at local scales. Complex and non-linear interdependence among local climate variables (e.g., temperature and precipitation) and large-scale predictors (e.g., pressure fields) motivates the use of neural network-based super-resolution architectures. In this work, we present an auxiliary-variable-informed spatio-temporal neural architecture for statistical downscaling. The current study performs daily downscaling of precipitation from an ESM output at 1.15 degrees (~115 km) to 0.25 degrees (25 km) over the world's most climatically diversified country, India. We showcase significant improvement over three popular state-of-the-art baselines, with a better ability to predict extreme events. To facilitate reproducible research, we make all the code, processed datasets, and trained models publicly available.
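To make the downscaling task concrete, the sketch below regrids a coarse field onto a finer latitude-longitude grid with plain bilinear interpolation. This is a naive reference point only, not the proposed architecture and not necessarily one of the three baselines the abstract mentions; `bilinear_regrid` and the grid extents in the usage example are hypothetical.

```python
import numpy as np

def bilinear_regrid(field, src_lat, src_lon, dst_lat, dst_lon):
    """Bilinear interpolation of a 2-D field from a coarse to a fine grid.

    field            : (len(src_lat), len(src_lon)) array on the coarse grid
    src_lat, src_lon : increasing 1-D coordinates of the coarse grid
    dst_lat, dst_lon : 1-D coordinates of the fine grid; points outside the
                       source range take the nearest edge value (np.interp)
    """
    # Interpolate along longitude for every coarse latitude row.
    tmp = np.stack([np.interp(dst_lon, src_lon, row) for row in field])
    # Then interpolate along latitude for every fine longitude column.
    return np.stack([np.interp(dst_lat, src_lat, col) for col in tmp.T], axis=1)

# Usage: a 1.15-degree grid regridded to 0.25 degrees (illustrative extents).
src_lat = 8.0 + 1.15 * np.arange(5)
src_lon = 68.0 + 1.15 * np.arange(6)
dst_lat = 8.0 + 0.25 * np.arange(19)
dst_lon = 68.0 + 0.25 * np.arange(23)
coarse = 2.0 * src_lat[:, None] + 3.0 * src_lon[None, :]
fine = bilinear_regrid(coarse, src_lat, src_lon, dst_lat, dst_lon)
```

Interpolation of this kind only smooths the coarse field and cannot add local detail or sharpen extremes, which is the gap that learned super-resolution approaches aim to close.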
