Uddipan Mukherjee

A Visual Domain Transfer Learning Approach for Heartbeat Sound Classification

Jul 28, 2021

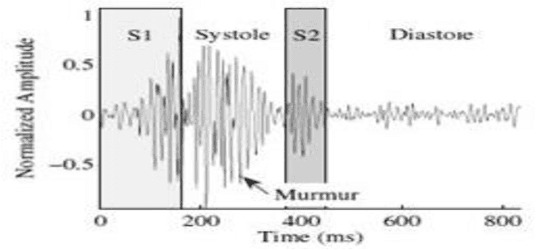

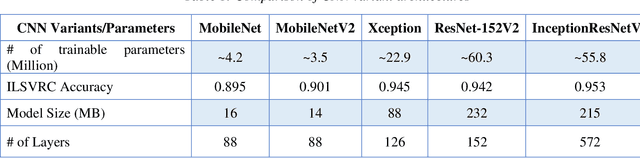

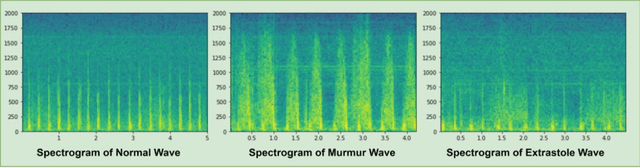

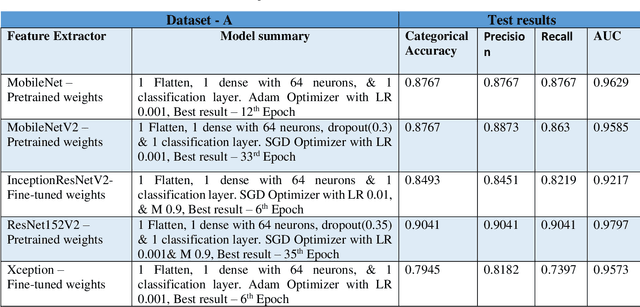

Abstract: Heart disease is the most common cause of human mortality, accounting for almost one-third of deaths worldwide. Detecting the disease early increases the patient's chances of survival, and there are several ways in which signs of heart disease can be detected early. This research proposes converting cleansed and normalized heart sounds into visual mel-scale spectrograms and then using visual-domain transfer learning approaches to automatically extract features and classify the heart sounds. Previous studies found that the spectrograms of different types of heart sounds are visually distinguishable to the human eye, which motivated this study to experiment with visual-domain classification approaches for automated heart sound classification. It uses convolutional neural network-based architectures, e.g. ResNet and MobileNetV2, as automated feature extractors from spectrograms. These well-accepted models from the image domain were shown to learn generalized feature representations of cardiac sounds collected from different environments with varying amplitude and noise levels. Because the chosen dataset is unbalanced, the model evaluation criteria were categorical accuracy, precision, recall, and AUROC. The proposed approach was implemented on datasets A and B of the PASCAL heart sound collection and achieved ~90% categorical accuracy and an AUROC of ~0.97 on both sets.
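As a rough illustration of the mel scale behind such spectrograms, the sketch below maps frequencies to mels and spaces filter band edges evenly on that scale. The abstract does not say which mel convention, band count, or frequency range the paper uses; the HTK-style formula, the function names, and the values `n_mels=8` and `f_max=2000.0` are all assumptions made for illustration.

```python
import math


def hz_to_mel(f_hz):
    """Map frequency in Hz to mels (HTK-style formula, assumed here)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_band_edges(n_mels, f_min, f_max):
    """Return n_mels + 2 band-edge frequencies in Hz, evenly spaced in mels."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_mels + 2)]


# Hypothetical settings: 8 bands covering 0 to 2000 Hz.
edges = mel_band_edges(n_mels=8, f_min=0.0, f_max=2000.0)
print([round(e, 1) for e in edges])
```

The band edges come out narrower at low frequencies and wider at high ones, which is the property that makes a mel spectrogram allocate more resolution to the lower end of the spectrum.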

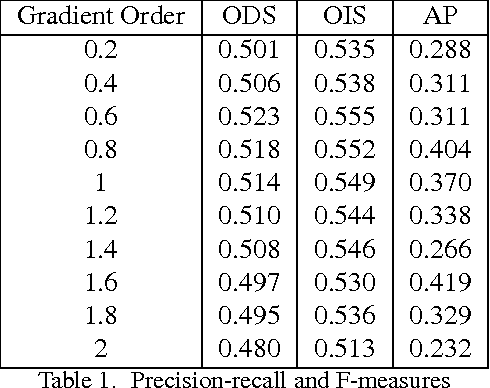

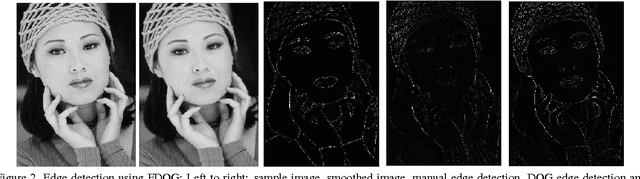

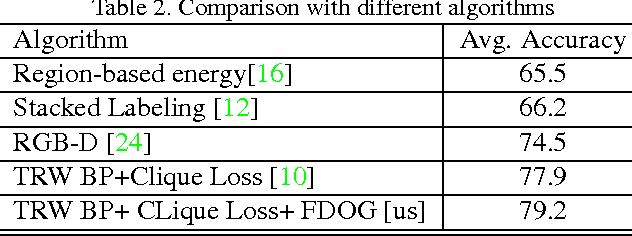

On Image segmentation using Fractional Gradients-Learning Model Parameters using Approximate Marginal Inference

May 07, 2016

Abstract: Estimates of image gradients play a ubiquitous role in image segmentation and classification problems, since gradients directly relate to the boundaries or edges of a scene. This paper proposes a unified approach to gradient estimation based on fractional calculus that is computationally cheap and readily applicable to any existing algorithm that relies on image gradients. We show experiments on edge detection and image segmentation on the Stanford Backgrounds Dataset, where these improved local gradients outperform the state of the art, achieving 79.2% average accuracy.
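To make the idea of a fractional-order gradient concrete, here is a minimal sketch of one standard discretization, the Grünwald-Letnikov backward difference, applied to a single image row. The abstract does not state which fractional derivative definition the paper adopts, so this choice, the function names, and the sample row are assumptions for illustration only.

```python
def gl_weights(alpha, n):
    """First n Grunwald-Letnikov weights w_k = (-1)^k * binom(alpha, k)."""
    w = [1.0]
    for k in range(1, n):
        # Recurrence: w_k = w_{k-1} * (1 - (alpha + 1) / k)
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def fractional_diff(signal, alpha, h=1.0):
    """Backward fractional difference of order alpha along a 1-D signal."""
    w = gl_weights(alpha, len(signal))
    out = []
    for i in range(len(signal)):
        # Weighted sum over the current sample and all earlier samples.
        acc = sum(w[k] * signal[i - k] for k in range(i + 1))
        out.append(acc / (h ** alpha))
    return out


# Hypothetical image row containing a single step edge.
row = [0.0, 0.0, 1.0, 1.0, 1.0]
print(fractional_diff(row, 1.0))  # order 1: ordinary backward difference
print(fractional_diff(row, 0.5))  # order 0.5: response decays after the edge
```

With `alpha = 1` the weights collapse to `[1, -1, 0, ...]`, so the function reduces to the usual first difference; non-integer orders keep a tail of nonzero weights, so each output depends on a longer history of pixels.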
