Ubaldo Ramon

LDDMM meets GANs: Generative Adversarial Networks for diffeomorphic registration

Nov 24, 2021

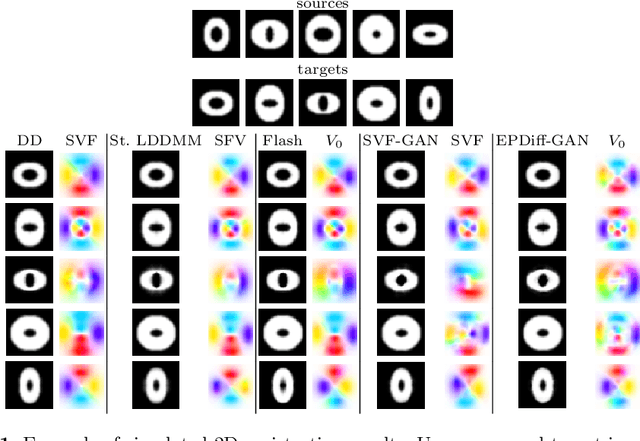

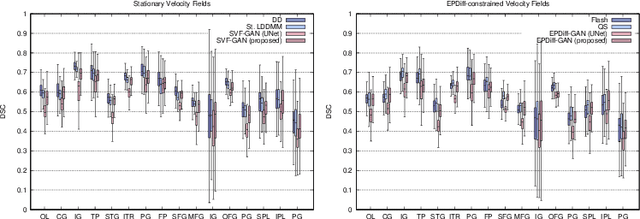

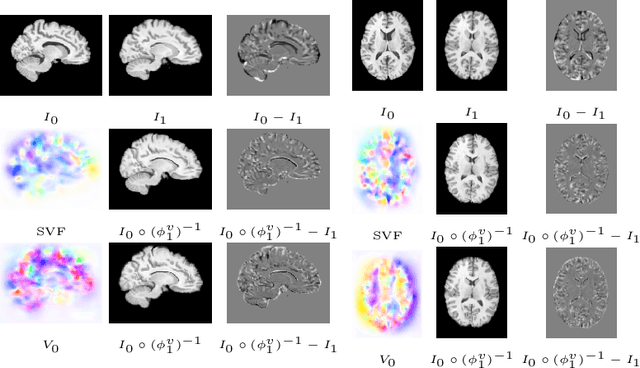

Abstract: The purpose of this work is to contribute to the state of the art of deep-learning methods for diffeomorphic registration. We propose an adversarial learning LDDMM method for pairs of 3D mono-modal images based on Generative Adversarial Networks. The method is inspired by the recent literature on deformable image registration with adversarial learning. We combine the best-performing generative, discriminative, and adversarial ingredients from the state of the art within the LDDMM paradigm. We have successfully implemented two models, one with the stationary and one with the EPDiff-constrained non-stationary parameterization of diffeomorphisms. Our unsupervised and data-hungry approach has shown competitive performance with respect to a benchmark supervised and rich-data approach. In addition, our method has shown results similar to those of model-based methods, with a computational time under one second.
