Tomas Cabezon Pedroso

Feature space exploration as an alternative for design space exploration beyond the parametric space

Jan 26, 2023

Abstract: This paper compares the parametric design space with a feature space generated by extracting design features using deep learning (DL), as an alternative approach to design space exploration. In this comparison, the parametric design space is constructed by creating a synthetic dataset of 15,000 elements using a parametric algorithm and reducing its dimensions for visualization. The feature space, a reduced-dimensionality vector space of embedded data features, is constructed by training a DL model on the same dataset. We analyze and compare the extracted design features by reducing their dimension and visualizing the results. We demonstrate that the parametric design space is narrow in how it describes design solutions because it is based on the combination of individual parameters. In comparison, we observe that the feature design space can intuitively represent design solutions according to complex parameter relationships. Based on our results, we discuss the potential of translating the features learned by DL models into a mechanism for intuitive design space exploration and visualization of possible design solutions.
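As a rough illustration of the "reducing its dimensions for visualization" step, the sketch below projects a synthetic parameter table onto two dimensions. The abstract does not say which reduction method the paper uses; PCA is assumed here purely for illustration, and the six-parameter random dataset and the `pca_2d` helper are hypothetical stand-ins for the paper's parametric algorithm.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X onto their first two principal components."""
    # Center each column so the projection captures variance, not offset.
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:2].T

rng = np.random.default_rng(0)
# Hypothetical stand-in dataset: 15,000 designs with 6 parameters each.
params = rng.uniform(0.0, 1.0, size=(15000, 6))
coords = pca_2d(params)  # shape (15000, 2), ready for a scatter plot
```

The same helper could be applied to feature vectors taken from a trained model, so that both spaces are reduced in a comparable way before plotting.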

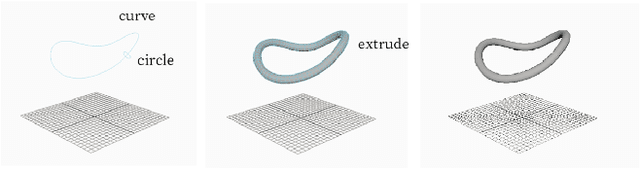

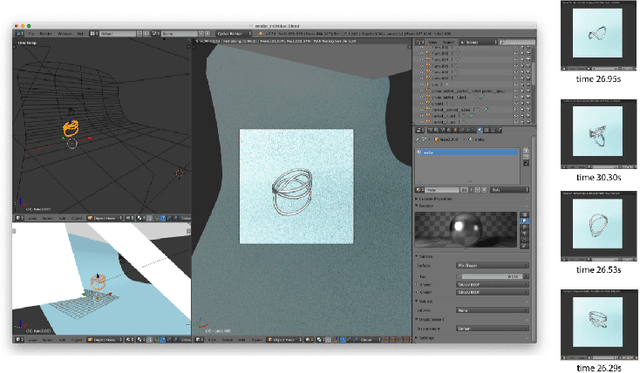

Capabilities, Limitations and Challenges of Style Transfer with CycleGANs: A Study on Automatic Ring Design Generation

Jul 18, 2022

Abstract: Rendering programs have changed the design process completely, as they make it possible to see how products will look before they are fabricated. However, the rendering process is complicated and takes a significant amount of time, not only in the rendering itself but also in setting up the scene. Materials, lights and cameras need to be set in order to get the best quality results, and even then the optimal output may not be obtained in the first render. All of this makes rendering a tedious process. Since Goodfellow et al. introduced Generative Adversarial Networks (GANs) in 2014 [1], they have been used to generate computer-assigned synthetic data, from non-existing human faces to medical data analysis or image style transfer. GANs have been used to transfer image textures from one domain to another. However, paired data from both domains was needed. When Zhu et al. introduced the CycleGAN model, the elimination of this expensive constraint made it possible to transform an image from one domain into another without the need for paired data. This work validates the applicability of CycleGANs to style transfer from an initial sketch to a final 2D render that represents a 3D design, a step that is paramount in every product design process. We investigate the possibility of including CycleGANs as part of the design pipeline, more precisely, applied to the rendering of ring designs. Our contribution addresses a crucial part of the process, as it allows the customer to see the final product before buying. This work sets a basis for future research, showing the possibilities of GANs in design and establishing a starting point for novel applications in craft design.
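For context on how CycleGAN removes the need for paired data, the cycle-consistency term from Zhu et al.'s formulation is sketched below. The symbols are those of the general CycleGAN setup, not necessarily the notation or exact loss used in this work: $G: X \to Y$ would map sketches to renders, $F: Y \to X$ would map renders back to sketches.

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]
$$

This term is added to the usual adversarial losses for both mappings, so that an image translated to the other domain and back should approximately reproduce the original.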

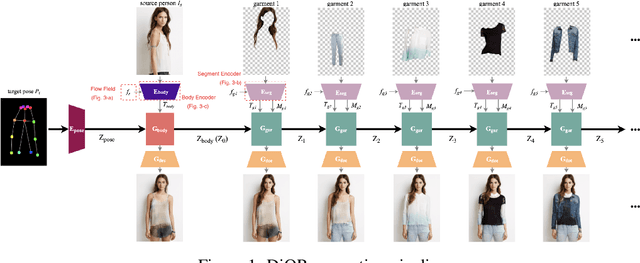

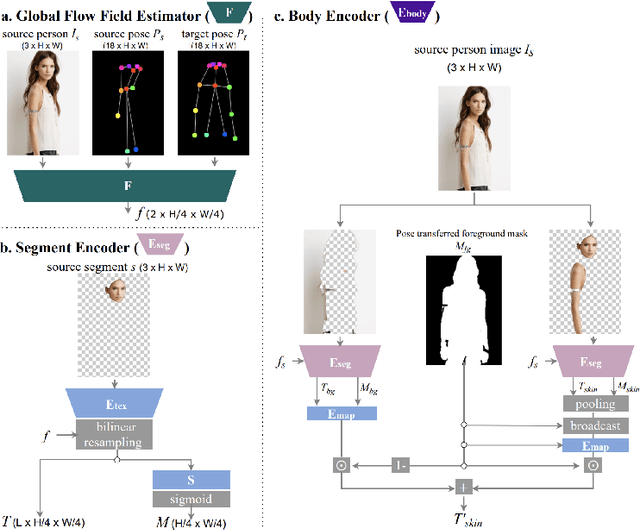

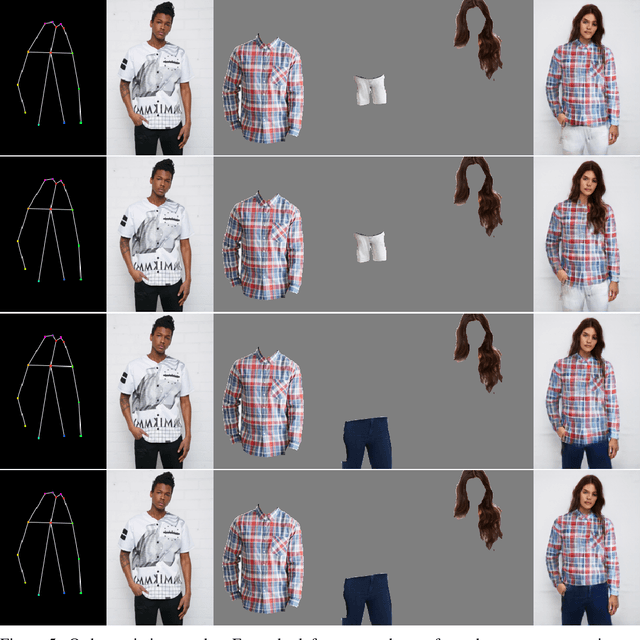

Controllable Garment Transfer

Apr 05, 2022

Abstract: Image-based garment transfer replaces the garment on the target human with the desired garment; this enables users to virtually view themselves in the desired garment. To this end, many approaches based on generative models have been proposed and have shown promising results. However, most fail to provide the user with on-the-fly garment modification functionality. We aim to add this customizable option of "garment tweaking" to our model to control garment attributes, such as sleeve length, waist width, and garment texture.
