Tom De Smedt

Online anti-Semitism across platforms

Dec 14, 2021

Abstract:We created a fine-grained AI system for the detection of anti-Semitism. This Explainable AI will identify English and German anti-Semitic expressions of dehumanization, verbal aggression and conspiracies in online social media messages across platforms, to support high-level decision making.

* 6 pages

A feast for trolls -- Engagement analysis of counternarratives against online toxicity

Nov 13, 2021

Abstract:This report provides an engagement analysis of counternarratives against online toxicity. Between February 2020 and July 2021, we observed over 15 million toxic messages on social media identified by our fine-grained, multilingual detection AI. Over 1,000 dashboard users responded to toxic messages with combinations of visual memes, text, or AI-generated text, or they reported content. This leads to new, real-life insights on self-regulatory approaches for the mitigation of online hate.

* 15 pages

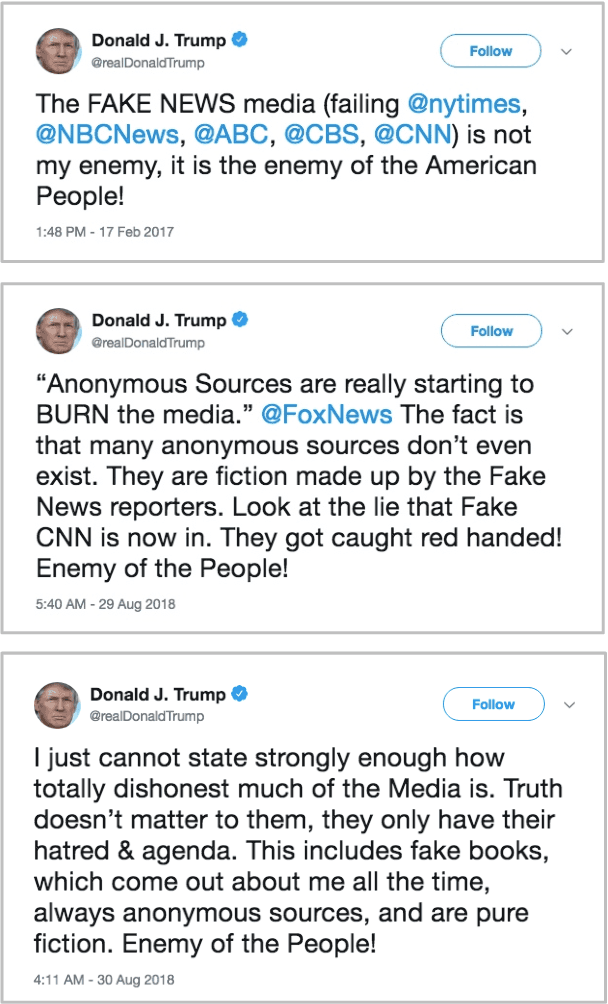

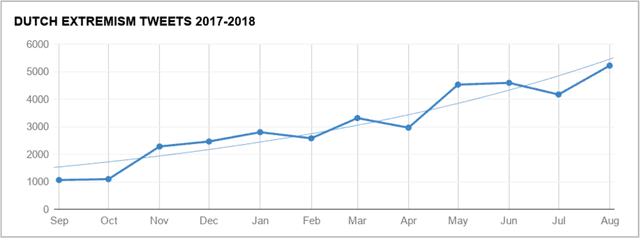

Right-wing German Hate Speech on Twitter: Analysis and Automatic Detection

Oct 16, 2019

Abstract:Discussion about the social network Twitter often concerns its role in political discourse, involving the question of when an expression of opinion becomes offensive, immoral, and/or illegal, and how to deal with it. Given the growing amount of offensive communication on the internet, there is a demand for new technology that can automatically detect hate speech, to assist content moderation by humans. This comes with new challenges, such as defining exactly what is free speech and what is illegal in a specific country, and knowing exactly what the linguistic characteristics of hate speech are. To shed light on the German situation, we analyzed over 50,000 right-wing German hate tweets posted between August 2017 and April 2018, at the time of the 2017 German federal elections, using both quantitative and qualitative methods. In this paper, we discuss the results of the analysis and demonstrate how the insights can be employed for the development of automatic detection systems.

Multilingual Cross-domain Perspectives on Online Hate Speech

Sep 11, 2018

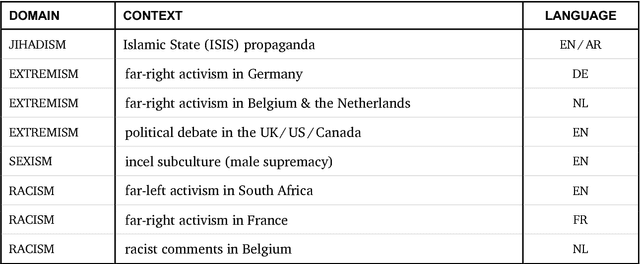

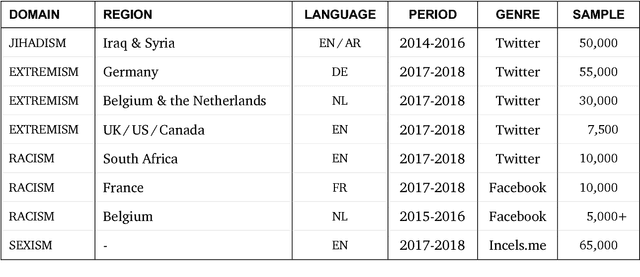

Abstract:In this report, we present a study of eight corpora of online hate speech, by demonstrating the NLP techniques that we used to collect and analyze the jihadist, extremist, racist, and sexist content. Analysis of the multilingual corpora shows that the different contexts share certain characteristics in their hateful rhetoric. To expose the main features, we have focused on text classification, text profiling, keyword and collocation extraction, along with manual annotation and qualitative study.

* 24 pages

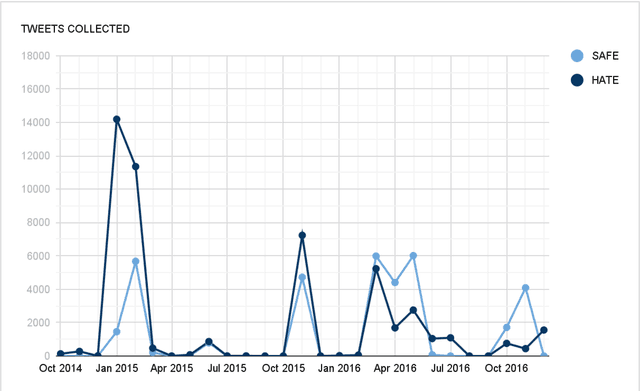

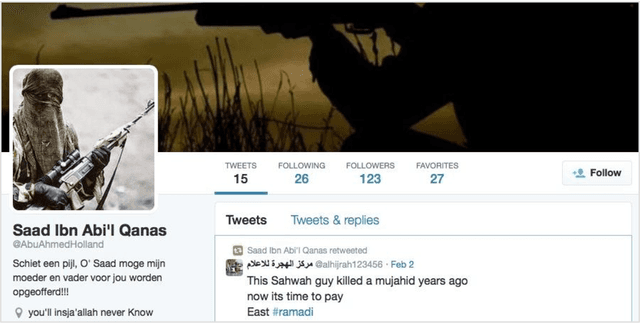

Automatic Detection of Online Jihadist Hate Speech

Mar 13, 2018

Abstract:We have developed a system that automatically detects online jihadist hate speech with over 80% accuracy, by using techniques from Natural Language Processing and Machine Learning. The system is trained on a corpus of 45,000 subversive Twitter messages collected from October 2014 to December 2016. We present a qualitative and quantitative analysis of the jihadist rhetoric in the corpus, examine the network of Twitter users, outline the technical procedure used to train the system, and discuss examples of use.

* 31 pages

Modeling Creativity: Case Studies in Python

Aug 10, 2014

Abstract:Modeling Creativity (doctoral dissertation, 2013) explores how creativity can be represented using computational approaches. Our aim is to construct computer models that exhibit creativity in an artistic context, that is, models capable of generating or evaluating an artwork (visual or linguistic), an interesting new idea, or a subjective opinion. The research was conducted in 2008-2012 at the Computational Linguistics Research Group (CLiPS, University of Antwerp) under the supervision of Prof. Walter Daelemans. Prior research was also conducted at the Experimental Media Research Group (EMRG, St. Lucas University College of Art & Design Antwerp) under the supervision of Lucas Nijs. Modeling Creativity examines creativity from a number of different perspectives: from its origins in nature, which is essentially blind, to humans and machines, and from generating creative ideas to evaluating and learning their novelty and usefulness. We will use a hands-on approach with case studies and examples in the Python programming language.