Tolani Britton

Difficult Lessons on Social Prediction from Wisconsin Public Schools

Apr 13, 2023

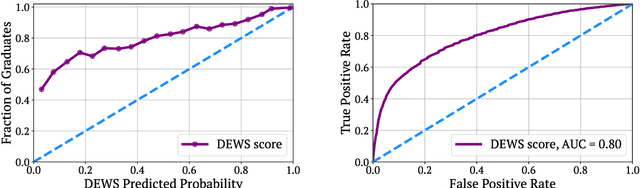

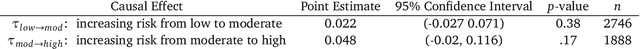

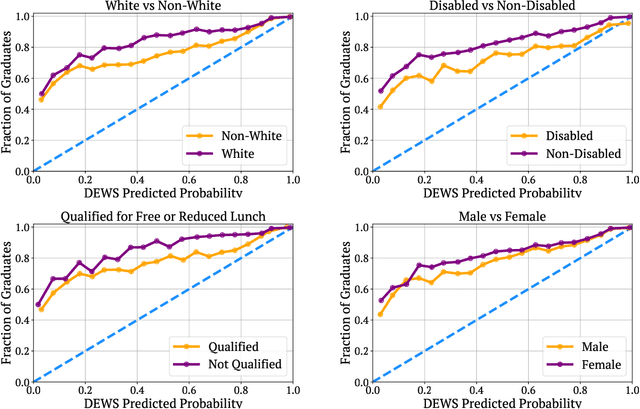

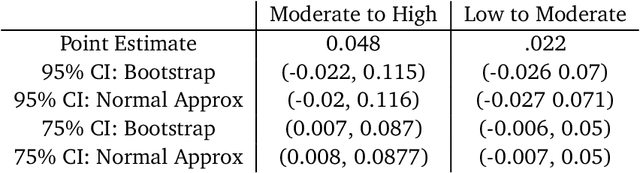

Abstract: Early warning systems (EWS) are prediction algorithms that have recently taken a central role in efforts to improve graduation rates in public schools across the US. These systems assist in targeting interventions at individual students by predicting which students are at risk of dropping out. Despite significant investments and adoption, there remain significant gaps in our understanding of the efficacy of EWS. In this work, we draw on nearly a decade's worth of data from a system used throughout Wisconsin to provide the first large-scale evaluation of the long-term impact of EWS on graduation outcomes. We present evidence that risk assessments made by the prediction system are highly accurate, including for students from marginalized backgrounds. Despite the system's accuracy and widespread use, we find no evidence that it has led to improved graduation rates. We surface a robust statistical pattern that can explain why these seemingly contradictory insights hold. Namely, environmental features, measured at the level of schools, contain significant signal about dropout risk. Within each school, however, academic outcomes are essentially independent of individual student performance. This empirical observation indicates that assigning all students within the same school the same probability of graduation is a nearly optimal prediction. Our work provides an empirical backbone for the robust, qualitative understanding among education researchers and policy-makers that dropout is structurally determined. The primary barrier to improving outcomes lies not in identifying students at risk of dropping out within specific schools, but rather in overcoming structural differences across different school districts. Our findings indicate that we should carefully evaluate the decision to fund early warning systems without also devoting resources to interventions tackling structural barriers.

Lost in Translation: Reimagining the Machine Learning Life Cycle in Education

Sep 08, 2022

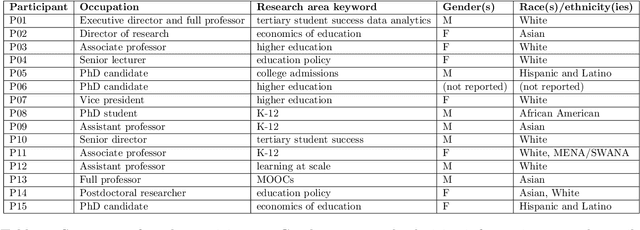

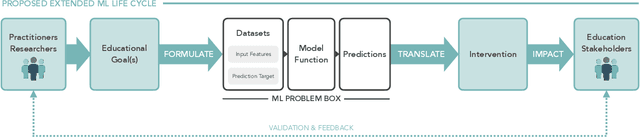

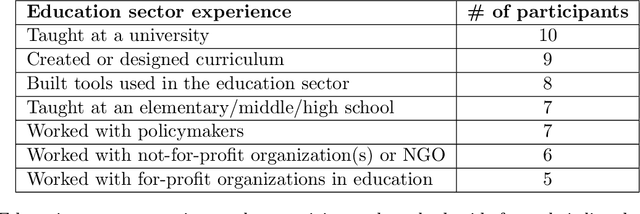

Abstract: Machine learning (ML) techniques are increasingly prevalent in education, from their use in predicting student dropout, to assisting in university admissions, and facilitating the rise of MOOCs. Given the rapid growth of these novel uses, there is a pressing need to investigate how ML techniques support long-standing education principles and goals. In this work, we shed light on this complex landscape drawing on qualitative insights from interviews with education experts. These interviews comprise in-depth evaluations of ML for education (ML4Ed) papers published in preeminent applied ML conferences over the past decade. Our central research goal is to critically examine how the stated or implied education and societal objectives of these papers are aligned with the ML problems they tackle. That is, to what extent do the technical problem formulation, objectives, approach, and interpretation of results align with the education problem at hand? We find that a cross-disciplinary gap exists and is particularly salient in two parts of the ML life cycle: the formulation of an ML problem from education goals and the translation of predictions to interventions. We use these insights to propose an extended ML life cycle, which may also apply to the use of ML in other domains. Our work joins a growing number of meta-analytical studies across education and ML research, as well as critical analyses of the societal impact of ML. Specifically, it fills a gap between the prevailing technical understanding of machine learning and the perspective of education researchers working with students and in policy.
