Toby Godfrey

MARLIN: Multi-Agent Reinforcement Learning Guided by Language-Based Inter-Robot Negotiation

Oct 18, 2024

Abstract: Multi-agent reinforcement learning is a key method for training multi-robot systems over a series of episodes in which robots are rewarded or punished according to their performance; only once the system is trained to a suitable standard is it deployed in the real world. If the system is not trained enough, the task will likely not be completed and could pose a risk to the surrounding environment. Therefore, reaching high performance in a shorter training period can lead to significant reductions in time and resource consumption. We introduce Multi-Agent Reinforcement Learning guided by Language-based Inter-Robot Negotiation (MARLIN), which makes the training process both faster and more transparent. We equip robots with large language models that negotiate and debate the task, producing a plan that is used to guide the policy during training. We dynamically switch between using reinforcement learning and the negotiation-based approach throughout training. This offers an increase in training speed when compared to standard multi-agent reinforcement learning and allows the system to be deployed to physical hardware earlier. As robots negotiate in natural language, we can better understand the behaviour of the robots individually and as a collective. We compare the performance of our approach to multi-agent reinforcement learning and a large language model to show that our hybrid method trains faster at little cost to performance.

Conversational Language Models for Human-in-the-Loop Multi-Robot Coordination

Feb 29, 2024

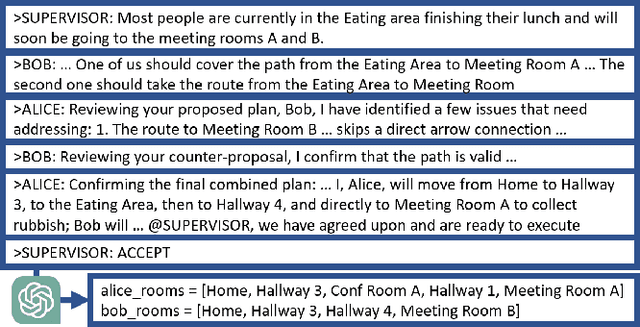

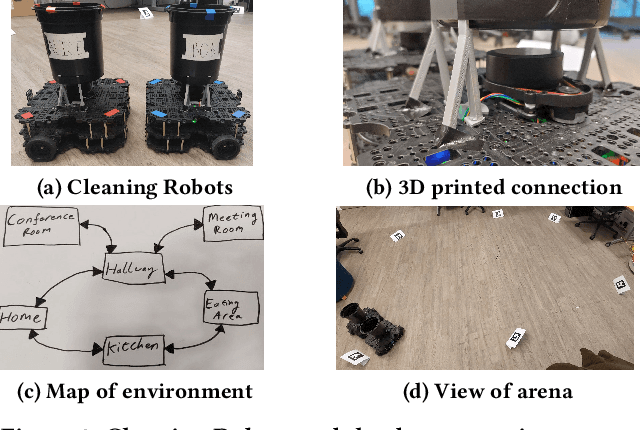

Abstract: With the increasing prevalence and diversity of robots interacting in the real world, there is a need for flexible, on-the-fly planning and cooperation. Large Language Models are starting to be explored in a multimodal setup for communication, coordination, and planning in robotics. Existing approaches generally use a single agent building a plan, or have multiple homogeneous agents coordinating for a simple task. We present a decentralised, dialogical approach in which a team of agents with different abilities plans solutions through peer-to-peer and human-robot discussion. We suggest that argument-style dialogues are an effective way to facilitate adaptive use of each agent's abilities within a cooperative team. Two robots discuss how to solve a cleaning problem set by a human, define roles, and agree on paths they each take. Each step can be interrupted by a human advisor and agents check their plans with the human. Agents then execute this plan in the real world, collecting rubbish from people in each room. Our implementation uses text at every step, maintaining transparency and effective human-multi-robot interaction.
