Timothy F. Cootes

Evaluating Registration Without Ground Truth

Feb 24, 2020

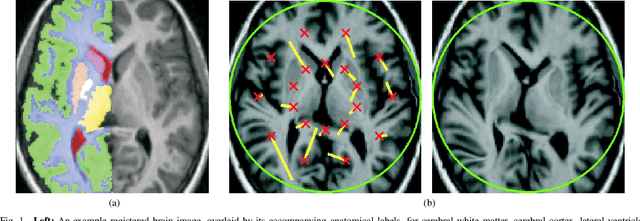

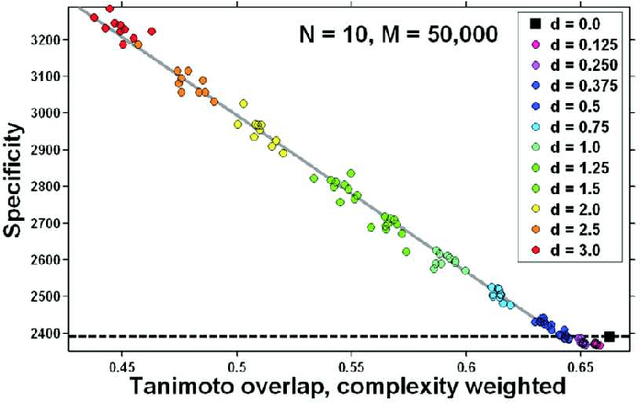

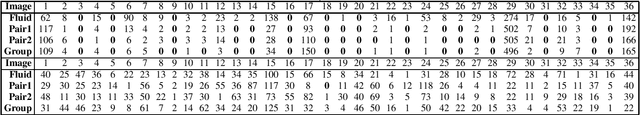

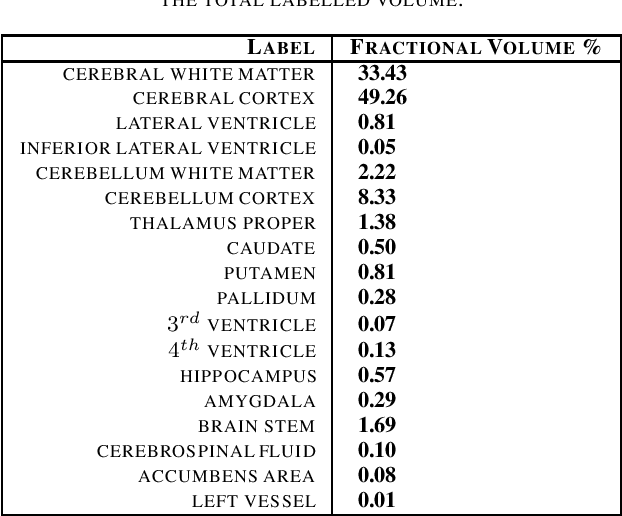

Abstract: We present a generic method for assessing the quality of non-rigid registration (NRR) algorithms that does not depend on the existence of any ground truth but depends solely on the data itself. The data is a set of images. The output of any NRR of such a set is a dense correspondence across the whole set. Given such a dense correspondence, it is possible to build various generative statistical models of appearance variation across the set. We show that evaluating the quality of the registration can be mapped to the problem of evaluating the quality of the resultant statistical model. Evaluating the model, in turn, entails a comparison between the model and the image data used to construct it. This approach does not depend on the specifics of the registration algorithm (i.e., whether a groupwise or pairwise algorithm was used to register the set of images), nor on the specifics of the modelling approach. We derive an index of image model specificity that can be used to assess image model quality, and hence the quality of registration. This approach is validated by comparing our assessment of registration quality with that derived from ground-truth anatomical labeling. We demonstrate that our approach assesses NRR reliably without ground truth. Finally, to demonstrate the practicality of our method, different NRR algorithms, both pairwise and groupwise, are compared in terms of their performance on 3D MR brain data.
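To make the idea of model specificity concrete, here is a minimal sketch under stated assumptions. It assumes a linear Gaussian (PCA) appearance model built from already-registered, flattened images, and uses Euclidean distance between images; the paper may use a different model or image distance. Specificity is taken here as the mean distance from each synthetic image drawn from the model to its nearest training image, so a lower value indicates a more specific model. The function names `fit_linear_model`, `sample_model` and `specificity` are hypothetical and chosen for illustration only.

```python
import numpy as np

def fit_linear_model(train, n_modes):
    """Fit a PCA (linear Gaussian) appearance model to registered images.

    train: (N, P) array, one flattened registered image per row.
    Returns the mean image, the principal modes and their standard deviations.
    """
    mean = train.mean(axis=0)
    centred = train - mean
    # SVD of the centred data gives the principal modes of variation
    _, s, vt = np.linalg.svd(centred, full_matrices=False)
    n_modes = min(n_modes, len(s))
    modes = vt[:n_modes]                                   # (n_modes, P), orthonormal rows
    sd = s[:n_modes] / np.sqrt(max(len(train) - 1, 1))     # std dev along each mode
    return mean, modes, sd

def sample_model(mean, modes, sd, n_samples, rng):
    """Draw synthetic images x = mean + b @ modes with b ~ N(0, diag(sd**2))."""
    b = rng.standard_normal((n_samples, len(sd))) * sd
    return mean + b @ modes

def specificity(train, samples):
    """Mean Euclidean distance from each synthetic sample to its nearest training image."""
    # Pairwise distances between samples and training images, shape (n_samples, N)
    d = np.linalg.norm(samples[:, None, :] - train[None, :, :], axis=2)
    return d.min(axis=1).mean()

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    train = rng.standard_normal((20, 50))   # stand-in for 20 registered images of 50 pixels
    mean, modes, sd = fit_linear_model(train, n_modes=5)
    samples = sample_model(mean, modes, sd, n_samples=100, rng=rng)
    print("specificity:", specificity(train, samples))
```

In this sketch, a registration that produces a better correspondence would be expected to yield a model whose samples lie closer to real training images, and hence a lower specificity value. Comparing registrations then reduces to building a model from each one and comparing these values.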

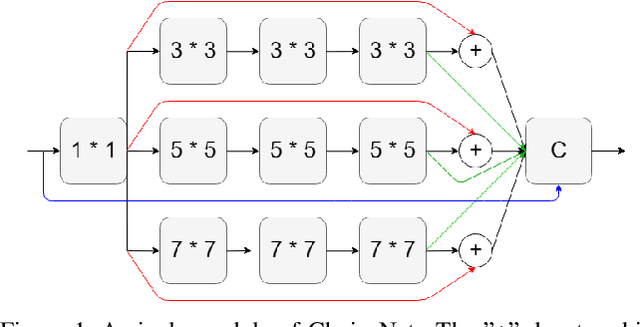

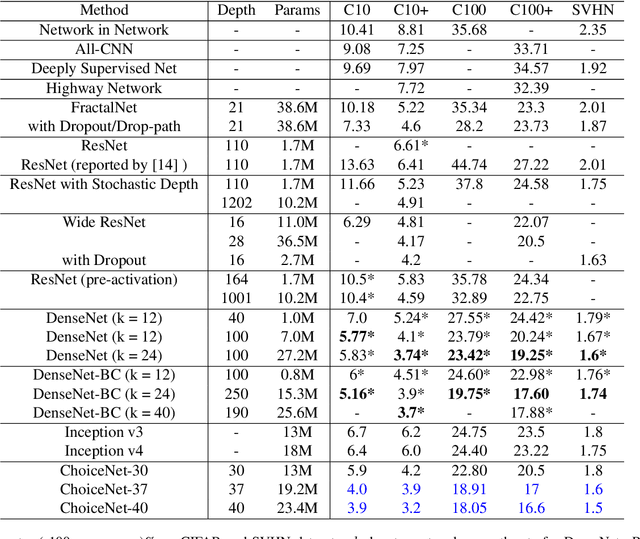

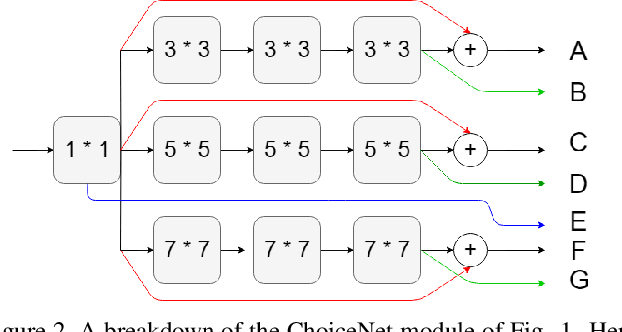

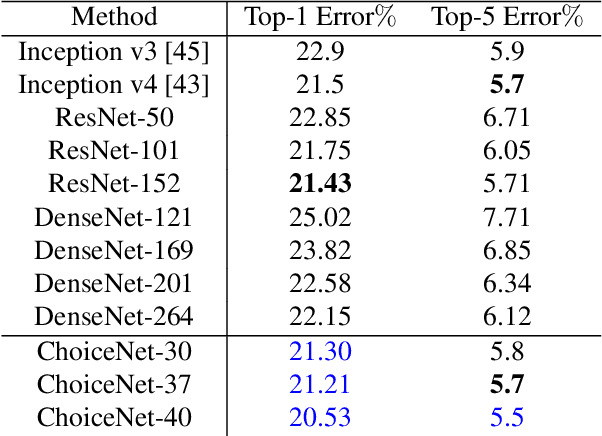

ChoiceNet: CNN learning through choice of multiple feature map representations

Apr 20, 2019

Abstract: We introduce a new architecture called ChoiceNet, in which each layer of the network is highly connected through skip connections and channelwise concatenations. This alleviates the problem of vanishing gradients, reduces the number of parameters without sacrificing performance, and encourages feature reuse. We evaluate the proposed architecture on three benchmark datasets for object recognition (CIFAR-10, CIFAR-100, SVHN) and on a semantic segmentation dataset (CamVid).
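The abstract does not specify ChoiceNet's exact layer design, so the following is only a generic, DenseNet-style illustration of channelwise concatenation with skip connections, not the authors' architecture. It assumes 1x1 convolutions (a per-pixel linear map over channels) followed by ReLU, written in NumPy; `conv1x1`, `dense_block` and `make_weights` are hypothetical names, and the growth rate and layer count are arbitrary.

```python
import numpy as np

def conv1x1(x, w):
    """1x1 convolution: x has shape (C_in, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def dense_block(x, weights):
    """Each layer receives the channelwise concatenation of the block input
    and all earlier layer outputs; the block returns every feature map."""
    features = [x]
    for w in weights:
        inp = np.concatenate(features, axis=0)     # concatenate along the channel axis
        out = np.maximum(conv1x1(inp, w), 0.0)     # 1x1 conv followed by ReLU
        features.append(out)
    # The input passes through unchanged (an identity skip path) alongside new features
    return np.concatenate(features, axis=0)

def make_weights(c_in, growth, n_layers, rng):
    """Layer k sees c_in + k * growth channels and emits `growth` new channels."""
    return [rng.standard_normal((growth, c_in + k * growth)) * 0.1
            for k in range(n_layers)]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 8, 8))             # 4 input channels on an 8x8 grid
    weights = make_weights(c_in=4, growth=3, n_layers=3, rng=rng)
    y = dense_block(x, weights)
    print(y.shape)                                  # 4 + 3 * 3 = 13 channels
```

Because each layer only adds a small number of new channels (the growth rate) and reuses earlier feature maps through concatenation, layers can stay narrow, which is one plausible route to the parameter savings and feature reuse the abstract mentions.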
