Tim Harris

DistIR: An Intermediate Representation and Simulator for Efficient Neural Network Distribution

Nov 09, 2021

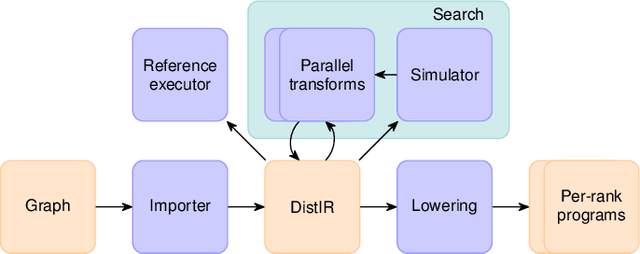

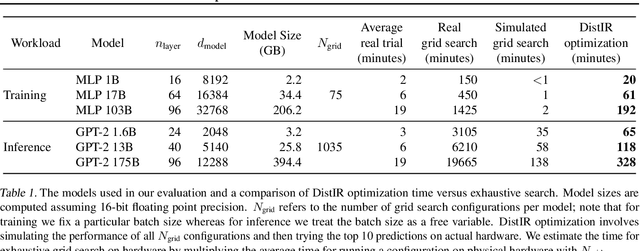

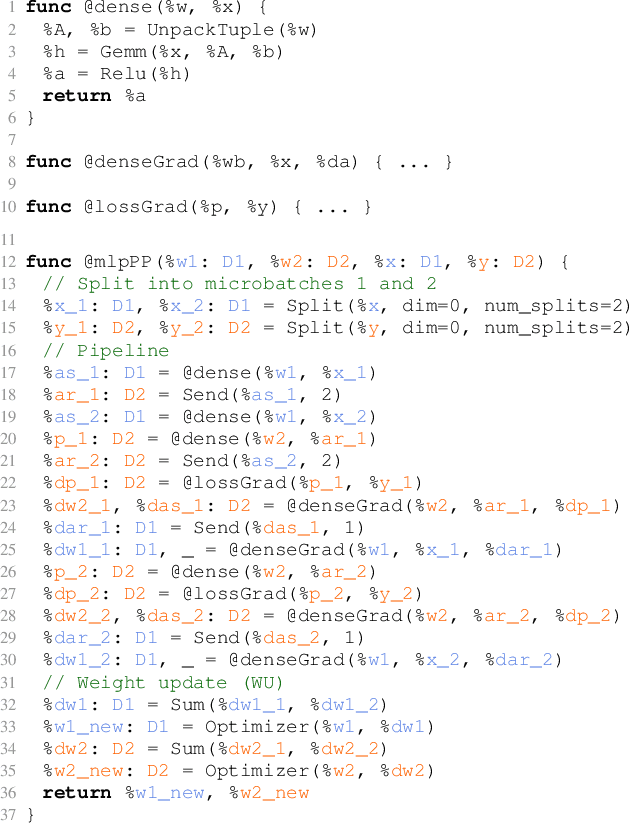

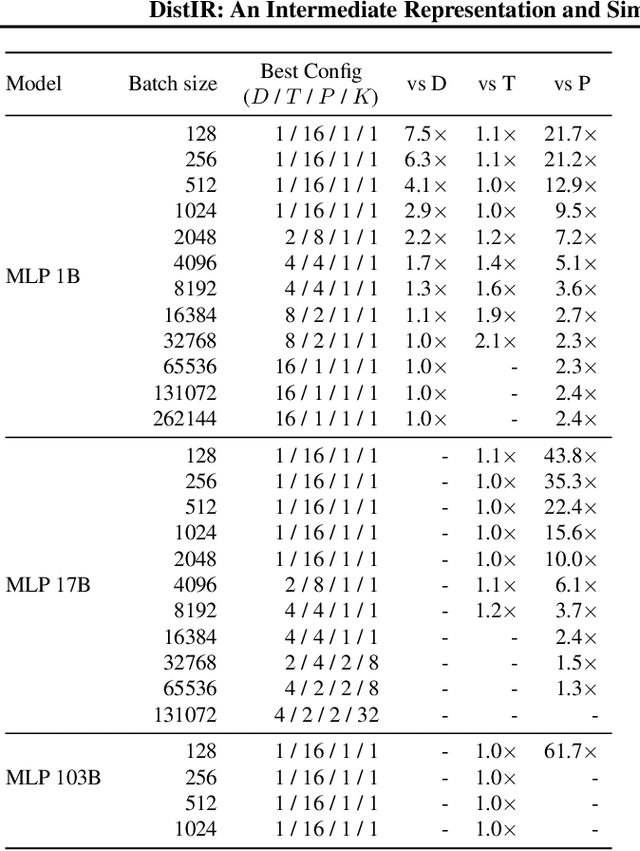

Abstract:The rapidly growing size of deep neural network (DNN) models and datasets has given rise to a variety of distribution strategies such as data, tensor-model, pipeline parallelism, and hybrid combinations thereof. Each of these strategies offers its own trade-offs and exhibits optimal performance across different models and hardware topologies. Selecting the best set of strategies for a given setup is challenging because the search space grows combinatorially, and debugging and testing on clusters is expensive. In this work we propose DistIR, an expressive intermediate representation for distributed DNN computation that is tailored for efficient analyses, such as simulation. This enables automatically identifying the top-performing strategies without having to execute on physical hardware. Unlike prior work, DistIR can naturally express many distribution strategies including pipeline parallelism with arbitrary schedules. Our evaluation on MLP training and GPT-2 inference models demonstrates how DistIR and its simulator enable fast grid searches over complex distribution spaces spanning up to 1000+ configurations, reducing optimization time by an order of magnitude for certain regimes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge