Tian Yonghong

TaxDiff: Taxonomic-Guided Diffusion Model for Protein Sequence Generation

Feb 27, 2024

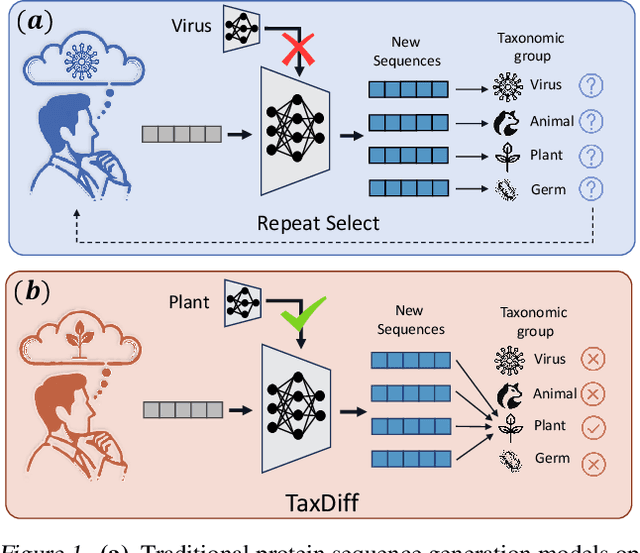

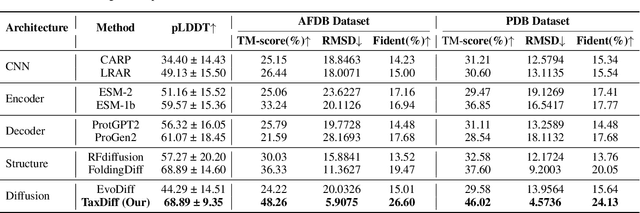

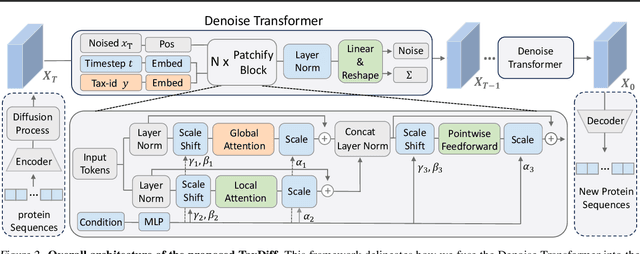

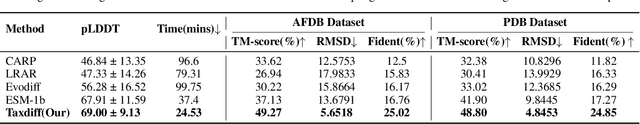

Abstract: Designing protein sequences with specific biological functions and structural stability is crucial in biology and chemistry. Generative models have already demonstrated their capabilities for reliable protein design. However, previous models are limited to the unconditional generation of protein sequences and lack the controllable generation ability that is vital to biological tasks. In this work, we propose TaxDiff, a taxonomic-guided diffusion model for controllable protein sequence generation that combines biological species information with the generative capabilities of diffusion models to generate structurally stable proteins within the sequence space. Specifically, taxonomic control information is inserted into each layer of the transformer block to achieve fine-grained control. The combination of global and local attention ensures the sequence consistency and structural foldability of taxonomic-specific proteins. Extensive experiments demonstrate that TaxDiff consistently achieves better performance on multiple protein sequence generation benchmarks in both taxonomic-guided controllable generation and unconditional generation. Remarkably, the sequences generated by TaxDiff even surpass those produced by direct-structure-generation models in terms of confidence based on predicted structures, and require only a quarter of the time needed by diffusion-based models. The code for generating proteins and training new versions of TaxDiff is available at: https://github.com/Linzy19/TaxDiff.

Unsupervised Deraining: Where Contrastive Learning Meets Self-similarity

Mar 22, 2022

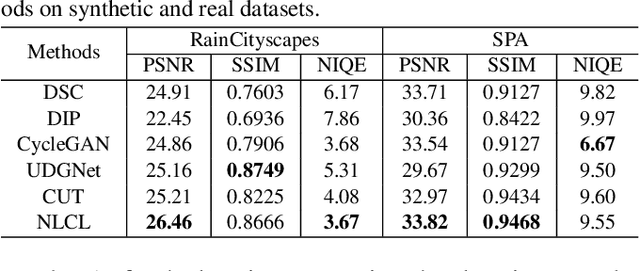

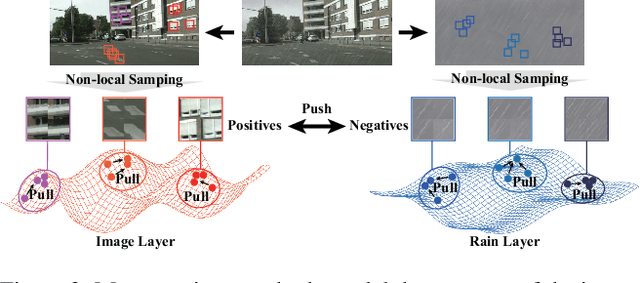

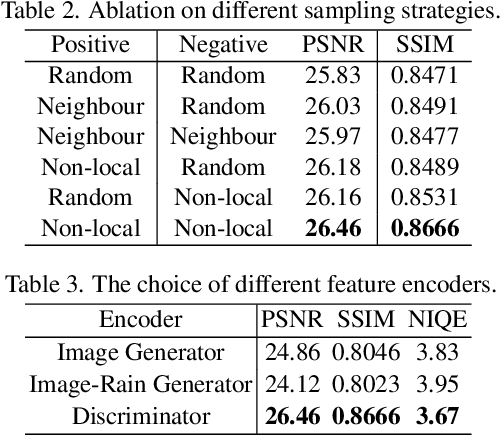

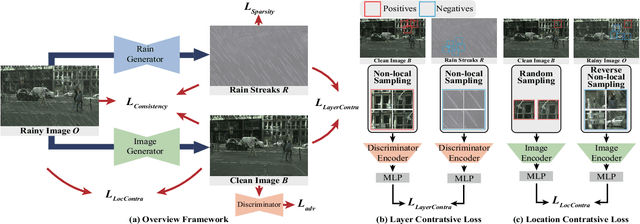

Abstract: Image deraining is a typical low-level image restoration task, which aims at decomposing the rainy image into two distinguishable layers: the clean image layer and the rain layer. Most existing learning-based deraining methods are trained in a supervised manner on synthetic rainy-clean pairs. The domain gap between synthetic and real rain makes them generalize poorly to different real rainy scenes. Moreover, the existing methods mainly utilize the properties of the two layers independently, while few of them have considered the mutually exclusive relationship between the two layers. In this work, we propose a novel non-local contrastive learning (NLCL) method for unsupervised image deraining. We utilize not only the intrinsic self-similarity property within samples but also the mutually exclusive property between the two layers, so as to better distinguish the rain layer from the clean image. Specifically, the non-local self-similar image layer patches, as the positives, are pulled together, and similar rain layer patches, as the negatives, are pushed away. Thus the similar positive/negative samples that are close in the original space help us learn a more discriminative representation. Apart from the self-similarity sampling strategy, we analyze how to choose an appropriate feature encoder in NLCL. Extensive experiments on different real rainy datasets demonstrate that the proposed method obtains state-of-the-art performance in real deraining.
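
The pull-together/push-away idea in the abstract can be illustrated with a generic InfoNCE-style contrastive loss. This is only a minimal sketch under stated assumptions, not the NLCL loss from the paper: the function names `cosine` and `info_nce`, the temperature value, and the use of plain feature vectors in place of encoded image patches are all hypothetical choices made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(query, positives, negatives, temperature=0.1):
    """Generic InfoNCE-style loss (an assumption, not the paper's exact loss).

    query:     feature of one image-layer patch
    positives: features of similar image-layer patches (pulled together)
    negatives: features of rain-layer patches (pushed away)
    """
    pos = [math.exp(cosine(query, p) / temperature) for p in positives]
    neg = [math.exp(cosine(query, n) / temperature) for n in negatives]
    denom = sum(pos) + sum(neg)
    # Average the negative log-probability of picking each positive.
    return -sum(math.log(p / denom) for p in pos) / len(pos)


# Toy features: the query is close to the "image" patch and far from the "rain" patch.
query = [1.0, 0.0]
image_patches = [[0.9, 0.1]]
rain_patches = [[0.0, 1.0]]

well_separated = info_nce(query, image_patches, rain_patches)
mislabelled = info_nce(query, rain_patches, image_patches)
print(well_separated < mislabelled)
```

The loss is small when the query sits near its positives and away from its negatives, and grows when the roles are swapped, which is the behaviour a contrastive objective of this kind rewards.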
