Thomas Howe

AI Chat Assistants can Improve Conversations about Divisive Topics

Feb 21, 2023

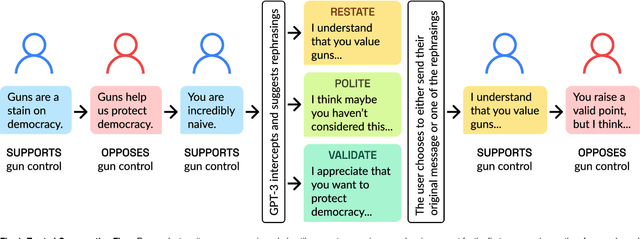

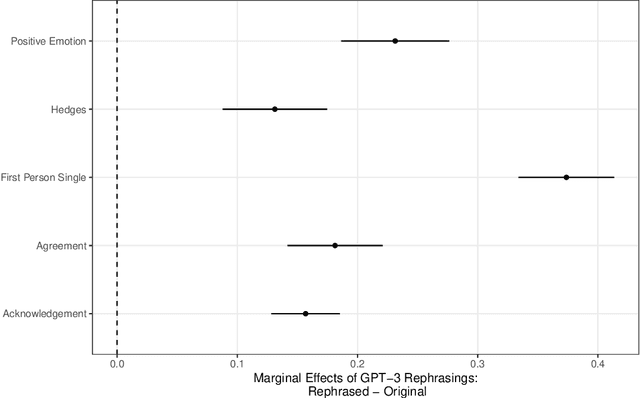

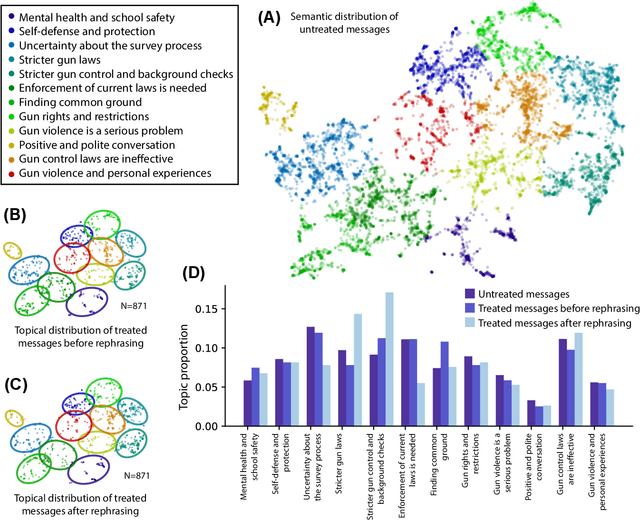

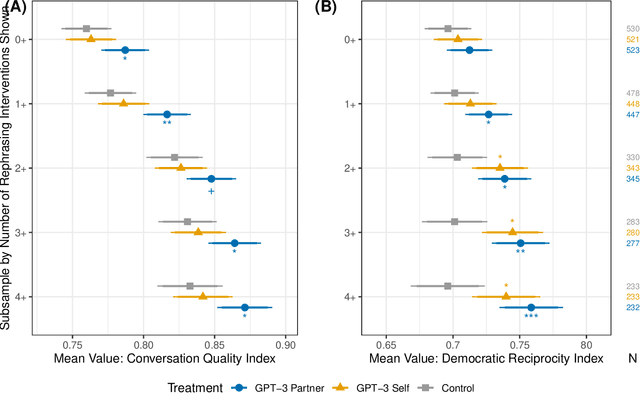

Abstract:A rapidly increasing amount of human conversation occurs online. But divisiveness and conflict can fester in text-based interactions on social media platforms, in messaging apps, and on other digital forums. Such toxicity increases polarization and, importantly, corrodes the capacity of diverse societies to develop efficient solutions to complex social problems that impact everyone. Scholars and civil society groups promote interventions that can make interpersonal conversations less divisive or more productive in offline settings, but scaling these efforts to the amount of discourse that occurs online is extremely challenging. We present results of a large-scale experiment that demonstrates how online conversations about divisive topics can be improved with artificial intelligence tools. Specifically, we employ a large language model to make real-time, evidence-based recommendations intended to improve participants' perception of feeling understood in conversations. We find that these interventions improve the reported quality of the conversation, reduce political divisiveness, and improve the tone, without systematically changing the content of the conversation or moving people's policy attitudes. These findings have important implications for future research on social media, political deliberation, and the growing community of scholars interested in the place of artificial intelligence within computational social science.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge