Thomas Gossard

BiasBench: A reproducible benchmark for tuning the biases of event cameras

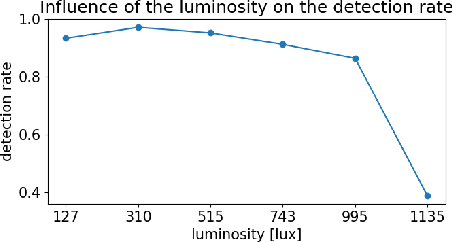

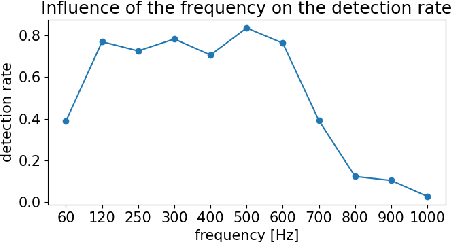

Apr 25, 2025

Abstract:Event-based cameras are bio-inspired sensors that detect light changes asynchronously for each pixel. They are increasingly used in fields like computer vision and robotics because of several advantages over traditional frame-based cameras, such as high temporal resolution, low latency, and high dynamic range. As with any camera, the output's quality depends on how well the camera's settings, called biases for event-based cameras, are configured. While frame-based cameras have advanced automatic configuration algorithms, there are very few such tools for tuning these biases. A systematic testing framework would require observing the same scene with different biases, which is tricky since event cameras only generate events when there is movement. Event simulators exist, but since biases heavily depend on the electrical circuit and the pixel design, available simulators are not well suited for bias tuning. To allow reproducibility, we present BiasBench, a novel event dataset containing multiple scenes with settings sampled in a grid-like pattern. We present three different scenes, each with a quality metric of the downstream application. Additionally, we present a novel, RL-based method to facilitate online bias adjustments.
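As a rough illustration of what "settings sampled in a grid-like pattern" can mean, the sketch below enumerates every combination of a few bias values. The bias names and value ranges are hypothetical placeholders chosen for the example; they are not taken from the BiasBench dataset or from any specific camera.

```python
from itertools import product


def bias_grid(ranges):
    """Return every combination of bias values as a list of dicts."""
    names = list(ranges)
    value_lists = [ranges[name] for name in names]
    return [dict(zip(names, values)) for values in product(*value_lists)]


# Hypothetical bias names and values, for illustration only.
example_ranges = {
    "bias_on": [0, 20, 40],
    "bias_off": [0, 20, 40],
    "bias_refractory": [0, 50],
}

grid = bias_grid(example_ranges)
print(len(grid))  # 3 * 3 * 2 = 18 settings
```

Recording the same scene once per entry of such a grid is what makes the settings directly comparable afterwards.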

TT3D: Table Tennis 3D Reconstruction

Apr 14, 2025

Abstract:Sports analysis requires processing large amounts of data, which is time-consuming and costly. Advancements in neural networks have significantly alleviated this burden, enabling highly accurate ball tracking in sports broadcasts. However, relying solely on 2D ball tracking is limiting, as it depends on the camera's viewpoint and falls short of supporting comprehensive game analysis. To address this limitation, we propose a novel approach for reconstructing precise 3D ball trajectories from online table tennis match recordings. Our method leverages the underlying physics of the ball's motion to identify the bounce state that minimizes the reprojection error of the ball's flying trajectory, hence ensuring an accurate and reliable 3D reconstruction. A key advantage of our approach is its ability to infer ball spin without relying on human pose estimation or racket tracking, which are often unreliable or unavailable in broadcast footage. We developed an automated camera calibration method capable of reliably tracking camera movements. Additionally, we adapted an existing 3D pose estimation model, which lacks depth motion capture, to accurately track player movements. Together, these contributions enable the full 3D reconstruction of a table tennis rally.
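The reprojection error mentioned above can be illustrated with a minimal sketch. It assumes an ideal pinhole camera without lens distortion and 3D points already expressed in the camera frame; the function names and the numbers are illustrative assumptions, not the TT3D implementation.

```python
import math


def project(point, focal, cx, cy):
    """Project a 3D point (camera frame, z > 0) with an ideal pinhole model."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)


def mean_reprojection_error(points_3d, detections_2d, focal, cx, cy):
    """Mean pixel distance between projected 3D points and 2D detections."""
    errors = []
    for point, (u, v) in zip(points_3d, detections_2d):
        pu, pv = project(point, focal, cx, cy)
        errors.append(math.hypot(pu - u, pv - v))
    return sum(errors) / len(errors)


# Illustrative values only: two candidate trajectory points and their detections.
trajectory = [(0.0, 0.0, 2.0), (0.5, 0.0, 2.0)]
detections = [(640.0, 360.0), (893.0, 364.0)]
error = mean_reprojection_error(trajectory, detections, focal=1000.0, cx=640.0, cy=360.0)
print(error)
```

A candidate trajectory that scores a lower value under this measure agrees better with the observed 2D ball detections.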

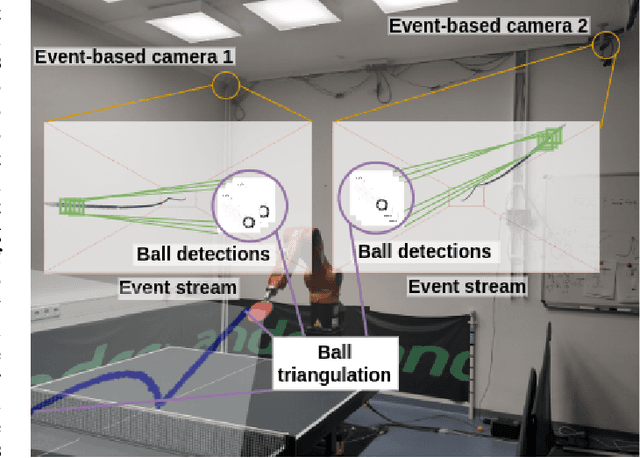

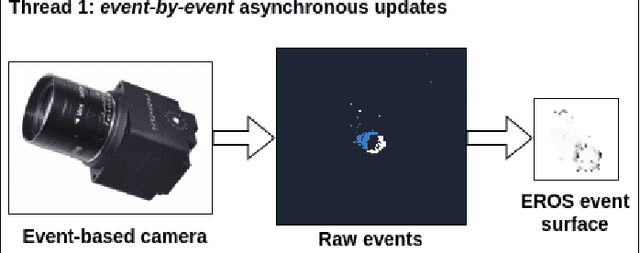

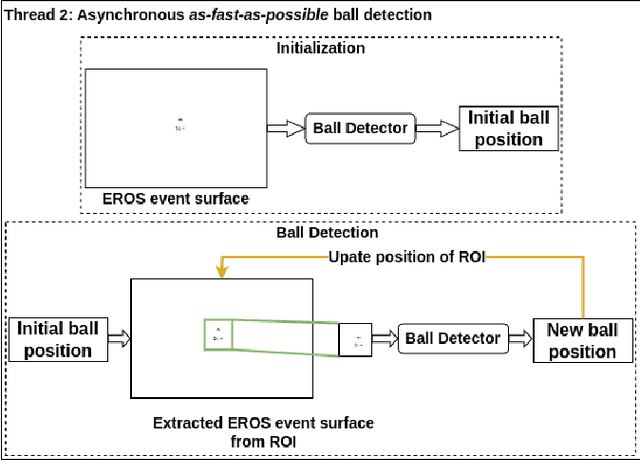

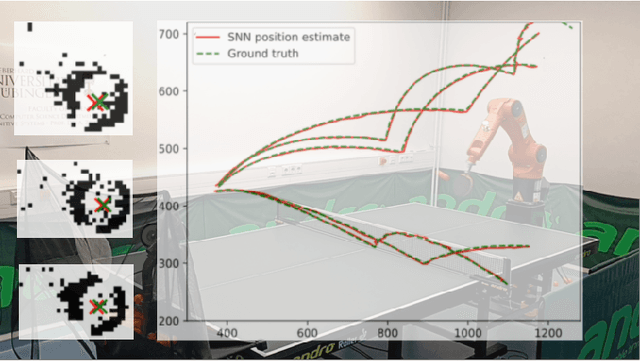

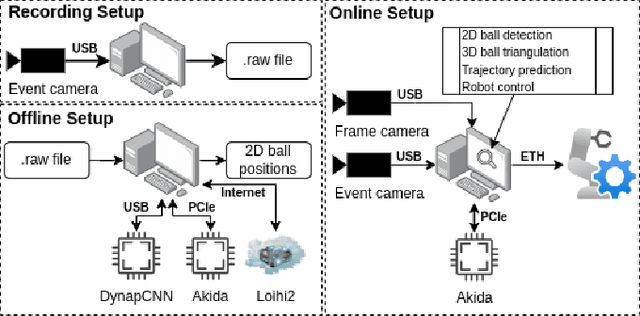

An Event-Based Perception Pipeline for a Table Tennis Robot

Feb 02, 2025

Abstract:Table tennis robots have gained traction in recent years and have become a popular research challenge for control and perception algorithms. Fast and accurate ball detection is crucial for enabling a robotic arm to rally the ball back successfully. So far, most table tennis robots use conventional, frame-based cameras for the perception pipeline. However, frame-based cameras suffer from motion blur if the frame rate is not high enough for fast-moving objects. Event-based cameras, on the other hand, do not have this drawback since pixels report changes in intensity asynchronously and independently, leading to an event stream with a temporal resolution on the order of µs. To the best of our knowledge, we present the first real-time perception pipeline for a table tennis robot that uses only event-based cameras. We show that compared to a frame-based pipeline, event-based perception pipelines have an update rate which is an order of magnitude higher. This is beneficial for the estimation and prediction of the ball's position, velocity, and spin, resulting in lower mean errors and uncertainties. These improvements are an advantage for the robot control, which has to be fast, given the short time a table tennis ball is flying until the robot has to hit back.

Spin Detection Using Racket Bounce Sounds in Table Tennis

Sep 18, 2024

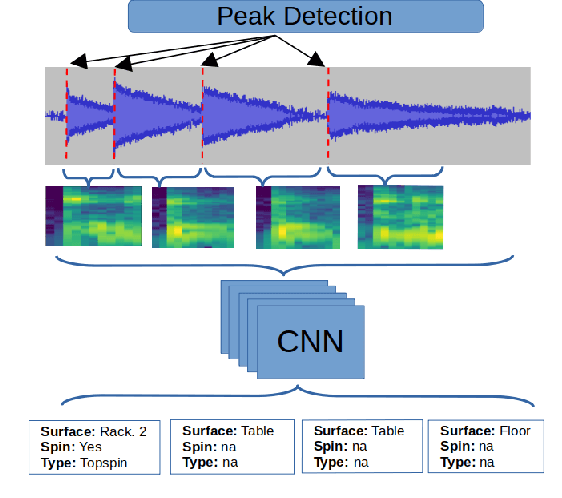

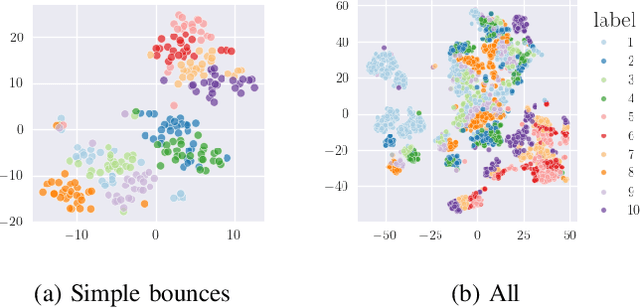

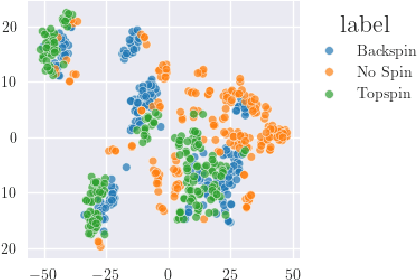

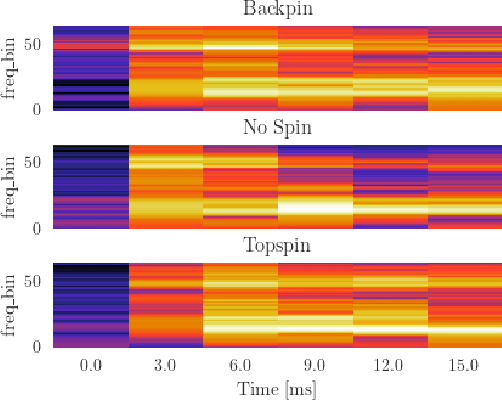

Abstract:While table tennis players primarily rely on visual cues, sound provides valuable information. The sound generated when the ball strikes the racket can assist in predicting the ball's trajectory, especially in determining the spin. While professional players can distinguish spin through these auditory cues, they often go unnoticed by untrained players. In this paper, we demonstrate that different rackets produce distinct sounds, which can be used to identify the racket type. In addition, we show that the sound generated by the racket can indicate whether or not spin was applied to the ball. To achieve this, we created a comprehensive dataset featuring bounce sounds from 10 racket configurations, each applying various spins to the ball. To achieve millisecond-level temporal accuracy, we first detect high-frequency peaks that may correspond to table tennis ball bounces. We then refine these results using a CNN-based classifier that accurately predicts both the type of racket used and whether spin was applied.

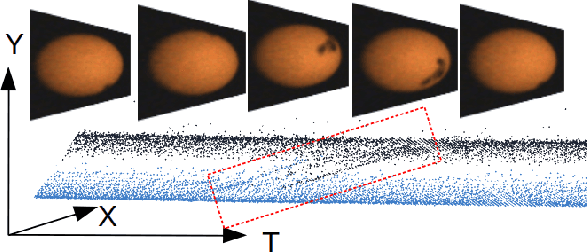

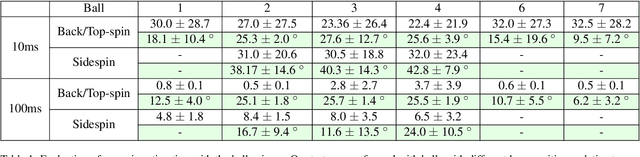

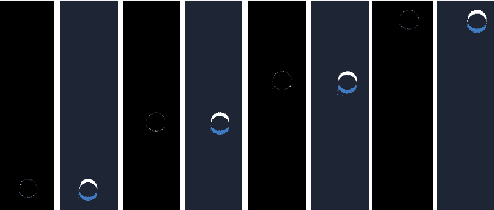

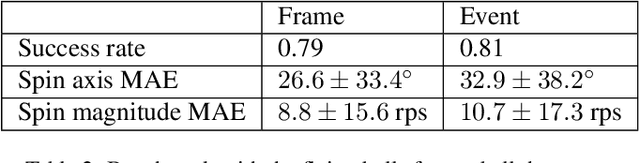

Table tennis ball spin estimation with an event camera

Apr 15, 2024

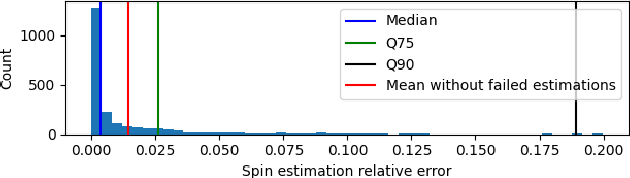

Abstract:Spin plays a pivotal role in ball-based sports. Estimating spin becomes a key skill due to its impact on the ball's trajectory and bouncing behavior. Spin cannot be observed directly, making it inherently challenging to estimate. In table tennis, the combination of high velocity and spin renders traditional low frame rate cameras inadequate for quickly and accurately observing the ball's logo to estimate the spin due to the motion blur. Event cameras do not suffer as much from motion blur, thanks to their high temporal resolution. Moreover, the sparse nature of the event stream solves communication bandwidth limitations many frame cameras face. To the best of our knowledge, we present the first method for table tennis spin estimation using an event camera. We use ordinal time surfaces to track the ball and then isolate the events generated by the logo on the ball. Optical flow is then estimated from the extracted events to infer the ball's spin. We achieved a spin magnitude mean error of $10.7 \pm 17.3$ rps and a spin axis mean error of $32.9 \pm 38.2^\circ$ in real time for a flying ball.

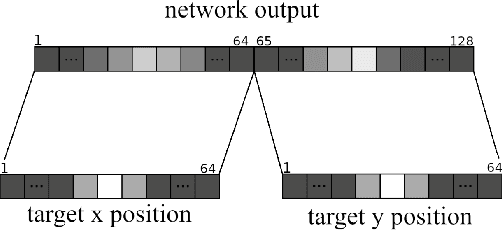

Spiking Neural Networks for Fast-Moving Object Detection on Neuromorphic Hardware Devices Using an Event-Based Camera

Mar 15, 2024

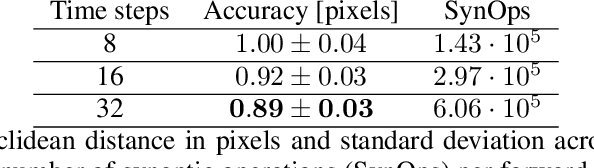

Abstract:Table tennis is a fast-paced and exhilarating sport that demands agility, precision, and fast reflexes. In recent years, robotic table tennis has become a popular research challenge for robot perception algorithms. Fast and accurate ball detection is crucial for enabling a robotic arm to rally the ball back successfully. Previous approaches have employed conventional frame-based cameras with Convolutional Neural Networks (CNNs) or traditional computer vision methods. In this paper, we propose a novel solution that combines an event-based camera with Spiking Neural Networks (SNNs) for ball detection. We use multiple state-of-the-art SNN frameworks and develop an SNN architecture for each of them, complying with their corresponding constraints. Additionally, we implement the SNN solution across multiple neuromorphic edge devices, conducting comparisons of their accuracies and run-times. This furnishes robotics researchers with a benchmark illustrating the capabilities achievable with each SNN framework and a corresponding neuromorphic edge device. In addition to this comparison of SNN solutions for robots, we also show that an SNN on a neuromorphic edge device is able to run in real time in a closed-loop robotic system, a table tennis robot in our use case.

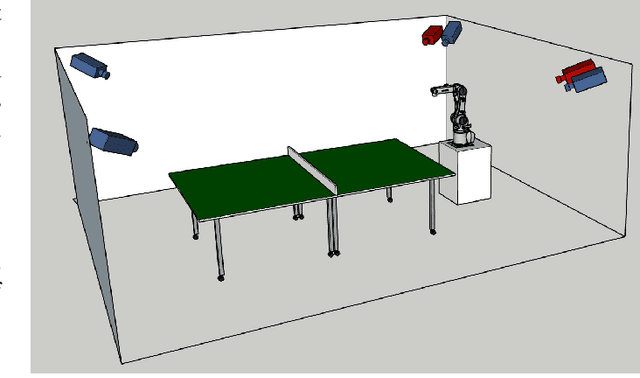

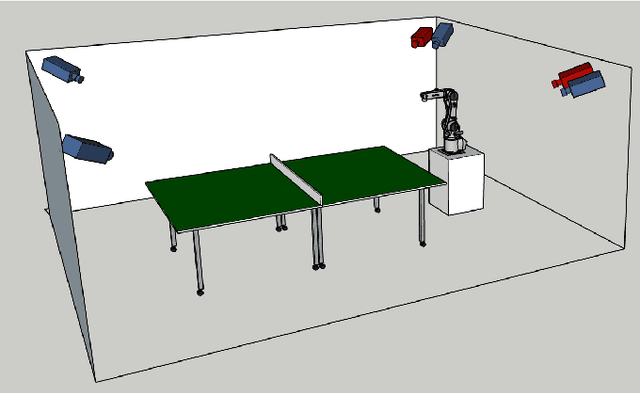

A multi-modal table tennis robot system

Oct 29, 2023

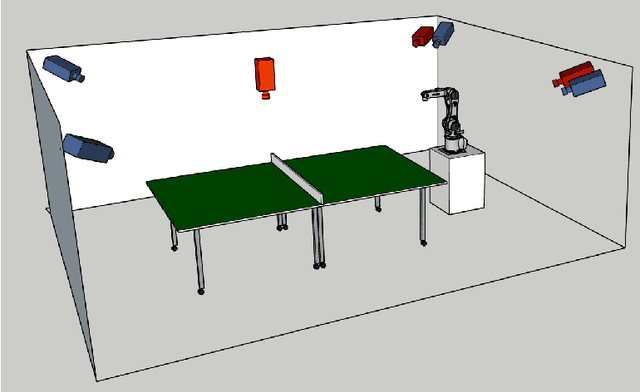

Abstract:In recent years, robotic table tennis has become a popular research challenge for perception and robot control. Here, we present an improved table tennis robot system with high-accuracy vision detection and fast robot reaction. Based on previous work, our system contains a KUKA robot arm with 6 DOF, with four frame-based cameras and two additional event-based cameras. We developed a novel calibration approach to calibrate this multimodal perception system. For table tennis, spin estimation is crucial. Therefore, we introduced a novel and more accurate spin estimation approach. Finally, we show how combining the output of an event-based camera and a Spiking Neural Network (SNN) can be used for accurate ball detection.

eWand: A calibration framework for wide baseline frame-based and event-based camera systems

Sep 22, 2023

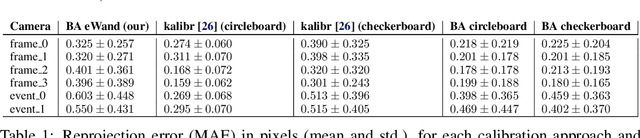

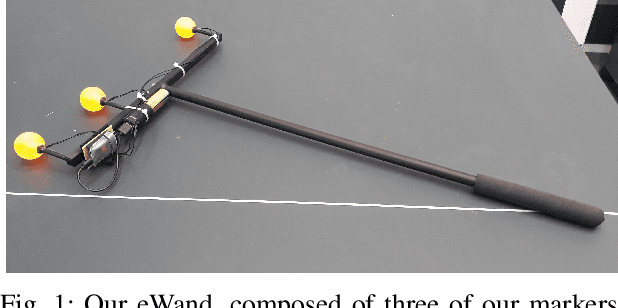

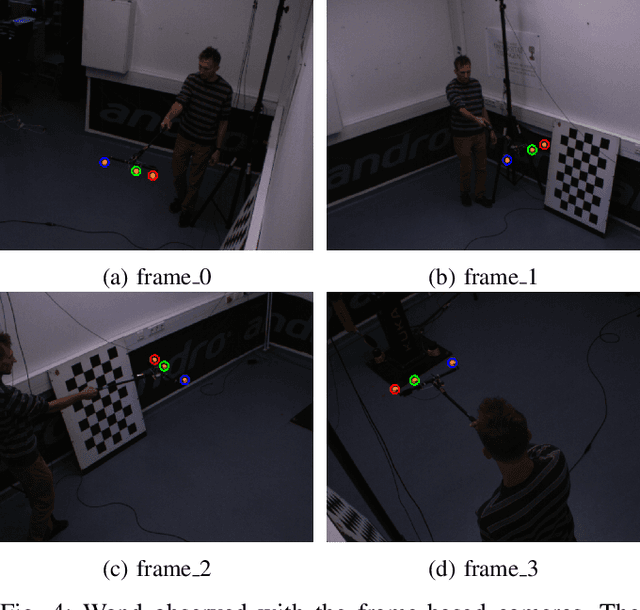

Abstract:Accurate calibration is crucial for using multiple cameras to triangulate the position of objects precisely. However, it is also a time-consuming process that needs to be repeated for every displacement of the cameras. The standard approach is to use a printed pattern with known geometry to estimate the intrinsic and extrinsic parameters of the cameras. The same idea can be applied to event-based cameras, though it requires extra work. By using frame reconstruction from events, a printed pattern can be detected. A blinking pattern can also be displayed on a screen. Then, the pattern can be directly detected from the events. Such calibration methods can provide accurate intrinsic calibration for both frame- and event-based cameras. However, using 2D patterns has several limitations for multi-camera extrinsic calibration, with cameras possessing highly different points of view and a wide baseline. The 2D pattern can only be detected from one direction and needs to be of significant size to compensate for its distance to the camera. This makes the extrinsic calibration time-consuming and cumbersome. To overcome these limitations, we propose eWand, a new method that uses blinking LEDs inside opaque spheres instead of a printed or displayed pattern. Our method provides a faster, easier-to-use extrinsic calibration approach that maintains high accuracy for both event- and frame-based cameras.

SpinDOE: A ball spin estimation method for table tennis robot

Mar 07, 2023

Abstract:Spin plays a considerable role in table tennis, making a shot's trajectory harder to read and predict. However, the spin is challenging to measure because of the ball's high velocity and the magnitude of the spin values. Existing methods either require extremely high framerate cameras or are unreliable because they use the ball's logo, which may not always be visible. Because of this, many table tennis-playing robots ignore the spin, which severely limits their capabilities. This paper proposes an easily implementable and reliable spin estimation method. We developed a dotted-ball orientation estimation (DOE) method that can then be used to estimate the spin. The dots are first localized on the image using a CNN and then identified using geometric hashing. The spin is finally regressed from the estimated orientations. Using our algorithm, the ball's orientation can be estimated with a mean error of 2.4° and the spin estimation has a relative error lower than 1%. Spins up to 175 rps are measurable with a camera of 350 fps in real time. Using our method, we generated a dataset of table tennis ball trajectories with position and spin, available on our project page.
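The quoted figures (175 rps at 350 fps) are consistent with a half-revolution-per-frame limit: beyond that, a rotation between two consecutive frames cannot be told apart from a smaller rotation in the opposite direction. The sketch below works through that arithmetic. It is one plausible reading of the numbers under that assumption, not the paper's regression method, and the function names are illustrative.

```python
def max_unambiguous_spin_rps(fps):
    """Highest spin (rev/s) with at most half a revolution between frames."""
    return fps / 2.0


def spin_rps_from_frame_rotation(angle_deg, fps):
    """Spin (rev/s) implied by a rotation of angle_deg between consecutive frames."""
    return (angle_deg / 360.0) * fps


print(max_unambiguous_spin_rps(350))            # 175.0
print(spin_rps_from_frame_rotation(90.0, 350))  # 87.5
```

For example, a ball that turns by a quarter revolution between two frames of a 350 fps camera is spinning at 87.5 rps.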

Real-time event simulation with frame-based cameras

Sep 10, 2022

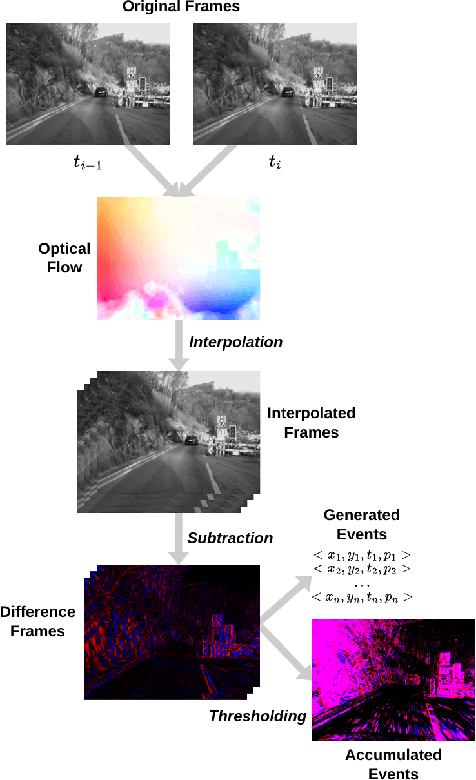

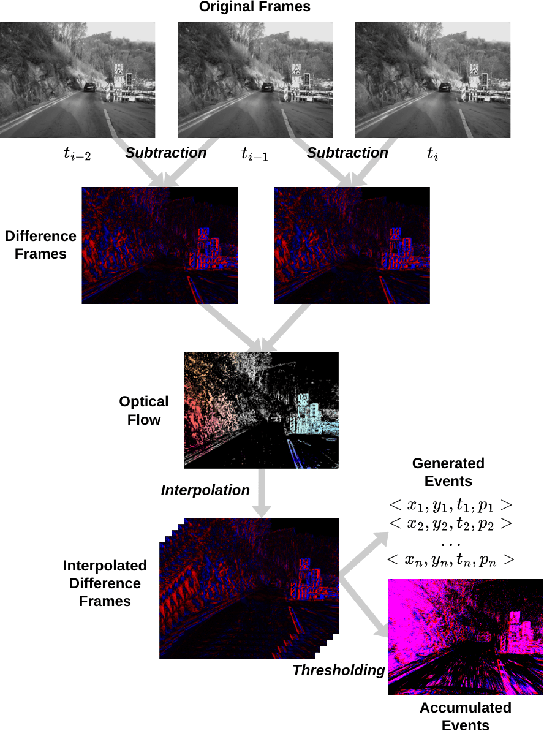

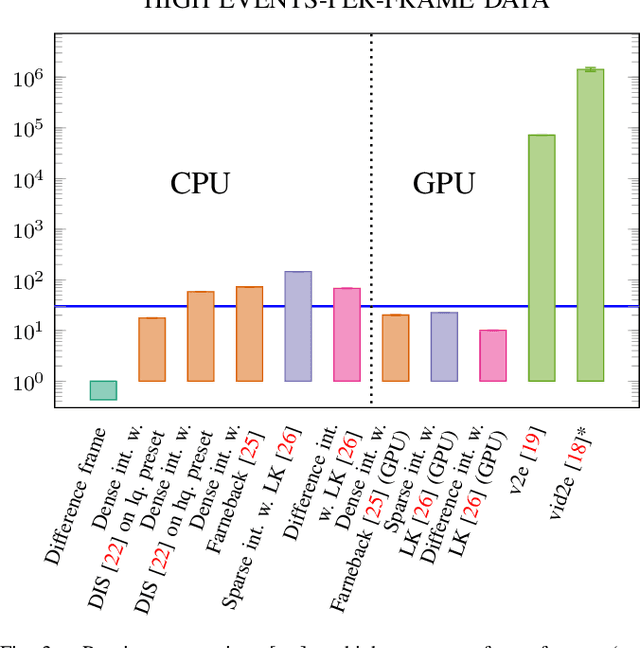

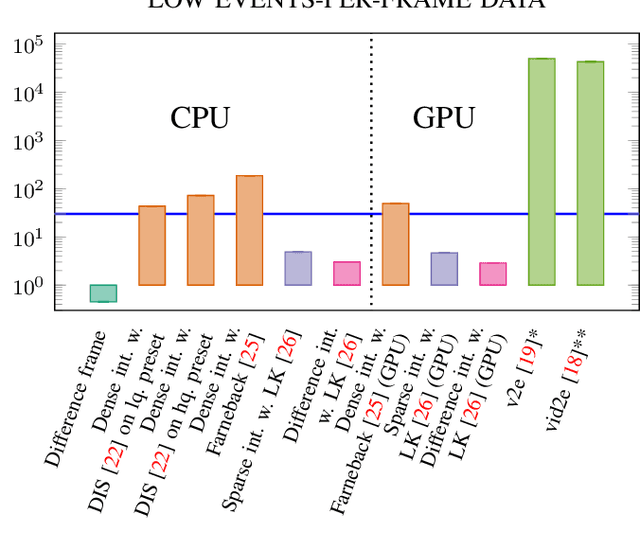

Abstract:Event cameras are becoming increasingly popular in robotics and computer vision due to their beneficial properties, e.g., high temporal resolution, high bandwidth, almost no motion blur, and low power consumption. However, these cameras remain expensive and scarce in the market, making them inaccessible to the majority. Using event simulators minimizes the need for real event cameras to develop novel algorithms. However, due to the computational complexity of the simulation, the event streams of existing simulators cannot be generated in real time but rather have to be pre-calculated from existing video sequences or pre-rendered and then simulated from a virtual 3D scene. Although these offline-generated event streams can be used as training data for learning tasks, response-time-dependent applications cannot yet benefit from these simulators, as they still require an actual event camera. This work proposes simulation methods that improve the performance of event simulation by two orders of magnitude (making them real-time capable) while remaining competitive in the quality assessment.
