Thiago A. S. Pardo

Factual or Biased? Predicting Sentence-Level Factuality and Bias of News

Jan 27, 2023

Abstract: We present a study on sentence-level factuality and bias of news articles across domains. While prior work in NLP has mainly focused on predicting the factuality of article-level news reporting and the political-ideological bias of news media, we investigated the effects of framing bias in factual reporting across domains in order to predict factuality and bias at the sentence level, which may more accurately explain the overall reliability of the entire document. First, we manually produced a large sentence-level annotated dataset, titled FactNews, composed of 6,191 sentences from 100 news stories covered by three different outlets, resulting in 300 news articles. Further, we studied how biased and factual spans surface in news articles from different media outlets and different domains. Lastly, we present a baseline model for factual sentence prediction obtained by fine-tuning BERT. We also provide a detailed analysis of the data demonstrating the reliability of the annotation and models.

SEMA: an Extended Semantic Evaluation Metric for AMR

May 28, 2019

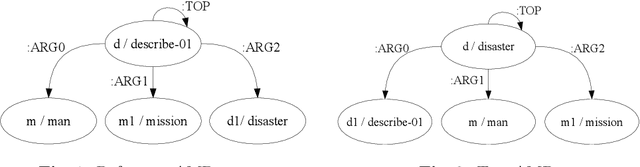

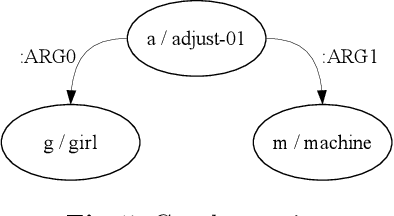

Abstract: Abstract Meaning Representation (AMR) is a recently designed semantic representation language intended to capture the meaning of a sentence, which may be represented as a single-rooted directed acyclic graph with labeled nodes and edges. The automatic evaluation of this structure plays an important role in the development of better systems, as well as in semantic annotation. Although there is one available metric, smatch, it has some drawbacks. For instance, smatch creates a self-relation on the root of the graph, has weights for different error types, and does not take into account the dependence of the elements in the AMR structure. Because of these drawbacks, smatch masks several problems of AMR parsers and distorts the evaluation of AMRs. In view of this, we introduce in this paper an extended metric to evaluate AMR parsers that deals with the drawbacks of the smatch metric. Finally, we compare both metrics using four well-known AMR parsers, and we argue that our metric is more refined, robust, fair, and fast than smatch.
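As a rough illustration of the triple-matching score that smatch reports, the sketch below computes precision, recall and F1 between a gold and a predicted AMR, each written as a set of (relation, source, target) triples. It assumes the variable mapping between the two graphs is already fixed; smatch itself searches for the best mapping with hill-climbing, and that step is omitted here. The function name `triple_f1` and the example graph are hypothetical, and this is neither smatch's nor SEMA's implementation.

```python
from typing import Iterable, Tuple

# An AMR triple: (relation, source variable, target variable or concept).
Triple = Tuple[str, str, str]


def triple_f1(gold: Iterable[Triple], pred: Iterable[Triple]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) over exactly matching triples.

    Hypothetical helper: assumes predicted variables were already renamed to
    match the gold variables (smatch's mapping search is not reproduced).
    """
    gold_set, pred_set = set(gold), set(pred)
    matched = len(gold_set & pred_set)
    precision = matched / len(pred_set) if pred_set else 0.0
    recall = matched / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Illustrative AMR for "The boy wants to go."
gold = [
    ("instance", "w", "want-01"), ("instance", "b", "boy"), ("instance", "g", "go-02"),
    ("ARG0", "w", "b"), ("ARG1", "w", "g"), ("ARG0", "g", "b"),
]
# A parse that misses the re-entrant edge (g :ARG0 b).
pred = gold[:5]
p, r, f = triple_f1(gold, pred)
```

Here every predicted triple is correct, so precision is 1.0, while recall is 5/6 and F1 is 10/11. Because each triple counts the same regardless of where it sits in the graph, a flat score like this does not reflect dependencies between elements of the AMR, which is one of the smatch drawbacks the abstract points to.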