Rafael T. Anchieta

Essay-BR: a Brazilian Corpus of Essays

May 19, 2021

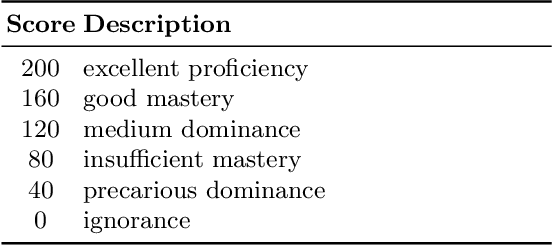

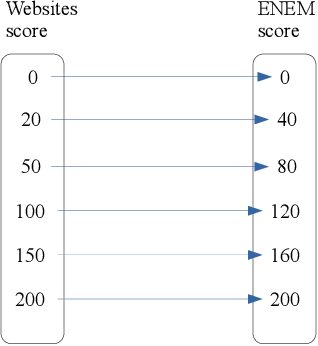

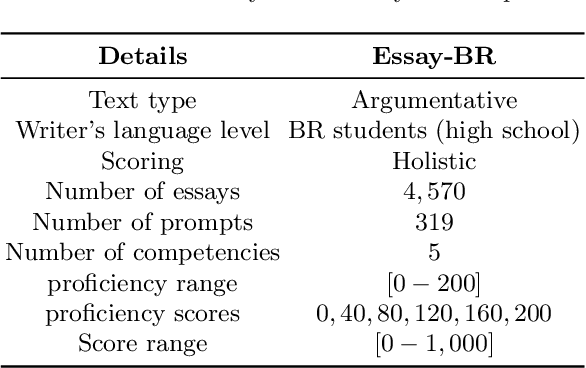

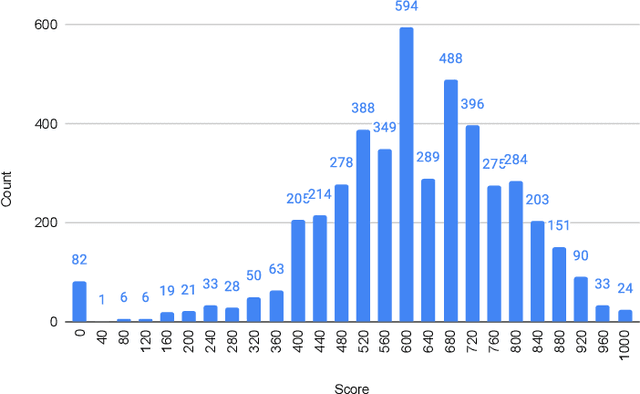

Abstract: Automatic Essay Scoring (AES) is the computer technology that evaluates and scores written essays, aiming to provide computational models that grade essays either automatically or with minimal human involvement. While there are several AES studies in a variety of languages, few of them focus on the Portuguese language. The main reason is the lack of a corpus of manually graded essays. To bridge this gap, we create a large corpus of essays written by Brazilian high school students on an online platform. All of the essays are argumentative and were scored across five competencies by experts. Moreover, we conducted an experiment on the created corpus and showed the challenges posed by the Portuguese language. Our corpus is publicly available at https://github.com/rafaelanchieta/essay.

SEMA: an Extended Semantic Evaluation Metric for AMR

May 28, 2019

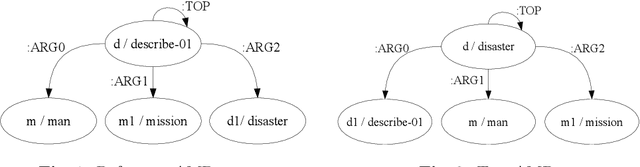

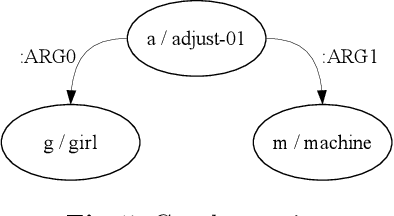

Abstract: Abstract Meaning Representation (AMR) is a recently designed semantic representation language intended to capture the meaning of a sentence, which may be represented as a single-rooted directed acyclic graph with labeled nodes and edges. The automatic evaluation of this structure plays an important role in the development of better systems, as well as in semantic annotation. Although one metric, smatch, is available, it has some drawbacks. For instance, smatch creates a self-relation on the root of the graph, has weights for different error types, and does not take into account the dependence of the elements in the AMR structure. Because of these drawbacks, smatch masks several problems of AMR parsers and distorts the evaluation of AMRs. In view of this, we introduce an extended metric to evaluate AMR parsers that addresses the drawbacks of the smatch metric. Finally, we compare both metrics using four well-known AMR parsers, and we argue that our metric is more refined, robust, fairer, and faster than smatch.
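
To make the graph view concrete, the sketch below encodes an AMR as a set of labeled triples and scores a parser output by plain triple overlap. It is an illustration only and is neither smatch nor SEMA: it assumes the variable names of the two graphs are already aligned, which real metrics have to work out themselves. The example sentence, the graphs, and the `triple_f1` helper are all hypothetical.

```python
from typing import Set, Tuple

# One labeled edge: (source variable, relation, target variable or concept).
Triple = Tuple[str, str, str]

# Hypothetical gold AMR for "The boy wants to go".
# The root is "w"; "b" is re-entrant (it is an argument of both "w" and "g").
gold: Set[Triple] = {
    ("w", "instance", "want-01"),
    ("b", "instance", "boy"),
    ("g", "instance", "go-01"),
    ("w", "ARG0", "b"),
    ("w", "ARG1", "g"),
    ("g", "ARG0", "b"),
}

# Hypothetical parser output that misses the re-entrant edge.
predicted: Set[Triple] = gold - {("g", "ARG0", "b")}


def triple_f1(pred: Set[Triple], ref: Set[Triple]) -> Tuple[float, float, float]:
    """Precision, recall and F1 over exactly matching triples.

    Assumes both graphs already use the same variable names.
    """
    if not pred or not ref:
        return 0.0, 0.0, 0.0
    matched = len(pred & ref)
    precision = matched / len(pred)
    recall = matched / len(ref)
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


precision, recall, f1 = triple_f1(predicted, gold)
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f}")
```

Here the missing edge lowers recall while precision stays at 1.0. Choices such as whether to add a root triple or how to weight error types are exactly where the abstract says smatch and the proposed metric differ; this sketch takes no position on them.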
