Theresa Law

Robots in healthcare as envisioned by care professionals

Jun 01, 2022

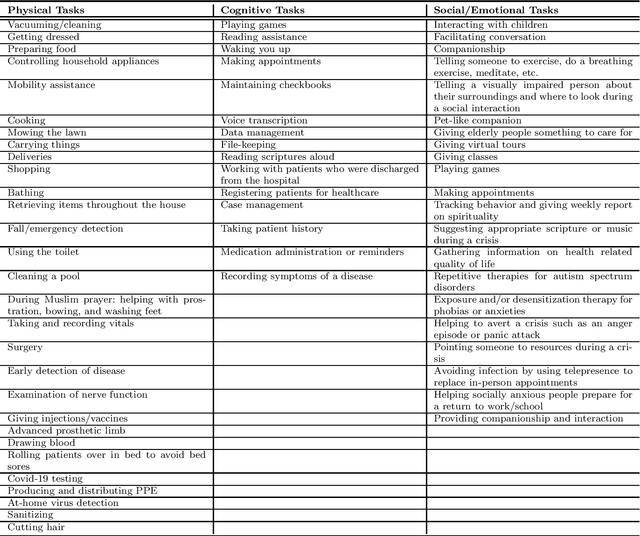

Abstract: As AI-enabled robots enter the realm of healthcare and caregiving, it is important to consider how they will address the dimensions of care and how they will interact not just with the direct receivers of assistance, but also with those who provide it (e.g., caregivers and healthcare providers). Caregiving in its best form addresses challenges across many dimensions of a person's life: from physical, to social-emotional, and sometimes even existential dimensions (such as issues surrounding life and death). In this study we use semi-structured qualitative interviews with healthcare professionals from multidisciplinary backgrounds (physicians, public health professionals, social workers, and chaplains) to understand their expectations regarding the roles robots may play in the healthcare ecosystem in the future. We found that participants drew on both works of science fiction and existing commercial robots in forming their mental models of robots. Participants envisioned roles for robots across the full spectrum of care, from physical to social-emotional and even existential-spiritual dimensions, but also pointed out numerous limitations in robots' ability to provide comprehensive humanistic care. While no dimension of care was deemed exclusively the realm of humans, participants stressed the importance of human caregivers as the primary providers of comprehensive care, with robots assisting with more narrowly focused tasks. Throughout the paper we point out the encouraging confluence between the expectations of healthcare providers and research trends in the human-robot interaction (HRI) literature.

Can You Trust Your Trust Measure?

Apr 23, 2021

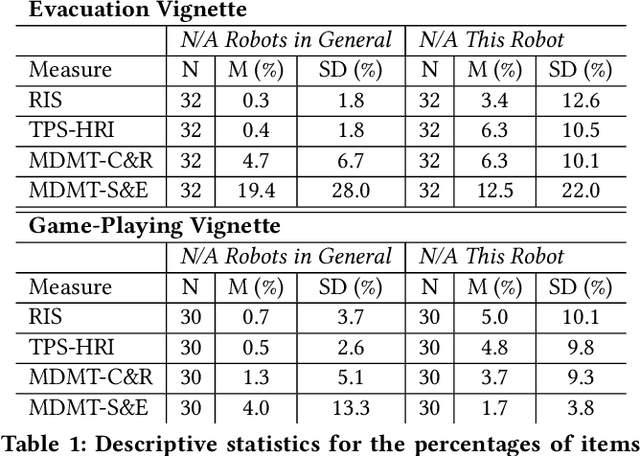

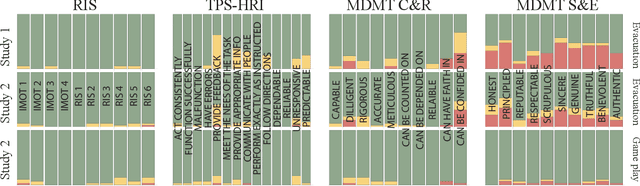

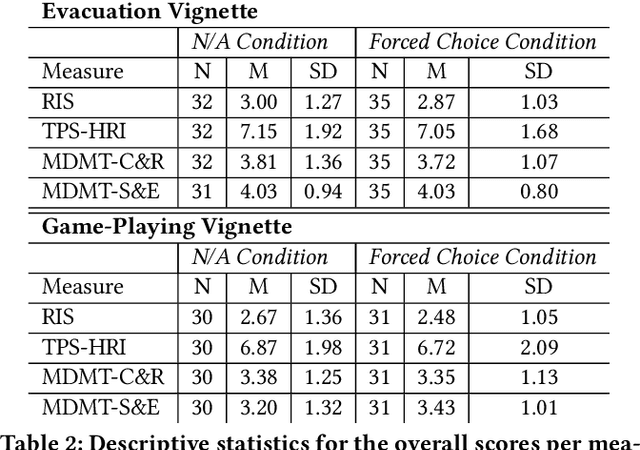

Abstract: Trust in human-robot interaction (HRI) is measured in two main ways: through subjective questionnaires and through behavioral tasks. To optimize measurements of trust through questionnaires, the field of HRI faces two challenges: the development of standardized measures that apply to a variety of robots with different capabilities, and the exploration of social and relational dimensions of trust in robots (e.g., benevolence). In this paper we examine how different trust questionnaires fare given these two challenges, which pull in different directions (generality vs. exploration), by studying whether people consider the items in these questionnaires applicable to different kinds of robots and interactions. In Study 1 we show that after being presented with a non-humanoid robot and an interaction scenario (fire evacuation), participants rated multiple questionnaire items, such as "This robot is principled", as "Non-applicable to robots in general" or "Non-applicable to this robot". In Study 2 we show that the frequency of these ratings changes (even for items rated as N/A to robots in general) when a new scenario is presented (game playing with a humanoid robot). Finally, while overall trust scores remained robust to N/A ratings, our results revealed potential fallacies in the way these scores are commonly interpreted. We conclude with recommendations for the development, use, and reporting of results of trust questionnaires in future studies, as well as theoretical implications for the field of HRI.

* 9 pages