Thang H. Nguyen

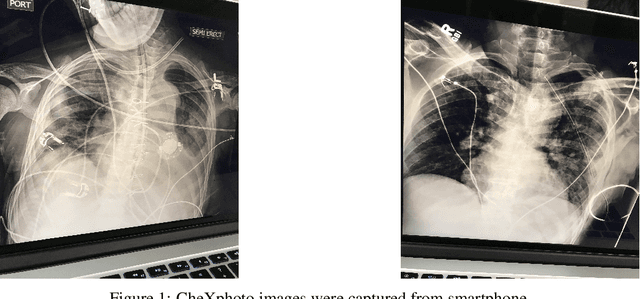

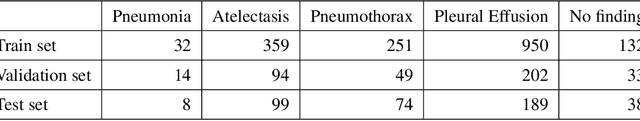

Interpretation of smartphone-captured radiographs utilizing a deep learning-based approach

Sep 13, 2020

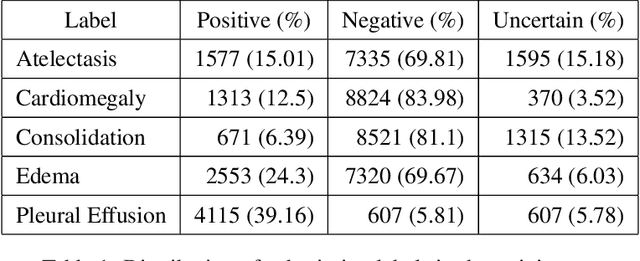

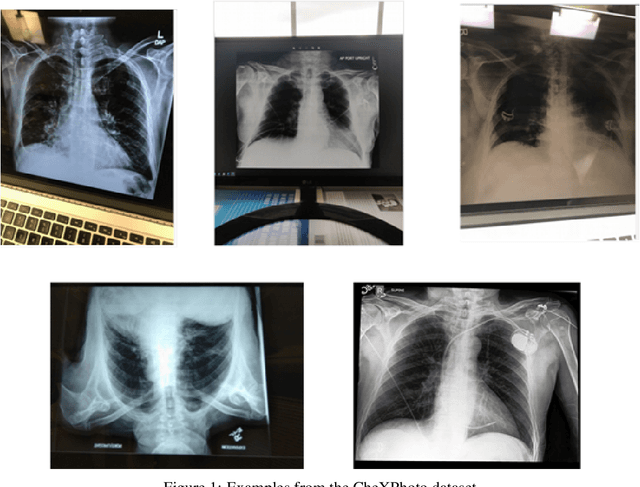

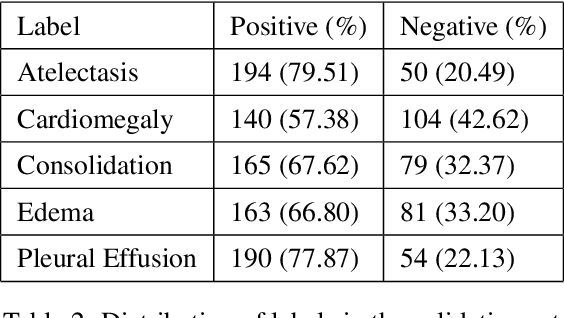

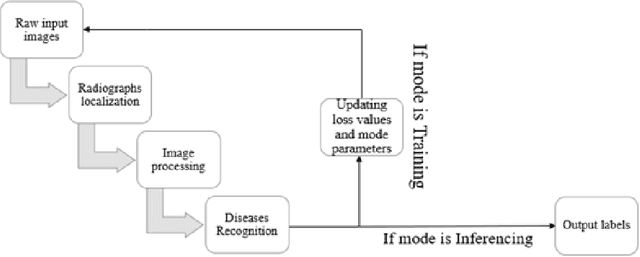

Abstract: Computer-aided diagnostic (CAD) systems that automatically interpret medical images have recently attracted considerable academic attention. For radiographs, several deep learning-based systems have been developed for multi-label disease recognition. However, none of them has been trained to work on smartphone-captured chest radiographs. In this study, we propose a system comprising a sequence of deep learning-based neural networks, trained on the newly released CheXphoto dataset, to address this gap. The proposed approach achieved promising results: an AUC of 0.684 and an average F1 score of 0.699. To the best of our knowledge, this is the first published study shown to be capable of processing smartphone-captured radiographs.

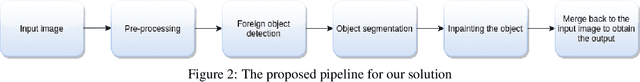

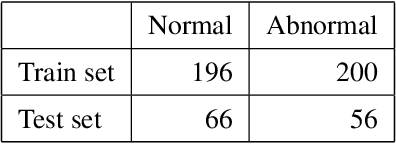

A novel approach to remove foreign objects from chest X-ray images

Aug 16, 2020

Abstract: We propose a deep learning approach for inpainting foreign objects in smartphone-camera-captured chest radiographs, using the CheXphoto dataset. Foreign objects, which can significantly degrade the quality of a computer-aided diagnostic prediction, are captured under various settings. In this paper, we use a multi-stage method that handles both the removal of foreign objects and the inpainting of the affected regions. First, an object detection model is trained to localize the foreign objects in the given image. Next, the binary mask of each object is extracted using a segmentation model. Each pair of binary mask and extracted object is then used for inpainting. Finally, the inpainted regions are merged back into the original image, producing a clean output free of foreign objects. We achieved state-of-the-art accuracy. The experimental results point to possible new applications of this method for detection in chest X-ray images.
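
The four stages described in this abstract (detect, segment, inpaint, merge) can be illustrated with a minimal sketch. This is not the authors' implementation: the trained detection, segmentation, and inpainting models are replaced here by simple threshold-based stand-ins, and every function name, the `threshold` parameter, and the toy image are assumptions made purely for illustration. Only the control flow between the stages follows the abstract.

```python
from statistics import mean

# All functions below are hypothetical stand-ins for trained models.

def detect_objects(image, threshold=200):
    """Stand-in detector: return one bounding box (top, left, bottom, right)
    around all pixels brighter than `threshold`, or [] if there are none."""
    coords = [(r, c) for r, row in enumerate(image)
              for c, value in enumerate(row) if value > threshold]
    if not coords:
        return []
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return [(min(rows), min(cols), max(rows) + 1, max(cols) + 1)]

def segment_object(crop, threshold=200):
    """Stand-in segmenter: binary mask, 1 where a pixel belongs to the object."""
    return [[1 if value > threshold else 0 for value in row] for row in crop]

def inpaint(crop, mask, fallback=0):
    """Stand-in inpainter: fill masked pixels with the mean of the unmasked
    pixels in the crop (or `fallback` if the whole crop is masked)."""
    background = [v for row, mrow in zip(crop, mask)
                  for v, m in zip(row, mrow) if not m]
    fill = round(mean(background)) if background else fallback
    return [[fill if m else v for v, m in zip(row, mrow)]
            for row, mrow in zip(crop, mask)]

def remove_foreign_objects(image):
    """Run detect -> segment -> inpaint -> merge on a grayscale image
    given as a list of rows; the input image is left unmodified."""
    result = [row[:] for row in image]
    for top, left, bottom, right in detect_objects(image):
        crop = [row[left:right] for row in image[top:bottom]]
        mask = segment_object(crop)
        patch = inpaint(crop, mask)
        # Merge the inpainted patch back into the copy of the original.
        for offset, patch_row in enumerate(patch):
            result[top + offset][left:right] = patch_row
    return result

# Toy 4x4 "radiograph": uniform background with two bright foreign pixels.
image = [[50] * 4 for _ in range(4)]
image[1][1] = image[2][2] = 255
cleaned = remove_foreign_objects(image)
```

In a real system each stand-in would be replaced by the corresponding trained network; the merge step, which writes each inpainted patch back at its bounding-box coordinates, would stay essentially the same.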
