Phuong D. Nguyen

Advancing Wound Filling Extraction on 3D Faces: Auto-Segmentation and Wound Face Regeneration Approach

Jul 13, 2023

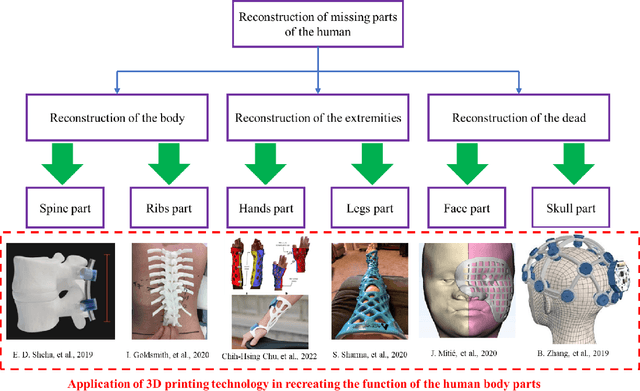

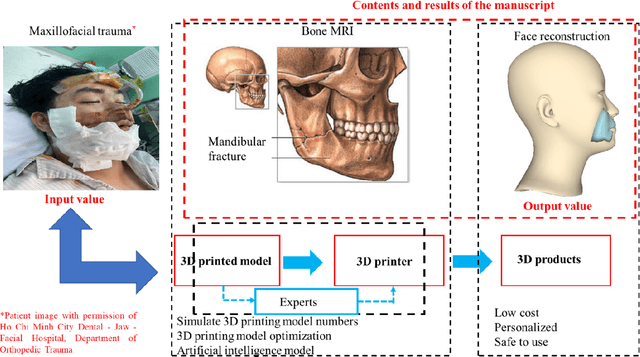

Abstract: Facial wound segmentation plays a crucial role in preoperative planning and optimizing patient outcomes in various medical applications. In this paper, we propose an efficient approach for automating 3D facial wound segmentation using a two-stream graph convolutional network. Our method leverages the Cir3D-FaIR dataset and addresses the challenge of data imbalance through extensive experimentation with different loss functions. To achieve accurate segmentation, we conducted thorough experiments and selected a high-performing model from the trained models. The selected model demonstrates exceptional segmentation performance for complex 3D facial wounds. Furthermore, based on the segmentation model, we propose an improved approach for extracting 3D facial wound fillers and compare it to the results of the previous study. Our method achieved a remarkable accuracy of 0.9999986% on the test suite, surpassing the performance of the previous method. From this result, we use 3D printing technology to illustrate the shape of the wound filling. The outcomes of this study have significant implications for physicians involved in preoperative planning and intervention design. By automating facial wound segmentation and improving the accuracy of wound-filling extraction, our approach can assist in carefully assessing and optimizing interventions, leading to enhanced patient outcomes. Additionally, it contributes to advancing facial reconstruction techniques by utilizing machine learning and 3D bioprinting for printing skin tissue implants. Our source code is available at https://github.com/SIMOGroup/WoundFilling3D.

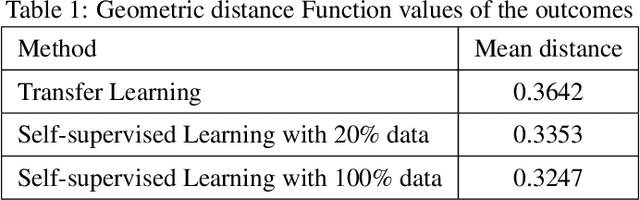

Application of Self-Supervised Learning to MICA Model for Reconstructing Imperfect 3D Facial Structures

Apr 08, 2023

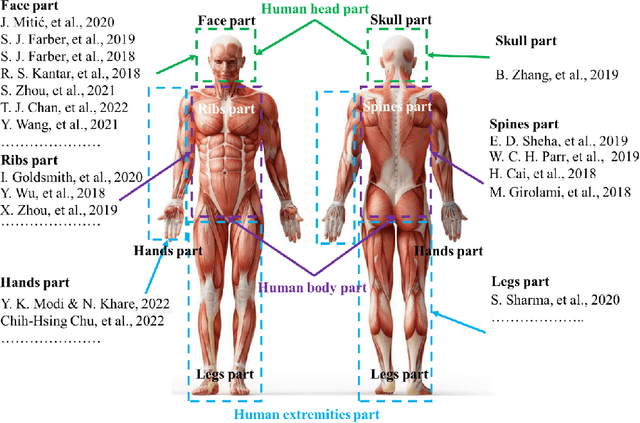

Abstract: In this study, we emphasize the integration of a pre-trained MICA model with an imperfect face dataset, employing a self-supervised learning approach. We present an innovative method for regenerating flawed facial structures, yielding 3D printable outputs that effectively support physicians in their patient treatment process. Our results highlight the model's capacity for concealing scars and achieving comprehensive facial reconstructions without discernible scarring. By capitalizing on pre-trained models and necessitating only a few hours of supplementary training, our methodology adeptly devises an optimal model for reconstructing damaged and imperfect facial features. Harnessing contemporary 3D printing technology, we institute a standardized protocol for fabricating realistic, camouflaging mask models for patients in a laboratory environment.

3D Facial Imperfection Regeneration: Deep learning approach and 3D printing prototypes

Mar 25, 2023

Abstract: This study explores the potential of a fully convolutional mesh autoencoder model for regenerating 3D natural faces in the presence of imperfect areas. We utilize deep learning approaches in graph processing and analysis to investigate the model's capability to recreate a filling part for facial scars. Our dataset creation approach can generate plausible facial scars in a virtual space that correspond to each unique circumstance. In particular, we propose a new method, named 3D Facial Imperfection Regeneration (3D-FaIR), for reproducing a complete face reconstruction based on the remaining features of the patient's face. To further enhance the applicability of the present research, we develop an improved outlier technique to separate the wounds of patients and provide appropriate wound cover models. In addition, the Cir3D-FaIR dataset of imperfect faces and the open-source code are released at https://github.com/SIMOGroup/3DFaIR. Our findings demonstrate the potential of the proposed approach to help patients recover more quickly and safely through convenient techniques. We hope that this research can contribute to the development of new products and innovative solutions for facial scar regeneration.
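
The abstract does not spell out the outlier technique, so the following is only a minimal sketch of one plausible reading: compare the damaged mesh with the regenerated mesh vertex by vertex and flag vertices whose displacement is unusually large. The function name `wound_vertex_indices`, the median-plus-MAD threshold, the factor `k`, and the assumption that both meshes share the same vertex ordering are all illustrative and not taken from the paper.

```python
import math
from statistics import median

def wound_vertex_indices(damaged, regenerated, k=3.0):
    """Return indices of vertices whose displacement is an outlier.

    Hypothetical helper: `damaged` and `regenerated` are equal-length
    lists of (x, y, z) tuples with identical vertex ordering.
    """
    # Per-vertex Euclidean distance between the two meshes.
    dists = [math.dist(p, q) for p, q in zip(damaged, regenerated)]
    # Robust threshold: median plus k times the median absolute deviation.
    med = median(dists)
    mad = median(abs(d - med) for d in dists)
    threshold = med + k * mad
    return [i for i, d in enumerate(dists) if d > threshold]

# Four nearly unchanged vertices and one that moved by 0.4 units.
damaged = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0), (0.5, 0.5, -0.4)]
regenerated = [(0, 0, 0.01), (1, 0, 0.0), (0, 1, 0.01), (1, 1, 0.02), (0.5, 0.5, 0.0)]
wound = wound_vertex_indices(damaged, regenerated)
```

The flagged vertices would then form the candidate wound region from which a filling part could be built. If the median absolute deviation is zero, the threshold collapses to the median, so a fixed distance floor may be a safer choice in practice.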

Interpretation of smartphone-captured radiographs utilizing a deep learning-based approach

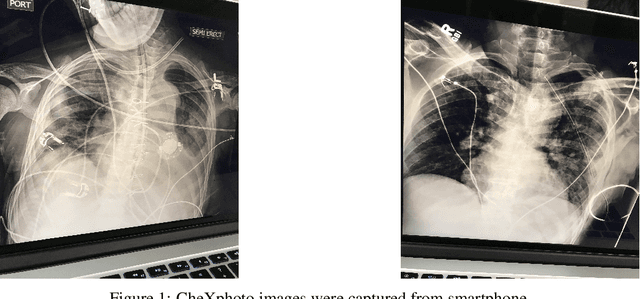

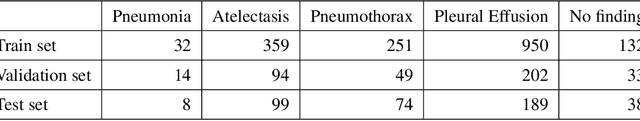

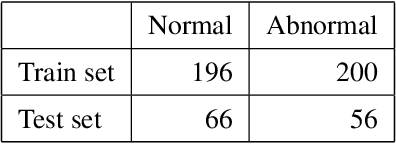

Sep 13, 2020

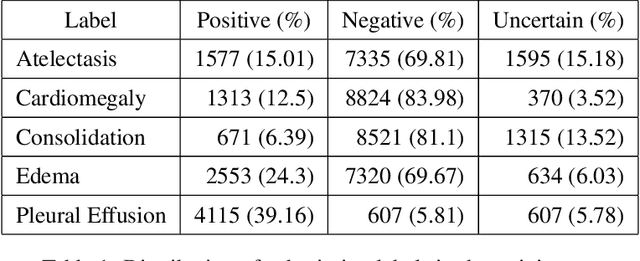

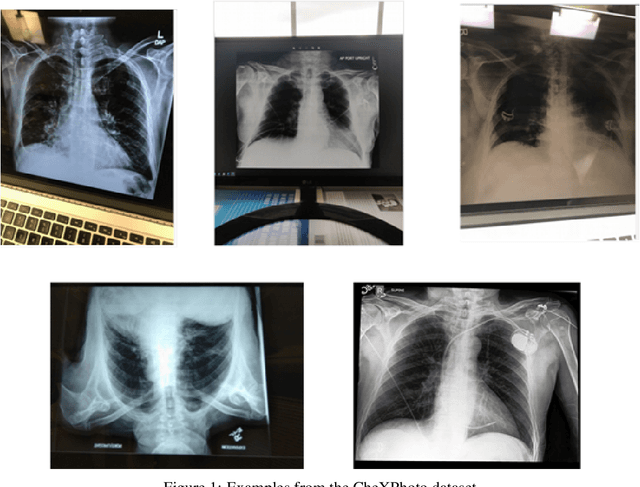

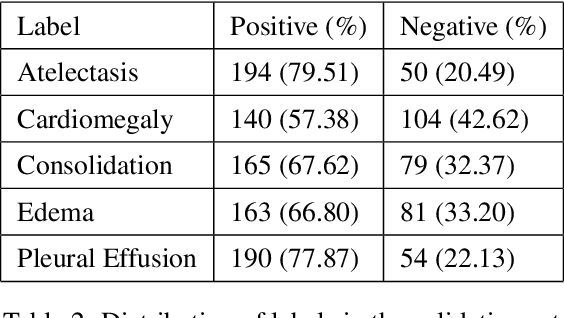

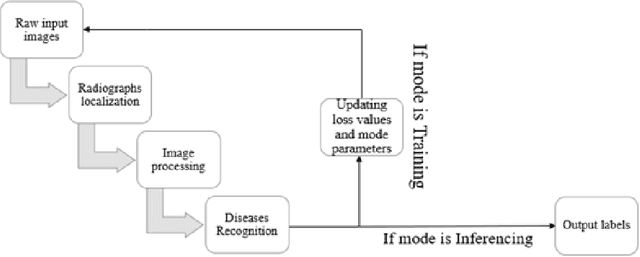

Abstract: Computer-aided diagnostic systems (CADs) that can automatically and effectively interpret medical images have recently attracted growing academic attention. For radiographs, several deep learning-based systems or models have been developed to study multi-label disease recognition tasks. However, none of them have been trained to work on smartphone-captured chest radiographs. In this study, we propose a system that comprises a sequence of deep learning-based neural networks trained on the newly released CheXphoto dataset to tackle this issue. The proposed approach achieved promising results of 0.684 in AUC and 0.699 in average F1 score. To the best of our knowledge, this is the first published study shown to be capable of processing smartphone-captured radiographs.
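
For readers unfamiliar with the reported metric, here is a minimal sketch of an average F1 score for multi-label predictions. It assumes the average is an unweighted mean of per-label F1 scores (macro averaging), which the abstract does not confirm; the function names and the toy labels are illustrative only.

```python
def f1_per_label(y_true, y_pred):
    """Compute F1 for each label column of binary multi-label data."""
    n_labels = len(y_true[0])
    scores = []
    for j in range(n_labels):
        pairs = [(t[j], p[j]) for t, p in zip(y_true, y_pred)]
        tp = sum(1 for t, p in pairs if t == 1 and p == 1)
        fp = sum(1 for t, p in pairs if t == 0 and p == 1)
        fn = sum(1 for t, p in pairs if t == 1 and p == 0)
        denom = 2 * tp + fp + fn
        # Convention chosen here: F1 is 0.0 when a label has no positives at all.
        scores.append(2 * tp / denom if denom else 0.0)
    return scores

def average_f1(y_true, y_pred):
    """Unweighted mean of the per-label F1 scores (macro average)."""
    scores = f1_per_label(y_true, y_pred)
    return sum(scores) / len(scores)

# Four images, two hypothetical disease labels each.
y_true = [[1, 0], [1, 1], [0, 1], [0, 0]]
y_pred = [[1, 0], [0, 1], [0, 1], [1, 0]]
score = average_f1(y_true, y_pred)
```

In this toy case the first label scores 0.5 and the second 1.0, giving an average of 0.75.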

A novel approach to remove foreign objects from chest X-ray images

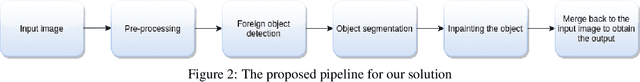

Aug 16, 2020

Abstract: We propose a deep learning approach for inpainting foreign objects in smartphone-camera-captured chest radiographs, utilizing the CheXphoto dataset. Foreign objects, which can significantly affect the quality of a computer-aided diagnostic prediction, are captured under various settings. In this paper, we use a multi-stage method to tackle both the removal of foreign objects and the inpainting of chest radiographs. First, an object detection model is trained to separate the foreign objects from the given image. Subsequently, the binary mask of each object is extracted utilizing a segmentation model. Each pair of binary mask and extracted object is then used for inpainting. Finally, the inpainted regions are merged back into the original image, resulting in a clean output free of foreign objects. To conclude, we achieved state-of-the-art accuracy. The experimental results suggest new possible applications of this method for detection tasks on chest X-ray images.
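
The four stages described in the abstract can be sketched as follows. This is only a structural outline under stated assumptions: `detect`, `segment`, and `inpaint` are hypothetical callables standing in for the trained models, and images and masks are represented as plain nested lists rather than the tensors a real pipeline would use.

```python
def merge_inpainted(original, inpainted, mask):
    """Take inpainted pixels where mask is 1, original pixels elsewhere."""
    return [
        [inp if m else orig for orig, inp, m in zip(o_row, i_row, m_row)]
        for o_row, i_row, m_row in zip(original, inpainted, mask)
    ]

def remove_foreign_objects(image, detect, segment, inpaint):
    """Detect objects, mask each one, inpaint it, and merge the result back."""
    result = image
    for box in detect(image):          # stage 1: locate foreign objects
        mask = segment(image, box)     # stage 2: binary mask for one object
        filled = inpaint(result, mask) # stage 3: synthesize the masked region
        result = merge_inpainted(result, filled, mask)  # stage 4: merge
    return result

# Toy stand-ins for the models: one object covering the top-right pixel.
image = [[10, 20], [30, 40]]
cleaned = remove_foreign_objects(
    image,
    detect=lambda img: [(0, 1)],
    segment=lambda img, box: [[0, 1], [0, 0]],
    inpaint=lambda img, mask: [[15, 15], [15, 15]],
)
```

Only the masked pixel changes, so the rest of the radiograph is preserved exactly as captured.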
