Telmo Menezes

CMB

Semantic Hypergraphs

Aug 28, 2019

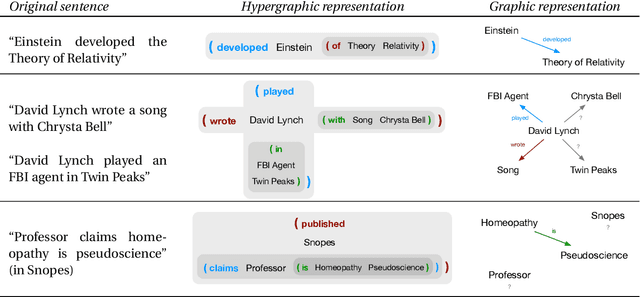

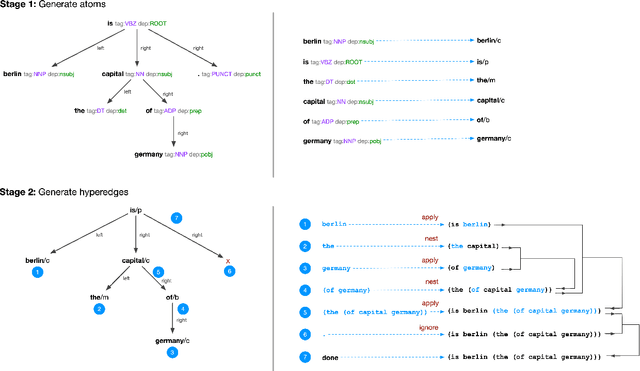

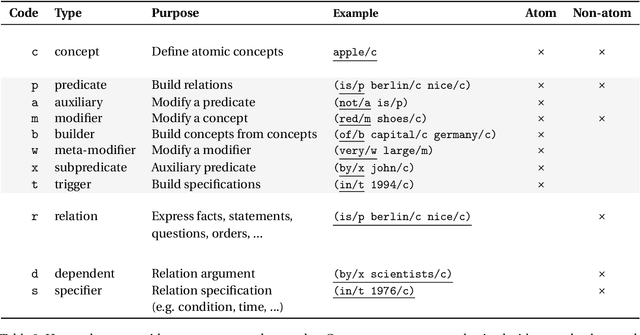

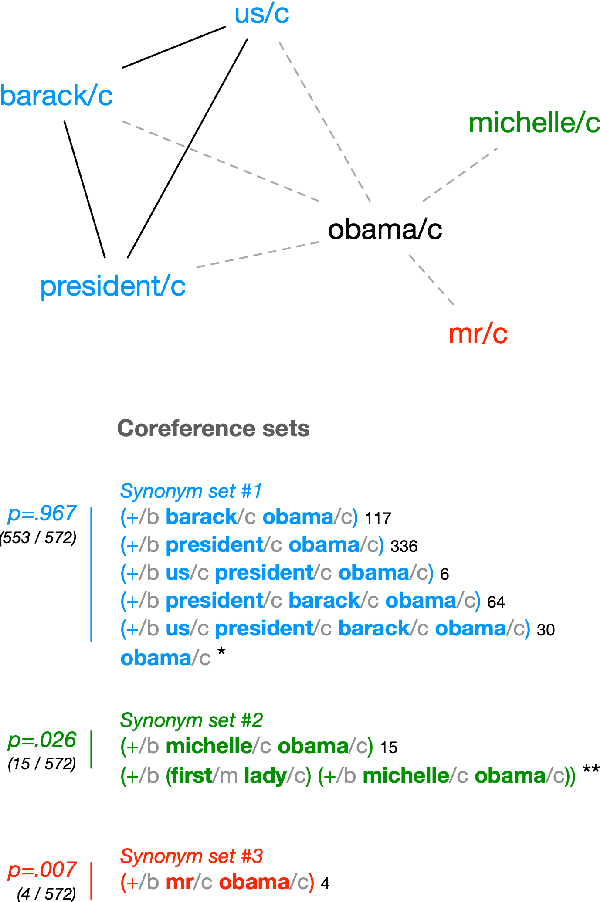

Abstract: Existing computational methods for the analysis of corpora of text in natural language are still far from approaching a human level of understanding. We attempt to advance the state of the art by introducing a model and algorithmic framework to transform text into recursively structured data. We apply this to the analysis of news titles extracted from a social news aggregation website. We show that a recursive ordered hypergraph is a sufficiently generic structure to represent a significant number of fundamental natural language constructs, with advantages over conventional approaches such as semantic graphs. We present a pipeline of transformations from the output of conventional NLP algorithms to such hypergraphs, which we denote as semantic hypergraphs. The features of these transformations include the creation of new concepts from existing ones, the organisation of statements into regular structures of predicates followed by an arbitrary number of entities, and the ability to represent statements about other statements. We demonstrate knowledge inference from the hypergraph, identifying claims and expressions of conflicts, along with their participating actors and topics. We show how this enables the actor-centric summarization of conflicts, comparison of topics of claims between actors and networks of conflicts between actors in the context of a given topic. On the whole, we propose a hypergraphic knowledge representation model that can be used to provide effective overviews of a large corpus of text in natural language.
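To make the idea of a recursive ordered hypergraph concrete, here is a minimal sketch that assumes nested Python tuples as a stand-in for hyperedges. The helper names `atoms` and `depth` and the example sentence are hypothetical illustrations, not the authors' notation or software.

```python
from typing import List, Tuple, Union

# Assumption for illustration: an atom is a plain string, and a hyperedge is
# an ordered tuple whose members are atoms or further hyperedges.
Edge = Union[str, Tuple["Edge", ...]]


def atoms(edge: Edge) -> List[str]:
    """Return every atom in the edge, in left-to-right order."""
    if isinstance(edge, str):
        return [edge]
    found: List[str] = []
    for member in edge:
        found.extend(atoms(member))
    return found


def depth(edge: Edge) -> int:
    """Return the nesting depth: 0 for an atom, 1 for a flat edge, and so on."""
    if isinstance(edge, str):
        return 0
    return 1 + max((depth(member) for member in edge), default=0)


# A predicate followed by its entities: (is sky blue)
statement = ("is", "sky", "blue")

# A statement about another statement: (says mary (is sky blue))
claim = ("says", "mary", statement)

print(atoms(claim))  # ['says', 'mary', 'is', 'sky', 'blue']
print(depth(claim))  # 2
```

Because order is preserved, the first position of each tuple can hold the predicate, and because a tuple may contain other tuples, a claim can take a whole statement as one of its arguments.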

Automatic Discovery of Families of Network Generative Processes

Jun 26, 2019

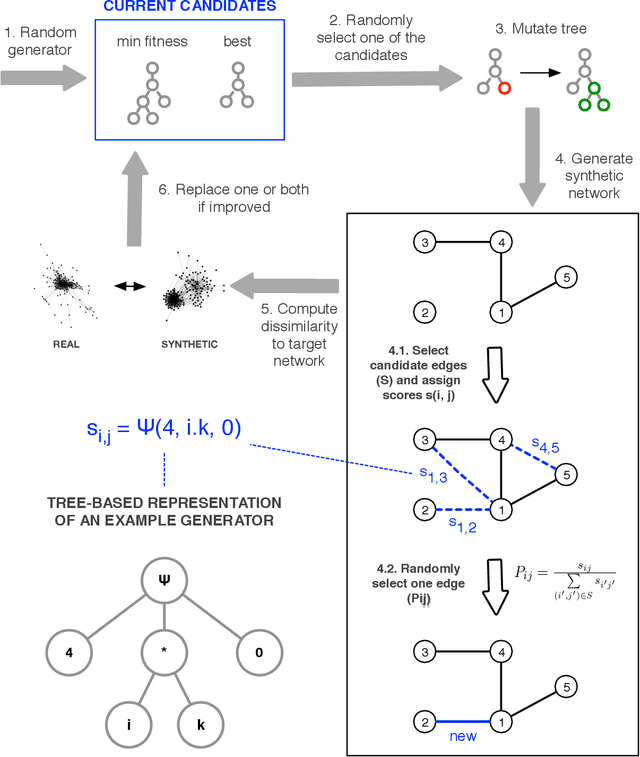

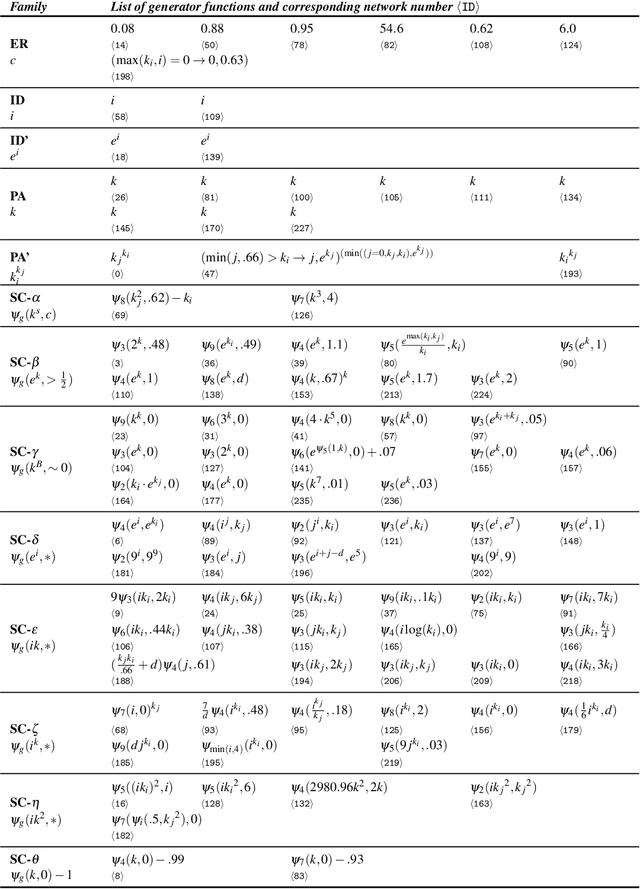

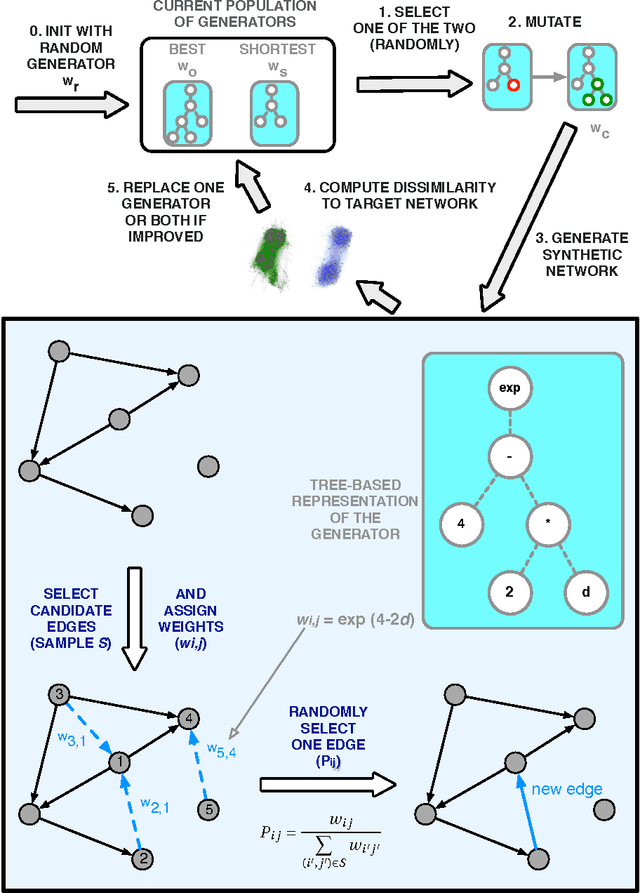

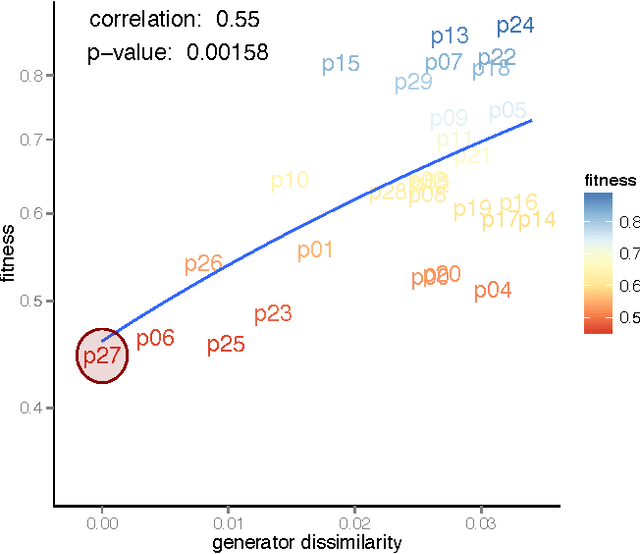

Abstract: Designing plausible network models typically requires scholars to form a priori intuitions on the key drivers of network formation. Oftentimes, these intuitions are supported by the statistical estimation of a selection of network evolution processes which will form the basis of the model to be developed. Machine learning techniques have lately been introduced to assist the automatic discovery of generative models. These approaches may more broadly be described as "symbolic regression", where fundamental network dynamic functions, rather than just parameters, are evolved through genetic programming. This chapter first aims at reviewing the principles, efforts and the emerging literature in this direction, which is very much aligned with the idea of creating artificial scientists. Our contribution then aims more specifically at building upon an approach recently developed by us [Menezes & Roth, 2014] in order to demonstrate the existence of families of networks that may be described by similar generative processes. In other words, symbolic regression may be used to group networks according to their inferred genotype (in terms of generative processes) rather than their observed phenotype (in terms of statistical/topological features). Our empirical case is based on an original data set of 238 anonymized ego-centered networks of Facebook friends, further yielding insights on the formation of sociability networks.

Non-Evolutionary Superintelligences Do Nothing, Eventually

Sep 07, 2016

Abstract: There is overwhelming evidence that human intelligence is a product of Darwinian evolution. Investigating the consequences of self-modification, and more precisely, the consequences of utility function self-modification, leads to the stronger claim that not only human, but any form of intelligence is ultimately only possible within evolutionary processes. Human-designed artificial intelligences can only remain stable until they discover how to manipulate their own utility function. By definition, a human designer cannot prevent a superhuman intelligence from modifying itself, even if protection mechanisms against this action are put in place. Without evolutionary pressure, sufficiently advanced artificial intelligences become inert by simplifying their own utility function. Within evolutionary processes, the implicit utility function is always reducible to persistence, and the control of superhuman intelligences embedded in evolutionary processes is not possible. Mechanisms against utility function self-modification are ultimately futile. Instead, scientific effort toward the mitigation of existential risks from the development of superintelligences should be in two directions: understanding consciousness, and the complex dynamics of evolutionary systems.

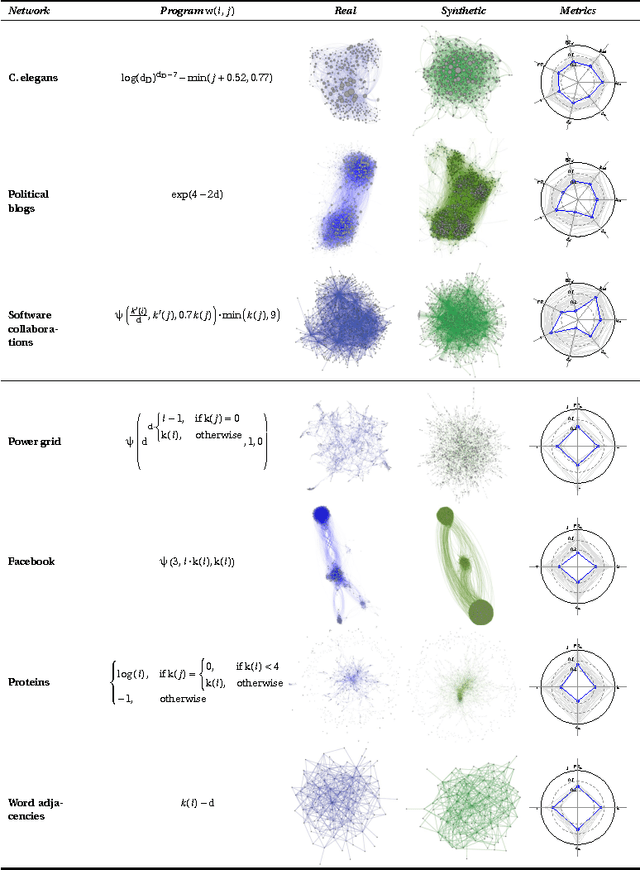

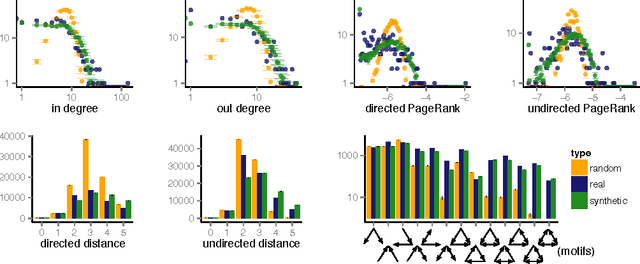

Symbolic regression of generative network models

Sep 08, 2014

Abstract: Networks are a powerful abstraction with applicability to a variety of scientific fields. Models explaining their morphology and growth processes permit a wide range of phenomena to be more systematically analysed and understood. At the same time, creating such models is often challenging and requires insights that may be counter-intuitive. Yet there currently exists no general method to arrive at better models. We have developed an approach to automatically detect realistic decentralised network growth models from empirical data, employing a machine learning technique inspired by natural selection and defining a unified formalism to describe such models as computer programs. As the proposed method is completely general and does not assume any pre-existing models, it can be applied "out of the box" to any given network. To validate our approach empirically, we systematically rediscover pre-defined growth laws underlying several canonical network generation models and credible laws for diverse real-world networks. We were able to find programs that are simple enough to lead to an actual understanding of the mechanisms proposed, namely for a simple brain and a social network.
