Tashnim Chowdhury

RescueNet: A High Resolution UAV Semantic Segmentation Benchmark Dataset for Natural Disaster Damage Assessment

Feb 24, 2022

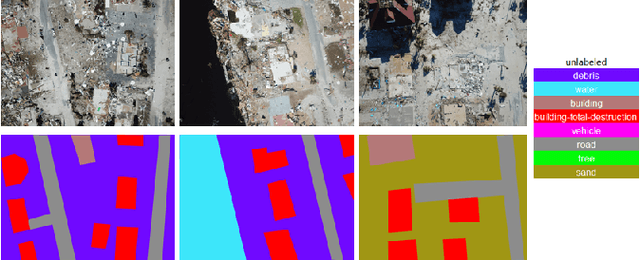

Abstract: Climate change has brought a recent surge of natural disasters around the world. These disasters have a severe impact on both nature and human lives, and economic losses from hurricanes are growing. A prompt response from rescue teams is crucial for saving human lives and reducing economic cost. Deep learning based computer vision techniques can help with scene understanding and give rescue teams a precise damage assessment. Semantic segmentation, an active research area in computer vision, assigns a label to each pixel of an image and can therefore be a valuable tool for reducing the impact of hurricanes. Unfortunately, available datasets for natural disaster damage assessment lack detailed annotation of the affected areas and therefore do not support deep learning models in total damage assessment. To this end, we introduce RescueNet, a high-resolution post-disaster dataset for semantic segmentation to assess damage after natural disasters. RescueNet consists of post-disaster images collected after Hurricane Michael. The data was collected using Unmanned Aerial Vehicles (UAVs) from several areas impacted by the hurricane. RescueNet is unique in providing high-resolution post-disaster images together with comprehensive annotation of each image. While most existing datasets annotate only part of the scene, such as buildings, roads, or rivers, RescueNet provides pixel-level annotation of all classes, including building, road, pool, tree, debris, and so on. We further analyze the usefulness of the dataset by implementing state-of-the-art segmentation models on RescueNet. The experiments demonstrate that our dataset can be valuable for further improving existing methodologies for natural disaster damage assessment.

Attention Based Semantic Segmentation on UAV Dataset for Natural Disaster Damage Assessment

Jun 01, 2021

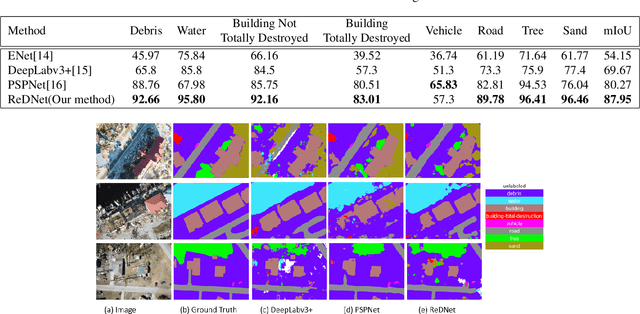

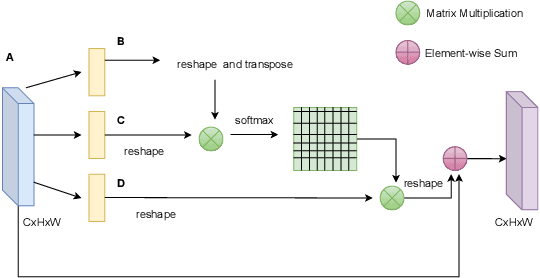

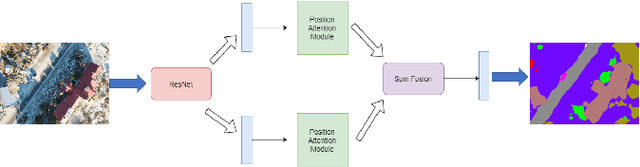

Abstract: The detrimental impacts of climate change include stronger and more destructive hurricanes happening all over the world. Identifying the damaged structures in an area, including buildings and roads, is vital because it helps rescue teams plan their efforts to minimize the damage caused by a natural disaster. Semantic segmentation helps to identify different parts of an image. We implement a novel self-attention based semantic segmentation model on a high-resolution UAV dataset and attain a mean IoU score of around 88% on the test set. This result motivates the use of self-attention schemes in natural disaster damage assessment, which can help save human lives and reduce economic losses.
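For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how mean IoU (intersection over union, averaged over classes) is commonly computed for a predicted and a ground-truth label mask. The function name `mean_iou`, the nested-list mask representation, and the choice to skip classes absent from both masks are illustrative assumptions; the abstract does not state the exact evaluation protocol used in the paper.

```python
from typing import Sequence


def mean_iou(
    pred: Sequence[Sequence[int]],
    target: Sequence[Sequence[int]],
    num_classes: int,
) -> float:
    """Average per-class IoU over classes present in either mask.

    Assumption: classes that appear in neither mask are skipped
    rather than counted as IoU 0 or 1.
    """
    intersection = [0] * num_classes
    union = [0] * num_classes

    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p == t:
                # Pixel agrees: counts toward intersection and union of that class.
                intersection[p] += 1
                union[p] += 1
            else:
                # Pixel disagrees: it enlarges the union of both classes involved.
                union[p] += 1
                union[t] += 1

    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


# Toy 2x3 masks with three hypothetical class ids (0, 1, 2).
target_mask = [[0, 0, 1], [1, 2, 2]]
pred_mask = [[0, 1, 1], [1, 2, 0]]
score = mean_iou(pred_mask, target_mask, num_classes=3)
print(f"mean IoU = {score:.3f}")
```

In this toy case the per-class IoUs are 1/3, 2/3, and 1/2. Real evaluations usually accumulate the intersection and union counts over the whole test set before dividing, rather than averaging per-image scores.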

FloodNet: A High Resolution Aerial Imagery Dataset for Post Flood Scene Understanding

Dec 05, 2020

Abstract: Visual scene understanding is the core task in making any crucial decision in any computer vision system. Although popular computer vision datasets like Cityscapes, MS-COCO, and PASCAL provide good benchmarks for several tasks (e.g. image classification, segmentation, object detection), these datasets are hardly suitable for post-disaster damage assessment. On the other hand, existing natural disaster datasets consist mainly of satellite imagery, which has low spatial resolution and a high revisit period. Therefore, they cannot support quick and efficient damage assessment. Unmanned Aerial Vehicles (UAVs) can readily access difficult places during a disaster and collect the high-resolution imagery required for the aforementioned computer vision tasks. To address these issues we present FloodNet, a high-resolution UAV imagery dataset captured after Hurricane Harvey. This dataset shows the post-flood damage in the affected areas. The images are labeled pixel-wise for the semantic segmentation task, and questions are produced for the task of visual question answering. FloodNet poses several challenges, including detecting flooded roads and buildings and distinguishing between natural water and flood water. With the advancement of deep learning algorithms, we can analyze the impact of a disaster and gain a precise understanding of the affected areas. In this paper, we compare and contrast the performance of baseline methods for image classification, semantic segmentation, and visual question answering on our dataset.

Comprehensive Semantic Segmentation on High Resolution UAV Imagery for Natural Disaster Damage Assessment

Sep 06, 2020

Abstract: In this paper, we present a large-scale Hurricane Michael dataset for visual perception in disaster scenarios and analyze state-of-the-art deep neural network models for semantic segmentation. The dataset consists of around 2000 high-resolution aerial images with annotated ground-truth data for semantic segmentation. We discuss the challenges of the dataset and train state-of-the-art methods on it to evaluate how well they can recognize disaster situations. Finally, we discuss challenges for future research.
