Tanny Chavez

Generating Realistic X-ray Scattering Images Using Stable Diffusion and Human-in-the-loop Annotations

Aug 22, 2024

Abstract: We fine-tuned a foundational stable diffusion model using X-ray scattering images and their corresponding descriptions to generate new scientific images from given prompts. However, some of the generated images exhibit significant unrealistic artifacts, commonly known as "hallucinations". To address this issue, we trained various computer vision models on a dataset composed of 60% human-approved generated images and 40% experimental images to detect unrealistic images. The classified images were then reviewed and corrected by human experts, and subsequently used to further refine the classifiers in subsequent rounds of training and inference. Our evaluations demonstrate the feasibility of generating high-fidelity, domain-specific images using a fine-tuned diffusion model. We anticipate that generative AI will play a crucial role in enhancing data augmentation and driving the development of digital twins in scientific research facilities.

DLSIA: Deep Learning for Scientific Image Analysis

Aug 02, 2023

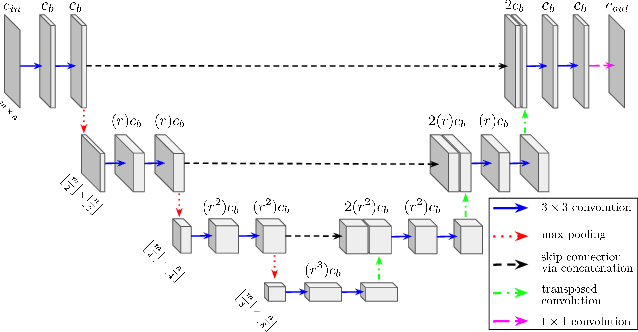

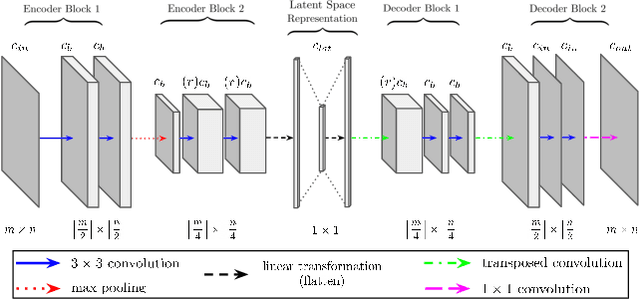

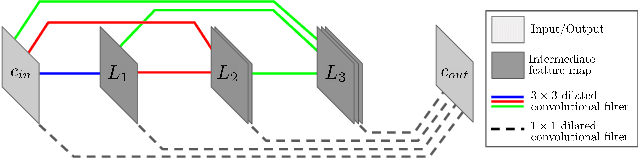

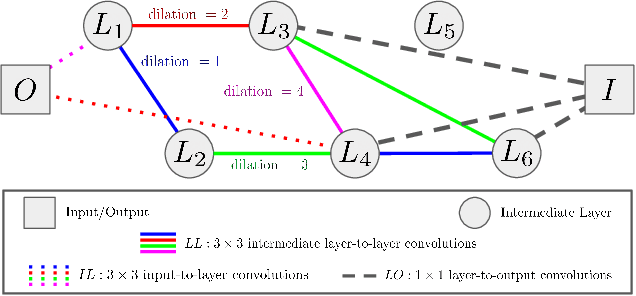

Abstract: We introduce DLSIA (Deep Learning for Scientific Image Analysis), a Python-based machine learning library that empowers scientists and researchers across diverse scientific domains with a range of customizable convolutional neural network (CNN) architectures for a wide variety of tasks in image analysis to be used in downstream data processing, or for experiment-in-the-loop computing scenarios. DLSIA features easy-to-use architectures such as autoencoders, tunable U-Nets, and parameter-lean mixed-scale dense networks (MSDNets). Additionally, we introduce sparse mixed-scale networks (SMSNets), generated using random graphs and sparse connections. As experimental data continues to grow in scale and complexity, DLSIA provides accessible CNN construction and abstracts CNN complexities, allowing scientists to tailor their machine learning approaches, accelerate discoveries, foster interdisciplinary collaboration, and advance research in scientific image analysis.

MLExchange: A web-based platform enabling exchangeable machine learning workflows

Aug 23, 2022

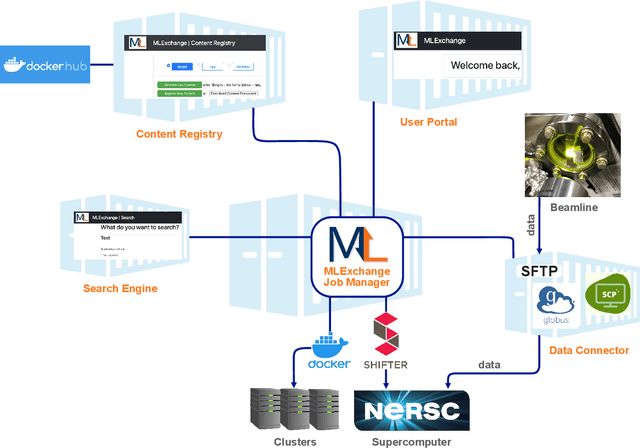

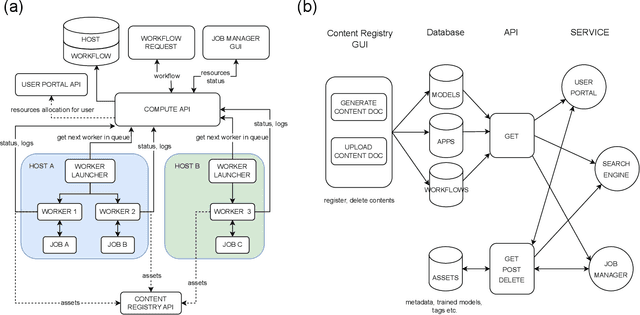

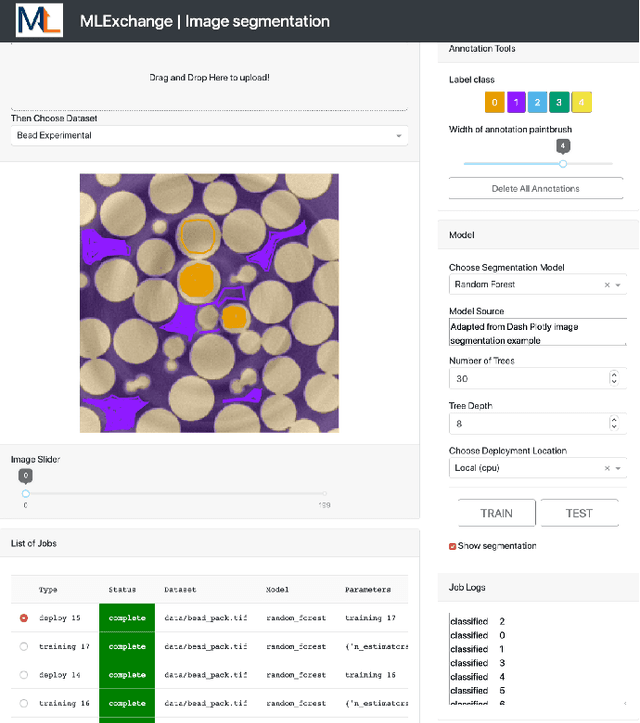

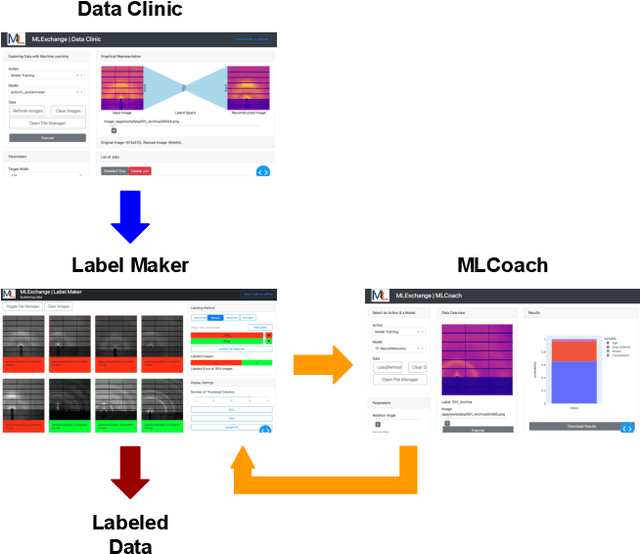

Abstract: Machine learning (ML) algorithms are increasingly helping scientific communities across different disciplines and institutions address large and diverse data problems. However, many available ML tools are programmatically demanding and computationally costly. The MLExchange project aims to build a collaborative platform equipped with enabling tools that allow scientists and facility users who do not have a profound ML background to use ML and computational resources in scientific discovery. At a high level, we are targeting a full user experience in which managing and exchanging ML algorithms, workflows, and data are readily available through web applications. So far, we have built four major components, i.e., the central job manager, the centralized content registry, the user portal, and the search engine, and successfully deployed these components on a testing server. Since each component is an independent container, the whole platform or its individual service(s) can be easily deployed on servers of different scales, ranging from a laptop (usually a single user) to high-performance computing (HPC) clusters accessed (simultaneously) by many users. Thus, MLExchange supports flexible usage scenarios: users can either access the services and resources from a remote server or run the whole platform or its individual service(s) within their local network.
