Sunil Reddy Tiyyagura

DeepPSL: End-to-end perception and reasoning with applications to zero shot learning

Oct 13, 2021

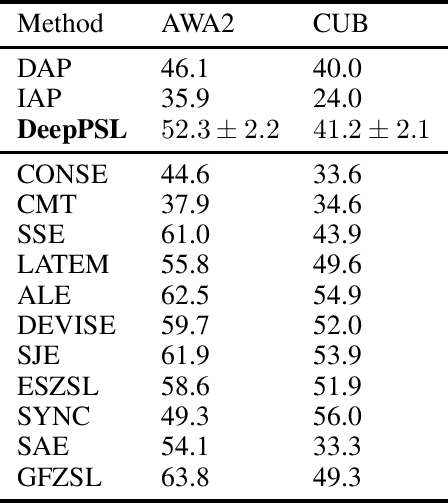

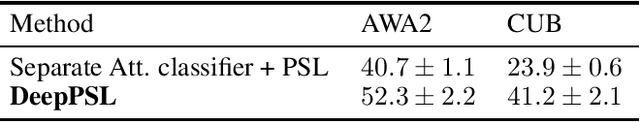

Abstract: We introduce DeepPSL, a variant of Probabilistic Soft Logic (PSL) that produces an end-to-end trainable system integrating reasoning and perception. PSL represents first-order logic as a convex graphical model, the hinge-loss Markov random field (HL-MRF). PSL stands out among probabilistic logic frameworks for its tractability, having been applied to systems of more than 1 billion ground rules. The key to our approach is to represent predicates in first-order logic using deep neural networks and then to approximately back-propagate through the HL-MRF, thereby training every aspect of the first-order system being represented. We believe this approach is an interesting direction for integrating deep learning and reasoning techniques, with applications to knowledge base learning, multi-task learning, and explainability. We evaluate DeepPSL on a zero-shot learning problem in image classification. State-of-the-art results demonstrate the utility and flexibility of our approach.
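To make the hinge-loss idea concrete, the following is a minimal sketch of how a single ground rule becomes a hinge-loss potential under the standard Lukasiewicz relaxation used by PSL. It is not the authors' implementation: the function names, the example truth values, and the `weight` and `squared` parameters are hypothetical, chosen only for illustration. In a DeepPSL-style system the truth values would presumably be outputs of neural networks rather than constants.

```python
def distance_to_satisfaction(body, head):
    """Distance to satisfaction of the ground rule (b1 AND ... AND bn) -> head.

    `body` is a list of soft truth values in [0, 1] and `head` is a soft
    truth value in [0, 1]. The result is 0 when the rule is satisfied.
    """
    # Lukasiewicz conjunction: max(0, sum(b_i) - (n - 1)).
    body_truth = max(0.0, sum(body) - (len(body) - 1))
    # The implication is violated by however much the body exceeds the head.
    return max(0.0, body_truth - head)


def hinge_potential(body, head, weight=1.0, squared=False):
    """Weighted hinge-loss potential for one ground rule (hypothetical helper)."""
    d = distance_to_satisfaction(body, head)
    return weight * (d * d if squared else d)


if __name__ == "__main__":
    # Fully true body, fully false head: the rule is maximally violated.
    print(distance_to_satisfaction([1.0, 1.0], 0.0))
    # Fully true body and head: the rule is satisfied.
    print(distance_to_satisfaction([1.0, 1.0], 1.0))
```

Each potential is a maximum of linear functions of the truth values, so it is convex; a weighted sum of such potentials is also convex, which is the property that makes inference in an HL-MRF tractable.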
