Nigel P. Duffy

DeepPSL: End-to-end perception and reasoning with applications to zero shot learning

Oct 13, 2021

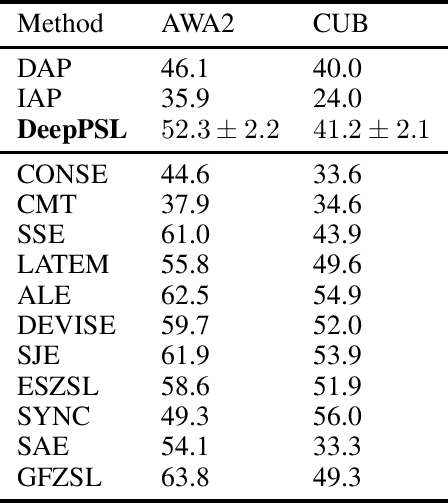

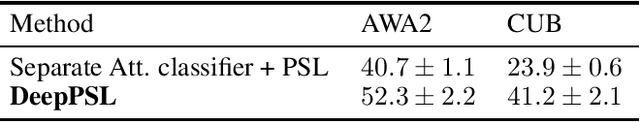

Abstract:We introduce DeepPSL a variant of Probabilistic Soft Logic (PSL) to produce an end-to-end trainable system that integrates reasoning and perception. PSL represents first-order logic in terms of a convex graphical model -- Hinge Loss Markov random fields (HL-MRFs). PSL stands out among probabilistic logic frameworks due to its tractability having been applied to systems of more than 1 billion ground rules. The key to our approach is to represent predicates in first-order logic using deep neural networks and then to approximately back-propagate through the HL-MRF and thus train every aspect of the first-order system being represented. We believe that this approach represents an interesting direction for the integration of deep learning and reasoning techniques with applications to knowledge base learning, multi-task learning, and explainability. We evaluate DeepPSL on a zero shot learning problem in image classification. State of the art results demonstrate the utility and flexibility of our approach.

DeepCPCFG: Deep Learning and Context Free Grammars for End-to-End Information Extraction

Mar 10, 2021

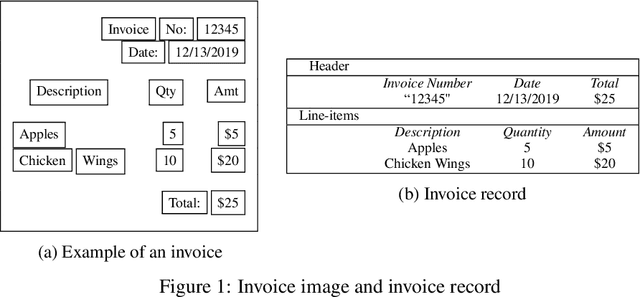

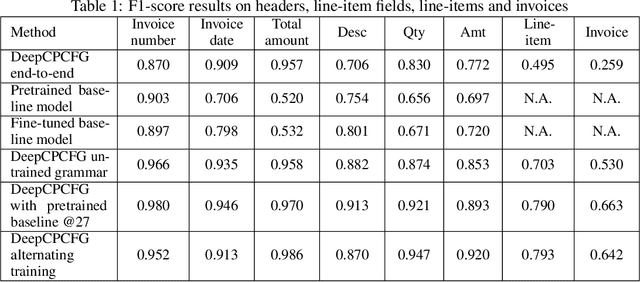

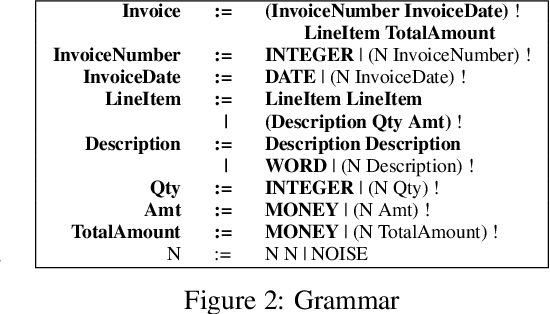

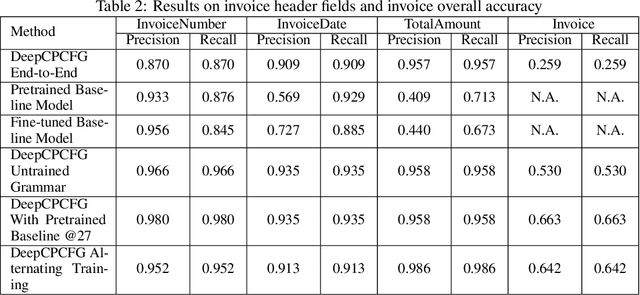

Abstract:We combine deep learning and Conditional Probabilistic Context Free Grammars (CPCFG) to create an end-to-end system for extracting structured information from complex documents. For each class of documents, we create a CPCFG that describes the structure of the information to be extracted. Conditional probabilities are modeled by deep neural networks. We use this grammar to parse 2-D documents to directly produce structured records containing the extracted information. This system is trained end-to-end with (Document, Record) pairs. We apply this approach to extract information from scanned invoices achieving state-of-the-art results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge