Subhankar Bose

ON-OFF Neuromorphic ISING Machines using Fowler-Nordheim Annealers

Jun 07, 2024

Abstract: We introduce NeuroSA, a neuromorphic architecture specifically designed to ensure asymptotic convergence to the ground state of an Ising problem using an annealing process governed by the physics of Fowler-Nordheim (FN) quantum-mechanical tunneling. The core component of NeuroSA consists of a pair of asynchronous ON-OFF neurons, which effectively map classical simulated annealing (SA) dynamics onto a network of integrate-and-fire (IF) neurons. The threshold of each ON-OFF neuron pair is adaptively adjusted by an FN annealer, which replicates the optimal escape mechanism and convergence of SA, particularly at low temperatures. To validate the effectiveness of our neuromorphic Ising machine, we systematically solved various benchmark MAX-CUT combinatorial optimization problems. Across multiple runs, NeuroSA consistently generates solutions that approach the state-of-the-art level with high accuracy (greater than 99%), without any graph-specific hyperparameter tuning. For practical illustration, we present results from an implementation of NeuroSA on the SpiNNaker2 platform, highlighting the feasibility of mapping our proposed architecture onto a standard neuromorphic accelerator platform.
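For readers unfamiliar with the baseline that the abstract refers to, the following is a minimal sketch of classical simulated annealing applied to unweighted MAX-CUT written as an Ising problem. It is not the NeuroSA architecture or the FN annealer: the function names, the logarithmic cooling schedule, and the parameters `steps`, `t0`, and `seed` are all illustrative assumptions.

```python
import math
import random


def cut_value(edges, spins):
    """Count edges whose endpoints carry opposite spins (unweighted MAX-CUT)."""
    return sum(1 for u, v in edges if spins[u] != spins[v])


def anneal_max_cut(n, edges, steps=20000, t0=2.0, seed=0):
    """Classical single-spin-flip simulated annealing (illustrative sketch only).

    Hypothetical helper, not taken from the paper. Spins are +1/-1 and the
    objective is the number of cut edges.
    """
    rng = random.Random(seed)
    neighbours = [[] for _ in range(n)]
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)

    spins = [rng.choice((-1, 1)) for _ in range(n)]
    current = cut_value(edges, spins)
    best, best_spins = current, spins[:]

    for k in range(steps):
        # Assumed schedule: temperature decays as 1/log(k), a textbook choice.
        temperature = t0 / math.log(k + 2)
        i = rng.randrange(n)
        # Flipping spin i cuts each edge to a same-spin neighbour (+1)
        # and uncuts each edge to an opposite-spin neighbour (-1).
        gain = sum(spins[i] * spins[j] for j in neighbours[i])
        # Metropolis rule: always accept improvements, sometimes accept losses.
        if gain >= 0 or rng.random() < math.exp(gain / temperature):
            spins[i] = -spins[i]
            current += gain
            if current > best:
                best, best_spins = current, spins[:]
    return best, best_spins


if __name__ == "__main__":
    square = [(0, 1), (1, 2), (2, 3), (3, 0)]  # a 4-cycle, used as a toy graph
    print(anneal_max_cut(4, square))
```

Occasionally accepting a move that lowers the cut value is what lets the search escape local optima; the abstract describes NeuroSA as reproducing this escape behaviour through neuron thresholds set by the FN annealer rather than through an explicit temperature variable.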

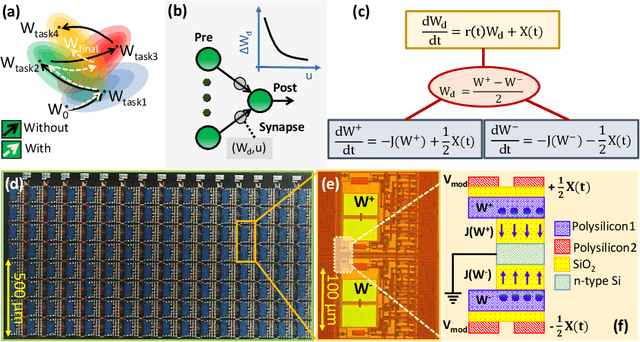

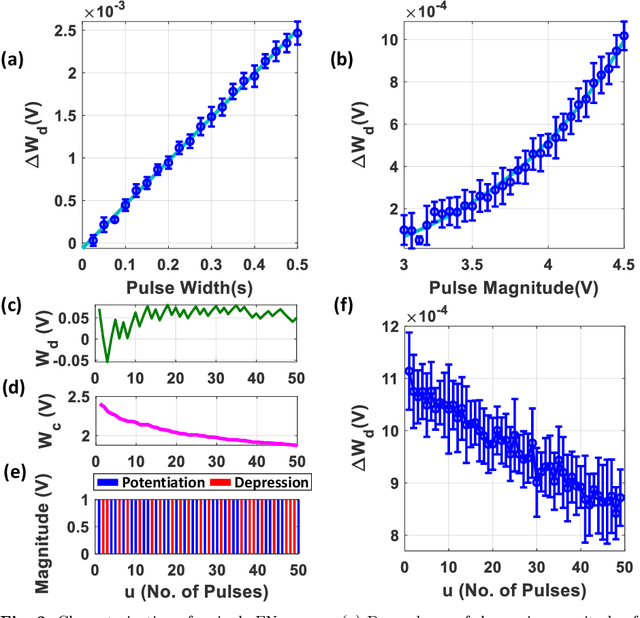

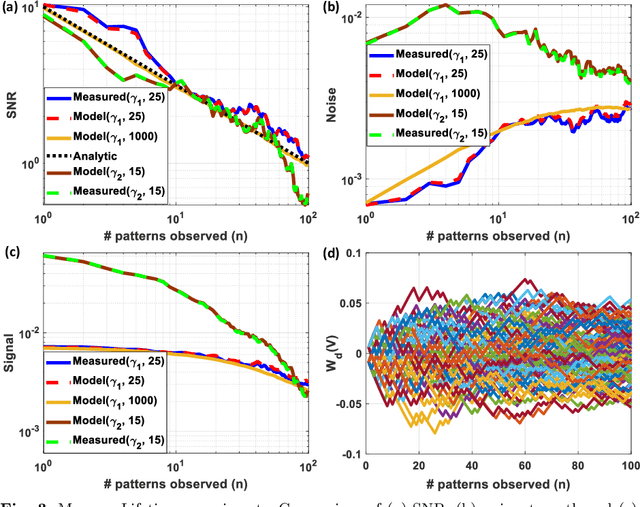

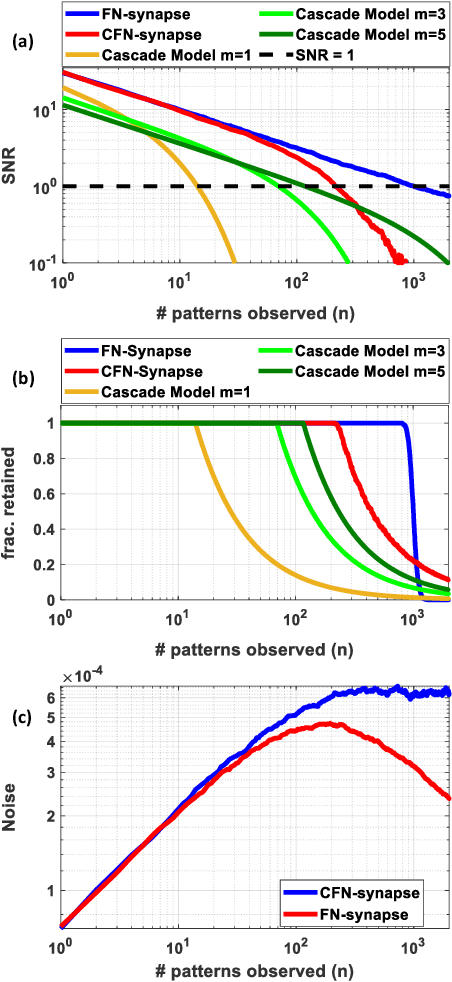

On-device Synaptic Memory Consolidation using Fowler-Nordheim Quantum-tunneling

Jun 27, 2022

Abstract: Synaptic memory consolidation has been heralded as one of the key mechanisms for supporting continual learning in neuromorphic Artificial Intelligence (AI) systems. Here we report that a Fowler-Nordheim (FN) quantum-tunneling device can implement synaptic memory consolidation similar to what can be achieved by algorithmic consolidation models like the cascade and the elastic weight consolidation (EWC) models. The proposed FN-synapse not only stores the synaptic weight but also stores the synapse's historical usage statistic on the device itself. We also show that the operation of the FN-synapse is near-optimal in terms of the synaptic lifetime, and we demonstrate that a network comprising FN-synapses outperforms a comparable EWC network for a small benchmark continual learning task. With an energy footprint of femtojoules per synaptic update, we believe that the proposed FN-synapse provides an ultra-energy-efficient approach for implementing both synaptic memory consolidation and persistent learning.
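As a point of reference for the algorithmic model named in the abstract, here is a minimal sketch of the quadratic penalty used by elastic weight consolidation. It illustrates the software baseline only, not the FN-synapse device; the function name `ewc_penalty` and the argument names are hypothetical, and `importance` stands in for the per-weight Fisher information estimate that EWC uses.

```python
def ewc_penalty(weights, anchor, importance, lam=1.0):
    """EWC-style regulariser: (lam / 2) * sum_i F_i * (w_i - w*_i)^2.

    weights    -- current parameter values
    anchor     -- parameter values learned on the earlier task (w*)
    importance -- per-parameter importance (Fisher information in EWC)
    lam        -- strength of the consolidation term (assumed hyperparameter)
    """
    return 0.5 * lam * sum(
        f * (w - a) ** 2 for w, a, f in zip(weights, anchor, importance)
    )


if __name__ == "__main__":
    # Moving an unimportant weight costs little; an important one costs more.
    print(ewc_penalty([1.0, 2.0], [1.0, 0.0], [5.0, 0.5], lam=2.0))
```

The penalty is added to the loss of the new task, so weights with high importance are held close to their earlier values. The abstract's claim is that the FN-synapse achieves a comparable effect by storing the usage statistic on the device itself.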
