Stylianos Piperakis

Probabilistic Contact State Estimation for Legged Robots using Inertial Information

Mar 04, 2023

Abstract: Legged robot navigation in unstructured and slippery terrains depends heavily on the ability to accurately identify the quality of contact between the robot's feet and the ground. Contact state estimation is regarded as a challenging problem and is typically addressed by exploiting force measurements, joint encoders and/or robot kinematics and dynamics. In contrast to most state-of-the-art approaches, the current work introduces a novel probabilistic method for estimating the contact state based solely on proprioceptive sensing, which is readily available from Inertial Measurement Units (IMUs) mounted on the robot's end effectors. Capitalizing on the uncertainty of IMU measurements, our method estimates the probability of stable contact. This is accomplished by approximating the multimodal probability density function over a batch of data points for each axis of the IMU with Kernel Density Estimation. The proposed method has been extensively assessed in both real and simulated scenarios on bipedal and quadrupedal robotic platforms such as ATLAS, TALOS and Unitree's GO1.
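To make the per-axis density idea concrete, the following is a minimal sketch of Gaussian Kernel Density Estimation over a batch of IMU samples. It is not the authors' implementation: the abstract does not say how the stable-contact probability is derived from the estimated density, so the tolerance band around zero, the product over axes, the normal-reference bandwidth rule, and all function names here are assumptions made purely for illustration.

```python
import math
import statistics


def rule_of_thumb_bandwidth(samples):
    """Normal-reference bandwidth (1.06 * sigma * n^(-1/5)); an assumed choice."""
    n = len(samples)
    sigma = statistics.stdev(samples)
    # Guard against a zero bandwidth when all samples are identical.
    return max(1.06 * sigma * n ** (-1.0 / 5.0), 1e-6)


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def kde_mass_in_band(samples, lo, hi, bandwidth=None):
    """Probability mass that a Gaussian KDE of `samples` places on [lo, hi].

    For Gaussian kernels the integral is exact: it is the average, over the
    samples, of the mass each kernel contributes to the interval.
    """
    h = bandwidth if bandwidth is not None else rule_of_thumb_bandwidth(samples)
    total = sum(normal_cdf((hi - x) / h) - normal_cdf((lo - x) / h) for x in samples)
    return total / len(samples)


def stable_contact_probability(batch, tolerance):
    """Hypothetical combination rule: product of per-axis masses near zero.

    batch:     dict mapping an axis name to a list of IMU samples
    tolerance: dict mapping an axis name to the half-width of the band
               around zero that is treated as "foot not moving" (assumed)
    """
    probability = 1.0
    for axis, samples in batch.items():
        eps = tolerance[axis]
        probability *= kde_mass_in_band(samples, -eps, eps)
    return probability


# Illustrative, made-up batches of angular velocity samples (rad/s).
still_foot = {
    "x": [0.01, -0.02, 0.00, 0.015, -0.005],
    "y": [0.00, 0.01, -0.01, 0.005, -0.015],
}
slipping_foot = {
    "x": [0.8, 1.1, 0.9, 1.3, 1.0],
    "y": [0.00, 0.01, -0.01, 0.005, -0.015],
}
band = {"x": 0.2, "y": 0.2}

p_still = stable_contact_probability(still_foot, band)
p_slip = stable_contact_probability(slipping_foot, band)
```

With these made-up batches, `p_still` comes out close to 1 and `p_slip` close to 0, because the slipping batch puts almost no density near zero on the x axis. The band half-widths would need tuning per robot and sensor.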

Robust Contact State Estimation in Humanoid Walking Gaits

Jul 30, 2022

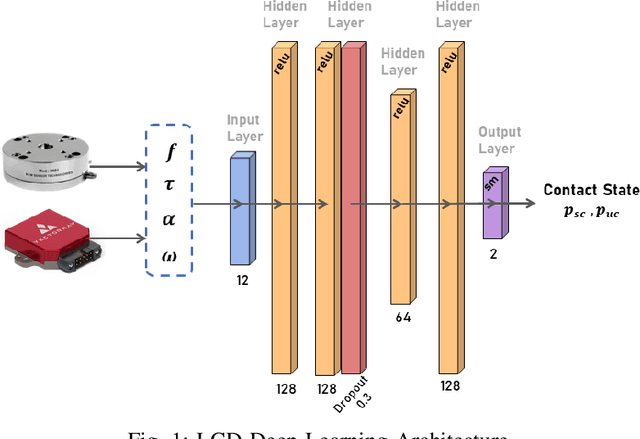

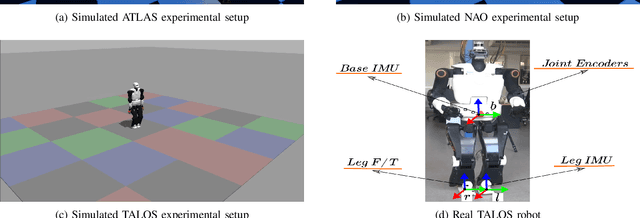

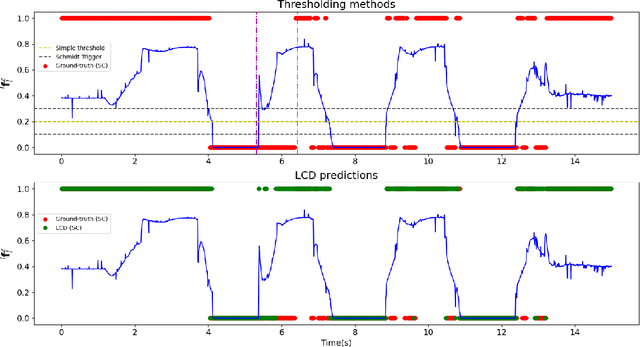

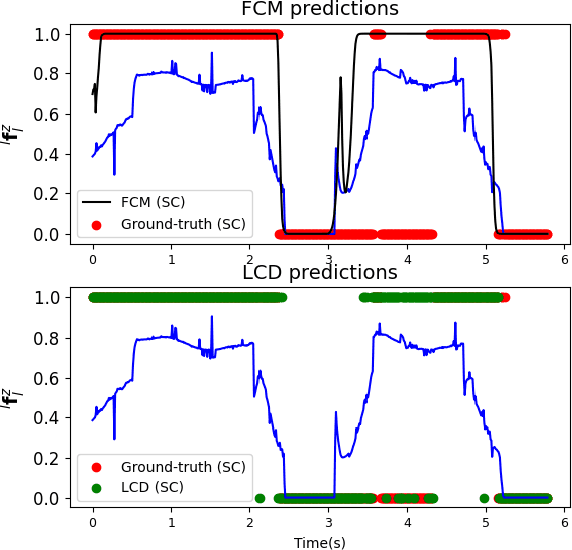

Abstract: In this article, we propose a deep learning framework that provides a unified approach to the problem of leg contact detection in humanoid robot walking gaits. Our formulation accurately and robustly estimates the contact state probability for each leg (i.e., stable or slip/no contact). The proposed framework employs solely proprioceptive sensing and, although it relies on simulated ground-truth contact data for the classification process, we demonstrate that it generalizes across varying friction surfaces and different legged robotic platforms and, at the same time, is readily transferred from simulation to practice. The framework is quantitatively and qualitatively assessed in simulation via the use of ground-truth contact data and is compared against state-of-the-art methods with an ATLAS, a NAO, and a TALOS humanoid robot. Furthermore, its efficacy is demonstrated in base estimation with a real TALOS humanoid. To reinforce further research endeavors, our implementation is offered as an open-source ROS/Python package, named Legged Contact Detection (LCD).
