Styliani Katsarou

Frequency Matters: When Time Series Foundation Models Fail Under Spectral Shift

Nov 06, 2025

Abstract:Time series foundation models (TSFMs) have shown strong results on public benchmarks, prompting comparisons to a "BERT moment" for time series. Their effectiveness in industrial settings, however, remains uncertain. We examine why TSFMs often struggle to generalize and highlight spectral shift (a mismatch between the dominant frequency components in downstream tasks and those represented during pretraining) as a key factor. We present evidence from an industrial-scale player engagement prediction task in mobile gaming, where TSFMs underperform domain-adapted baselines. To isolate the mechanism, we design controlled synthetic experiments contrasting signals with seen versus unseen frequency bands, observing systematic degradation under spectral mismatch. These findings position frequency awareness as critical for robust TSFM deployment and motivate new pretraining and evaluation protocols that explicitly account for spectral diversity.
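
To make the notion of spectral mismatch concrete, here is a minimal sketch, not the authors' experimental setup. It builds two synthetic sine signals and measures how much of each signal's spectral energy falls inside a frequency band that is assumed to be covered during pretraining. The band limits, sample rate, and function names are all hypothetical choices for illustration.

```python
import cmath
import math


def sine_wave(freq_hz, sample_rate_hz, n_samples):
    """Sample a unit-amplitude sine wave at the given frequency."""
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate_hz)
            for t in range(n_samples)]


def power_spectrum(signal, sample_rate_hz):
    """Naive O(n^2) DFT returning (frequency_hz, power) for non-negative bins."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        spectrum.append((k * sample_rate_hz / n, abs(coeff) ** 2))
    return spectrum


def band_energy_fraction(signal, sample_rate_hz, band_hz):
    """Fraction of spectral energy lying inside band_hz = (low, high)."""
    low, high = band_hz
    spectrum = power_spectrum(signal, sample_rate_hz)
    total = sum(power for _, power in spectrum)
    in_band = sum(power for freq, power in spectrum if low <= freq <= high)
    return in_band / total if total > 0 else 0.0


# Hypothetical settings: none of these values come from the paper.
SAMPLE_RATE_HZ = 64
N_SAMPLES = 128
PRETRAIN_BAND_HZ = (1.0, 8.0)  # assumed band represented during pretraining

seen = sine_wave(4.0, SAMPLE_RATE_HZ, N_SAMPLES)     # inside the assumed band
unseen = sine_wave(20.0, SAMPLE_RATE_HZ, N_SAMPLES)  # outside the assumed band

seen_fraction = band_energy_fraction(seen, SAMPLE_RATE_HZ, PRETRAIN_BAND_HZ)
unseen_fraction = band_energy_fraction(unseen, SAMPLE_RATE_HZ, PRETRAIN_BAND_HZ)
print(f"seen: {seen_fraction:.3f}, unseen: {unseen_fraction:.3f}")
```

A low in-band fraction for a downstream signal would be one simple indicator of the kind of mismatch the abstract describes; the paper itself may quantify spectral shift differently.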

Understanding Players as if They Are Talking to the Game in a Customized Language: A Pilot Study

Oct 24, 2024

Abstract:This pilot study explores the application of language models (LMs) to model game event sequences, treating them as a customized natural language. We investigate a popular mobile game, transforming raw event data into textual sequences and pretraining a Longformer model on this data. Our approach captures the rich and nuanced interactions within game sessions, effectively identifying meaningful player segments. The results demonstrate the potential of self-supervised LMs in enhancing game design and personalization without relying on ground-truth labels.

On a Scale-Invariant Approach to Bundle Recommendations in Candy Crush Saga

Aug 14, 2024

Abstract:A good understanding of player preferences is crucial for increasing content relevancy, especially in mobile games. This paper illustrates the use of attentive models for producing item recommendations in a mobile game scenario. The methodology comprises a combination of supervised and unsupervised approaches to create user-level recommendations while introducing a novel scale-invariant approach to the prediction. The methodology is subsequently applied to a bundle recommendation in Candy Crush Saga. The strategy of deployment, maintenance, and monitoring of ML models that are scaled up to serve millions of users is presented, along with the best practices and design patterns adopted to minimize technical debt typical of ML systems. The recommendation approach is evaluated both offline and online, with a focus on understanding the increase in engagement, click- and take rates, novelty effects, recommendation diversity, and the impact of degenerate feedback loops. We have demonstrated that the recommendation enhances user engagement by 30% concerning click rate and by more than 40% concerning take rate. In addition, we empirically quantify the diminishing effects of recommendation accuracy on user engagement.

player2vec: A Language Modeling Approach to Understand Player Behavior in Games

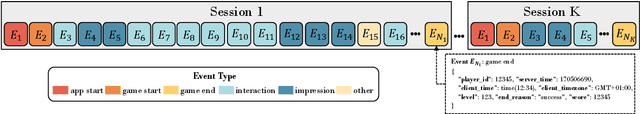

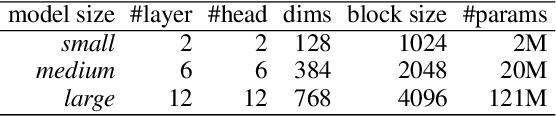

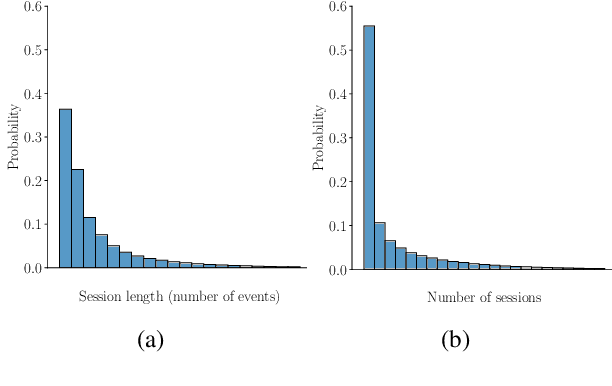

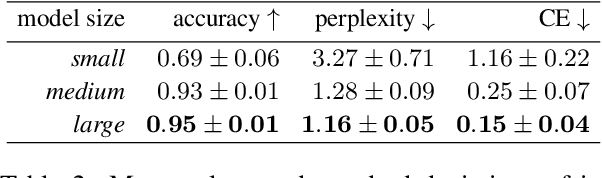

Apr 08, 2024

Abstract:Methods for learning latent user representations from historical behavior logs have gained traction for recommendation tasks in e-commerce, content streaming, and other settings. However, this area still remains relatively underexplored in video and mobile gaming contexts. In this work, we present a novel method for overcoming this limitation by extending a long-range Transformer model from the natural language processing domain to player behavior data. We discuss specifics of behavior tracking in games and propose preprocessing and tokenization approaches by viewing in-game events in an analogous way to words in sentences, thus enabling learning player representations in a self-supervised manner in the absence of ground-truth annotations. We experimentally demonstrate the efficacy of the proposed approach in fitting the distribution of behavior events by evaluating intrinsic language modeling metrics. Furthermore, we qualitatively analyze the emerging structure of the learned embedding space and show its value for generating insights into behavior patterns to inform downstream applications.
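
The idea of treating in-game events like words in a sentence can be sketched as follows. This is an illustrative toy tokenizer, not the paper's preprocessing pipeline: the event names, special tokens, and function names are hypothetical. Each session is treated as a "sentence" of events, and a player's history becomes one long sequence of token ids that a language model could consume.

```python
from collections import Counter

# Hypothetical special tokens; the paper's actual vocabulary is not given here.
SPECIAL_TOKENS = ["[PAD]", "[UNK]", "[SEP]"]


def build_vocab(sessions, min_count=1):
    """Map each event name seen at least min_count times to an integer id."""
    counts = Counter(event for session in sessions for event in session)
    vocab = {token: idx for idx, token in enumerate(SPECIAL_TOKENS)}
    for event, count in sorted(counts.items()):  # sorted for determinism
        if count >= min_count:
            vocab[event] = len(vocab)
    return vocab


def encode_player(sessions, vocab):
    """Flatten a player's sessions into token ids, ending each session with [SEP]."""
    ids = []
    for session in sessions:
        ids.extend(vocab.get(event, vocab["[UNK]"]) for event in session)
        ids.append(vocab["[SEP]"])
    return ids


# Made-up event logs, for illustration only.
training_sessions = [
    ["level_start", "move", "move", "level_win"],
    ["level_start", "move", "level_fail"],
]
vocab = build_vocab(training_sessions)

# "purchase" was never seen when building the vocabulary, so it maps to [UNK].
encoded = encode_player([["level_start", "move", "purchase"]], vocab)
print(encoded)  # [4, 6, 1, 2]
```

Sequences like `encoded` could then be used for self-supervised objectives such as masked-token prediction, which requires no ground-truth labels.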
