Stuart Ambler

Fairness-Aware Ranking in Search & Recommendation Systems with Application to LinkedIn Talent Search

May 21, 2019

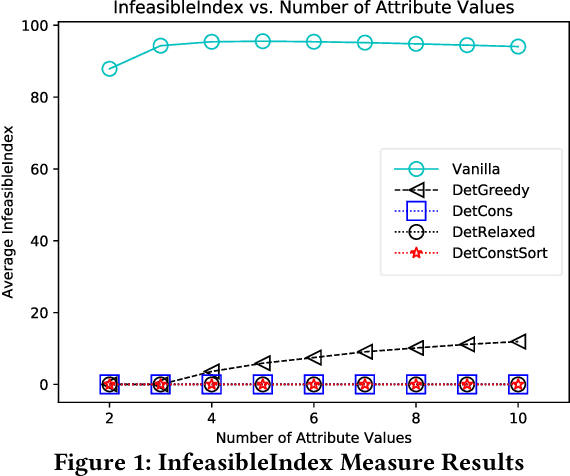

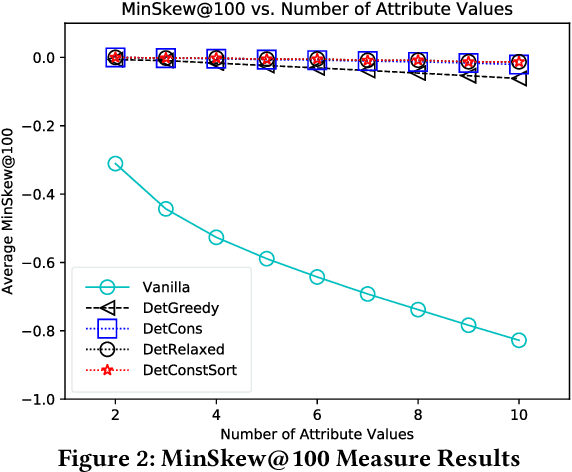

Abstract: We present a framework for quantifying and mitigating algorithmic bias in mechanisms designed for ranking individuals, typically used as part of web-scale search and recommendation systems. We first propose complementary measures to quantify bias with respect to protected attributes such as gender and age. We then present algorithms for computing fairness-aware re-ranking of results. For a given search or recommendation task, our algorithms seek to achieve a desired distribution of top-ranked results with respect to one or more protected attributes. We show that such a framework can be tailored to achieve fairness criteria such as equality of opportunity and demographic parity, depending on the choice of the desired distribution. We evaluate the proposed algorithms via extensive simulations over different parameter choices, and study the effect of fairness-aware ranking on both bias and utility measures. Finally, we present the online A/B testing results from applying our framework to representative ranking in LinkedIn Talent Search, and discuss the lessons learned in practice. Our approach resulted in a substantial improvement in the fairness metrics (a nearly threefold increase in the number of search queries with representative results) without affecting the business metrics, which paved the way for deployment to 100% of LinkedIn Recruiter users worldwide. Ours is the first large-scale deployed framework for ensuring fairness in the hiring domain, with potential positive impact for more than 630M LinkedIn members.
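To make the idea of re-ranking toward a desired distribution concrete, here is a minimal sketch. It is not the paper's algorithm: the function name `rerank`, the `(item_id, score, group)` tuple layout, and the rule "each group should hold at least floor(p * position) of the slots filled so far" are illustrative assumptions chosen for brevity.

```python
from collections import defaultdict, deque
from math import floor


def rerank(candidates, desired, k):
    """Greedy re-ranking sketch (hypothetical helper, not the paper's method).

    candidates: list of (item_id, score, group) tuples.
    desired:    dict mapping group -> target proportion in the top results.
    k:          number of results to return.
    """
    # One queue per group, each ordered by descending score.
    queues = defaultdict(deque)
    for item in sorted(candidates, key=lambda c: c[1], reverse=True):
        queues[item[2]].append(item)

    counts = defaultdict(int)
    ranked = []
    for position in range(1, k + 1):
        available = [g for g in queues if queues[g]]
        if not available:
            break  # fewer than k candidates in total
        # Groups that are below their assumed minimum count at this position.
        below = [
            g for g in available
            if counts[g] < floor(desired.get(g, 0.0) * position)
        ]
        # Prefer under-represented groups; otherwise pick purely by score.
        pool = below or available
        best = max(pool, key=lambda g: queues[g][0][1])
        ranked.append(queues[best].popleft())
        counts[best] += 1
    return ranked


if __name__ == "__main__":
    demo = [
        ("a1", 0.9, "A"), ("a2", 0.8, "A"), ("a3", 0.7, "A"),
        ("b1", 0.6, "B"), ("b2", 0.5, "B"),
    ]
    for item in rerank(demo, {"A": 0.5, "B": 0.5}, k=4):
        print(item)
```

The choice of `desired` is what ties the sketch to a fairness criterion: setting it to the distribution of qualified candidates for a query, for example, aims at representative results, while other choices correspond to other criteria.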

Bringing Salary Transparency to the World: Computing Robust Compensation Insights via LinkedIn Salary

Sep 01, 2017

Abstract: The recently launched LinkedIn Salary product has been designed with the goal of providing compensation insights to the world's professionals and thereby helping them optimize their earning potential. We describe the overall design and architecture of the statistical modeling system underlying this product. We focus on the unique data mining challenges encountered while designing and implementing the system, and describe the modeling components, such as Bayesian hierarchical smoothing, that help to compute and present robust compensation insights to users. We report on extensive evaluation with nearly one year of de-identified compensation data collected from over one million LinkedIn users, thereby demonstrating the efficacy of the statistical models. We also highlight the lessons learned through the deployment of our system at LinkedIn.
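The abstract does not spell out the smoothing model, so the following is only a textbook illustration of the general idea behind hierarchical smoothing: an estimate for a small cohort is pulled toward the estimate of a broader parent cohort, and the pull weakens as more data arrives. It assumes a normal-normal conjugate model with known variances; the function name `smoothed_mean` and all parameter names are hypothetical.

```python
def smoothed_mean(samples, prior_mean, prior_var, obs_var):
    """Posterior mean under a normal-normal model (illustrative assumption).

    samples:    observations for the small cohort (e.g. log-salaries).
    prior_mean: mean taken from a broader parent cohort.
    prior_var:  variance of the prior around prior_mean.
    obs_var:    assumed known variance of a single observation.
    """
    n = len(samples)
    if n == 0:
        # With no data, the estimate falls back to the parent cohort.
        return prior_mean
    sample_mean = sum(samples) / n
    prior_precision = 1.0 / prior_var
    data_precision = n / obs_var
    # Precision-weighted average of the prior mean and the sample mean.
    return (
        prior_precision * prior_mean + data_precision * sample_mean
    ) / (prior_precision + data_precision)


if __name__ == "__main__":
    print(smoothed_mean([10.0, 12.0], prior_mean=8.0, prior_var=1.0, obs_var=2.0))
```

With few samples the result stays close to `prior_mean`; as the sample count grows, the data precision dominates and the result approaches the cohort's own sample mean.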
