Steven Schreck

Height Change Feature Based Free Space Detection

Aug 02, 2023

Abstract: In the context of autonomous forklifts, avoiding collisions during travel, pick, and place operations is crucial. To accomplish this, the forklift must be able to detect and locate areas of free space and potential obstacles in its environment. However, this is particularly challenging in highly dynamic environments, such as factory sites and production halls, due to numerous industrial trucks and workers moving throughout the area. In this paper, we present a novel method for free space detection, which consists of the following steps. We introduce a novel technique for surface normal estimation relying on spherical projected LiDAR data. Subsequently, we employ the estimated surface normals to detect free space. The presented method is a heuristic approach that does not require labeling and can ensure real-time application due to high processing speed. The effectiveness of the proposed method is demonstrated through its application to a real-world dataset obtained on a factory site both indoors and outdoors, and its evaluation on the SemanticKITTI dataset [2]. We achieved a mean Intersection over Union (mIoU) score of 50.90 % on the benchmark dataset, with a processing speed of 105 Hz. In addition, we evaluated our approach on our factory site dataset, where our method achieved an mIoU score of 63.30 % at 54 Hz.
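The abstract relies on spherically projected LiDAR data as the input to normal estimation. As a rough illustration of that preprocessing step only, the sketch below maps an unordered point cloud to a range image. It is not the paper's implementation: the function name `spherical_projection`, the image size (64 x 1024), and the vertical field of view (+3° to -25°) are assumptions chosen for illustration.

```python
import numpy as np


def spherical_projection(points, height=64, width=1024,
                         fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project an (N, 3) point cloud into a (height, width) range image.

    All default parameters are illustrative assumptions, not values
    taken from the paper. Empty pixels are marked with -1.
    """
    r = np.linalg.norm(points, axis=1)
    valid = r > 0                      # drop points at the sensor origin
    x, y, z, r = points[valid, 0], points[valid, 1], points[valid, 2], r[valid]

    yaw = np.arctan2(y, x)             # horizontal angle in [-pi, pi]
    pitch = np.arcsin(z / r)           # vertical angle

    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)
    fov = fov_up - fov_down

    # Map angles to continuous pixel coordinates, then to integer indices.
    u = 0.5 * (1.0 - yaw / np.pi) * width
    v = (fov_up - pitch) / fov * height
    u = np.clip(np.floor(u), 0, width - 1).astype(np.int64)
    v = np.clip(np.floor(v), 0, height - 1).astype(np.int64)

    range_image = np.full((height, width), -1.0, dtype=np.float32)
    # Write far points first so nearer points are assigned later.
    order = np.argsort(r)[::-1]
    range_image[v[order], u[order]] = r[order]
    return range_image
```

Because neighbouring pixels in such an image correspond to neighbouring beams, local geometric features such as surface normals can be computed with image-style operations rather than nearest-neighbour searches in 3D, which is one plausible reason for the high processing rates reported.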

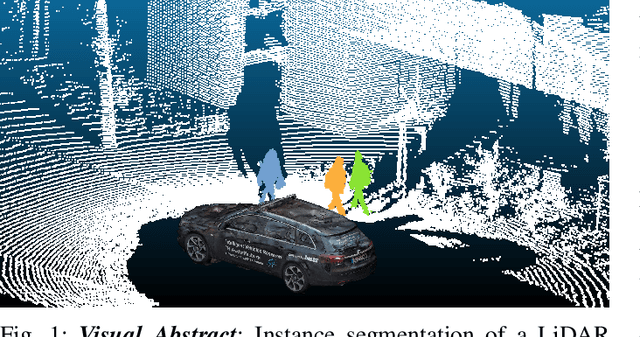

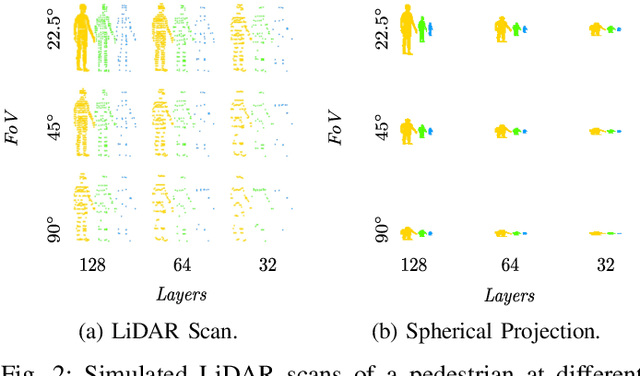

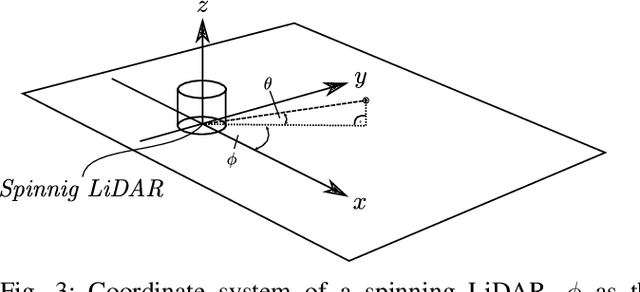

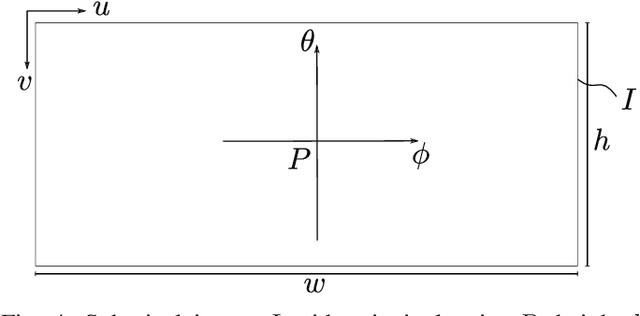

Sensor Equivariance by LiDAR Projection Images

Apr 29, 2023

Abstract: In this work, we propose an extension of conventional image data by an additional channel in which the associated projection properties are encoded. This addresses the issue of sensor-dependent object representation in projection-based sensors, such as LiDAR, which can lead to distorted physical and geometric properties due to variations in sensor resolution and field of view. To that end, we propose an architecture for processing this data in an instance segmentation framework. We focus specifically on LiDAR as a key sensor modality for machine vision tasks and highly automated driving (HAD). Through an experimental setup in a controlled synthetic environment, we identify a bias with respect to sensor resolution and field of view and demonstrate that our proposed method can reduce this bias for the task of LiDAR instance segmentation. Furthermore, we define our method such that it can be applied to other projection-based sensors, such as cameras. To promote transparency, we make our code and dataset publicly available. This method shows the potential to improve performance and robustness in various machine vision tasks that utilize projection-based sensors.
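To make the idea of encoding projection properties alongside the image more concrete, the sketch below appends per-pixel viewing angles to a LiDAR range image. The abstract does not specify the actual encoding, so this is only one possible reading: the function name `add_projection_channels`, the use of two angle channels (elevation and azimuth), and the assumption of evenly spaced beams are all hypothetical.

```python
import numpy as np


def add_projection_channels(range_image, fov_up_deg, fov_down_deg):
    """Stack per-pixel elevation and azimuth angles onto a range image.

    Hypothetical encoding for illustration: rows are assumed to be evenly
    spaced between fov_up_deg and fov_down_deg, columns evenly spaced
    over a full 360 degree sweep. Returns an array of shape (3, H, W).
    """
    height, width = range_image.shape

    # One elevation angle per row, one azimuth angle per column (radians).
    elevation = np.radians(np.linspace(fov_up_deg, fov_down_deg, height))
    azimuth = np.linspace(np.pi, -np.pi, width, endpoint=False)

    elev_map = np.repeat(elevation[:, None], width, axis=1)
    azim_map = np.repeat(azimuth[None, :], height, axis=0)

    return np.stack([range_image, elev_map, azim_map], axis=0).astype(np.float32)
```

With such channels, two sensors with different resolutions or fields of view produce images whose pixels carry their own angular coordinates, so a network is not forced to infer the sensor geometry from pixel positions alone.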
