Steven Sandoval

Unifying Common Signal Analyses with Instantaneous Time-Frequency Atoms

Aug 07, 2025

Abstract: In previous work, we presented a general framework for instantaneous time-frequency analysis but did not provide any specific details of how to compute a particular instantaneous spectrum (IS). In this work, we use instantaneous time-frequency atoms to obtain an IS associated with each of several common signal analyses: time domain analysis, frequency domain analysis, the fractional Fourier transform, the synchrosqueezed short-time Fourier transform, and the synchrosqueezed short-time fractional Fourier transform. By doing so, we demonstrate how the general framework can be used to unify these analyses, and we develop closed-form expressions for the corresponding ISs. This is accomplished by viewing these analyses as decompositions into AM–FM components and recognizing that each uses a specialized (or limiting) form of a quadratic chirplet as a template during analysis. With a two-parameter quadratic chirplet, we can organize these ISs into a 2D continuum, with points in the plane corresponding to a decomposition related to one of the signal analyses. Finally, using several example signals, we compute the ISs for the various analyses in closed form.
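The abstract does not state the exact parameterization of the two-parameter quadratic chirplet. As a minimal sketch, assume a Gaussian-windowed linear-FM atom whose two parameters are an envelope width `sigma` and a chirp rate `chirp_rate` (both names are hypothetical). Its instantaneous frequency is `f0 + chirp_rate * t`. With `chirp_rate = 0` and a very wide envelope the atom approaches a complex sinusoid (the Fourier template); with a nonzero chirp rate and a wide envelope it approaches a linear chirp (the fractional Fourier template); as `sigma` shrinks it approaches an impulse (the time-domain template). The code checks the instantaneous frequency numerically from the unwrapped phase.

```python
import numpy as np

def quadratic_chirplet(t, sigma, chirp_rate, f0=0.0):
    """Gaussian-windowed linear-FM atom (hypothetical two-parameter form).

    sigma: Gaussian envelope width in seconds
    chirp_rate: linear frequency sweep in Hz/s
    f0: centre frequency in Hz
    """
    envelope = np.exp(-0.5 * (t / sigma) ** 2)
    # Quadratic phase: its derivative / (2*pi) is f0 + chirp_rate * t.
    phase = 2 * np.pi * (f0 * t + 0.5 * chirp_rate * t ** 2)
    return envelope * np.exp(1j * phase)

def instantaneous_frequency(x, fs):
    """Instantaneous frequency in Hz from the derivative of the unwrapped phase."""
    phase = np.unwrap(np.angle(x))
    return np.gradient(phase) * fs / (2 * np.pi)

fs = 1000.0                              # sampling rate in Hz
t = (np.arange(1000) - 500) / fs         # 1 s of samples centred on t = 0
atom = quadratic_chirplet(t, sigma=0.2, chirp_rate=50.0, f0=100.0)
f_inst = instantaneous_frequency(atom, fs)
# In the interior, f_inst matches 100 + 50 * t (central differences are exact for a quadratic phase).
```

The frequency sweep (75 Hz to 125 Hz) stays well below the 500 Hz Nyquist limit, so phase unwrapping is unambiguous.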

Estimation of non-uniform blur using a patch-based regression convolutional neural network

Feb 12, 2024

Abstract: The non-uniform blur of atmospheric turbulence can be modeled as a superposition of linear motion blur kernels at a patch level. We propose a regression convolutional neural network (CNN) to predict the angle and length of a linear motion blur kernel for patches of varying size. We analyze the robustness of the network for different patch sizes and its performance in regions where the characteristics of the blur are transitioning. By alternating patch sizes per epoch during training, we obtain coefficient of determination scores of $R^2>0.78$ for length and $R^2>0.94$ for angle prediction across a range of patch sizes. We find that blur predictions in regions overlapping two blur characteristics transition between the two characteristics as the overlap changes. These results validate the use of such a network for predicting non-uniform blur characteristics at a patch level.
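The scores above are coefficients of determination, $R^2 = 1 - SS_{res}/SS_{tot}$, where $SS_{res}$ is the residual sum of squares of the predictions and $SS_{tot}$ is the total sum of squares about the mean of the true values. A minimal plain-Python sketch follows; the blur lengths below are made-up numbers for illustration, not data from the paper.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination R^2 = 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mean = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((y - mean) ** 2 for y in y_true)               # total sum of squares
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all true values are equal")
    return 1.0 - ss_res / ss_tot

# Illustrative only: true vs. predicted blur lengths (pixels) for four patches.
true_len = [5, 10, 15, 20]
pred_len = [6, 9, 15, 21]
score = r_squared(true_len, pred_len)  # 1 - 3/125 = 0.976, up to floating-point rounding
```

A score of 1 means perfect prediction, and a score of 0 means the predictions do no better than always predicting the mean.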

Estimation of motion blur kernel parameters using regression convolutional neural networks

Aug 02, 2023

Abstract: Many deblurring and blur kernel estimation methods use maximum a posteriori (MAP) or classification deep learning techniques to sharpen an image and predict the blur kernel. We propose a regression approach using neural networks to predict the parameters of linear motion blur kernels. These kernels can be parameterized by their blur length and blur orientation. This paper analyzes the relationship between the length and angle of linear motion blur. This analysis helps establish a foundation for using regression prediction on uniformly motion-blurred images.
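To make the two-parameter description concrete, here is a minimal sketch that rasterizes a linear motion blur kernel from a length (pixels) and an angle (degrees). The construction used by the authors is not given in the abstract; this version assumes a centred line segment, an angle measured counter-clockwise from the +x axis, no anti-aliasing, and normalization to unit sum. The function name `linear_motion_kernel` is hypothetical.

```python
import numpy as np

def linear_motion_kernel(length, angle_deg):
    """Rasterize a centred line segment into a normalised motion blur kernel.

    length: blur length in pixels (>= 1)
    angle_deg: orientation in degrees, counter-clockwise from the +x axis
    """
    if length < 1:
        raise ValueError("length must be at least 1 pixel")
    size = int(np.ceil(length))
    if size % 2 == 0:
        size += 1                      # odd size so the kernel has a centre pixel
    kernel = np.zeros((size, size))
    c = size // 2
    theta = np.deg2rad(angle_deg)
    half = (length - 1) / 2            # a horizontal segment then covers `length` pixels
    # Sample the segment densely and mark every pixel it passes through (no anti-aliasing).
    for s in np.linspace(-half, half, num=4 * size):
        col = int(round(c + s * np.cos(theta)))
        row = int(round(c - s * np.sin(theta)))  # image rows increase downward
        kernel[row, col] = 1.0
    return kernel / kernel.sum()       # unit sum preserves mean image brightness

k = linear_motion_kernel(5, 0)         # horizontal blur: every entry of the centre row is 0.2
```

Because the segment is centred, angles θ and θ + 180° describe the same kernel, so the orientation of such a kernel is only defined modulo 180°.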

Deep learning estimation of modified Zernike coefficients for image point spread functions

Apr 05, 2023

Abstract: Recovering the turbulence-degraded point spread function from a single intensity image is important for a variety of imaging applications. Here, a deep learning model based on a convolutional neural network is applied to intensity images to predict a modified set of Zernike polynomial coefficients corresponding to wavefront aberrations in the pupil due to turbulence. The modified set assigns an absolute value to coefficients of even radial orders because of a sign ambiguity associated with this problem, and it is shown to be sufficient for specifying the intensity point spread function. Simulated image data of a point object and simple extended objects over a range of turbulence and detection noise levels are created for the learning model. The mean squared error (MSE) results for the learning model show that the best prediction is obtained when observing a point object, but that it is possible to recover a useful set of modified Zernike coefficients from an extended object image subject to detection noise and turbulence.
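The modification rule stated above (absolute value for even radial orders, sign kept for odd radial orders) can be sketched in a few lines. The abstract does not say which single-index convention the paper uses; this sketch assumes OSA/ANSI ordering starting at j = 0, so a Noll-indexed coefficient vector would need a different index-to-(n, m) mapping. The function names are hypothetical.

```python
def osa_to_nm(j):
    """Map an OSA/ANSI single index j (starting at 0) to (radial order n, azimuthal frequency m)."""
    n = 0
    # Radial order n holds indices n(n+1)/2 .. (n+1)(n+2)/2 - 1.
    while (n + 1) * (n + 2) // 2 <= j:
        n += 1
    m = 2 * j - n * (n + 2)
    return n, m

def modified_coefficients(coeffs):
    """Take |c| for even radial orders; keep odd-order coefficients with their sign."""
    result = []
    for j, c in enumerate(coeffs):
        n, _ = osa_to_nm(j)
        result.append(abs(c) if n % 2 == 0 else c)
    return result

# Illustrative coefficients (arbitrary units), OSA order j = 0..6:
# piston (n=0), two tilts (n=1), three second-order terms (n=2), one trefoil (n=3).
coeffs = [0.0, 0.3, -0.2, -0.5, -0.1, 0.4, -0.7]
print(modified_coefficients(coeffs))  # [0.0, 0.3, -0.2, 0.5, 0.1, 0.4, -0.7]
```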

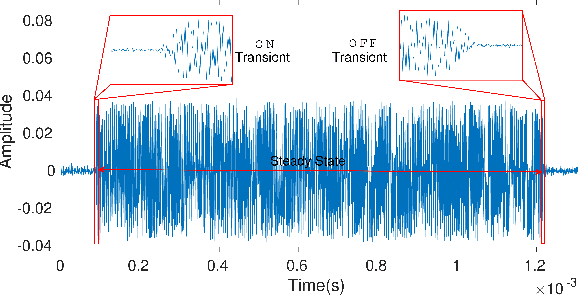

WIDEFT: A Corpus of Radio Frequency Signals for Wireless Device Fingerprint Research

Aug 10, 2021

Abstract: Wireless network security may be improved by identifying networked devices via traits that are tied to hardware differences, typically related to unique variations introduced in the manufacturing process. One way these variations manifest is through unique transient events when a radio transmitter is activated or deactivated. Features extracted from these signal bursts have, in some cases, been shown to provide a unique "fingerprint" for a wireless device. However, only recently have researchers made such data available for research and comparison. Herein, we describe a publicly available corpus of radio frequency signals that can be used for wireless device fingerprint research. The WIDEFT corpus contains signal bursts from 138 unique devices (100 bursts per device), including Bluetooth- and WiFi-enabled devices, from 79 unique models. Additionally, to demonstrate the utility of the WIDEFT corpus, we provide four baseline evaluations using a minimal subset of previously proposed features and a simple ensemble classifier.
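The abstract does not list which transient features were used. Purely as an illustrative assumption, the sketch below computes one simple kind of burst feature: the variance, skewness, and kurtosis of the normalized instantaneous amplitude of a complex baseband burst. The function name and the synthetic ramp-up burst are hypothetical and not taken from the corpus.

```python
import numpy as np

def amplitude_statistics(burst):
    """Variance, skewness and kurtosis of the normalised instantaneous amplitude of a complex burst."""
    a = np.abs(np.asarray(burst))
    a = a / a.max()                    # remove dependence on received power
    mu, sd = a.mean(), a.std()
    if sd == 0:
        raise ValueError("constant-amplitude burst: skewness/kurtosis undefined")
    skew = np.mean((a - mu) ** 3) / sd ** 3
    kurt = np.mean((a - mu) ** 4) / sd ** 4   # non-excess kurtosis (Gaussian = 3)
    return sd ** 2, skew, kurt

# Synthetic turn-on transient (illustrative only): a linear amplitude ramp on a complex tone.
n = np.arange(1001)
burst = np.linspace(0.0, 1.0, n.size) * np.exp(2j * np.pi * 0.05 * n)
var, skew, kurt = amplitude_statistics(burst)
```

A linear ramp has a uniformly distributed amplitude, so the result is close to variance 1/12, skewness 0, and kurtosis 1.8. In a fingerprinting pipeline, vectors like this one, computed per burst, would be the input to a classifier.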
