Stephan D. Ewert

Combined assessment of auditory distance perception and externalization

Aug 26, 2024

Abstract: This study investigates frontal auditory distance perception (ADP) and externalization in virtual audio-visual environments, considering effects of headphone rendering method, room size, reverberation, and visual representation of the room. Either head-related impulse responses from an artificial head or a spherical head model were used for diotic (monophonic) and binaural auralizations with and without real-time head tracking. The visuals were presented through a head-mounted display. Two differently sized rooms as well as an infinitely extending space (echoic and anechoic) were used in which an invisible frontal virtual sound source was located. Additionally, the effect of a freely movable loudspeaker for visually indicating perceived distances was investigated. Both ADP and externalization were significantly affected by room size, but otherwise the two perceptual quantities differed in their outcomes. Room visibility significantly affected ADP, leading to considerable overestimations and more variability in the absence of a visual environment, although externalization was not affected. The movable loudspeaker significantly improved distance estimation but did not affect externalization. For reverberation, a (non-significant) trend of improved ADP was observed, whereas externalization was significantly improved. Different headphone renderings did not significantly affect ADP or externalization, although a clear trend was observed for externalization.

The effect of self-motion and room familiarity on sound source localization in virtual environments

Aug 25, 2024

Abstract: This paper investigates the influence of lateral horizontal self-motion of participants during signal presentation on distance and azimuth perception for frontal sound sources in a rectangular room. Additionally, the effect of deviating room acoustics for a single sound presentation embedded in a sequence of presentations using a baseline room acoustics for familiarization is analyzed. For this purpose, two experiments were conducted using audiovisual virtual reality technology with dynamic head-tracking and real-time auralization over headphones combined with visual rendering of the room using a head-mounted display. Results show an improved distance perception accuracy when participants moved laterally during signal presentation instead of staying at a fixed position, with only head movements allowed. Adaptation to the room acoustics also improves distance perception accuracy. Azimuth perception seems to be independent of lateral movements during signal presentation and could even be negatively influenced by the familiarity of the used room acoustics.

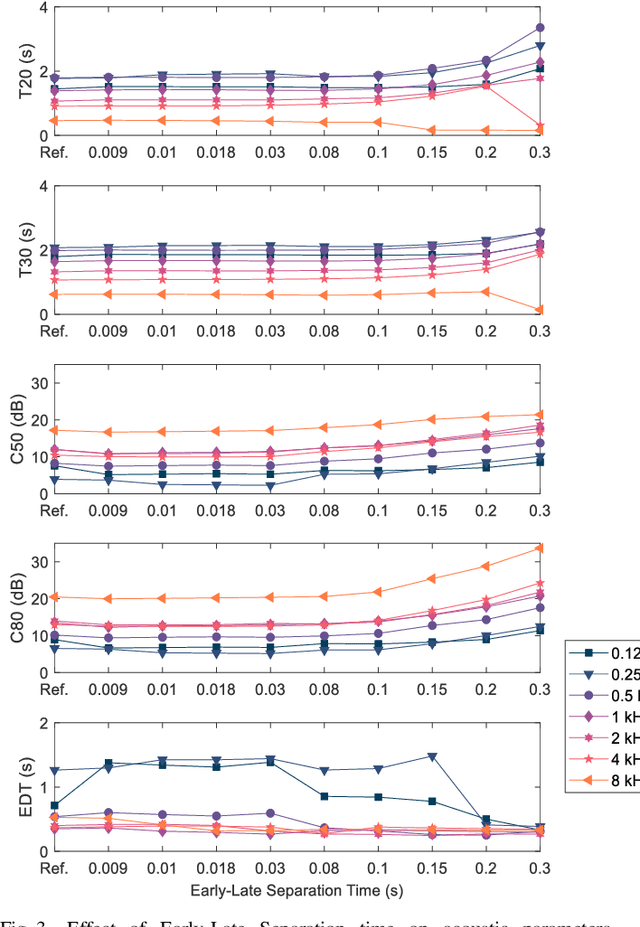

Computationally-efficient and perceptually-motivated rendering of diffuse reflections in room acoustics simulation

Jun 29, 2023

Abstract: Geometrical acoustics is well suited for simulating room reverberation in interactive real-time applications. While the image source model (ISM) is exceptionally fast, the restriction to specular reflections impacts its perceptual plausibility. To account for diffuse late reverberation, hybrid approaches have been proposed, e.g., using a feedback delay network (FDN) in combination with the ISM. Here, a computationally-efficient, digital-filter approach is suggested to account for effects of non-specular reflections in the ISM and to couple scattered sound into a diffuse reverberation model using a spatially rendered FDN. Depending on the scattering coefficient of a room boundary, energy of each image source is split into a specular and a scattered part which is added to the diffuse sound field. Temporal effects as observed for an infinite ideal diffuse (Lambertian) reflector are simulated using cascaded all-pass filters. Effects of scattering and multiple (inter-) reflections caused by larger geometric disturbances at walls and by objects in the room are accounted for in a highly simplified manner. Using a single parameter to quantify deviations from an empty shoebox room, each reflection is temporally smeared using cascaded all-pass filters. The proposed method was perceptually evaluated against dummy head recordings of real rooms.
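The energy split and the temporal smearing described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the Schroeder all-pass structure, and the delay and gain values are assumptions chosen only for the example.

```python
def split_energy(energy, scattering):
    """Split image-source energy into (specular, scattered) parts.

    Assumption: a linear split by the scattering coefficient in [0, 1].
    """
    if not 0.0 <= scattering <= 1.0:
        raise ValueError("scattering coefficient must lie in [0, 1]")
    return (1.0 - scattering) * energy, scattering * energy


def allpass(signal, delay, gain):
    """Schroeder all-pass: y[n] = -g*x[n] + x[n-D] + g*y[n-D]."""
    out = []
    for n, x in enumerate(signal):
        x_delayed = signal[n - delay] if n >= delay else 0.0
        y_delayed = out[n - delay] if n >= delay else 0.0
        out.append(-gain * x + x_delayed + gain * y_delayed)
    return out


def smear(signal, stages):
    """Pass the signal through a cascade of (delay, gain) all-pass stages."""
    for delay, gain in stages:
        signal = allpass(signal, delay, gain)
    return signal


# Hypothetical values: scattering coefficient 0.3, three all-pass stages.
specular, scattered = split_energy(1.0, 0.3)
impulse = [1.0] + [0.0] * 511
smeared = smear(impulse, [(7, 0.5), (11, 0.5), (17, 0.5)])
smeared_energy = sum(v * v for v in smeared)
```

Because every stage is all-pass, the cascade spreads a reflection over time without changing its energy or magnitude spectrum, which is why such filters are a natural fit for temporal smearing.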

Evaluation of Virtual Acoustic Environments with Different Acoustic Level of Detail

Jun 29, 2023

Abstract: Virtual acoustic environments enable the creation and simulation of realistic and ecologically valid daily-life situations with applications in hearing research and audiology. Reverberant indoor environments play an important role here. For real-time applications, simplifications in the room acoustics simulation are required; however, it remains unclear what acoustic level of detail (ALOD) is necessary to capture all perceptually relevant effects. This study investigates the effect of varying ALOD in the simulation of three different real environments: a living room with a coupled kitchen, a pub, and an underground station. ALOD was varied by generating different numbers of image sources for early reflections, or by excluding geometrical room details specific for each environment. The simulations were perceptually evaluated using headphones in comparison to binaural room impulse responses measured with a dummy head in the corresponding real environments. The study assessed the perceived overall difference for a pink pulse and a speech token. Furthermore, plausibility and externalization were evaluated. The results show that a strong reduction in ALOD is possible while obtaining similar plausibility and externalization as with dummy head recordings. The number and accuracy of early reflections appear less relevant, provided diffuse late reverberation is appropriately accounted for.

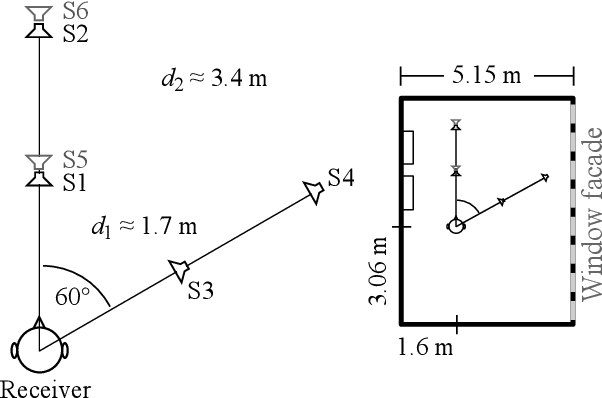

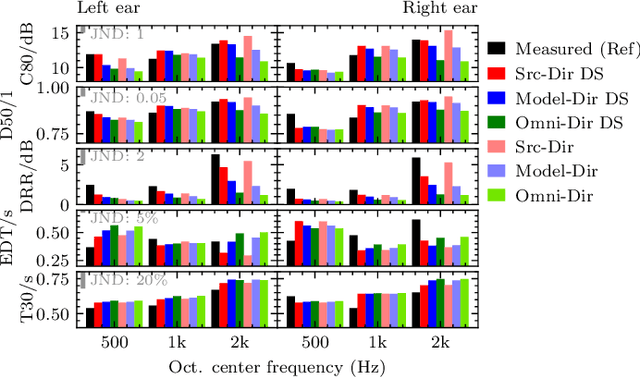

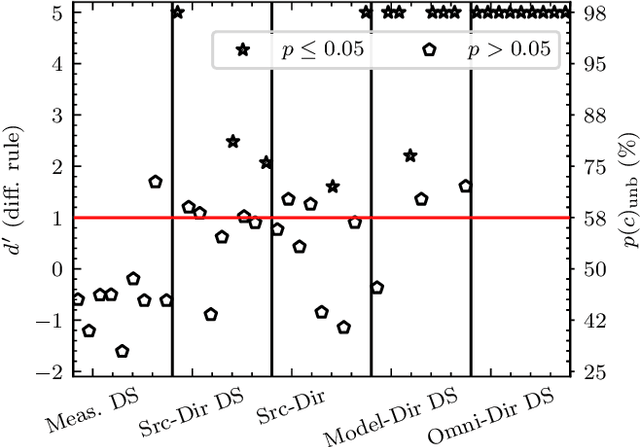

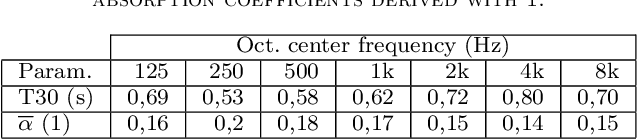

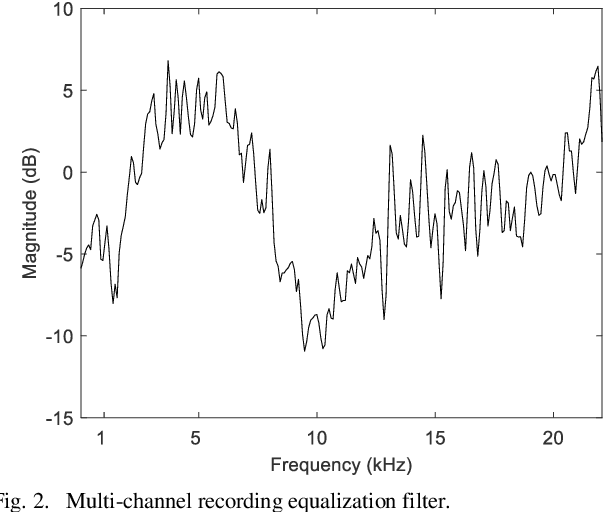

On the relevance of acoustic measurements for creating realistic virtual acoustic environments

Jun 29, 2023

Abstract: Geometrical approaches for room acoustics simulation have the advantage of requiring limited computational resources while still achieving a high perceptual plausibility. A common approach is using the image source model for direct and early reflections in connection with further simplified models such as a feedback delay network for the diffuse reverberant tail. When recreating real spaces as virtual acoustic environments using room acoustics simulation, the perceptual relevance of individual parameters in the simulation is unclear. Here we investigate the importance of underlying acoustical measurements and technical evaluation methods to obtain high-quality room acoustics simulations in agreement with dummy-head recordings of a real space. We focus on the role of source directivity. The effect of including measured, modelled, and omnidirectional source directivity in room acoustics simulations was assessed in comparison to the measured reference. Technical evaluation strategies to verify and improve the accuracy of various elements in the simulation processing chain from source, the room properties, to the receiver are presented. Perceptual results from an ABX listening experiment with random speech tokens are shown and compared with technical measures for a ranking of simulation approaches.

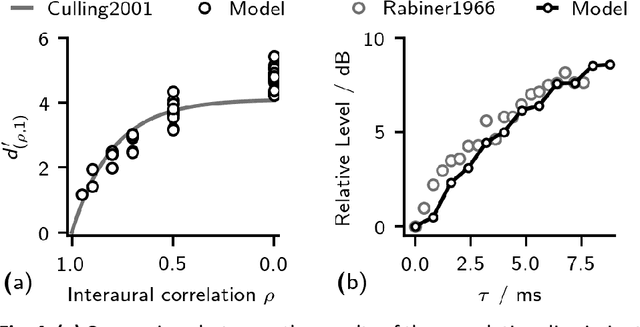

Interaural Coherence Across Frequency Channels Accounts for Binaural Detection in Complex Maskers

Oct 06, 2021

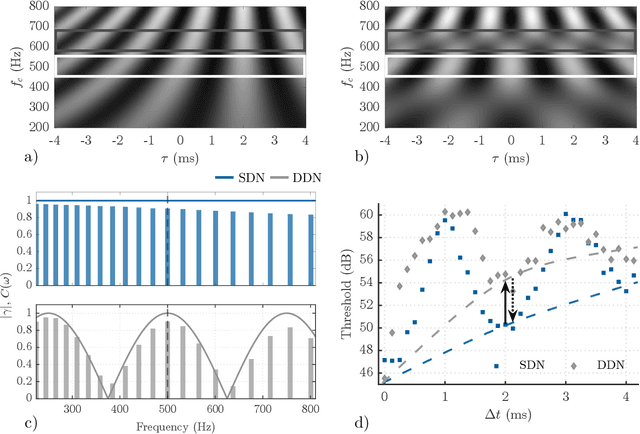

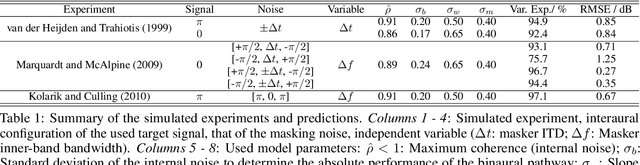

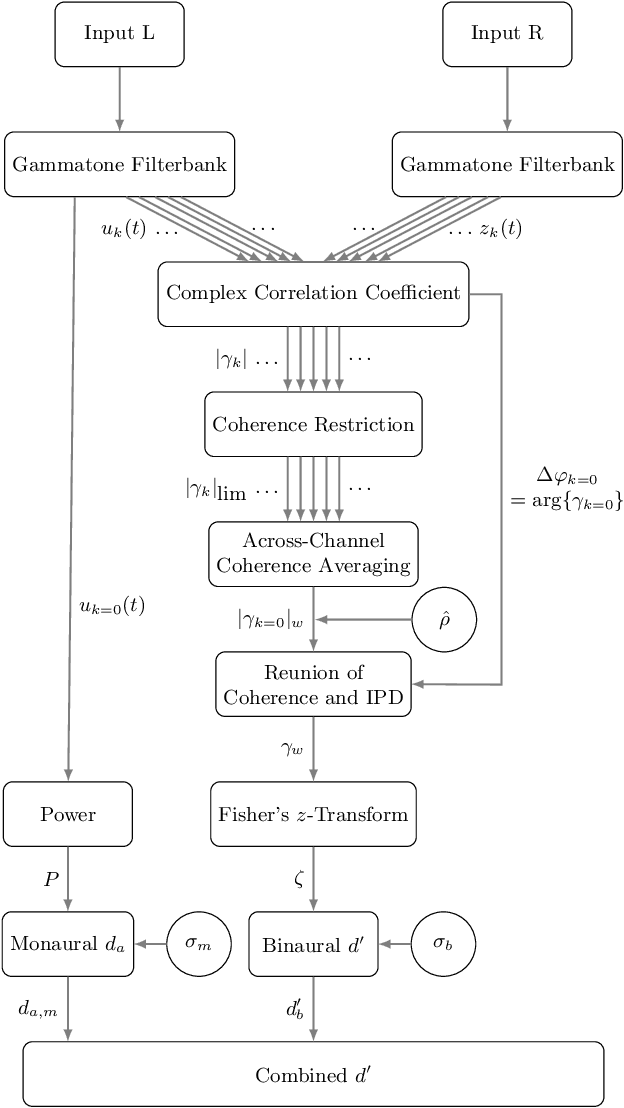

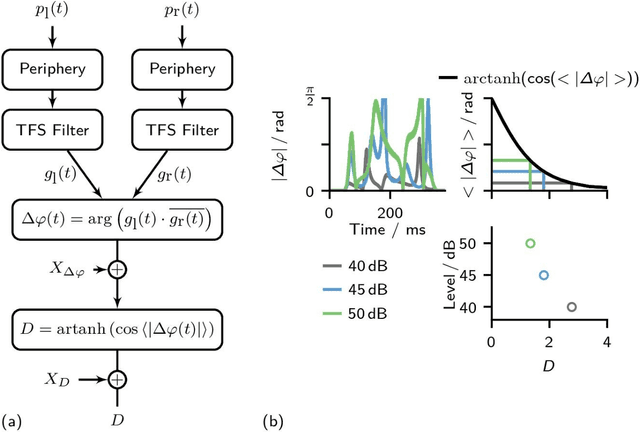

Abstract: Differences in interaural phase configuration between a target and a masker can lead to substantial binaural unmasking. This effect is decreased for masking noise having an interaural time difference (ITD). Adding a second noise with the opposite ITD further reduces binaural unmasking. Thus far, simulation of the detection threshold required both a mechanism for internal ITD compensation and an increased binaural processing bandwidth. An alternative explanation for the reduction is that unmasking is impaired by the lower interaural coherence in off-frequency regions caused by the second masker (Marquardt and McAlpine 2009, JASA pp. EL177 - EL182). Based on this hypothesis, the current work proposes a quantitative multi-channel model using monaurally derived peripheral filter bandwidths and an across-channel incoherence interference mechanism. This mechanism differs from wider filters since it is moot when the masker coherence is constant across frequency bands. Combined with a monaural energy discrimination pathway, the model predicts the differences between single- and double-delayed noise, as well as four other data sets. It can help resolve the inconsistency that simulation of some data sets requires wide filters while others require narrow filters.

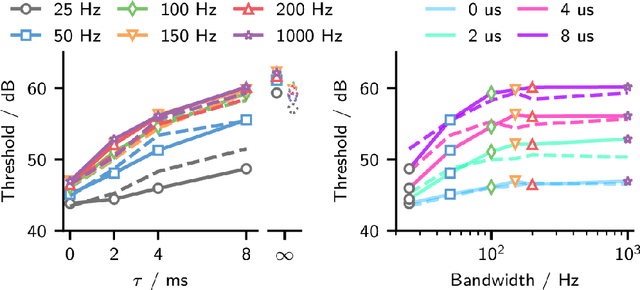

Prediction of tone detection thresholds in interaurally delayed noise based on interaural phase difference fluctuations

Jul 01, 2021

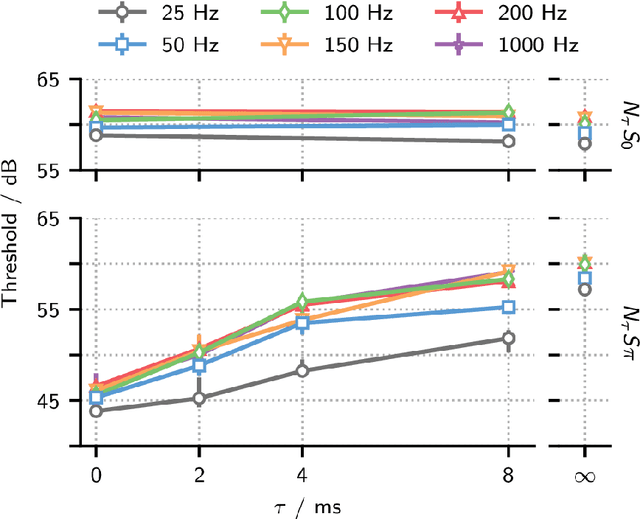

Abstract: Differences between the interaural phase of a noise and a target tone improve detection thresholds. The maximum masking release is obtained for detecting an antiphasic tone (S$\pi$) in diotic noise (N0). It has been shown in several studies that this benefit gradually declines as an interaural delay is applied to the N0S$\pi$ complex. This decline has been attributed to the reduced interaural coherence of the noise. Here, we report detection thresholds for a 500 Hz tone in masking noise with up to 8 ms interaural delay and bandwidths from 25 to 1000 Hz. When reducing the noise bandwidth from 100 to 50 and 25 Hz, the masking release at 8 ms delay increases, as expected for increasing temporal coherence with decreasing bandwidth. For bandwidths of 100 to 1000 Hz, no significant difference was observed and detection thresholds with these noises have a delay dependence that is fully described by the temporal coherence imposed by the typical monaurally determined auditory filter bandwidth. A minimalistic binaural model is suggested based on interaural phase difference fluctuations without the assumption of delay lines.
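The link between bandwidth and temporal coherence can be made concrete with a small sketch. It assumes noise with an ideal rectangular spectrum, for which the envelope of the normalized correlation at delay $\tau$ is $|\mathrm{sinc}(B\tau)|$; auditory filtering and the model proposed in the paper are not represented, and the function name is hypothetical.

```python
import math


def coherence_envelope(bandwidth_hz, delay_s):
    """Envelope of the normalized correlation of rectangular-band noise.

    Assumption: ideal flat spectrum of width bandwidth_hz, giving
    |sin(pi*B*tau) / (pi*B*tau)|.
    """
    x = math.pi * bandwidth_hz * delay_s
    return 1.0 if x == 0.0 else abs(math.sin(x) / x)


# Bandwidths and the 8 ms delay are taken from the abstract.
for bandwidth in (25, 50, 100):
    print(bandwidth, round(coherence_envelope(bandwidth, 0.008), 3))
```

Under this idealization the coherence at 8 ms rises as the bandwidth shrinks from 100 to 25 Hz, in line with the trend reported above. For external bandwidths wider than the auditory filter, the abstract states that the filter bandwidth, not the noise bandwidth, sets the coherence, which this sketch does not capture.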

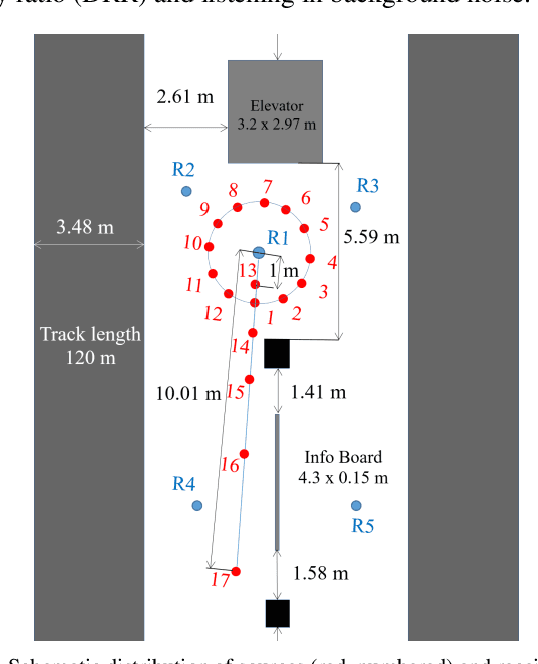

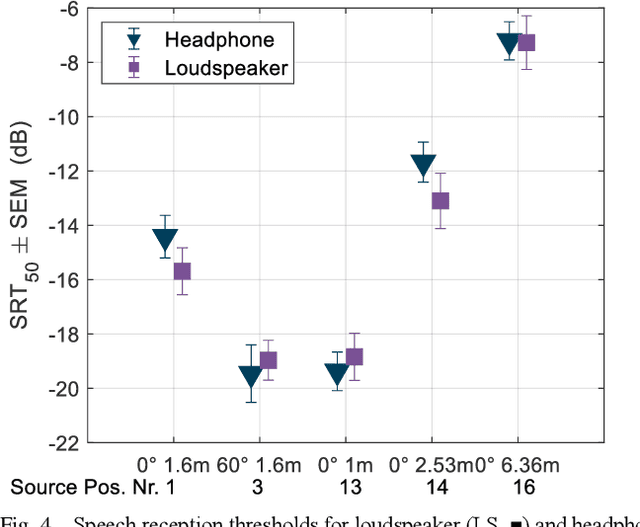

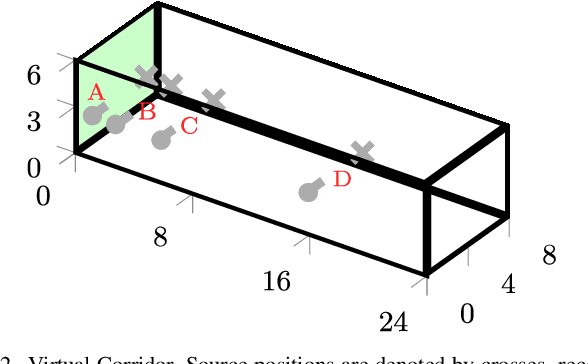

Communication conditions in virtual acoustic scenes in an underground station

Jun 30, 2021

Abstract: Underground stations are a common communication situation in towns: we talk with friends or colleagues, listen to announcements or shop for titbits while background noise and reverberation make communication challenging. Here, we perform an acoustical analysis of two communication scenes in an underground station in Munich and test speech intelligibility. The acoustical conditions were measured in the station and are compared to simulations in the real-time Simulated Open Field Environment (rtSOFE). We compare binaural room impulse responses measured with an artificial head in the station to modeled impulse responses for free-field auralization via 60 loudspeakers in the rtSOFE. We used the image source method to model early reflections and a set of multi-microphone recordings to model late reverberation. The first communication scene consists of 12 equidistant (1.6 m) horizontally spaced source positions around a listener, simulating different direction-dependent spatial unmasking conditions. The second scene mimics an approaching speaker across six radially spaced source positions (from 1 m to 10 m) with varying direct sound level and thus direct-to-reverberant energy. The acoustic parameters of the underground station show a moderate amount of reverberation (T30 in octave bands was between 2.3 s and 0.6 s and early-decay times between 1.46 s and 0.46 s). The binaural and energetic parameters of the auralization closely matched the measurement. Measured speech reception thresholds were within the error of the speech test, allowing us to conclude that the auralized simulation reproduces acoustic and perceptually relevant parameters for speech intelligibility with high accuracy.

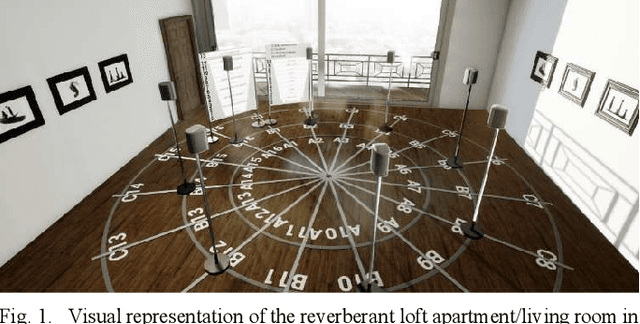

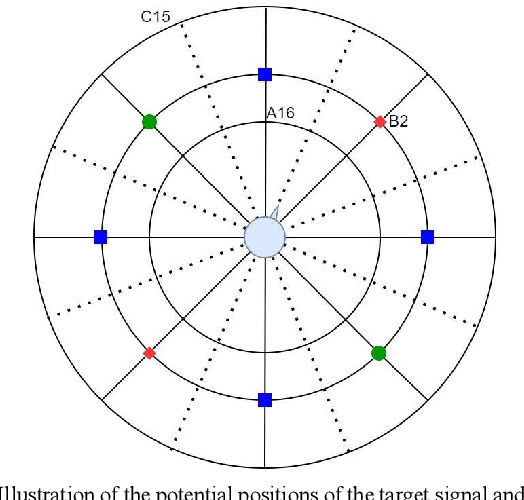

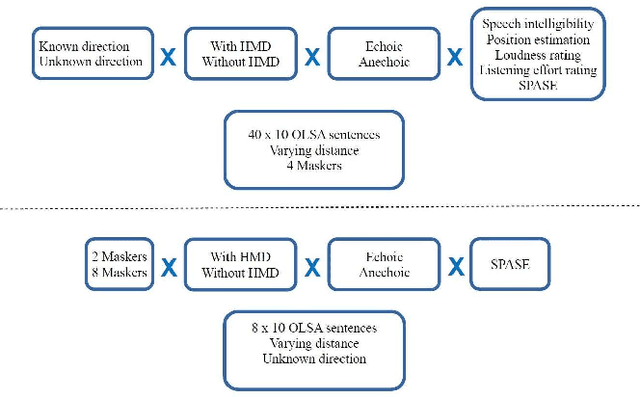

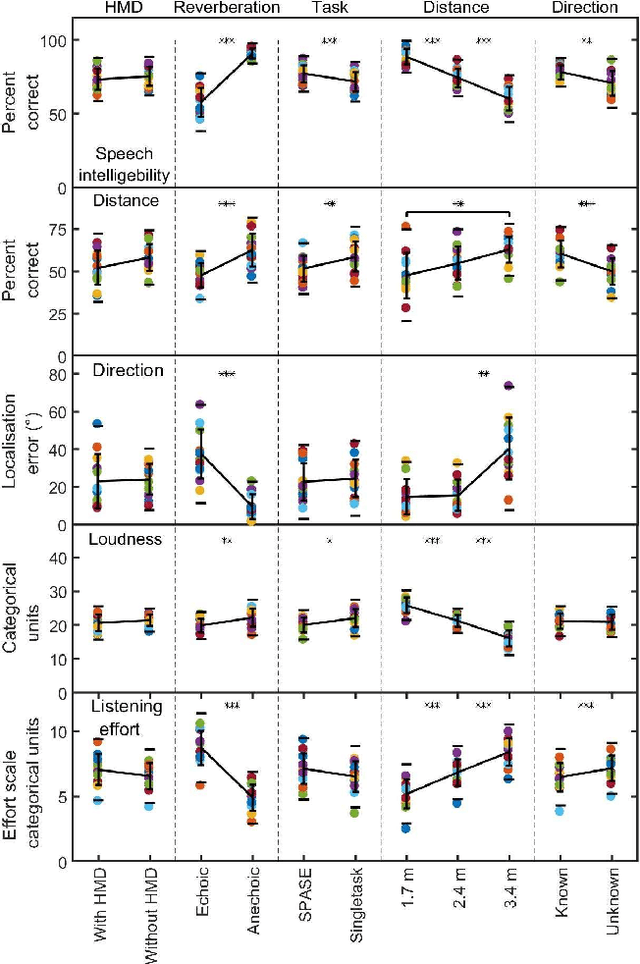

Effect of acoustic scene complexity and visual scene representation on auditory perception in virtual audio-visual environments

Jun 30, 2021

Abstract: In daily life, social interaction and acoustic communication often take place in complex acoustic environments (CAE) with a variety of interfering sounds and reverberation. For hearing research and evaluation of hearing systems, simulated CAEs using virtual reality techniques have gained interest in the context of ecological validity. In the current study, the effect of scene complexity and visual representation of the scene on psychoacoustic measures like sound source location, distance perception, loudness, speech intelligibility, and listening effort in a virtual audio-visual environment was investigated. A 3-dimensional, 86-channel loudspeaker array was used to render the sound field in combination with or without a head-mounted display (HMD) to create an immersive stereoscopic visual representation of the scene. The scene consisted of a ring of eight (virtual) loudspeakers which played a target speech stimulus and nonsense speech interferers in several spatial conditions. Either an anechoic (snowy outdoor scenery) or echoic environment (loft apartment) with a reverberation time (T60) of about 1.5 s was simulated. In addition to varying the number of interferers, scene complexity was varied by assessing the psychoacoustic measures in isolated consecutive measurements or simultaneously. Results showed no significant effect of wearing the HMD on the data. Loudness and distance perception showed significantly different results when they were measured simultaneously instead of consecutively in isolation. The advantage of the suggested setup is that it can be directly transferred to a corresponding real room, enabling a 1:1 comparison and verification of the perception experiments in the real and virtual environment.

Computationally efficient spatial rendering of late reverberation in virtual acoustic environments

Jun 30, 2021

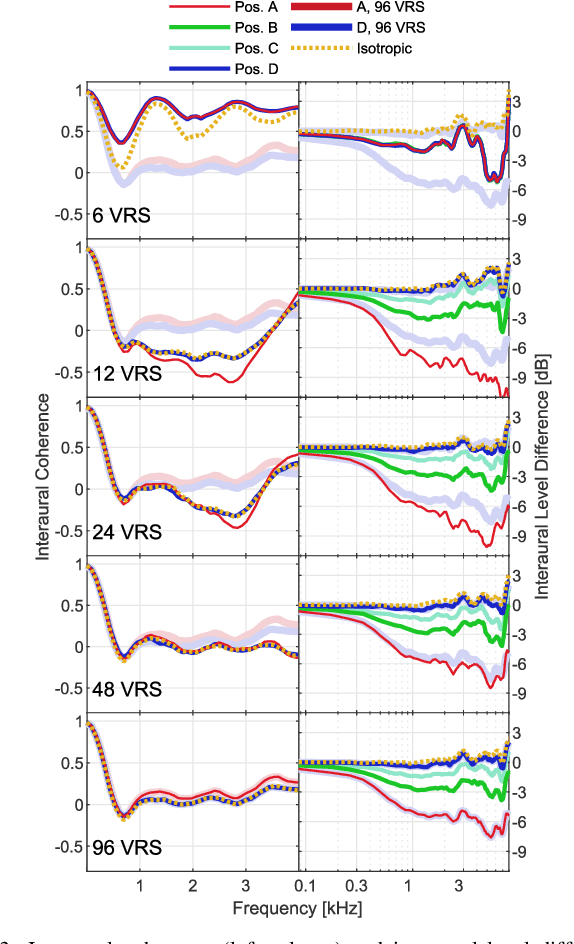

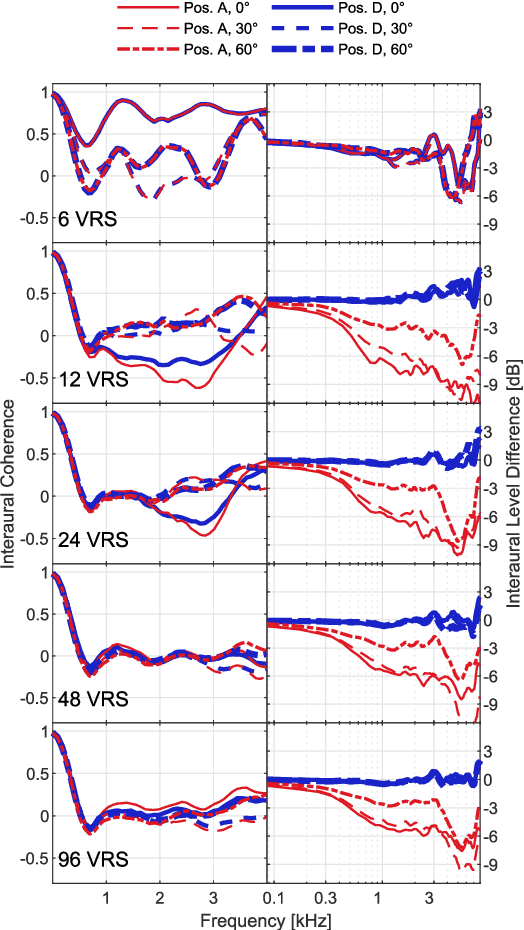

Abstract: For 6-DOF (degrees of freedom) interactive virtual acoustic environments (VAEs), the spatial rendering of diffuse late reverberation in addition to early (specular) reflections is important. In the interest of computational efficiency, the acoustic simulation of the late reverberation can be simplified by using a limited number of spatially distributed virtual reverb sources (VRS) each radiating incoherent signals. A sufficient number of VRS is needed to approximate spatially anisotropic late reverberation, e.g., in a room with inhomogeneous distribution of absorption at the boundaries. Here, a highly efficient and perceptually plausible method to generate and spatially render late reverberation is suggested, extending the room acoustics simulator RAZR [Wendt et al., J. Audio Eng. Soc., 62, 11 (2014)]. The room dimensions and frequency-dependent absorption coefficients at the wall boundaries are used to determine the parameters of a physically-based feedback delay network (FDN) to generate the incoherent VRS signals. The VRS are spatially distributed around the listener with weighting factors representing the spatially subsampled distribution of absorption coefficients on the wall boundaries. The minimum number of VRS required to be perceptually distinguishable from the maximum (reference) number of 96 VRS was assessed in a listening test conducted with a spherical loudspeaker array within an anechoic room. For the resulting low numbers of VRS suited for spatial rendering, optimal physically-based parameter choices for the FDN are discussed.
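As a rough illustration of how room dimensions and absorption coefficients can determine FDN parameters, the sketch below uses Sabine's formula for the reverberation time and the common rule that each delay line is attenuated so its loop decays by 60 dB after T60. This is a textbook-style approximation for a single frequency band, not the parameterization used in RAZR; the function names, room size, absorption values, and delay lengths are assumptions.

```python
def sabine_t60(dims_m, absorption):
    """Sabine reverberation time (s) of a shoebox room.

    dims_m: (lx, ly, lz) in metres.
    absorption: six coefficients ordered as the two x-walls, the two
    y-walls, then floor and ceiling (an assumed ordering).
    """
    lx, ly, lz = dims_m
    areas = [ly * lz, ly * lz, lx * lz, lx * lz, lx * ly, lx * ly]
    equivalent_area = sum(a * s for a, s in zip(absorption, areas))
    return 0.161 * lx * ly * lz / equivalent_area


def fdn_gains(delays_s, t60_s):
    """Gain per delay line so the recirculating signal drops 60 dB in t60_s."""
    return [10.0 ** (-3.0 * d / t60_s) for d in delays_s]


# Hypothetical room and delay lengths, single frequency band.
t60 = sabine_t60((5.0, 4.0, 3.0), [0.1] * 6)
gains = fdn_gains([0.011, 0.017, 0.023], t60)
```

Longer delay lines receive smaller gains, so all lines decay at the same rate in dB per second. A frequency-dependent version would replace each scalar gain with an absorption filter.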
